Deploy an enterprise RAG chatbot with Red Hat OpenShift AI

Deploy an enterprise-ready RAG chatbot using OpenShift AI. This quickstart automates provisioning of components like vector databases and ingestion pipelines.

Discover the self-service agent AI quickstart for automating IT processes on Red Hat OpenShift AI. Deploy, integrate with Slack and ServiceNow, and more.

Learn how to fine-tune a RAG model using Feast and Kubeflow Trainer. This guide covers preprocessing and scaling training on Red Hat OpenShift AI.

Learn how to implement retrieval-augmented generation (RAG) with Feast on Red Hat OpenShift AI to create highly efficient and intelligent retrieval systems.

Most log lines are noise. Learn how semantic anomaly detection filters out repetitive patterns—even repetitive errors—to surface the genuinely unusual events.

Optimize AI scheduling. Discover 3 workflows to automate RayCluster lifecycles using KubeRay and Kueue on Red Hat OpenShift AI 3.

Learn how to share an NVIDIA GPU with an OpenShift Local instance to run containerized workloads that require GPU acceleration without a dedicated server.

Learn how we built a simple, rules-based algorithm to detect oversaturation in LLM performance benchmarks, reducing costs by more than a factor of 2.

Enhance your Python AI applications with distributed tracing. Discover how to use Jaeger and OpenTelemetry for insights into Llama Stack interactions.

Learn how to implement Llama Stack's built-in guardrails with Python, helping to improve the safety and performance of your LLM applications.

Enterprise-grade artificial intelligence and machine learning (AI/ML) for

Tackle the AI/ML lifecycle with OpenShift AI. This guide helps you build adaptable, production-ready MLOps workflows, from data preparation to live inference.

Learn how to use the CodeFlare SDK to submit RayJobs to a remote Ray cluster in OpenShift AI.

Learn about the advantages of prompt chaining and the ReAct framework compared to simpler agent architectures for complex tasks.

Discover the comprehensive security and scalability measures for a Models-as-a-Service (MaaS) platform in an enterprise environment.

Learn how to overcome compatibility challenges when deploying OpenShift AI and OpenShift Service Mesh 3 on one cluster.

Harness Llama Stack with Python for LLM development. Explore tool calling, agents, and Model Context Protocol (MCP) for versatile integrations.

Learn how to build a Models-as-a-Service (MaaS) platform with this simple demo. (Part 3 of 4)

This article introduces Models-as-a-Service (MaaS) for enterprises, outlining the challenges, benefits, key technologies, and workflows. (Part 1 of 4)

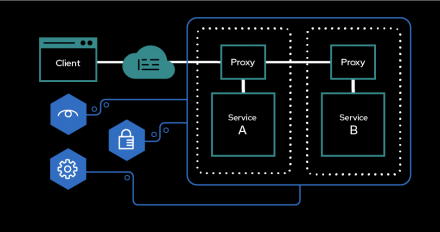

Learn how to use a service mesh to secure, observe, and control AI models at scale without code changes, simplifying zero-trust deployments.

Enhance your Node.js AI applications with distributed tracing. Discover how to use Jaeger and OpenTelemetry for insights into Llama Stack interactions.

Deploy AI at the edge with Red Hat OpenShift AI. Learn to set up OpenShift AI, configure storage, train models, and serve them with KServe's RawDeployment mode.

Members from the Red Hat Node.js team were recently at PowerUp 2025. It was held

Discover how IBM used OpenShift AI to maximize GPU efficiency on its internal AI supercomputer, using open source tools like Kueue for efficient AI workloads.