AI/ML and edge computing intersect in a wide range of industries. In retail, for example, customer behavior patterns can inform which promotions to run. Likewise, in a manufacturing facility, inference on collected data can help improve processes, for example by detecting machines that are failing or products that fall below quality standards. The basic premise is the same in each case: collect data, run inference on it, and make decisions based on the results.

In this article's retail use case, a superstore company has branches across the country. Each branch decides which products it sells and can customize an intelligent AI/ML application for its customers.

This article presents a video demonstration of an opinionated solution that automates a continuous cycle for releasing and deploying new AI/ML models.

About the solution pattern

The solution goes well beyond creating AI/ML models and running inference with them. It provides the supporting systems and data pipelines that work together to execute end-to-end workflows, ensuring that clients always receive the most up-to-date information.

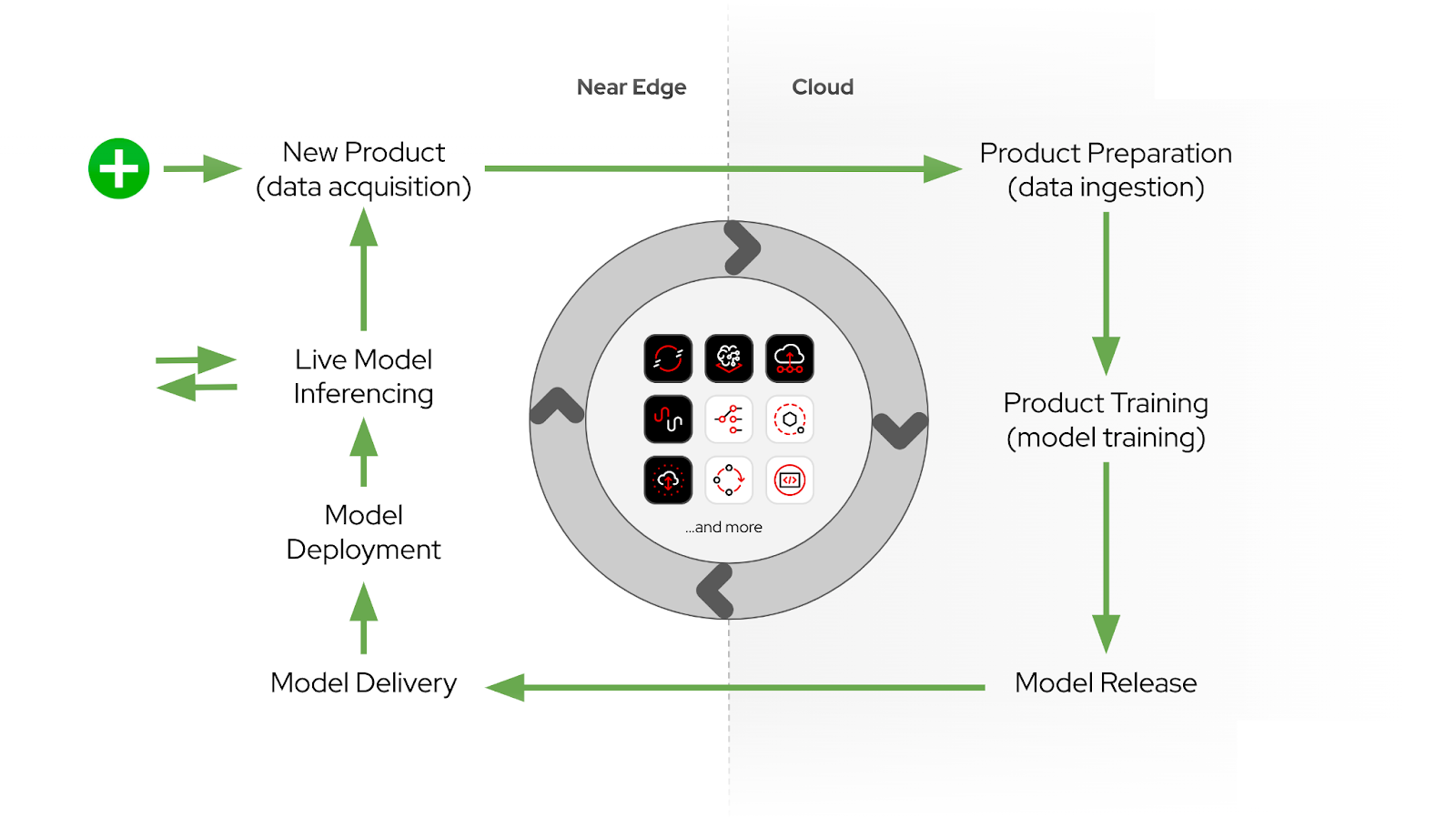

As the business grows, it offers new products to customers. Adding new products to the platform triggers an upgrade cycle: product information is acquired and moved over data bridges between the near-edge and core data centers. Figure 1 depicts this process.

The ecosystem combines several capabilities running on Red Hat OpenShift to form a closed loop that lets the platform evolve with agility: highly dynamic connectivity with Red Hat Service Interconnect, integration flows with the Red Hat build of Apache Camel, synchronized platform automation with Tekton and Red Hat OpenShift AI, and object storage with Red Hat OpenShift Data Foundation.
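To make the closed loop more concrete, here is a toy, in-memory Python sketch of a single upgrade cycle. It is not the solution pattern's code and uses no Red Hat product APIs: the classes `EdgeBranch` and `CoreDataCenter` and the function `upgrade_cycle` are hypothetical stand-ins for a near-edge branch, the data bridge plus object storage, and the training pipeline, respectively.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeBranch:
    """Hypothetical near-edge branch: its product catalog and the model version it serves."""
    name: str
    products: list[str] = field(default_factory=list)
    model_version: int = 0


@dataclass
class CoreDataCenter:
    """Hypothetical core site: stands in for object storage and the training pipeline."""
    bucket: dict[str, list[str]] = field(default_factory=dict)  # branch name -> product data
    latest_model: int = 0

    def ingest(self, branch: str, products: list[str]) -> None:
        # Stand-in for the data bridge (integration flow) copying product data into object storage.
        self.bucket[branch] = list(products)

    def retrain(self) -> int:
        # Stand-in for the automated pipeline that trains and publishes a new model version.
        self.latest_model += 1
        return self.latest_model


def upgrade_cycle(branch: EdgeBranch, core: CoreDataCenter, new_products: list[str]) -> int:
    """Run one pass of the hypothetical closed loop and return the new model version."""
    branch.products.extend(new_products)       # 1. the business adds products at the edge
    core.ingest(branch.name, branch.products)  # 2. product data moves from near-edge to core
    version = core.retrain()                   # 3. the core trains a new model
    branch.model_version = version             # 4. the new model is deployed back to the edge
    return version
```

Each call to `upgrade_cycle` runs the four stages in sequence and leaves the branch serving the newest model. In the solution pattern itself, these stages are carried out by separate components running across sites rather than by in-process function calls.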

Video demo

Watch the demonstration in the embedded video below:

Additional resources

The solution is well documented in the Solution Patterns portal, where you can read detailed information about the use case and its architecture, and find guided instructions for provisioning and running it yourself.

You can also explore other solution patterns that show how different Red Hat technologies can work together to solve business needs.

Keep learning by following the resources listed below:

- Find detailed information about this article’s demo in the Solution Patterns portal.

- Explore other solution patterns.

- Read the Apache Camel page on Red Hat Developer to learn more about the capabilities of Apache Camel.

- Use Service Interconnect to connect applications and microservices.

- Create and manage your AI/ML models using Red Hat OpenShift AI.