Organizations increasingly rely on interconnected applications and services to run their business, so the ability to connect software and data across different environments is becoming vital. Achieving seamless connectivity is no easy feat, however. Varying application architectures and a mix of on-premises and cloud infrastructure create disparities, and the resulting interconnections need careful management with respect to operation, risk, team skill sets, observability, and security.

This article discusses two Red Hat technologies, Red Hat OpenShift Service Mesh and Red Hat Service Interconnect, that offer different approaches to address these requirements and provides guidance to help you make informed decisions on which to use when.

What is OpenShift Service Mesh?

Red Hat OpenShift Service Mesh addresses a variety of needs in a microservice architecture by creating a centralized point of control for service interactions. It adds a transparent layer to existing distributed applications without requiring changes to the application code. OpenShift Service Mesh facilitates service interconnections by capturing or intercepting traffic between services and, where required by policy, modifying, redirecting, or creating new requests to other services.

Based on the open source Istio project, Red Hat OpenShift Service Mesh provides an easy way to create a network of deployed services with discovery, load balancing, service-to-service authentication, failure recovery, metrics, and monitoring. In late 2021, OpenShift Service Mesh 2.1 introduced the Service Mesh Federation to enable interconnections and management across different Red Hat OpenShift clusters, improving resilience and enabling disaster recovery for organizations adopting microservices architectures in distributed environments.

What is Service Interconnect?

Red Hat Service Interconnect was introduced in May 2023 and is based on skupper.io. Service Interconnect simplifies and protects the interconnection of applications and services across different environments and platforms, such as Kubernetes clusters, virtual machines, and bare-metal hosts, including platforms such as Red Hat OpenShift and Red Hat Enterprise Linux. Unlike traditional means of interconnecting across environments, such as VPNs and complex firewall rules, trusted interconnections can be created by anyone on the development team without requiring elevated administrative privileges while maintaining the organization's infrastructure and data compliance policies.

In the following sections, we will delve into the similarities and differences between OpenShift Service Mesh and Red Hat Service Interconnect, providing a comprehensive analysis of their features, functionalities, and benefits.

How are they similar?

Similarities between the two technologies include the following:

- Both technologies use mutual TLS to secure communication across different environments.

- Both technologies can be deployed as containers on top of Red Hat OpenShift so that pods in one cluster can be securely interconnected with pods in another cluster. In this scenario, pods can be interconnected securely between namespaces in each cluster, regardless of the underlying networking configuration and topology.
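To make the term concrete, the sketch below shows what "mutual" means in mutual TLS using only the Python standard library: the server side not only presents its own certificate but also refuses clients that do not present one. This is an illustration of the concept only. Neither product requires you to write such code, since the sidecar proxies or routers handle it for you, and the function name and file parameters are hypothetical.

```python
import ssl


def mutual_tls_server_context(ca_file=None, cert_file=None, key_file=None):
    """Build a server-side TLS context that also demands a client certificate.

    All three file paths are hypothetical placeholders for PEM files.
    """
    # Purpose.CLIENT_AUTH creates a context for the server side of a connection.
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    # The "mutual" part: reject any client that does not present a valid certificate.
    context.verify_mode = ssl.CERT_REQUIRED
    if ca_file:
        # CA bundle used to validate the certificates that clients present.
        context.load_verify_locations(cafile=ca_file)
    if cert_file:
        # The server's own identity, presented to connecting clients.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context


context = mutual_tls_server_context()
print("client certificate required:", context.verify_mode == ssl.CERT_REQUIRED)
```

With plain (one-way) TLS the server context would leave `verify_mode` at `ssl.CERT_NONE`, so only the client would verify its peer.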

How are they different?

Although there are some similarities between both technologies, there are numerous differences.

Red Hat OpenShift Service Mesh typically adopts a sidecar proxy model architecture. Each service instance is accompanied by a lightweight proxy container (known as a sidecar), which handles communication and provides essential functionalities, such as pod-to-pod security. Though typically service mesh is used for services within the same OpenShift cluster, the Federation feature enables cross-cluster connectivity. With the Federation feature, multiple service meshes across different Red Hat OpenShift clusters are connected through the ingress gateways of the respective service mesh. This article provides additional details about the technology architecture and operation of a service mesh.

Note: The Istio community is developing Istio ambient mesh, which is an architectural alternative that does not rely on sidecars for a service mesh. This will be available in a future release of Red Hat OpenShift Service Mesh.

Red Hat Service Interconnect establishes interconnections between applications and services across multiple environments and platforms, such as Kubernetes clusters, virtual machines, and bare-metal hosts, by creating and managing a virtual network overlay. Each environment running Service Interconnect has a site with virtual routers that communicate with each other, exchanging service information and establishing links across environments. This architecture simplifies connectivity by abstracting the underlying physical and logical networking complexities away from applications.

So, which product is more suitable for my needs?

The way each product establishes interconnections diverges considerably. Some factors in deciding which option is right for you include environment and service architecture, ownership of the interconnection, observability, and ideal use cases. This section details comparisons to help you decide which technology best suits your needs.

Environment and service architecture

- Red Hat OpenShift Service Mesh with Federation: Red Hat OpenShift (version 4.10 onwards)

- Red Hat Service Interconnect: Red Hat OpenShift, Red Hat Enterprise Linux, Kubernetes cluster, virtual machine, bare-metal host

If you are already using Red Hat OpenShift 4.10 or later, Red Hat OpenShift Service Mesh is already included and integrated, offering capabilities such as traffic management, workload and request-based authorization, built-in observability using Kiali (adding visualization between clusters), enhanced Operator integration, improved security and policy enforcement, and deep integration with the OpenShift ecosystem.

Additionally, Red Hat OpenShift Service Mesh allows each pod to have a secure connection (using mutual TLS), adopting a zero-trust security model and enhancing the security of the final architecture. Federation allows each control plane running in a cluster to be administered separately, making it possible to maintain different trust domains. By selecting each application or service using a combination of the export and import CRDs (ExportedServiceSet and ImportedServiceSet, respectively), administrators can fine-tune which services are visible to the other mesh.
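As a rough illustration of that export/import pairing, the following Python sketch builds the two manifests as dictionaries and prints them as JSON. The field layout (`federation.maistra.io/v1`, `exportRules`, `importRules`, `NameSelector`) reflects our understanding of the Service Mesh 2.x federation API and should be checked against the documentation for your version. The mesh, namespace, and service names are hypothetical.

```python
import json

API_VERSION = "federation.maistra.io/v1"  # assumed API group/version


def exported_service_set(peer_mesh, mesh_namespace, service_namespace, service_name):
    """Manifest (created in the exporting mesh) that shares one service with a peer."""
    return {
        "apiVersion": API_VERSION,
        "kind": "ExportedServiceSet",
        # The resource is named after the peer mesh it exports to.
        "metadata": {"name": peer_mesh, "namespace": mesh_namespace},
        "spec": {
            "exportRules": [
                {
                    "type": "NameSelector",
                    "nameSelector": {
                        "namespace": service_namespace,
                        "name": service_name,
                    },
                }
            ]
        },
    }


def imported_service_set(peer_mesh, mesh_namespace, service_namespace, service_name):
    """Manifest (created in the importing mesh) that accepts one service from a peer."""
    return {
        "apiVersion": API_VERSION,
        "kind": "ImportedServiceSet",
        "metadata": {"name": peer_mesh, "namespace": mesh_namespace},
        "spec": {
            "importRules": [
                {
                    "type": "NameSelector",
                    "nameSelector": {
                        "namespace": service_namespace,
                        "name": service_name,
                    },
                }
            ]
        },
    }


# Hypothetical example: the "red" mesh exports ratings, the "green" mesh imports it.
export = exported_service_set("green-mesh", "red-mesh-system", "bookinfo", "ratings")
imported = imported_service_set("red-mesh", "green-mesh-system", "bookinfo", "ratings")
print(json.dumps(export, indent=2))
print(json.dumps(imported, indent=2))
```

Only services matched by a rule on both sides become visible across the federation, which is how each administrator keeps control over what the other mesh can see.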

Red Hat Service Interconnect doesn't provide all of the traffic management and observability capabilities of OpenShift Service Mesh, but it does support interconnection of applications or services across heterogeneous environments, including Red Hat platforms and non-Red Hat Kubernetes clusters, virtual machines, and bare-metal hosts. If the environment includes different versions of OpenShift, especially versions older than 4.10, Service Interconnect enables application connectivity across those clusters and simplifies migrations.

Ownership of the interconnection

- Red Hat OpenShift Service Mesh with Federation: Cluster administrator

- Red Hat Service Interconnect: Development team (without elevated privileges)

Who typically owns the bridge between clusters, who decides which services to share, and who maintains that interconnection? If we're talking about Kubernetes environments, cluster ownership is a critical topic to consider. Ownership is usually handled by an administration team that needs to serve development teams that interconnect applications or services within or across clusters. Since policy is typically governed at the cluster level, advanced security privileges are often needed to make changes.

In the case of Red Hat OpenShift Service Mesh, a cluster administrator deploys a control plane, sets up the namespaces where the data plane will reside, configures the gateways for communication across clusters, and (if needed) determines which services will be exported or imported using the appropriate CRDs. Once this is established, either cluster administrators or development teams can use the Kiali dashboard to observe traffic and combine VirtualService and DestinationRule resources to fine-tune the traffic to each application or service. This allows them to employ change practices such as canary releases or A/B testing.
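To show how a VirtualService and a DestinationRule combine for a canary release, here is a minimal Python sketch that generates both Istio resources with a weighted traffic split. The host name, version labels, and subset names are hypothetical, and the `networking.istio.io/v1beta1` API version is an assumption that may differ in your release.

```python
import json


def canary_manifests(host, stable_version, canary_version, canary_percent):
    """Return (DestinationRule, VirtualService) dicts for a weighted canary split."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")

    # The DestinationRule names the two versions as subsets, selected by pod label.
    destination_rule = {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "DestinationRule",
        "metadata": {"name": host},
        "spec": {
            "host": host,
            "subsets": [
                {"name": "stable", "labels": {"version": stable_version}},
                {"name": "canary", "labels": {"version": canary_version}},
            ],
        },
    }

    # The VirtualService splits requests between the subsets by weight.
    virtual_service = {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": host},
        "spec": {
            "hosts": [host],
            "http": [
                {
                    "route": [
                        {
                            "destination": {"host": host, "subset": "stable"},
                            "weight": 100 - canary_percent,
                        },
                        {
                            "destination": {"host": host, "subset": "canary"},
                            "weight": canary_percent,
                        },
                    ]
                }
            ],
        },
    }
    return destination_rule, virtual_service


# Hypothetical example: send 10% of traffic for "reviews" to version v2.
rule, service = canary_manifests("reviews", "v1", "v2", 10)
print(json.dumps(rule, indent=2))
print(json.dumps(service, indent=2))
```

Raising `canary_percent` step by step and reapplying the VirtualService shifts traffic gradually, while the effect of each step can be observed in Kiali.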

Red Hat Service Interconnect doesn't need elevated privileges. The development team can create interconnections for each individual namespace from a simple command line, without extensive network planning. This allows the team to perform progressive migrations, or to interconnect to remote clusters and test in environments running Red Hat products, other products, or even older cluster versions such as Red Hat OpenShift 3.
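The command-line flow can be sketched as follows. This Python snippet only assembles and prints the commands for two sites and does not execute anything. The commands follow the Skupper 1.x CLI as we understand it (`init`, `token create`, `link create`, `expose`); newer releases may use different subcommands. The site labels, token file name, and workload are hypothetical.

```python
import shlex


def skupper_link_commands(token_file, workload, port):
    """Return the CLI steps for each site as argv lists; nothing is executed."""
    return {
        # Site A (for example, the namespace hosting a frontend) accepts the link.
        "site-a": [
            ["skupper", "init"],
            ["skupper", "token", "create", token_file],
        ],
        # Site B (for example, the namespace hosting a backend) links to site A
        # using the token file and then exposes its workload on the network.
        "site-b": [
            ["skupper", "init"],
            ["skupper", "link", "create", token_file],
            ["skupper", "expose", workload, "--port", str(port)],
        ],
    }


plan = skupper_link_commands("site-a.token", "deployment/backend", 8080)
for site, commands in plan.items():
    print(f"# run in the namespace for {site}")
    for argv in commands:
        print(shlex.join(argv))
```

Each command runs with the developer's existing access to a single namespace in each environment, and the token file is the only thing that has to be passed between the two sites.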

Observability

- Red Hat OpenShift Service Mesh with Federation: Uses sidecars to collect and control traffic, and visualizes it across clusters through Kiali.

- Red Hat Service Interconnect: Offers a very basic visualization of Routers, application components, application TCP connections, and application HTTP requests.

One of the core capabilities of a service mesh is to provide visibility into your services. OpenShift Service Mesh provides detailed metrics, logs, and traces of your service interactions. It has a thorough management console in Kiali, provides distributed tracing through Jaeger, and uses Prometheus and Grafana for metrics queries and analysis, dashboards for Istio data, and telemetry information.

Though not as exhaustive as OpenShift Service Mesh, Red Hat Service Interconnect also provides a console UI to visualize the connections, basic traffic logs, and metrics between routers and application components across namespaces. It provides good visibility "per link" and helps the user understand whether the routers are working properly across environments.

Ideal use cases

Red Hat OpenShift Service Mesh with Federation:

- Observability such as metrics, logging, and tracing

- A/B testing

- Canary rollouts

- Traffic management

- Authorization

- Automated and enforced pod-to-pod mTLS encryption

- Automated identity and certificate management

Red Hat Service Interconnect:

- Progressive migration from OpenShift 3 to 4

- Progressive migration from Kubernetes to OpenShift

- Interconnecting to never-migrate-to-cloud workloads (e.g., legacy databases)

- Shift-left networking where developers control application interconnectivity

When should you use one over the other? Although both technologies allow you to design architectures that interconnect applications or services across clusters, it's important to pay attention to the use cases each was designed for in the first place.

OpenShift Service Mesh was initially conceived to ease the management of microservices within an OpenShift cluster. It lets cluster administrators make changes and fine-tune traffic management for deployment purposes in a way that is transparent to developers. With the Federation feature, it's now possible to apply all of that across different Red Hat OpenShift clusters and make changes with no downtime.

Red Hat Service Interconnect is designed to interconnect applications or services across different environments without requiring changes to the underlying networking infrastructure or configuration. It prioritizes developer self-service over granular central management capabilities at multiple levels of the network stack.

Key considerations

There are more differences than similarities between the two. Ultimately, deciding which technology is more suitable for your needs might come down to the following questions.

Platform

- Does my organization already have a Red Hat OpenShift subscription?

- Do I need a heterogeneous Kubernetes ecosystem (OpenShift, EKS, GKE, vanilla Kubernetes) in the multicluster platform?

- Are all Kubernetes versions lifecycled concurrently?

Workload

- Do I need traffic management, security, and network policy management across all my clusters?

- Do I need traceability and performance monitoring (APM) across the ecosystem?

- Is my organization refactoring applications on the journey to the cloud?

- Are application workloads sensitive to where they are deployed?

- Do I need to shield the application or services from the multicluster details?

- Do I need to distribute my application or services across a mix of traditional infrastructure and Kubernetes?

Workforce

- Do I have the right skills in my team to manage the interconnections?

- Can we cope with the operational overhead?

Network and storage

- Do I need to interconnect multiple meshes?

- Do my workloads need to access the same data across multiple clusters or on traditional infrastructure?

In answering these questions, you might lean toward one over the other (or maybe toward both) depending on what type of project your team is working on and your business and technical requirements.

Conclusion

Red Hat Service Interconnect is sold separately, whereas Red Hat OpenShift Service Mesh is included with the Red Hat OpenShift entitlement. The skill set of your team and who has the right to set up the interconnections across clusters will also play a major role in your decision.

At the end of the day, Red Hat is committed to offering options for interconnectivity and ensuring the success of your business, your team, and, obviously, your customers.

To learn more, visit the Red Hat OpenShift Service Mesh and Red Hat Service Interconnect pages.

Appendix

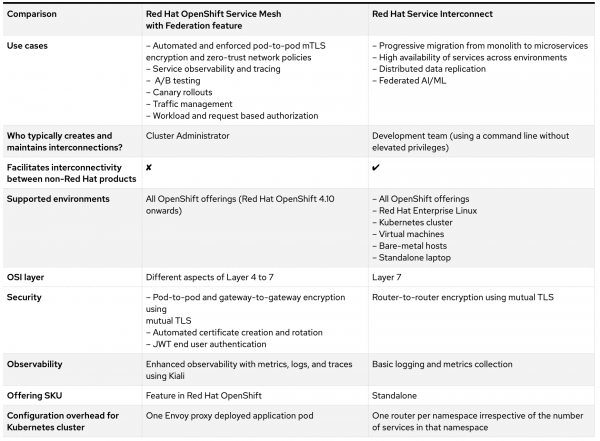

Read on for a comparison of Red Hat Service Interconnect and Red Hat OpenShift Service Mesh with the Federation feature, as shown in Figure 1.

Use cases

- Red Hat OpenShift Service Mesh with Federation: Automated and enforced pod-to-pod mTLS encryption and zero-trust network policies, service observability and tracing, A/B testing, canary rollouts, traffic management, and workload and request-based authorization

- Red Hat Service Interconnect: Progressive migration from monolith to microservices, high availability of services across environments, distributed data replication, federated AI/ML

Interconnection is typically created and maintained by:

- Red Hat OpenShift Service Mesh with Federation: Cluster administrator

- Red Hat Service Interconnect: Development team (using a command line without elevated privileges)

Facilitates interconnectivity between non-Red Hat products

- Red Hat OpenShift Service Mesh with Federation: No

- Red Hat Service Interconnect: Yes

Supported environments

- Red Hat OpenShift Service Mesh with Federation: All OpenShift offerings (Red Hat OpenShift 4.10 onwards)

- Red Hat Service Interconnect: All OpenShift offerings, Red Hat Enterprise Linux, Kubernetes cluster, virtual machines, bare-metal hosts, standalone laptop

OSI layer

- Red Hat OpenShift Service Mesh with Federation: Different aspects of Layer 4 to 7

- Red Hat Service Interconnect: Layer 7

Security

- Red Hat OpenShift Service Mesh with Federation:

  - Pod-to-pod and gateway-to-gateway encryption using mutual TLS

  - Automated certificate creation and rotation

  - JWT end-user authentication

- Red Hat Service Interconnect: Router-to-router encryption using mutual TLS

Observability

- Red Hat OpenShift Service Mesh with Federation: Enhanced observability with metrics, logs, and traces using Kiali

- Red Hat Service Interconnect: Basic logging and metrics collection

Offering SKU

- Red Hat OpenShift Service Mesh with Federation: Feature in Red Hat OpenShift

- Red Hat Service Interconnect: Standalone product

Configuration overhead for Kubernetes cluster

- Red Hat OpenShift Service Mesh with Federation: One Envoy proxy deployed per application pod

- Red Hat Service Interconnect: One router per namespace irrespective of the number of services in that namespace
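The difference in overhead is easy to quantify with a little arithmetic. The sketch below counts the extra data-plane components under the two models, assuming every application pod is part of the mesh with a sidecar injected and ignoring control plane components and gateways. The namespace names and pod counts are made up for illustration.

```python
def proxy_counts(pods_per_namespace):
    """Return (sidecar_proxies, routers) for a mapping of namespace -> pod count."""
    # Sidecar model: one Envoy proxy accompanies every application pod.
    sidecar_proxies = sum(pods_per_namespace.values())
    # Router model: one router per namespace, however many services it holds.
    routers = len(pods_per_namespace)
    return sidecar_proxies, routers


# Hypothetical cluster with three namespaces.
cluster = {"frontend": 4, "backend": 10, "payments": 6}
sidecars, routers = proxy_counts(cluster)
print(f"sidecar proxies: {sidecars}, routers: {routers}")
# prints "sidecar proxies: 20, routers: 3"
```

The sidecar count grows with every scaled-out pod, whereas the router count changes only when a namespace is added to or removed from the network.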