This article covers the message migration feature of the AMQ Broker Operator. Enterprise messaging is vital for mission-critical businesses such as financial and telecom firms. Important transaction information often relies on a reliable message broker, such as Red Hat's AMQ Broker, as the backbone for message processing. With the help of the AMQ Broker Operator, users can easily deploy their AMQ Broker-based applications to a cloud environment such as Red Hat OpenShift.

The AMQ Broker Operator makes it easy to scale your broker deployment up and down according to your business requirements. When you scale up, more broker pods are available to handle messages. When you scale down, you get less broker processing power, but you free up cloud resources that you do not need.

The message migration solution

However, one concern needs to be addressed: what happens to the important messages in the brokers that are being scaled down? If you simply shut down a broker pod, its messages are taken offline. They stay in the persistent store of the scaled-down broker and are never processed, a situation that is unacceptable for any serious business. This is where message migration comes to the rescue.

For each broker deployment it manages, the AMQ Broker Operator watches all the pods it deploys. When a user scales down a broker deployment and some of the pods go down, the operator detects the event and immediately performs message migration.

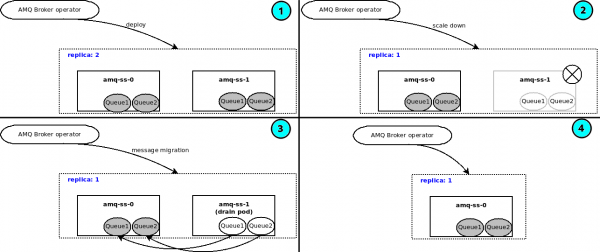

When a broker pod is scaled down, the operator starts a new broker pod in its place and mounts the same Persistent Volume Claim (PVC) as its persistent store. When this new pod (called the drainer pod) starts, it launches a special process that finds a live broker pod and then sends a scaleDown command, through the Jolokia endpoint, to the broker running in the drainer pod itself. The drainer broker then sends all of its persistent messages to the live broker.

If all messages are migrated without error, the drainer process exits with code 0 and the drainer pod terminates. If the migration fails, the process exits with a non-zero code. The operator sees the non-zero code, launches a new drainer pod, and repeats the migration process until all the messages are migrated. The operator deletes the PVC only if the message migration succeeds.
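To make the retry rule concrete, here is a toy shell model of it. It is not the operator's code (the operator is a Kubernetes controller, not a shell loop): `run_drainer_until_success` is a hypothetical helper that reruns a command standing in for one drainer pod until it exits with code 0, treating every non-zero exit as a failed migration that calls for a fresh drainer. The attempt cap is an addition for this sketch; the operator as described keeps retrying.

```shell
# Toy model of the drainer retry rule; this is NOT the operator's code.
# Usage: run_drainer_until_success MAX_ATTEMPTS COMMAND [ARGS...]
# COMMAND stands in for one drainer pod: exit code 0 means all messages were
# migrated, any other exit code means the migration failed.
run_drainer_until_success() {
  local max_attempts="$1" attempt=1
  shift
  until "$@"; do
    echo "attempt ${attempt}: drainer exited non-zero" >&2
    # The cap exists only so this sketch terminates; the operator as
    # described keeps launching new drainer pods until one succeeds.
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "giving up; PVC is kept" >&2
      return 1
    fi
    attempt=$((attempt + 1))
  done
  # Only after a successful run would the operator delete the PVC.
  echo "migration succeeded after ${attempt} attempt(s); PVC can be deleted"
}
```

For example, with a stand-in command that fails twice and then succeeds, the function reports success on the third attempt; with `false` as the command it gives up once the cap is reached.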

The diagram in Figure 1 illustrates how the message migration works.

Message migration is enabled in the ActiveMQArtemis custom resource (CR). It also requires persistence to be enabled, because only persistent messages are migrated during a scale-down.

Note that the operator only performs message migration on a normal scale down. It does not handle pod crashes.

Let’s walk through an example that sets up a broker deployment and performs message migration.

Prerequisites

- A Kubernetes cluster
- Admin access to the cluster

Step 1: Create a new namespace

Create a new namespace called message-migration-example:

```
$ kubectl create namespace message-migration-example
namespace/message-migration-example created
```

Change the context to use it as the current namespace:

```
$ kubectl config set-context --current --namespace=message-migration-example
Context "minikube" modified.
```

We will deploy all the resources to this namespace.

Step 2: Install the AMQ Broker Operator

We will use the upstream ArtemisCloud operator. With message-migration-example as the current namespace, run the following commands:

```
$ kubectl create -f https://raw.githubusercontent.com/artemiscloud/activemq-artemis-operator/v1.0.15/deploy/crds/broker_activemqartemis_crd.yaml
$ kubectl create -f https://raw.githubusercontent.com/artemiscloud/activemq-artemis-operator/v1.0.15/deploy/crds/broker_activemqartemissecurity_crd.yaml
$ kubectl create -f https://raw.githubusercontent.com/artemiscloud/activemq-artemis-operator/v1.0.15/deploy/crds/broker_activemqartemisaddress_crd.yaml
$ kubectl create -f https://raw.githubusercontent.com/artemiscloud/activemq-artemis-operator/v1.0.15/deploy/crds/broker_activemqartemisscaledown_crd.yaml
$ kubectl create -f https://raw.githubusercontent.com/artemiscloud/activemq-artemis-operator/v1.0.15/deploy/service_account.yaml
$ kubectl create -f https://raw.githubusercontent.com/artemiscloud/activemq-artemis-operator/v1.0.15/deploy/role.yaml
$ kubectl create -f https://raw.githubusercontent.com/artemiscloud/activemq-artemis-operator/v1.0.15/deploy/role_binding.yaml
$ kubectl create -f https://raw.githubusercontent.com/artemiscloud/activemq-artemis-operator/v1.0.15/deploy/election_role.yaml
$ kubectl create -f https://raw.githubusercontent.com/artemiscloud/activemq-artemis-operator/v1.0.15/deploy/election_role_binding.yaml
$ kubectl create -f https://raw.githubusercontent.com/artemiscloud/activemq-artemis-operator/v1.0.15/deploy/operator.yaml
```
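The ten commands above differ only in the manifest path, so they can also be driven from a list. The following is a minimal sketch, assuming the same v1.0.15 URLs; `OPERATOR_VERSION`, `BASE_URL`, `MANIFESTS` and `install_operator` are names introduced here for illustration, not part of the operator project.

```shell
# Sketch only: installs the same ten manifests as the commands above, in the
# same order (CRDs first, then RBAC resources, then the operator Deployment).
# OPERATOR_VERSION, BASE_URL, MANIFESTS and install_operator are hypothetical
# names introduced for this example.
OPERATOR_VERSION=v1.0.15
BASE_URL="https://raw.githubusercontent.com/artemiscloud/activemq-artemis-operator/${OPERATOR_VERSION}/deploy"
MANIFESTS="
crds/broker_activemqartemis_crd.yaml
crds/broker_activemqartemissecurity_crd.yaml
crds/broker_activemqartemisaddress_crd.yaml
crds/broker_activemqartemisscaledown_crd.yaml
service_account.yaml
role.yaml
role_binding.yaml
election_role.yaml
election_role_binding.yaml
operator.yaml
"

install_operator() {
  local manifest
  # $MANIFESTS is left unquoted on purpose so it splits into one path per line.
  for manifest in $MANIFESTS; do
    # Stop at the first failure so later manifests are not applied
    # on top of a half-installed operator.
    kubectl create -f "${BASE_URL}/${manifest}" || return 1
  done
}
```

Run `install_operator` with message-migration-example as the current namespace. Keeping the version in one variable makes it harder to mix manifests from different releases by accident.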

Check that the operator pod is running:

```
$ kubectl get pod
NAME                                                   READY   STATUS    RESTARTS   AGE
activemq-artemis-controller-manager-7465974d85-z94dr   1/1     Running   0          30m
```
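Instead of re-running `kubectl get pod` until the operator shows as Running, a script can block on the Deployment's Available condition with `kubectl wait`. This is a sketch: `wait_for_operator` is a hypothetical helper, and the Deployment name `activemq-artemis-controller-manager` is inferred from the pod name above (pod names have the form `<deployment>-<replicaset hash>-<suffix>`).

```shell
# Sketch: block until the operator Deployment reports the Available condition.
# Assumption: the Deployment is named activemq-artemis-controller-manager,
# inferred from the pod name shown above.
wait_for_operator() {
  # Optional first argument: a timeout such as 60s or 5m (default 120s).
  kubectl wait --for=condition=Available \
    deployment/activemq-artemis-controller-manager \
    --timeout="${1:-120s}"
}
```

`kubectl wait` exits non-zero if the condition is not met within the timeout, which makes it easy to stop a setup script early.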

Step 3: Create a broker deployment

With the operator up and running, we can deploy two broker pods using the following CR:

broker.yaml:

```yaml
apiVersion: broker.amq.io/v1beta1
kind: ActiveMQArtemis
metadata:
  name: amq
spec:
  deploymentPlan:
    size: 2
    persistenceEnabled: true
    messageMigration: true
```

```
$ kubectl create -f broker.yaml
activemqartemis.broker.amq.io/amq created
```

Note that by default, if spec.deploymentPlan.persistenceEnabled is set to true, spec.deploymentPlan.messageMigration is also set to true.

After the CR is deployed, the operator creates a StatefulSet (amq-ss) with 2 replicas, and each broker gets a PVC mounted for storing persistent messages. You will see 2 broker pods in the message-migration-example namespace, along with the operator pod:

```
$ kubectl get pod
NAME                                                   READY   STATUS    RESTARTS   AGE
activemq-artemis-controller-manager-7465974d85-z94dr   1/1     Running   0          76m
amq-ss-0                                               1/1     Running   0          14m
amq-ss-1                                               1/1     Running   0          4m31s
```

Step 4: Send messages to pod 1

When a StatefulSet scales down, the pods with the largest ordinal numbers are always the ones deleted. In this example there are 2 pods, amq-ss-0 and amq-ss-1. If we scale down from 2 pods to 1, pod amq-ss-1 is deleted.
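The ordinal rule can be written down as a small function, which helps when predicting which brokers (and therefore which PVCs) a larger scale-down will touch. This is an illustrative sketch; `pods_to_remove` is a hypothetical helper that only prints names and does not talk to the cluster.

```shell
# Sketch: print the StatefulSet pods removed by a scale-down from OLD to NEW
# replicas. StatefulSet pods are named <statefulset>-<ordinal>, and the pods
# with ordinals NEW..OLD-1 are removed, listed here highest ordinal first
# (the order used under the default OrderedReady pod management policy).
# Usage: pods_to_remove STATEFULSET OLD NEW
pods_to_remove() {
  local statefulset="$1" old="$2" new="$3" i
  i=$((old - 1))
  while [ "$i" -ge "$new" ]; do
    echo "${statefulset}-${i}"
    i=$((i - 1))
  done
}
```

For this example, `pods_to_remove amq-ss 2 1` prints `amq-ss-1`, the pod we are about to send messages to.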

We will send a few messages to broker pod amq-ss-1 in order to see scale down in action.

```
$ kubectl exec amq-ss-1 -- amq-broker/bin/artemis producer --url tcp://amq-ss-1:61616 --message-count 10 --destination test
Defaulted container "amq-container" out of: amq-container, amq-container-init (init)
Connection brokerURL = tcp://amq-ss-1:61616
Producer ActiveMQQueue[test], thread=0 Started to calculate elapsed time ...
Producer ActiveMQQueue[test], thread=0 Produced: 10 messages
Producer ActiveMQQueue[test], thread=0 Elapsed time in second : 0 s
Producer ActiveMQQueue[test], thread=0 Elapsed time in milli second : 74 milli seconds
```

The above command uses the AMQ broker’s built-in command-line tool, artemis, to send 10 messages to the queue test on broker amq-ss-1. We can use the same tool to check the messages on that broker:

```
$ kubectl exec amq-ss-1 -- amq-broker/bin/artemis queue stat --url tcp://amq-ss-1:61616
Defaulted container "amq-container" out of: amq-container, amq-container-init (init)
Connection brokerURL = tcp://amq-ss-1:61616
|NAME |ADDRESS |CONSUMER_COUNT|MESSAGE_COUNT|MESSAGES_ADDED|DELIVERING_COUNT|MESSAGES_ACKED|SCHEDULED_COUNT|ROUTING_TYPE|
|DLQ |DLQ |0 |0 |0 |0 |0 |0 |ANYCAST |
|ExpiryQueue |ExpiryQueue |0 |0 |0 |0 |0 |0 |ANYCAST |
|activemq.management.67...|activemq.management.67...|1 |0 |0 |0 |0 |0 |MULTICAST |
|test |test |0 |10 |10 |0 |0 |0 |ANYCAST |
```

Step 5: Scale down the broker pods

Edit the custom resource in broker.yaml to change spec.deploymentPlan.size to 1:

```yaml
apiVersion: broker.amq.io/v1beta1
kind: ActiveMQArtemis
metadata:
  name: amq
spec:
  deploymentPlan:
    size: 1
    persistenceEnabled: true
    messageMigration: true
```

Apply the change:

```
$ kubectl apply -f broker.yaml
activemqartemis.broker.amq.io/amq configured
```
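If you would rather not edit the file, the same size change can be made with a JSON merge patch on the CR. A minimal sketch, assuming the CR name `amq` and the `spec.deploymentPlan.size` field used above; `scale_broker` is a hypothetical helper name.

```shell
# Sketch: set spec.deploymentPlan.size with a JSON merge patch instead of
# editing broker.yaml. scale_broker is a hypothetical helper name.
# Usage: scale_broker CR_NAME SIZE
scale_broker() {
  local cr="$1" size="$2"
  kubectl patch activemqartemis "$cr" --type=merge \
    -p "{\"spec\":{\"deploymentPlan\":{\"size\":${size}}}}"
}
```

`scale_broker amq 1` changes the CR the same way as the edit above. If you use it, keep broker.yaml in sync; otherwise a later `kubectl apply -f broker.yaml` sets the size back to the value in the file.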

When the change is applied, broker pod amq-ss-1 is terminated and a new drainer pod is created to perform message migration. When the message migration is completed, the drainer pod is terminated.
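To wait for this in a script rather than checking by hand, you can poll until only the expected number of broker pods is left. This is a sketch under a stated assumption: it counts pods whose names start with the StatefulSet name (`amq-ss-`), and it assumes the drainer pod's name starts with that prefix too. If it does not, the function can return before the drainer has finished. `wait_for_pod_count` is a hypothetical helper.

```shell
# Sketch: poll until exactly EXPECTED pods whose names start with PREFIX-
# remain, e.g. until the scaled-down broker and its drainer pod are gone.
# Assumption: the drainer pod's name also starts with the StatefulSet name.
# Usage: wait_for_pod_count PREFIX EXPECTED [TIMEOUT_SECONDS] [INTERVAL_SECONDS]
wait_for_pod_count() {
  local prefix="$1" expected="$2" timeout="${3:-300}" interval="${4:-5}"
  local waited=0 count
  while :; do
    # `kubectl get pod -o name` prints one "pod/<name>" line per pod;
    # `|| true` keeps a zero count from being treated as an error.
    count=$(kubectl get pod -o name | grep -c "^pod/${prefix}-" || true)
    if [ "$count" -eq "$expected" ]; then
      echo "${count} pod(s) named ${prefix}-* remain"
      return 0
    fi
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out with ${count} pod(s) named ${prefix}-*" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
}
```

For this example you would run `wait_for_pod_count amq-ss 1` after applying the size change. For an interactive view, `kubectl get pod -w` streams pod status changes as the drainer pod comes and goes.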

Now the scale down is done and there is only one living broker pod:

```
$ kubectl get pod
NAME                                                   READY   STATUS    RESTARTS   AGE
activemq-artemis-controller-manager-7465974d85-z94dr   1/1     Running   0          4h50m
amq-ss-0                                               1/1     Running   0          3h47m
```

If you check the messages again, you will see that they have been migrated to the remaining pod:

```
$ kubectl exec amq-ss-0 -- amq-broker/bin/artemis queue stat --url tcp://amq-ss-0:61616
Defaulted container "amq-container" out of: amq-container, amq-container-init (init)
Connection brokerURL = tcp://amq-ss-0:61616
|NAME |ADDRESS |CONSUMER_COUNT|MESSAGE_COUNT|MESSAGES_ADDED|DELIVERING_COUNT|MESSAGES_ACKED|SCHEDULED_COUNT|ROUTING_TYPE|
|DLQ |DLQ |0 |0 |0 |0 |0 |0 |ANYCAST |
|ExpiryQueue |ExpiryQueue |0 |0 |0 |0 |0 |0 |ANYCAST |
|activemq.management.66...|activemq.management.66...|1 |0 |0 |0 |0 |0 |MULTICAST |
|test |test |0 |10 |10 |0 |0 |0 |ANYCAST |
```
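To check the result in a script rather than by eye, you can pull the MESSAGE_COUNT value for one queue out of the `artemis queue stat` table. A minimal sketch, assuming the pipe-delimited layout shown above, where MESSAGE_COUNT is the fourth column (field 5 when splitting on `|`, because the leading `|` produces an empty first field); `queue_message_count` is a hypothetical helper.

```shell
# Sketch: read MESSAGE_COUNT for one queue from `artemis queue stat` output on
# stdin. Assumes the pipe-delimited table shown above: splitting a row on '|'
# gives an empty field 1, NAME in field 2 and MESSAGE_COUNT in field 5.
# Prints nothing if the queue is not in the table.
# Usage: ... | queue_message_count QUEUE_NAME
queue_message_count() {
  awk -F'|' -v q="$1" '
    # Trim the padding around the queue name before comparing it.
    { name = $2; gsub(/^ +| +$/, "", name) }
    name == q { count = $5; gsub(/ /, "", count); print count; exit }
  '
}
```

For example, piping the output of the `queue stat` command above on amq-ss-0 into `queue_message_count test` should print `10` for this walkthrough. The "Defaulted container" notice is written by kubectl to stderr, so it does not reach the pipe.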

Troubleshooting

If the message migration fails, the drainer pod (the pod created to do message migration) does not exit immediately. Instead, it sleeps for 30 seconds, during which you can read the pod’s log to find details of the message migration for debugging.

You can also check the operator's log to find more details about the message migration.
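Because the drainer pod only lingers for 30 seconds after a failure, it helps to have the log commands ready. A sketch, assuming you pass in the drainer pod's name (take it from `kubectl get pod` during that window) and that the operator Deployment is named `activemq-artemis-controller-manager`, as its pod name suggests; `collect_migration_logs` is a hypothetical helper.

```shell
# Sketch: print the drainer pod's log followed by the operator's log.
# The drainer pod name must be supplied by the caller; the operator
# Deployment name is inferred from the operator pod name shown earlier.
# Usage: collect_migration_logs DRAINER_POD_NAME
collect_migration_logs() {
  local drainer_pod="$1"
  echo "=== drainer pod ${drainer_pod} ==="
  kubectl logs "$drainer_pod"
  echo "=== operator ==="
  # `kubectl logs deployment/<name>` reads the log of one of the Deployment's pods.
  kubectl logs deployment/activemq-artemis-controller-manager
}
```

Redirecting the output to a file, for example `collect_migration_logs <drainer-pod-name> > migration.log`, keeps the drainer log after the pod is gone.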

If message migration fails, the persistent volume of the scaled-down pod is not deleted, so you can still recover the messages from it manually.
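To confirm that the claim is still there before attempting a manual recovery, list the PVCs in the namespace. This sketch uses kubectl's `custom-columns` output to show each claim's phase and bound volume; `list_pvcs` is a hypothetical helper, and since the operator's PVC naming scheme is not covered here, it lists every claim rather than filtering by name.

```shell
# Sketch: list the PVCs in the current namespace with their phase and bound
# volume, to confirm that the scaled-down broker's claim is still present
# after a failed migration. list_pvcs is a hypothetical helper name.
list_pvcs() {
  kubectl get pvc \
    -o custom-columns=NAME:.metadata.name,STATUS:.status.phase,VOLUME:.spec.volumeName
}
```

After a successful scale-down from 2 pods to 1, you would expect only the claim used by amq-ss-0 to remain; an extra claim suggests that a migration did not complete.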