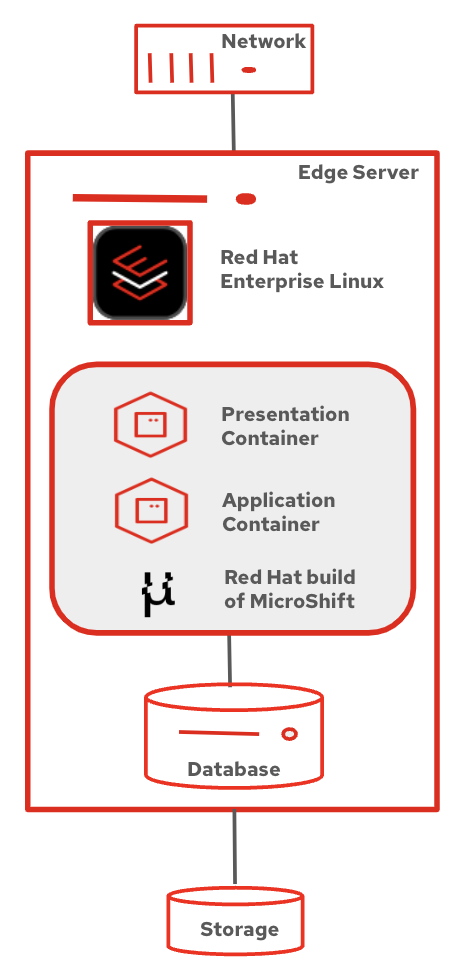

The Red Hat build of MicroShift and a database are installed on Red Hat Enterprise Linux (RHEL) on an edge server. The presentation and application layers are deployed as containers within the Red Hat build of MicroShift, while the database runs directly on the base operating system. Figure 1 depicts this architecture.

Technical approach

- Install and configure the Red Hat build of MicroShift: Install and set up the Red Hat build of MicroShift on the RHEL system running on the edge server.

- Install and configure the database: Install and configure a database, such as MySQL. Any database certified for RHEL can be used.

- Create database, import data, and grant access: Create a database, import necessary data, and provide appropriate access privileges to the application user.

- Deploy presentation and application containers: Create and deploy containers for the presentation and application layers within MicroShift.

Benefits

The benefits of this approach include flexibility, isolation, and efficiency.

Flexibility:

Independent updates: The application and presentation layers can be updated and modified independently of the database layer, which simplifies development and maintenance.

Technology choice: The architecture does not constrain the choice of database technology, so you can select the database best suited to the application's requirements.

Isolation:

Security: Containers isolate the application and presentation layers from each other and from the host operating system.

Resource management: Each container runs in its own isolated environment, so resources such as CPU and memory can be allocated and managed per container.

Efficiency:

Lightweight: MicroShift, as a lightweight container orchestration platform, is well-suited for edge environments, minimizing overhead and maximizing resource utilization.

Optimized resource allocation: The base operating system can be optimized for database performance, while the containerized layers can be tailored for specific application requirements.

Install and configure the Red Hat build of MicroShift

- As a user with root privileges, start the MicroShift service by entering the following command:

$ sudo systemctl start microshift

To configure your RHEL machine to start MicroShift when the machine boots, enter the following command:

$ sudo systemctl enable microshift

- Follow the procedure to access the MicroShift cluster.
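MicroShift may need some time after the service starts before oc can reach the cluster (the two systemctl commands above can also be combined as `sudo systemctl enable --now microshift`). The following is a minimal sketch of a hypothetical `wait_for` helper, not part of MicroShift or oc, that retries a command once per second until it succeeds or a timeout expires. The timeout value you pass is your own assumption about how long your hardware needs.

```shell
#!/usr/bin/env bash
# wait_for TIMEOUT_SECONDS COMMAND [ARGS...]
# Hypothetical helper: re-runs COMMAND once per second until it exits 0
# or TIMEOUT_SECONDS have elapsed. Returns 0 on success, 1 on timeout.
wait_for() {
  local timeout=$1
  shift
  local deadline=$(( SECONDS + timeout ))   # SECONDS is a bash builtin counter
  until "$@" >/dev/null 2>&1; do
    if (( SECONDS >= deadline )); then
      echo "timed out after ${timeout}s waiting for: $*" >&2
      return 1
    fi
    sleep 1
  done
  return 0
}
```

For example, `wait_for 300 oc get nodes` returns as soon as the API server answers, assuming your kubeconfig is already set up as described in the access procedure.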

Install and configure database

Create database, import data, and grant access

Connect to MySQL as an administrative user and follow these steps:

- Create the qod database:

mysql> create database qod;

- Create the application user and grant it privileges on the qod database:

Note: 10.42.0.0/16 is the pod network subnet of the Red Hat build of MicroShift cluster. Because a pod's IP address changes whenever the pod is re-created, privileges are granted to the entire subnet rather than to a single address.
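To see which client addresses the 'appuser'@'10.42.0.0/16' account covers, the following bash sketch checks whether an IPv4 address falls inside a CIDR block by comparing the network bits. The helper names `ip_to_int` and `in_cidr` are hypothetical. Pod addresses such as 10.42.0.15 match, while a service ClusterIP such as 10.43.31.180 does not. MySQL documents CIDR notation in account host names only as of MySQL 8.0.23; on older servers, the netmask form '10.42.0.0/255.255.0.0' describes the same range.

```shell
#!/usr/bin/env bash
# ip_to_int A.B.C.D: prints the address as a 32-bit unsigned integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"          # split the dotted quad into four octets
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# in_cidr IP NETWORK/PREFIX: exit status 0 if IP lies inside the block.
in_cidr() {
  local ip=$1 net=${2%/*} prefix=${2#*/}
  # Keep the top PREFIX bits of a 32-bit value, e.g. /16 -> 0xFFFF0000.
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$ip") & mask ) == ( $(ip_to_int "$net") & mask ) ))
}
```

For example, `in_cidr 10.42.0.15 10.42.0.0/16 && echo covered` prints `covered`.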

mysql> create user 'appuser'@'10.42.0.0/16' identified with mysql_native_password by 'Appuser@1';

mysql> grant all privileges on qod.* to 'appuser'@'10.42.0.0/16';

- Load the data into the qod database:

```
mysql> source /var/lib/mysql-files/1_createdb.sql
mysql> source /var/lib/mysql-files/2_authors.sql
mysql> source /var/lib/mysql-files/3_genres.sql
mysql> source /var/lib/mysql-files/4_quotes.sql
```
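Instead of sourcing each file interactively, the files can be fed to the mysql client non-interactively, because mysql reads SQL statements from standard input. This is a sketch under stated assumptions: `load_sql` and the `MYSQL_CLIENT` override are hypothetical names, and the client is assumed to read credentials from an option file such as ~/.my.cnf so that it does not prompt for a password for every file. The glob sorts names lexically, which keeps the 1_ to 4_ files in order but would place a 10_ file before 2_.

```shell
#!/usr/bin/env bash
# load_sql DIR DATABASE
# Feeds DIR/[0-9]*_*.sql to the MySQL client one file at a time, in the
# lexical order produced by the glob (1_..., 2_..., 3_..., 4_...).
# MYSQL_CLIENT is a hypothetical override; it defaults to "mysql".
load_sql() {
  local dir=$1 db=$2 f
  local client=${MYSQL_CLIENT:-mysql}
  for f in "$dir"/[0-9]*_*.sql; do
    # An unmatched glob stays literal, so a missing file means no matches.
    [ -e "$f" ] || { echo "no SQL files in $dir" >&2; return 1; }
    echo "loading $f" >&2
    # $client is left unquoted on purpose so "mysql -u root" splits into words.
    $client "$db" < "$f" || { echo "failed on $f" >&2; return 1; }
  done
}
```

For example, `MYSQL_CLIENT='mysql -u root' load_sql /var/lib/mysql-files qod` loads the four files into qod and stops at the first file that fails.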

Deploy presentation and application containers

- Download the resource definition files from microshift-qod.

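- Edit the qod-db-credentials file and update DB_HOST with the base64-encoded hostname of the RHEL host where MySQL is installed and configured. Remove the trailing newline before encoding; otherwise hostname | base64 encodes the newline as part of the value:

$ hostname | tr -d '\n' | base64

DB_USER and DB_PASS must likewise hold the base64-encoded user name and password of the application user created earlier (appuser and Appuser@1 in this example).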

Example:

```
[root microshift-qod]# cat qod-db-credentials
kind: Secret
apiVersion: v1
metadata:
  name: qod-db-credentials
data:
  DB_HOST: bXlzcWwtdm0=
  DB_PASS: QXBwdXNlckAx
  DB_USER: YXBwdXNlcg==
type: Opaque
```
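The values in the example Secret decode to mysql-vm, Appuser@1, and appuser. The sketch below shows one way to produce such values without a trailing newline: encoding "mysql-vm" followed by a newline, as a bare `hostname | base64` or `echo` would, yields bXlzcWwtdm0K instead of bXlzcWwtdm0=. The helper names `b64` and `secret_data` are hypothetical; `secret_data` prints the data entries in the same order as the example file.

```shell
#!/usr/bin/env bash
# b64 VALUE: base64-encodes VALUE exactly as given. printf '%s' adds no
# trailing newline, and tr removes the line wrapping that some base64
# implementations apply to long output.
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# secret_data HOST USER PASSWORD: prints the data: entries for the
# qod-db-credentials Secret, indented for YAML, in the example file order.
secret_data() {
  printf '  DB_HOST: %s\n' "$(b64 "$1")"
  printf '  DB_PASS: %s\n' "$(b64 "$3")"
  printf '  DB_USER: %s\n' "$(b64 "$2")"
}
```

For example, `secret_data "$(hostname)" appuser 'Appuser@1'` prints three lines that can replace the data entries in qod-db-credentials; command substitution already strips the newline from `$(hostname)`.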

- Create a namespace qod in the MicroShift cluster:

$ oc create namespace qod

- Optional: Switch to namespace qod:

$ oc config set-context --current --namespace=qod

- Optional: Set an alias for oc config set-context --current --namespace:

$ alias oc-project='oc config set-context --current --namespace'

- Deploy the presentation and application tiers on MicroShift in the qod namespace with Kustomize:

$ oc apply -k ./microshift-qod -n qod

- Check the status of the deployed applications:

$ oc get all -n qod

The following example illustrates the kind of output you should expect:

```
$ oc get all -n qod
NAME                           READY   STATUS    RESTARTS   AGE
pod/qod-api-5d6bf8b5fc-88m6q   1/1     Running   0          6m32s
pod/qod-web-79cf4c949b-fz2hq   1/1     Running   0          6m32s

NAME              TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
service/qod-api   ClusterIP   10.43.52.46    <none>        8080/TCP   6m32s
service/qod-web   ClusterIP   10.43.31.180   <none>        8080/TCP   6m32s

NAME                      READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/qod-api   1/1     1            1           6m32s
deployment.apps/qod-web   1/1     1            1           6m32s

NAME                                 DESIRED   CURRENT   READY   AGE
replicaset.apps/qod-api-5d6bf8b5fc   1         1         1       6m32s
replicaset.apps/qod-web-79cf4c949b   1         1         1       6m32s
```
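When scripting this check instead of reading the table by eye, a sketch like the following can test the pod lines. It assumes the pod column layout shown above (NAME, READY, STATUS, RESTARTS, AGE) and input produced with the `--no-headers` flag; `all_pods_running` is a hypothetical helper that succeeds only if at least one pod is listed and every pod is Running with all of its containers ready.

```shell
#!/usr/bin/env bash
# all_pods_running: reads `oc get pods --no-headers` output on stdin and
# exits 0 only if at least one pod is listed and every pod is Running
# with its READY column showing n/n.
all_pods_running() {
  awk '
    {
      n++
      split($2, ready, "/")            # READY column, e.g. "1/1"
      if ($3 != "Running" || ready[1] != ready[2]) {
        bad++
        print "not ready: " $1 > "/dev/stderr"
      }
    }
    END { if (n == 0 || bad > 0) exit 1 }
  '
}
```

For example: `oc get pods -n qod --no-headers | all_pods_running && echo "qod pods are ready"`.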

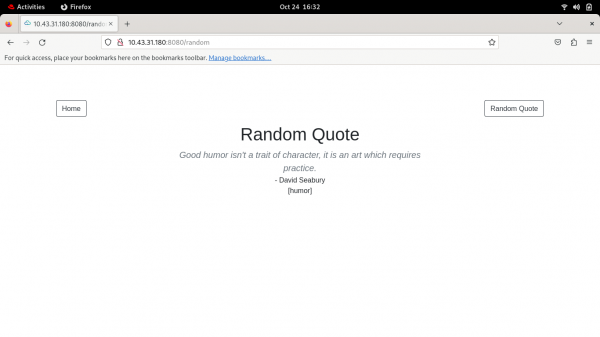

Open a web browser on the RHEL host and enter the qod-web service IP address with port 8080 to test the deployed 3-tier application. In the example above, the IP address of the qod-web service is 10.43.31.180, so the URL is http://10.43.31.180:8080. You should see output similar to what is shown in Figure 2.
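The browser check, and the optional command-line check that follows, can also be scripted. The sketch below assumes the tabular layout of the `oc get all` and `oc get svc` output: `service_endpoint` (a hypothetical helper) prints CLUSTER-IP:PORT for a named ClusterIP service, and `extract_quote` (also hypothetical) prints the text between `<i>` and `</i>` in the returned HTML. If you prefer not to parse tables, `oc get service qod-web -n qod -o jsonpath='{.spec.clusterIP}'` returns the IP address directly.

```shell
#!/usr/bin/env bash
# service_endpoint NAME: reads `oc get svc` or `oc get all` text on stdin
# and prints "CLUSTER-IP:PORT" for the ClusterIP service called NAME.
service_endpoint() {
  awk -v svc="$1" '
    { name = $1; sub(/^service\//, "", name) }   # "service/qod-web" -> "qod-web"
    name == svc && $2 == "ClusterIP" {
      split($5, ports, ",")        # PORT(S) column, e.g. "8080/TCP"
      sub(/\/.*/, "", ports[1])    # keep the first port number only
      print $3 ":" ports[1]
      found = 1
      exit
    }
    END { if (!found) exit 1 }
  '
}

# extract_quote: prints the text between <i> and </i> in the HTML on stdin.
extract_quote() {
  sed -n 's:.*<i>\(.*\)</i>.*:\1:p'
}
```

For example: `curl -s "http://$(oc get svc -n qod | service_endpoint qod-web)/random" | extract_quote`. The `-s` flag suppresses the curl progress meter that appears in the example output.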

Optional: To check the application from the command line, run the following command:

$ curl 10.43.31.180:8080/random | grep '<i>'

The following example illustrates the kind of output you should expect:

```
$ curl 10.43.31.180:8080/random | grep '<i>'
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100  1144  100  1144    0     0  44000      0 --:--:-- --:--:-- --:--:-- 44000
<i>So long as the memory of certain beloved friends lives in my heart, I shall say that life is good.</i>
```

Conclusion

The 3-tier architecture, coupled with Red Hat Device Edge, provides flexibility and isolation for deploying and managing edge applications.

The following use cases demonstrate the versatility of the 3-tier architecture in edge computing, enabling a wide range of applications that require low latency, high performance, and real-time processing capabilities.

Edge analytics:

Real-time data processing: Analyzing large volumes of data generated at the edge to extract valuable insights and make timely decisions.

Machine learning: Training and deploying machine learning models at the edge for tasks such as predictive maintenance, anomaly detection, and object recognition.

Content delivery networks (CDNs):

Caching content: Storing frequently accessed content closer to end-users to reduce latency and improve performance.

Dynamic content generation: Generating customized content based on user location, preferences, and device characteristics.

Edge gaming:

Low-latency gaming: Providing immersive gaming experiences with minimal lag by processing game logic and rendering graphics closer to the player.

Multiplayer gaming: Enabling real-time multiplayer interactions with reduced network latency.

Augmented and virtual reality:

Real-time rendering: Processing and rendering complex 3D graphics and environments at the edge for immersive AR/VR experiences.

Interactive content: Enabling interactive content and experiences that respond to user inputs in real time.

Edge robotics:

Autonomous systems: Controlling and coordinating autonomous robots and drones for tasks such as inspection, delivery, and surveillance.

Real-time decision-making: Enabling robots to make quick decisions based on local data and environmental conditions.