Red Hat OpenShift Serverless Functions is a framework with tooling to support function development. The framework is built on top of the open source Knative project. It has been available in Tech Preview since Red Hat OpenShift 4.7 and is available on 4.6 (EUS) as well. The central tool in OpenShift Serverless Functions is the kn func command, a plug-in extending the Knative kn command-line interface (CLI) to enable function development on the Knative platform.

Development of this plug-in is led by Red Hat through the Boson Project. This open source project has generated interest in the Knative community, where function development capabilities on top of Knative have long been anticipated. The Boson Project has been officially donated to the Knative project and its community.

Let's take a look at some of the new features added recently to OpenShift Serverless Functions. To demonstrate these capabilities, we will implement a simple Quarkus function that consumes data from an AWS S3 bucket and sends it to a Telegram chat.

Prerequisites

This article assumes that you have a Red Hat OpenShift account and an account on AWS. Before you start, be sure to do the following:

- Install version 1.16.0 or higher of the OpenShift Serverless Operator on your cluster.

- Install version 0.22.0 or higher of the kn command-line interface (CLI) on your local computer.

If you are new to OpenShift Serverless Functions, you might want to read the article Create your first serverless function with Red Hat OpenShift Serverless Functions by Naina Singh and the kn func documentation.

Sending data to a Telegram chat

As mentioned in the introduction, this demonstration creates a function that consumes data from an AWS S3 bucket and sends it to a Telegram chat. Let's start with the part that sends data to a Telegram chat.

First, export the FUNC_REGISTRY environment variable:

$ export FUNC_REGISTRY=docker.io/USERNAME

Each function is built as a container image, and that image needs to be hosted in a container registry. This environment variable tells the function framework which registry to use. Don't worry if you forget to set it: kn func asks you for the registry when it is needed.

You can now create the scaffolding for your function. Use the kn func command to create a Quarkus function, called s3-consumer, that responds to CloudEvents:

$ kn func create s3-consumer -l quarkus -t events

$ cd s3-consumer

The kn func command creates a Quarkus Maven project. Now open the src/main/java/functions/Function.java file to implement your business logic: receiving an event and sending its data to the Telegram chat.

Let's take a look at one possible implementation (demo source code can be found at https://github.com/boson-samples/s3-to-telegram):

package functions;

import java.nio.charset.StandardCharsets;
import java.util.Date;
import java.util.List;

import com.pengrad.telegrambot.TelegramBot;
import com.pengrad.telegrambot.model.Update;
import com.pengrad.telegrambot.request.GetUpdates;
import com.pengrad.telegrambot.request.SendMessage;
import com.pengrad.telegrambot.response.SendResponse;

import org.eclipse.microprofile.config.inject.ConfigProperty;

import io.quarkus.funqy.Funq;

public class Function {

    // Injected from the TELEGRAM_API_KEY environment variable (backed by a Secret)
    @ConfigProperty(name = "TELEGRAM_API_KEY")
    String telegramBotApiKey;

    @Funq
    public void function(byte[] data) {
        // Decode the event payload explicitly as UTF-8 rather than the platform default
        String input = new String(data, StandardCharsets.UTF_8);
        System.out.println("Received: " + input);

        TelegramBot bot = new TelegramBot(telegramBotApiKey);

        // Updates carry the chat ID; there are none until someone messages the bot
        List<Update> updates = bot.execute(new GetUpdates()).updates();
        if (!updates.isEmpty()) {
            long chatId = updates.get(0).message().chat().id();
            String message = new Date() + ": New data in S3 Bucket: " + input;

            // Forward the event data to the chat and log the outcome
            SendResponse response = bot.execute(new SendMessage(chatId, message));
            if (response.isOk()) {
                System.out.println("Data from S3 bucket has been consumed and sent to Telegram chat");
            } else {
                System.out.println("Sending data to Telegram chat failed, response: " + response);
            }
        } else {
            System.out.println("Telegram chat hasn't been initialized, to do so please send any message to the chat.");
        }
    }
}

We are using the Java Telegram Bot API to communicate with a Telegram bot. To use this API, we need to add a dependency in pom.xml, as we would normally do in a Quarkus project:

<dependency>
    <groupId>com.github.pengrad</groupId>
    <artifactId>java-telegram-bot-api</artifactId>
    <version>5.1.0</version>
</dependency>

The business logic is implemented in the public void function(byte[] data) method. First, it prints the received event, and then it calls the Telegram Bot API to get updates. You need the updates because they include a Telegram chatId, which identifies the correct Telegram conversation, that is, the specific chat you are having with the bot. To get a chatId, the conversation must be initialized, which means that at least one message must be sent to the conversation.
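The function above takes updates.get(0).message(), which assumes the first update is a plain message. A Telegram update can also describe other activity (for example, an edited message), in which case it carries no message. A slightly more defensive approach is to take the first update that actually has a message. The following plain-Java sketch models that idea; Chat, Message, and Update here are hypothetical, simplified stand-ins for the library's model classes, not the pengrad API itself.

```java
import java.util.List;
import java.util.Optional;

public class ChatIdSelector {

    // Hypothetical, simplified stand-ins for the Telegram update model.
    record Chat(long id) {}
    record Message(Chat chat) {}
    record Update(Message message) {} // message is null for non-message updates

    // Returns the chat ID of the first update that carries a message,
    // or empty if the conversation has not been initialized yet.
    static Optional<Long> findChatId(List<Update> updates) {
        return updates.stream()
                .map(Update::message)
                .filter(m -> m != null && m.chat() != null)
                .map(m -> m.chat().id())
                .findFirst();
    }

    public static void main(String[] args) {
        List<Update> updates = List.of(
                new Update(null),                          // e.g. an edited message
                new Update(new Message(new Chat(42L))));   // a regular message
        System.out.println(findChatId(updates));   // Optional[42]
        System.out.println(findChatId(List.of())); // Optional.empty
    }
}
```

Returning an Optional keeps the "chat not initialized yet" case explicit, which maps directly onto the else branch of the function.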

Once you have the chatId, you can send the incoming event data to that Telegram conversation by calling bot.execute(new SendMessage(...)). The rest of the code only logs status messages to the function's output, so the function stays very simple.
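If you are curious what the library does on the wire: sending a message is an HTTP call to the Telegram Bot API's sendMessage method, with chat_id and text as parameters. The following sketch builds such a request with the JDK's java.net.http classes, assuming a form-encoded POST to https://api.telegram.org/bot<token>/sendMessage. The token and chat ID are placeholders, and the request is only built here, not sent.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;

public class TelegramRequests {

    private static final String API_BASE = "https://api.telegram.org/bot";

    // Form-encodes the two sendMessage parameters used by the function.
    static String formBody(long chatId, String text) {
        return "chat_id=" + chatId
                + "&text=" + URLEncoder.encode(text, StandardCharsets.UTF_8);
    }

    // Builds (but does not send) a POST to the Bot API sendMessage method.
    static HttpRequest sendMessage(String token, long chatId, String text) {
        return HttpRequest.newBuilder()
                .uri(URI.create(API_BASE + token + "/sendMessage"))
                .header("Content-Type", "application/x-www-form-urlencoded")
                .POST(HttpRequest.BodyPublishers.ofString(formBody(chatId, text)))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest req = sendMessage("123:ABC", 42L, "hello from bucket!");
        System.out.println(req.method() + " " + req.uri());
        // To send it: HttpClient.newHttpClient().send(req, HttpResponse.BodyHandlers.ofString())
    }
}
```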

Referring to a Secret from within the function

You have probably noticed in the code that before the function definition, we are injecting the TELEGRAM_API_KEY environment variable into the telegramBotApiKey property. To communicate with a Telegram bot, you need an API key, but for security reasons, hardcoding this kind of information into the source code is not the best idea.

A better option is to consume this information from some configuration outside the function. Because you are deploying your function to OpenShift, it makes sense to store the data in a Kubernetes Secret and refer to it in your function. Luckily, referring to Secrets or ConfigMaps in environment variables (or mounted as volumes) from within a function is one of our new features.
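In the deployed function, the Secret ends up as an ordinary environment variable inside the container, which Quarkus then injects through @ConfigProperty. Outside Quarkus, the same idea boils down to reading that variable. A minimal plain-Java sketch, assuming the TELEGRAM_API_KEY name used in this article; requireEnv is a hypothetical helper that fails fast with a clear error instead of handing a missing key to the Telegram client.

```java
import java.util.Map;

public class SecretConfig {

    // Looks up a required setting and fails fast if it is missing or blank.
    // Taking a Map (rather than calling System.getenv directly) keeps it testable.
    static String requireEnv(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.isBlank()) {
            throw new IllegalStateException("Missing required environment variable: " + name);
        }
        return value;
    }

    public static void main(String[] args) {
        // Inside the function's container, the variable is populated from the telegram-api Secret.
        String key = requireEnv(System.getenv(), "TELEGRAM_API_KEY");
        // Avoid printing the secret itself; log only that it was found.
        System.out.println("Telegram API key loaded (" + key.length() + " characters)");
    }
}
```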

First, create a Secret that will hold your Telegram API key:

$ kubectl create secret generic telegram-api --from-literal=TELEGRAM_API_KEY=YOUR_TELEGRAM_API_KEY

Now add an environment variable to the function that extracts the key from this Secret. To do that, use the kn func interactive dialog that prompts you to select the newly created telegram-api secret and specify the key:

$ kn func config envs add

Figure 1 is a video that shows the sequence of prompts and answers you should give.

To check that the environment variable reference was added to the function, look at the envs section in the func.yaml file:

envs:
- name: TELEGRAM_API_KEY
  value: '{{ secret:telegram-api:TELEGRAM_API_KEY }}'
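Note that the value in func.yaml is a reference, not the key itself: it names the Secret (telegram-api) and the key inside that Secret (TELEGRAM_API_KEY), and the cluster supplies the actual value to the running function. The hypothetical parser below only illustrates how the parts of this secret:NAME:KEY form fit together; it is not how kn func itself processes func.yaml, and it handles only the secret form shown in this article.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class EnvReference {

    // Hypothetical model of a '{{ secret:NAME:KEY }}' reference.
    record SecretRef(String secretName, String key) {}

    // "{{", optional spaces, "secret:", the Secret name, ":", the key, optional spaces, "}}"
    private static final Pattern SECRET_REF =
            Pattern.compile("\\{\\{\\s*secret:([^:\\s]+):([^\\s}]+)\\s*\\}\\}");

    static SecretRef parse(String value) {
        Matcher m = SECRET_REF.matcher(value.trim());
        if (!m.matches()) {
            throw new IllegalArgumentException("Not a secret reference: " + value);
        }
        return new SecretRef(m.group(1), m.group(2));
    }

    public static void main(String[] args) {
        SecretRef ref = parse("{{ secret:telegram-api:TELEGRAM_API_KEY }}");
        System.out.println("Secret: " + ref.secretName() + ", key: " + ref.key());
    }
}
```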

Deploying the function

The business logic is now complete. To deploy the function, log in to OpenShift and create a new project:

$ oc login

$ oc new-project demo

Now run the following command, which deploys the function to the project demo:

$ kn func deploy

This command uses Cloud Native Buildpacks (a CNCF project) to produce an image in Open Container Initiative (OCI) format containing your function. The image is then deployed to the OpenShift cluster as a Knative Service.

Testing the function

The function has been successfully deployed, but what if you want to test it to make sure that it can accept an event and that the business logic is working correctly?

Fortunately, you can use another new feature for this: kn func emit, which sends a CloudEvent to the deployed function. The command offers many parameters for tweaking the CloudEvent data and metadata. In this case, we just want to send the string "test message" to the function and check that it is forwarded to the Telegram chat:

$ kn func emit -d "test message"

If you see a display like Figure 3, your test message has been successfully received by the Telegram chat.
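kn func emit takes care of the CloudEvents details for you. For reference, a CloudEvent sent over HTTP in binary content mode carries its required attributes (specversion, id, type, source) as ce- prefixed headers and its data as the request body. The sketch below builds such a request with java.net.http; the target URL, event type, and source values are hypothetical, and kn func emit may use different defaults.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.UUID;

public class CloudEventRequest {

    // Builds a binary-mode CloudEvents 1.0 HTTP request: the context
    // attributes go into ce-* headers and the event data goes into the body.
    static HttpRequest build(URI target, String type, String source, String data) {
        return HttpRequest.newBuilder(target)
                .header("ce-specversion", "1.0")
                .header("ce-id", UUID.randomUUID().toString())
                .header("ce-type", type)
                .header("ce-source", source)
                .header("Content-Type", "text/plain; charset=utf-8")
                .POST(HttpRequest.BodyPublishers.ofString(data, StandardCharsets.UTF_8))
                .build();
    }

    public static void main(String[] args) {
        // Hypothetical URL; use the route of your own deployed function.
        URI url = URI.create("https://s3-consumer-demo.apps.example.com");
        HttpRequest req = build(url, "com.example.test", "/local/test", "test message");
        req.headers().map().forEach((name, values) -> System.out.println(name + ": " + values));
        // To send it: HttpClient.newHttpClient().send(req, HttpResponse.BodyHandlers.ofString())
    }
}
```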

Using a Camel K Kamelet to consume data from AWS S3

Your function can now send events to the Telegram chat. The last task is to forward new files from an AWS S3 bucket to the function. This example uses Knative Eventing for this job. You will need an event source that sends events to the Knative Eventing Broker. Your function can then subscribe to the broker to consume the events.

Create a new broker called default:

$ kn broker create default

Now subscribe your function to the broker. You can do this easily in the OpenShift developer console (Figure 4).

Alternatively, you can use the kn CLI to create a trigger called mytrigger that links the broker to the s3-consumer function:

$ kn trigger create mytrigger --sink s3-consumer
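Because mytrigger is created without a filter, every event that reaches the default broker is delivered to s3-consumer. When a trigger does declare attribute filters, an event is delivered only if each listed attribute matches exactly. The sketch below is a simplified conceptual model of that exact-match rule, not Knative's implementation, and the event type and source shown are made-up examples.

```java
import java.util.Map;

public class TriggerFilter {

    // Simplified model of exact-match attribute filtering: every filter entry
    // must equal the corresponding CloudEvent attribute. An empty filter
    // (like the one mytrigger has) matches every event.
    static boolean matches(Map<String, String> filter, Map<String, String> event) {
        return filter.entrySet().stream()
                .allMatch(e -> e.getValue().equals(event.get(e.getKey())));
    }

    public static void main(String[] args) {
        Map<String, String> event = Map.of("type", "com.example.s3.object", "source", "example-bucket");
        System.out.println(matches(Map.of(), event));                                 // true
        System.out.println(matches(Map.of("type", "com.example.s3.object"), event)); // true
        System.out.println(matches(Map.of("type", "com.example.other"), event));     // false
    }
}
```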

Now create a Knative event source that reads data from AWS S3. On OpenShift, you can do that pretty easily, thanks to the Apache Camel K project and its Kamelets concept.

First, you need to install the Camel K Operator from the OperatorHub. The minimum recommended version is 1.4.0.

Then create an IntegrationPlatform custom resource in the namespace where your function is deployed, to enable Kamelets as Knative Eventing sources. Because of a known problem in version 1.4.0 of the Camel K Operator, it is important to set BuildStrategy to routine (Figure 5) if you are using that version.

Now, in the OpenShift developer console, add an AWS S3 source to your project. Specify the credentials for your AWS account along with the S3 bucket name (Figure 6). The bottom of the page should show that your new event source will automatically send events to the previously created default broker.

OpenShift Serverless Functions in action

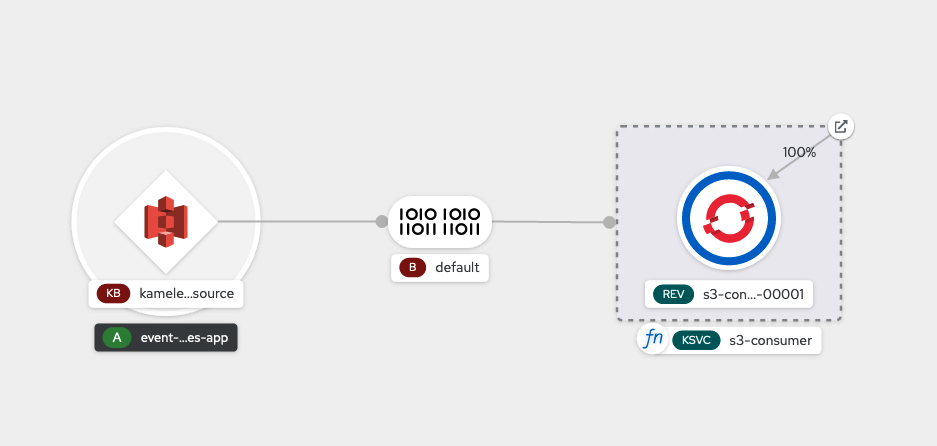

Figure 7 shows that we have successfully deployed a function and created an event source via the OpenShift developer console.

Let's test the function. Create a file in your AWS S3 bucket and see what happens. You can create the file in the AWS console or via the aws CLI:

$ aws s3 cp data_file.txt s3://YOUR-BUCKET

You should then see a new Telegram message, like the one in Figure 8, containing the contents of the uploaded file (in our case, "hello from bucket!").

Summary

In this article, you learned how to create a Quarkus function that receives data from a Knative Eventing source, powered by Camel K, and sends the messages to a Telegram conversation. I hope that you enjoyed this short tutorial and learned something new.

Here are the main takeaways:

- It is easy to refer to Kubernetes Secrets and ConfigMaps in a function.

- You can test event-based functions with the kn func emit command.

- You can use Camel K components as Knative Eventing sources.

- The Boson Project has been donated to Knative!

Try OpenShift Serverless Functions and let us know what you think by leaving a comment below.

Last updated: September 20, 2023