The Kamelet is a concept introduced near the end of 2020 by Camel K to simplify the design of complex system integrations. If you’re familiar with the Camel ecosystem, you know that Camel offers many components for integrating existing systems. However, the granularity of an integration is tied to those low-level components. With Kamelets, you can reason at a higher level of abstraction than with Camel alone.

A Kamelet is a document specifying an integration flow. A Kamelet uses Camel components and enterprise integration patterns (EIPs) to describe a system's behavior. You can reuse a Kamelet abstraction in any integration on top of a Kubernetes cluster. You can use any of Camel's domain-specific languages (DSLs) to write a Kamelet, but the most natural choice is the YAML DSL. The YAML DSL is designed to specify input and output formats, so any integration developer knows beforehand what kind of data to expect.

A Kamelet also serves as a source or sink of events, making Kamelets the building blocks of an event-driven architecture. Later in the article, I will introduce the concept of source and sink events and how to use them in an event-driven architecture with Kamelets.

Capturing complex system behavior

In the pre-Kamelet era, you would typically design an enterprise integration by reasoning about its lower-level components. An integration would be composed of queues, APIs, and databases glued together to produce or consume an event. Ideally, you would use Camel EIPs and components to develop the integration, and Camel K to run it on Kubernetes.

With the advent of Kamelets, you can think of the design phase as a declarative phase, where you describe the flow in a YAML document. You simply need to describe your system specifications. Most of the time, you will define variables that are provided at runtime, just before running the integration. Eventually, you will use visual tools to help with features such as suggestions and autocomplete. Once the Kamelet is ready, you can install its definition on a Kubernetes cluster and use it in a real integration.

At this stage, you still haven't executed anything. To see a Kamelet in action, you must use it inside an integration, usually specifying concrete values for the expected variables. I think this is the Kamelet's most remarkable feature: it splits integration development into two phases, design (abstract) and execution (concrete).

With this approach, you can provide your integration developers with a set of prebuilt, high-level abstractions to use in their concrete integrations. You can create, for example, a Kamelet whose scope is to expose events of a certain enterprise domain. You can think of each Kamelet as an encapsulation of anything with a certain “business” complexity. You can use these building blocks in any refined integration with less hassle.

Basically, we can view a Kamelet as a distilled view of a complex system exposing a limited set of information. The integration uses this information in its execution flow.

Kamelets in a Camel integration

Using Kamelets in a real integration is straightforward. You can think of Kamelets as a new Camel component. Each Kamelet can be designed as either a source (from in the Camel DSL) or a sink (to in the Camel DSL). (We will talk more about designing Kamelets as sources and sinks soon.)

The Camel K Operator can spot the kamelet component and handle all the magic behind the scenes. So, as soon as you run your integration, the Kamelet immediately starts doing its job.
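As a minimal sketch of the two roles, assume two Kamelets named my-source and my-sink are already installed on the cluster (both names are hypothetical and used only for illustration). A route in the YAML DSL could then reference one in from and the other in to:

```yaml
# Hypothetical route: my-source and my-sink are placeholder Kamelet names.
- from:
    # A source Kamelet starts the route, like any other Camel consumer endpoint.
    uri: "kamelet:my-source"
    steps:
      # A sink Kamelet receives the exchange, like any other producer endpoint.
      - to: "kamelet:my-sink"
```

The route itself contains no business logic. Whatever happens inside each Kamelet stays hidden behind its name.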

Kamelets in action

A demonstration is usually worth a thousand words, so let’s see a real example. First of all, we’ll define a Kamelet. For this example, we are going to enjoy a fresh beer:

apiVersion: camel.apache.org/v1alpha1
kind: Kamelet
metadata:
  name: beer-source
  labels:
    camel.apache.org/kamelet.type: "source"
spec:
  definition:
    title: "Beer Source"
    description: "Retrieve a random beer from catalog"
    properties:
      period:
        title: Period
        description: The interval between two events
        type: integer
        default: 1000
  types:
    out:
      mediaType: application/json
  flow:
    from:
      uri: timer:tick
      parameters:
        period: "#property:period"
      steps:
        - to: "https://random-data-api.com/api/beer/random_beer"
        - to: "kamelet:sink"

The flow section could be much more elaborate depending on the complexity of the abstraction you want to design. Let’s call this file beer-source.kamelet.yaml. We can now create this Kamelet on our Kubernetes cluster:

$ kubectl apply -f beer-source.kamelet.yaml

In this case, we’ve used the Kubernetes command-line interface (CLI) because we’re just declaring a resource of kind Kamelet. Using Red Hat OpenShift's oc would also be fine. To see the list of available Kamelets on the cluster, let's run:

$ kubectl get kamelets
NAME          PHASE
beer-source   Ready

We’ll use it in a real integration by replacing the previous flow section with the following:

- from:
    uri: "kamelet:beer-source?period=5000"
    steps:
      - log: "${body}"

Now, we have a new component named kamelet. We’re creating a new integration from beer-source, which is the Kamelet name we’ve provided. We’re also setting a variable, period, that we’ve previously declared in the Kamelet.

Run the Kamelet

Camel K is the easiest way to run a Kamelet. If you don’t yet have Camel K installed, follow the instructions to install it. When you are ready, bundle the integration in a file (say, beers.yaml) and run:

$ kamel run beers.yaml --dev

As soon as you run the integration, you will start enjoying fresh beers (just on screen) every five seconds.

Additional integration features

Now that you've seen a demonstration, let's get back to theory. Kamelets support type specification and reusability, which are both useful features for complex integrations.

Type specification

An important aspect of using Kamelets is type specification. In a Kamelet, you can declare the kind of data type that the Kamelet will consume as input and produce as output. This specification is very useful if the integration developer does not want to deal with the complex logic the Kamelet is hiding. Type information is available as a JSON schema, so a developer can easily read it and understand the information it exposes.

Let’s imagine a Kamelet designed to extract the prices from a catalog of products. The prices are calculated by filtering, splitting, and transforming data in a system composed of various endpoints and databases. At the end of the day, a developer wants to know what data format to expect. Having a name, description, and data types gives the developer a fair idea of what to expect from the Kamelet.
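To make the idea concrete, here is a hedged sketch of how the descriptive part of such a price Kamelet might look. The name price-source, the event fields (productId, price, currency), and the presence of a schema entry next to mediaType are all assumptions made for illustration. The flow is deliberately left out, since hiding it is the whole point:

```yaml
# Fragment only: a hypothetical Kamelet that describes its output type.
apiVersion: camel.apache.org/v1alpha1
kind: Kamelet
metadata:
  name: price-source              # hypothetical name
  labels:
    camel.apache.org/kamelet.type: "source"
spec:
  definition:
    title: "Price Source"
    description: "Emit the current price of each product in the catalog"
  types:
    out:
      mediaType: application/json
      schema:                     # assumed: JSON schema of each emitted event
        type: object
        required:
          - productId
          - price
        properties:
          productId:
            type: string
          price:
            type: number
          currency:
            type: string
  # flow omitted: the filtering, splitting, and transformation logic lives here
```

A developer reading only the title, description, and schema already knows that each event is a JSON object with a product identifier and a numeric price, without having to look at the endpoints and databases behind it.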

Reusability

I mentioned that a Kamelet is installed on a Kubernetes cluster, where it is ready to be used in any Camel K integration. Installation relies on a Kubernetes extension mechanism: each Kamelet is stored on the cluster as a custom resource.

This is a nice way to make Kamelets first-class citizens of the cluster, letting them be discovered and used by other applications. Having a variety of Kamelets that others in your organization can reuse means you won’t need to reinvent the wheel if something is already there.

The possibility of defining variables as abstract placeholders for data that can change is key to reusability. If you can define a general flow that describes an integration or a part of an integration with parameters, you will have a Kamelet ready for possible reuse.
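As a small illustration, the beer-source Kamelet defined earlier can be reused with different values for its period variable. The two routes below are a sketch. They could just as well live in two separate integrations, and the log prefixes are only there to tell the feeds apart:

```yaml
# Route 1: a fast feed, one beer per second.
- from:
    uri: "kamelet:beer-source?period=1000"
    steps:
      - log: "fast: ${body}"

# Route 2: a slow feed, one beer per minute, built from the same Kamelet.
- from:
    uri: "kamelet:beer-source?period=60000"
    steps:
      - log: "slow: ${body}"
```

Neither route repeats the timer or the HTTP call. Only the value of the parameter changes.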

Using Kamelets in an event-driven architecture

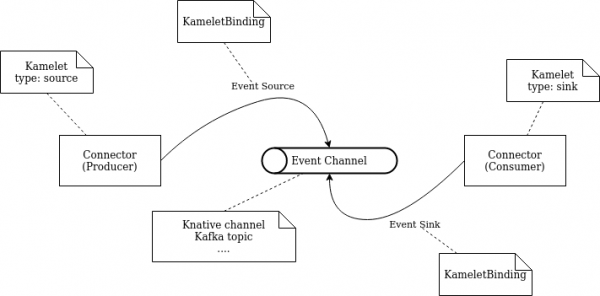

Because Kamelets are high-level concepts that define input and output, it’s possible to think of them as the building blocks of an event-driven architecture. Earlier, I mentioned that you could create a Kamelet as a source or sink. In an event-driven architecture, a source emits events, which a sink can consume. Therefore, you can model any system as one that produces events (a source) or consumes events (a sink). The idea is to distill a view of a more complex system.

Declaring high-level flows in a Kamelet binding

Camel K engineers have put together these concepts to include a middle layer in charge of routing events to the interested parties. This is done declaratively in a Kamelet binding.

A Kamelet binding lets you tell a Kamelet source to send events to a destination that can be represented by a Knative channel, a Kafka topic, or a generic URI. The same mechanism lets a Kamelet sink consume events from a destination. Thus, you can use Kamelet bindings to define the high-level flows of an event-driven architecture, as shown in Figure 1. The logic of routing is completely hidden from the final user.
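As a hedged sketch of the non-Kafka destinations, the binding below sends the events of the beer-source Kamelet to a Knative channel. The binding name, the channel name beers, and the use of an InMemoryChannel from the messaging.knative.dev/v1 API are assumptions for illustration. The commented lines show how a generic URI could be used instead, with a placeholder address:

```yaml
apiVersion: camel.apache.org/v1alpha1
kind: KameletBinding
metadata:
  name: beer-to-channel           # hypothetical name
spec:
  source:
    ref:
      kind: Kamelet
      apiVersion: camel.apache.org/v1alpha1
      name: beer-source
  sink:
    # Option 1 (assumed): a Knative channel referenced by kind, API version, and name.
    ref:
      kind: InMemoryChannel
      apiVersion: messaging.knative.dev/v1
      name: beers                 # hypothetical channel
    # Option 2 (assumed): a generic URI in place of the ref block above.
    # uri: "https://example.com/beers"
```

In both cases, the source Kamelet stays untouched. Only the binding decides where the events go.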

An integration developer can create a workflow based on the relevant events by binding these events to a Kamelet sink. Imagine an online store. An order system emits events that can be captured by a shipping system, an inventory system, or a user preference system. The three sinks work independently, relying on this framework. Each of them watches and reacts to events.

The order system could be represented by the order source Kamelet, which hides its internal complexity. Then, you create a Kamelet binding to start emitting events into a Knative, Kafka, or URI channel. On the consumer side, a developer could design a decoupled shipping sink Kamelet and bind it to the order event channel via a Kamelet binding. The shipping sink will therefore start receiving events and executing the business logic that was coded into it.
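The online store scenario can be sketched as two independent bindings that meet on a shared channel. Everything named here (order-source, shipping-sink, the order-events topic, and the binding names) is hypothetical, and a Kafka topic managed by Strimzi is assumed as the channel:

```yaml
# Binding 1: the order source publishes its events to the channel.
apiVersion: camel.apache.org/v1alpha1
kind: KameletBinding
metadata:
  name: order-event-source        # hypothetical name
spec:
  source:
    ref:
      kind: Kamelet
      apiVersion: camel.apache.org/v1alpha1
      name: order-source          # hypothetical Kamelet
  sink:
    ref:
      kind: KafkaTopic
      apiVersion: kafka.strimzi.io/v1beta1
      name: order-events          # hypothetical topic
---
# Binding 2: the shipping sink reads from the same channel and knows nothing
# about the system that produced the events.
apiVersion: camel.apache.org/v1alpha1
kind: KameletBinding
metadata:
  name: shipping-event-sink       # hypothetical name
spec:
  source:
    ref:
      kind: KafkaTopic
      apiVersion: kafka.strimzi.io/v1beta1
      name: order-events
  sink:
    ref:
      kind: Kamelet
      apiVersion: camel.apache.org/v1alpha1
      name: shipping-sink         # hypothetical Kamelet
```

The inventory and user preference systems would each add one more binding shaped like the second one, pointing at their own sink Kamelet.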

Kamelet bindings in action

Let’s use the previous Kamelet to emit events (random beers) into a Kafka topic. To make this example work, you need a running Kafka cluster. If you don't have one, you can set it up with Strimzi. Once it's ready, create a Kafka topic to use as your destination channel:

apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaTopic
metadata:
  name: beer-events
  labels:
    strimzi.io/cluster: my-cluster
spec:
  partitions: 1
  replicas: 1
  config:
    retention.ms: 7200000
    segment.bytes: 1073741824

Let’s call this file beer-destination.yaml. Now, create the Kafka topic on the cluster:

$ kubectl apply -f beer-destination.yaml

As soon as that is ready, declare a KameletBinding whose goal is to forward any event generated by the producer to a destination:

apiVersion: camel.apache.org/v1alpha1
kind: KameletBinding
metadata:
  name: beer-event-source
spec:
  source:
    ref:
      kind: Kamelet
      apiVersion: camel.apache.org/v1alpha1
      name: beer-source
    properties:
      period: 5000
  sink:
    ref:
      kind: KafkaTopic
      apiVersion: kafka.strimzi.io/v1beta1
      name: beer-events

Call this file beer-source.binding.yaml and use it to create the resource on the cluster:

$ kubectl apply -f beer-source.binding.yaml

Create the sink flow

Let’s pause a moment. The structure of this document is quite simple. We declare a source and a sink. In other words, we’re telling the system where we want certain events to end up. So far, we have a source, along with a mechanism to collect its events and forward them to a destination. To continue, we need a consumer (aka, a sink).

Let’s create a log-sink. Its goal is to consume any generic event and print it out on the screen:

apiVersion: camel.apache.org/v1alpha1
kind: Kamelet
metadata:
  name: log-sink
  labels:
    camel.apache.org/kamelet.type: "sink"
spec:
  definition:
    title: "Log Sink"
    description: "Consume events from a channel"
  flow:
    from:
      uri: kamelet:source
      steps:
        - to: "log:sink"

This is a simple logger, but you can design a sink flow as complex as your use case requires. Let’s call this one log-sink.kamelet.yaml and create the logger on our cluster:

$ kubectl apply -f log-sink.kamelet.yaml

Create the Kamelet bindings

Now, we create a KameletBinding to match the events to the logger we just created:

apiVersion: camel.apache.org/v1alpha1
kind: KameletBinding
metadata:
  name: log-event-sink
spec:
  source:
    ref:
      kind: KafkaTopic
      apiVersion: kafka.strimzi.io/v1beta1
      name: beer-events
  sink:
    ref:
      kind: Kamelet
      apiVersion: camel.apache.org/v1alpha1
      name: log-sink

Notice that we've just swapped the previous binding's sink (the beer-events topic) to be the source, and declared the new log Kamelet as the sink. Let’s call the file log-sink.binding.yaml and create it on our cluster:

$ kubectl apply -f log-sink.binding.yaml

Check the running integration

Behind the scenes, the Camel K Operator is in charge of transforming those Kamelet bindings into running integrations. We can see that by executing:

$ kamel get
NAME                PHASE     KIT
beer-event-source   Running   kit-c040jsfrtu6patascmu0
log-event-sink      Running   kit-c040r3frtu6patascmv0

So, let’s have a final look at the logs to see the sink in action:

$ kamel logs log-event-sink

…

{"id":9103,"uid":"19361426-23dd-4051-95ba-753d7f0ebe7b","brand":"Becks","name":"Alpha King Pale Ale","style":"German Wheat And Rye Beer","hop":"Mt. Rainier","yeast":"1187 - Ringwood Ale","malts":"Vienna","ibu":"39 IBU","alcohol":"3.8%","blg":"11.1°Blg"}]

{"id":313,"uid":"0c64734b-2903-4a70-b0a1-bac5e10fea75","brand":"Rolling Rock","name":"Chimay Grande Réserve","style":"Dark Lager","hop":"Northern Brewer","yeast":"2565 - Kölsch","malts":"Chocolate malt","ibu":"86 IBU","alcohol":"4.8%","blg":"19.1°Blg"}]

Conclusion

Thanks to Kamelets and the Kamelet binding mechanism, we managed to create a simple event-driven architecture: a consumer decoupled from a producer. The nicest thing is that we did everything by just declaring the origin of events (beer-source) and their destination (log-sink).