Red Hat Developer Blog

Here's our most recent blog content. Explore our featured monthly resource as well as our most recently published items. Don't miss the chance to learn more about our contributors.

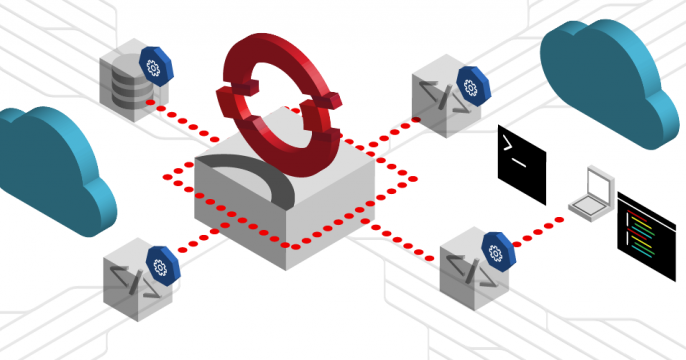

The RamaLama project simplifies AI model management for developers by using...

Creating Grafana dashboards from scratch can be tedious. Learn how to create...

This guide walks through how to create an effective qna.yaml file and context...

This article details new Python performance optimizations in RHEL 9.5.

A list of curated posts of essential AI tutorials from our Node.js team at...

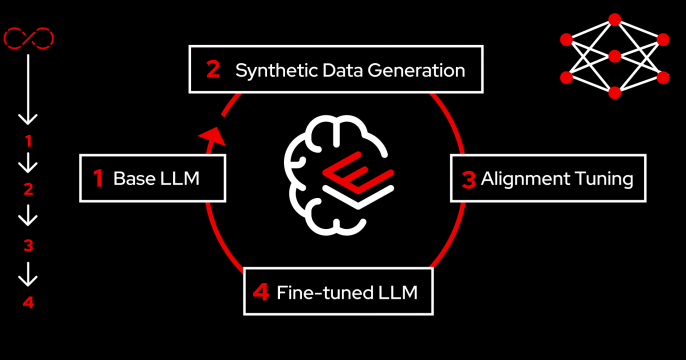

Discover how you can use RHEL AI to fine-tune and deploy Granite LLM models,...

Monitor policy compliance, detect violations, and track detailed network...

Explore 3 issues that can compromise your Java application's data...

The .NET 9 release is now available, targeting Red Hat Enterprise Linux...

Learn how to install the Red Hat OpenShift AI operator and its components in...

Learn how to generate complete Ansible Playbooks using natural language...

Learn how to fine-tune large language models with specific skills and...

Learn how to get started with Red Hat Connectivity Link, which provides...