GitOps practices are becoming the de facto way to deploy applications and implement continuous delivery/deployment in the cloud-native landscape. Red Hat OpenShift GitOps, based on the community project Argo CD, is taking this to the next level by providing a seamless integration with Red Hat OpenShift.

In this two-part series, we will present an approach for automating application deployments and infrastructure component deployments across multiple OpenShift multi-tenant clusters, leveraging OpenShift GitOps (Argo CD) as the core component.

Architectural aspects and design patterns

Thanks to simplified OpenShift installation procedures and the ability to manage multiple clusters, more organizations are deploying numerous smaller, dedicated OpenShift clusters instead of large multi-stage, multi-network-zone clusters.

However, from an application perspective, this architecture comes with several challenges. Imagine a scenario where some of the application components integrate with sensitive data that needs to be protected from the outside world. As a result, these components must be deployed in an internal network zone to satisfy compliance requirements. Other application components that do not manage sensitive assets can be exposed externally and deployed into the DMZ network zone.

The next question concerns the OpenShift deployment architecture: will a single multi-network-zone OpenShift cluster host all the application components, or will there be one OpenShift cluster per network zone? This architectural decision typically depends on the enterprise environment and any applicable regulations. In this article, we explore the latter option. The goal is an implementation in which every possible task is automated, and Argo CD is highly recommended for reaching that goal.

Prerequisites

This scenario consists of an infrastructure with multiple single-network zone OpenShift clusters, with application components deployed across those clusters.

Before proceeding, ensure you have the following prerequisites:

- An OpenShift account.

- A GitHub account.

- Helm installed.
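Before starting, it can help to confirm that the command-line tools used in this series are installed. The following is a minimal shell sketch; the function name check_tools is hypothetical, and it assumes the tools you need are the OpenShift CLI (oc) and Helm:

```bash
#!/usr/bin/env bash
# Sketch: report which of the given command-line tools are missing from PATH.
# Returns 0 when every tool is found, 1 otherwise.
# Example: check_tools oc helm && echo "Prerequisites found"
check_tools() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: ${tool}" >&2
      missing=1
    fi
  done
  return "$missing"
}
```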

In this article, you will resolve your design questions before configuring your deployment. You will:

- Learn GitOps practices.

- Install the OpenShift GitOps operator.

- Understand the application deployment workflow.

- Work with Helm charts.

Standalone vs. hub-and-spoke

We will approach the design by considering how Argo CD handles the application deployments and which architectural pattern to use. There are two common approaches to consider:

- Standalone pattern: In this approach, each OpenShift cluster has its own Argo CD instance that will manage the configuration of the cluster on which it is deployed.

- Hub and spoke: This approach uses a centralized Argo CD instance, ideally deployed on a separate OpenShift cluster in a so-called management zone, which typically hosts components that support the other clusters. With this model, the Argo CD instance manages configurations across all OpenShift clusters.
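In the hub-and-spoke model, the central Argo CD instance decides where each application lands through the destination of an Argo CD Application resource. The sketch below prints such an Application that targets a spoke cluster by the name it was registered under. The function name render_app, the openshift-gitops namespace, the default project, and the example repository are assumptions for illustration:

```bash
#!/usr/bin/env bash
# Sketch: print an Argo CD Application whose destination is a remote
# (spoke) cluster, referenced by its registered cluster name.
# Arguments: app name, cluster name, target namespace, Git repo URL, repo path.
# Example (hypothetical values):
#   render_app frontend spoke-cluster-1 frontend https://github.com/example/apps.git frontend | oc apply -f -
render_app() {
  local app="$1" cluster="$2" namespace="$3" repo="$4" path="$5"
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: ${app}
  namespace: openshift-gitops
spec:
  project: default
  source:
    repoURL: ${repo}
    targetRevision: HEAD
    path: ${path}
  destination:
    name: ${cluster}
    namespace: ${namespace}
EOF
}
```

In a standalone setup, the destination would instead point at the local cluster (https://kubernetes.default.svc), because each Argo CD instance only manages the cluster it runs on.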

Both architectural pattern options come with advantages and disadvantages, summarized as follows:

Standalone:

- Less complexity for configuration of Argo CD instances.

- Only cluster-internal network connectivity is required for managing resources.

- Management of more than one Argo CD instance.

- No centralized view for applications.

Hub-and-spoke:

- Centralized view of applications.

- Fewer Argo CD instances to manage.

- Network connectivity from the centralized Argo CD instance to every managed OpenShift cluster must be planned and sized accordingly. Otherwise, bottlenecks might occur when managing resources on different OpenShift clusters.

- Additional management network zones and OpenShift clusters are required to deploy a centralized Argo CD.

Single-tenant vs. multi-tenant Argo CD instances

Another aspect to consider is the tenancy of the OpenShift clusters and how the architecture impacts the Argo CD design decisions. Do the OpenShift clusters contain a single tenant used by a single application team, or do they contain multiple tenants and share resources between more than one team?

For single-tenant use cases, a single Argo CD instance can handle application configuration without the need for any special role-based access control (RBAC) configurations. However, for multi-tenant clusters, the scenario is more complex.

Another architectural decision must be made, involving the following two options:

- Platform owners deploy a multi-tenant Argo CD instance for all application teams. The platform team is in charge of managing access for all application teams and must configure Argo CD RBAC accordingly.

- Each application team owns and manages its own Argo CD instance. The team-owned Argo CD instances rely on OpenShift RBAC and therefore only have access to the team's resources.
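To make the fine-grained RBAC of the multi-tenant option more concrete, the following sketch generates Argo CD RBAC policy lines in the policy.csv format. It assumes one AppProject per team, named after the team, and an identity-provider group with the same name; the function name render_rbac_policy and the team names are hypothetical:

```bash
#!/usr/bin/env bash
# Sketch: generate Argo CD RBAC policy lines (policy.csv format) that give
# each team full control over applications in an AppProject named after
# the team, and map a group with the same name to that role.
# Example: render_rbac_policy team-a team-b
render_rbac_policy() {
  local team
  for team in "$@"; do
    # p, <subject>, <resource>, <action>, <project>/<application>, <effect>
    echo "p, role:${team}, applications, *, ${team}/*, allow"
    # g, <group>, <role>
    echo "g, ${team}, role:${team}"
  done
}
```

With OpenShift GitOps, the generated lines would typically go into the spec.rbac.policy field of the ArgoCD resource. Each team's AppProject should also restrict the destinations and source repositories that team may use; the policy alone does not do that.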

These tenancy options come with pros and cons, listed as follows:

Multi-tenant:

- Fewer Argo CD instances to manage.

- All applications are managed in a single location.

- Fine-grained RBAC required.

- More complex setup and configuration.

Team-owned single-tenant:

- Less complexity required for the configuration of single-tenant Argo CD instances.

- Better overview and control for the team.

- No need for a fine-grained RBAC definition.

- Greater number of Argo CD instances (one per team).

Design options

Considering the previous architectural design scenario, the following options are available to implement multi-cluster deployments, utilizing GitOps and Argo CD.

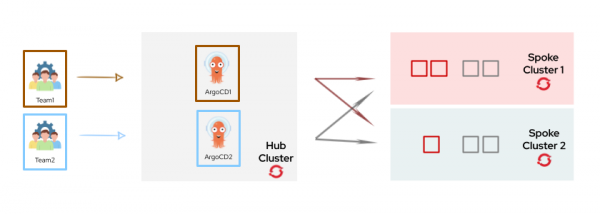

Figure 1 depicts a single-tenant Argo CD instance with a hub-and-spoke architecture.

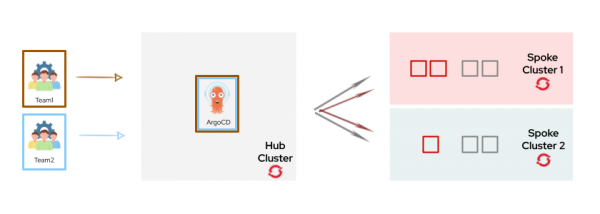

Figure 2 shows a single-tenant Argo CD instance with a standalone architecture.

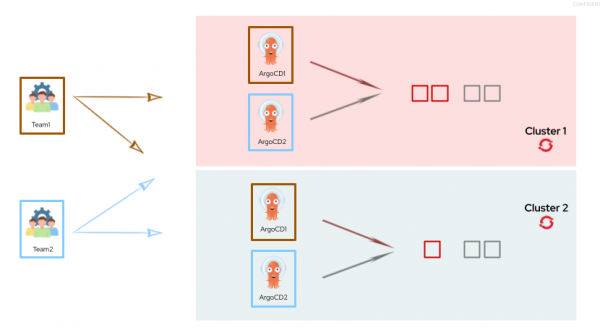

Figure 3 depicts a multi-tenant Argo CD instance with a hub-and-spoke architecture.

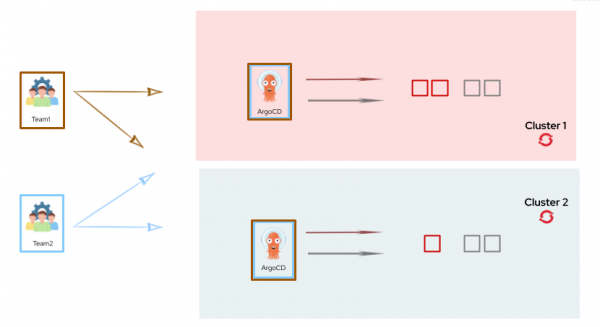

Figure 4 illustrates a multi-tenant Argo CD with a standalone architecture.

For the remainder of this series, we will present a solution based on a hub-and-spoke architecture using single-tenant Argo CD instances (as shown in Figure 1).

Disclaimer:

This solution assumes that you are not using Red Hat Advanced Cluster Management for Kubernetes (RHACM) to manage the deployment of OpenShift infrastructure configurations and their associated applications. Instead, it uses Argo CD as the primary management tool.

Set up Argo CD admin instance

Let’s begin by providing an overview of the proposed target solution, including all of the components and infrastructure services.

Now that you have resolved your design questions, you can configure your deployment. You will:

- Install the OpenShift GitOps operator.

- Configure clusters.

- Create a Secret object.

Install the OpenShift GitOps operator

The first step of our implementation installs the OpenShift GitOps operator and the Argo CD admin instance on the hub cluster. For the sake of simplicity, we will use the default Argo CD instance deployed with the operator, called openshift-gitops.

To do this, open OperatorHub in the OpenShift web console and install the Red Hat OpenShift GitOps operator. Then customize the installation to your own requirements.
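If you prefer to install the operator declaratively instead of through OperatorHub, you can create an Operator Lifecycle Manager (OLM) Subscription. The sketch below assumes installation into the openshift-operators namespace (which has a cluster-wide OperatorGroup by default) from the redhat-operators catalog. The channel name is an assumption, so check which channels your cluster offers first:

```bash
#!/usr/bin/env bash
# Sketch: print an OLM Subscription for the OpenShift GitOps operator.
# The channel defaults to "latest"; pass another channel name if needed.
# List available channels first, for example:
#   oc get packagemanifest openshift-gitops-operator -n openshift-marketplace \
#     -o jsonpath='{.status.channels[*].name}'
# Then apply it:
#   render_gitops_subscription latest | oc apply -f -
render_gitops_subscription() {
  local channel="${1:-latest}"
  cat <<EOF
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: openshift-gitops-operator
  namespace: openshift-operators
spec:
  channel: ${channel}
  installPlanApproval: Automatic
  name: openshift-gitops-operator
  source: redhat-operators
  sourceNamespace: openshift-marketplace
EOF
}
```

Once the operator is running, the default instance's pods should appear in the openshift-gitops namespace (for example, with oc get pods -n openshift-gitops).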

Disclaimer:

Keep in mind that the openshift-gitops Argo CD instance has cluster-wide permissions and is intended for managing cluster configuration. In practice, a dedicated, newly created Argo CD instance might better fit your security requirements.

Configure the clusters

After Argo CD is up and running, we need to configure the spoke clusters. This task consists of creating namespaces and service accounts for Argo CD with the necessary permissions for it to properly manage these clusters.

Perform the next steps on all the spoke clusters.

Create a new namespace for Argo CD on each spoke cluster as follows:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: admin-argocd
```

Next, create a new ServiceAccount for Argo CD in the namespace:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-argocd-sa
  namespace: admin-argocd
```

Then create a cluster role binding that assigns the cluster-admin role to the service account:

```yaml
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: argocd-admin-remote-binding
subjects:
- kind: ServiceAccount
  name: admin-argocd-sa
  namespace: admin-argocd
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
```

Disclaimer:

The manifest grants the Argo CD service account cluster-admin privileges, which may not be acceptable if you follow the principle of least privilege. You can instead create a custom ClusterRole with a reduced permission set that better aligns with your organization's security requirements.
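As one possible starting point, the following sketch prints a ClusterRole that grants read access to all resources, so that Argo CD can observe the cluster state, and write access to only a few resource types. The role name argocd-admin-restricted and the list of writable resources are hypothetical; derive the real list from what your admin instance actually manages:

```bash
#!/usr/bin/env bash
# Sketch: print a reduced-permission ClusterRole for the Argo CD service
# account. Rule 1 gives cluster-wide read access (this still includes
# secrets). Rules 2 and 3 allow writes only to an illustrative set of
# resource types, which is an assumption, not a recommendation.
# Usage: render_restricted_clusterrole | oc apply -f -
# then set roleRef.name in the ClusterRoleBinding to the new role name.
render_restricted_clusterrole() {
  local name="${1:-argocd-admin-restricted}"
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ${name}
rules:
- apiGroups: ["*"]
  resources: ["*"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["namespaces", "configmaps", "services", "serviceaccounts"]
  verbs: ["create", "update", "patch", "delete"]
- apiGroups: ["apps"]
  resources: ["deployments", "statefulsets"]
  verbs: ["create", "update", "patch", "delete"]
EOF
}
```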

Create a long-lived token for the service account on all spoke clusters.

Disclaimer:

In OpenShift versions newer than 4.10 (Kubernetes 1.23), service account API credentials are requested through the TokenRequest API and are bound to the lifetime of the pod. This is the most secure way to interact with the Kubernetes API. Before Kubernetes 1.22, credentials for accessing the API were obtained by creating token secrets, which never expired. In our scenario, however, the Argo CD instance needs ongoing access to the Kubernetes API of each spoke cluster, and that access is not bound to the lifetime of any pod, so we still use non-expiring API credentials. Be aware that long-lived tokens carry security risks.

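Create a Secret of type kubernetes.io/service-account-token in the admin-argocd namespace. Kubernetes populates it with a long-lived token for the referenced service account: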

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: argocd-admin-sa-secret
  namespace: admin-argocd
  annotations:
    kubernetes.io/service-account.name: admin-argocd-sa
type: kubernetes.io/service-account-token
```

Once the secret has been created in the cluster, extract the service account token. The token is stored base64-encoded, so decode it before use:

```bash
oc get secret argocd-admin-sa-secret -n admin-argocd -o jsonpath='{.data.token}' | base64 -d
```

Note:

With the emergence of the Argo CD Agent, long-lived tokens will no longer be required in a hub-and-spoke architecture. However, the argocd-agent project is still new, far from feature-complete, and not yet ready for general consumption.

Finally, switch back to the hub cluster and declaratively create a secret for every spoke cluster. Each secret describes the connection to the spoke cluster and contains the service account token extracted from it.

Since the secret must contain the CA certificate of the cluster as base64-encoded data, extract it first (note that openssl s_client expects a host:port pair, not a URL):

```bash
echo | openssl s_client -connect <SPOKE_API_SERVER>:6443 2>/dev/null | openssl x509 -outform PEM | base64 | tr -d '\n'
```

Then, create the secrets on the hub cluster for every spoke cluster:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: spoke-cluster-1
  namespace: openshift-gitops
  labels:
    argocd.argoproj.io/secret-type: cluster
type: Opaque
stringData:
  name: spoke-cluster-1
  server: https://api.<cluster-name>.<cluster-domain>:6443
  config: |
    {
      "bearerToken": "<authentication token>",
      "tlsClientConfig": {
        "insecure": false,
        "caData": "<base64 encoded certificate>"
      }
    }
```

Disclaimer:

Do not commit plain Kubernetes secrets directly to Git repositories. Use a secret management solution to manage your secrets instead.
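To avoid hand-editing (and accidentally committing) one secret per spoke cluster, you can render the secret from variables and pipe it straight to the hub cluster. A minimal sketch; the function name render_cluster_secret and the kubeconfig context names hub and spoke-cluster-1 are hypothetical, and the openshift-gitops namespace assumes the default Argo CD instance:

```bash
#!/usr/bin/env bash
# Sketch: print an Argo CD cluster secret for one spoke cluster.
# Arguments: cluster name, API server URL, bearer token, base64-encoded CA data.
# Example usage (context names and host are placeholders):
#   TOKEN=$(oc --context spoke-cluster-1 get secret argocd-admin-sa-secret \
#     -n admin-argocd -o jsonpath='{.data.token}' | base64 -d)
#   CA=$(echo | openssl s_client -connect api.<cluster-name>.<cluster-domain>:6443 2>/dev/null \
#     | openssl x509 -outform PEM | base64 | tr -d '\n')
#   render_cluster_secret spoke-cluster-1 https://api.<cluster-name>.<cluster-domain>:6443 \
#     "$TOKEN" "$CA" | oc --context hub apply -f -
render_cluster_secret() {
  local name="$1" server="$2" token="$3" ca_data="$4"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
  namespace: openshift-gitops
  labels:
    argocd.argoproj.io/secret-type: cluster
type: Opaque
stringData:
  name: ${name}
  server: ${server}
  config: |
    {
      "bearerToken": "${token}",
      "tlsClientConfig": {
        "insecure": false,
        "caData": "${ca_data}"
      }
    }
EOF
}
```

Piping the output directly to oc apply keeps the token out of files and Git history, although it may still end up in your shell history if you paste it inline.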

The admin Argo CD instance

You can automate most of these steps with Helm, such as creating the namespaces, roles, and service accounts. However, the initial token configuration in Argo CD must be done manually.
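As an illustration of the Helm approach, the sketch below installs a chart on every spoke cluster in turn. It assumes a hypothetical local chart (./spoke-setup) that contains the service account, cluster role binding, and token secret; the admin-argocd namespace itself is created by Helm through --create-namespace, so the chart should not also define it. Setting HELM=echo prints the commands instead of running them:

```bash
#!/usr/bin/env bash
# Sketch: install or upgrade a spoke-setup chart on each kubeconfig context.
# Arguments: chart path, then one or more kubeconfig context names.
# Example: install_spoke_setup ./spoke-setup spoke-cluster-1 spoke-cluster-2
# Dry run: HELM=echo install_spoke_setup ./spoke-setup spoke-cluster-1
install_spoke_setup() {
  local chart="$1"; shift
  local ctx
  for ctx in "$@"; do
    # Stop at the first cluster where the release fails.
    ${HELM:-helm} upgrade --install spoke-setup "$chart" \
      --kube-context "$ctx" \
      --namespace admin-argocd --create-namespace || return 1
  done
}
```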

Once up and running, the admin Argo CD instance may perform deployments and configurations on the configured OpenShift spoke clusters.

This is a list of configurations for which an admin Argo CD instance can be responsible:

- Configuring clusters.

- Creating and configuring namespaces.

- Managing and configuring tenant Argo CD instances.

- Managing observability and/or CI tooling.

- Managing the centralized tooling, like container registries, security tooling, and observability.
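Several of these responsibilities, such as configuring clusters, apply to every spoke cluster alike. One way to express that once rather than per cluster is an ApplicationSet with the cluster generator. A minimal sketch, assuming the ApplicationSet controller is enabled on the admin instance; the function name, repository, path, and namespace are hypothetical:

```bash
#!/usr/bin/env bash
# Sketch: print an ApplicationSet that uses the cluster generator to create
# one Application per registered spoke cluster. Selecting on the
# argocd.argoproj.io/secret-type=cluster label matches only clusters that
# have a cluster secret, so the hub's local cluster is skipped.
# Arguments: ApplicationSet name, Git repo URL, repo path, target namespace.
# Example:
#   render_spoke_appset cluster-config https://github.com/example/platform.git \
#     cluster-config openshift-config | oc apply -f -
render_spoke_appset() {
  local name="$1" repo="$2" path="$3" namespace="$4"
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: ${name}
  namespace: openshift-gitops
spec:
  generators:
  - clusters:
      selector:
        matchLabels:
          argocd.argoproj.io/secret-type: cluster
  template:
    metadata:
      name: '${name}-{{name}}'
    spec:
      project: default
      source:
        repoURL: ${repo}
        targetRevision: HEAD
        path: ${path}
      destination:
        server: '{{server}}'
        namespace: ${namespace}
EOF
}
```

The {{name}} and {{server}} placeholders are filled in by the cluster generator from each cluster secret, so adding a new spoke cluster secret is enough to roll the same configuration out to it.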

Next steps

Your admin Argo CD instance should now be up and running! In the next installment, How to configure and manage Argo CD instances, we'll move on to configuring a tenant-level instance, creating namespaces, and deploying applications.

Last updated: December 10, 2025