As high-quality human text reaches its limits, synthetic data generation has become a core technique for scaling and refining large language models (LLMs). By leveraging one model to produce training examples, instructions, and evaluations for another, teams can expand datasets, target domain-specific gaps, and improve controllability without relying on scarce or sensitive human data. With advances in open source LLMs, fast inference frameworks like vLLM, and reproducible generation pipelines, synthetic data is now a practical foundation for modern model development.

This is where SDG Hub comes in: a flexible open source toolkit designed to simplify, scale, and customize synthetic data workflows for efficient model training and evaluation.

What is SDG Hub?

Synthetic Data Generation Hub (SDG Hub) is an open source framework that simplifies and standardizes the process of creating synthetic data. It is built to be modular and scalable, allowing users to design and orchestrate complex pipelines for data generation and processing.

With SDG Hub, users can mix and match LLM-based components with traditional data processing tools. It supports YAML-based orchestration, schema discovery, input validation, asynchronous execution, and monitoring.

Rather than relying on custom scripts for each project, SDG Hub provides a consistent framework that encourages reuse, reproducibility, and easy integration into larger workflows.

LLM customization workflow

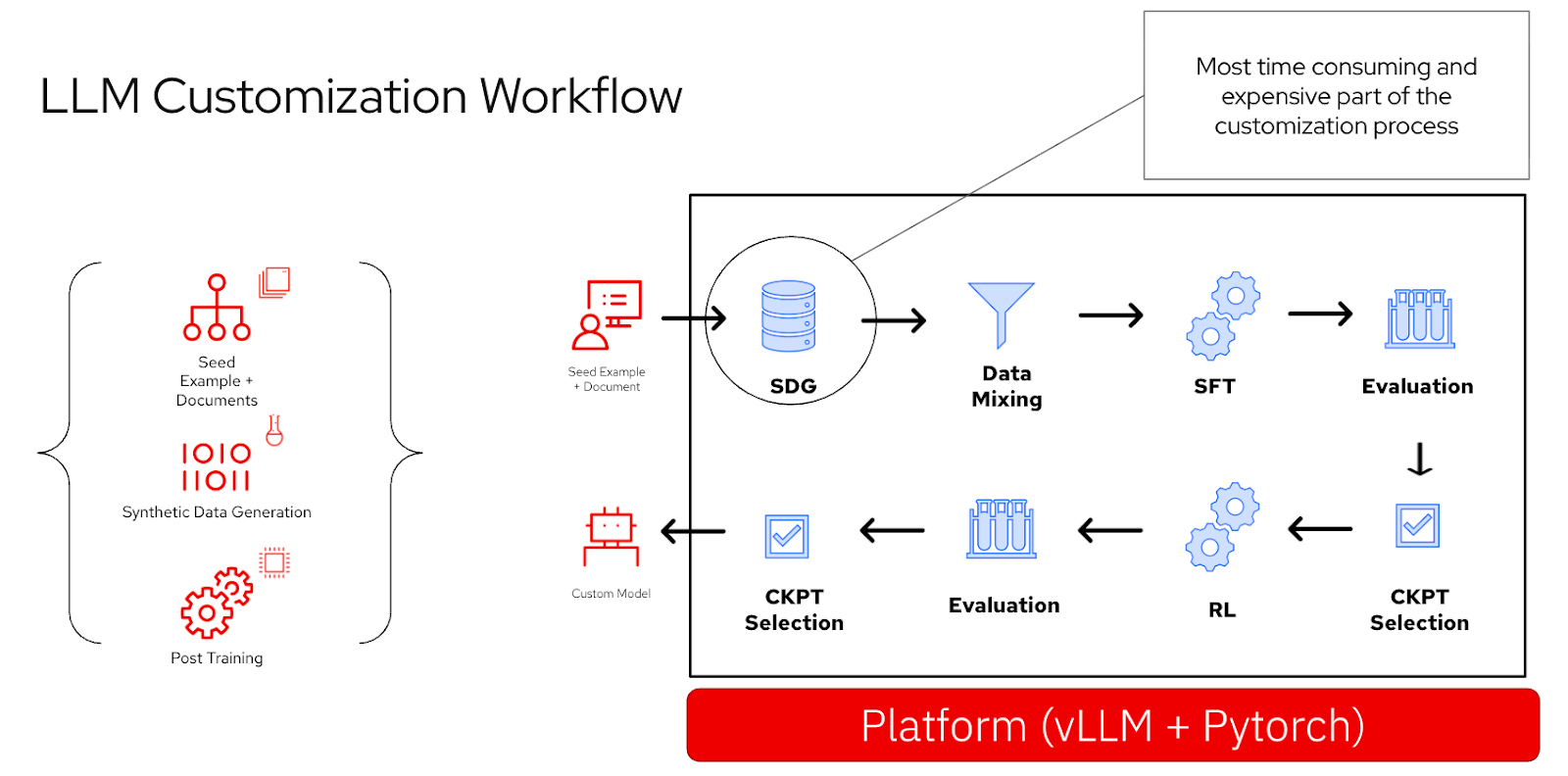

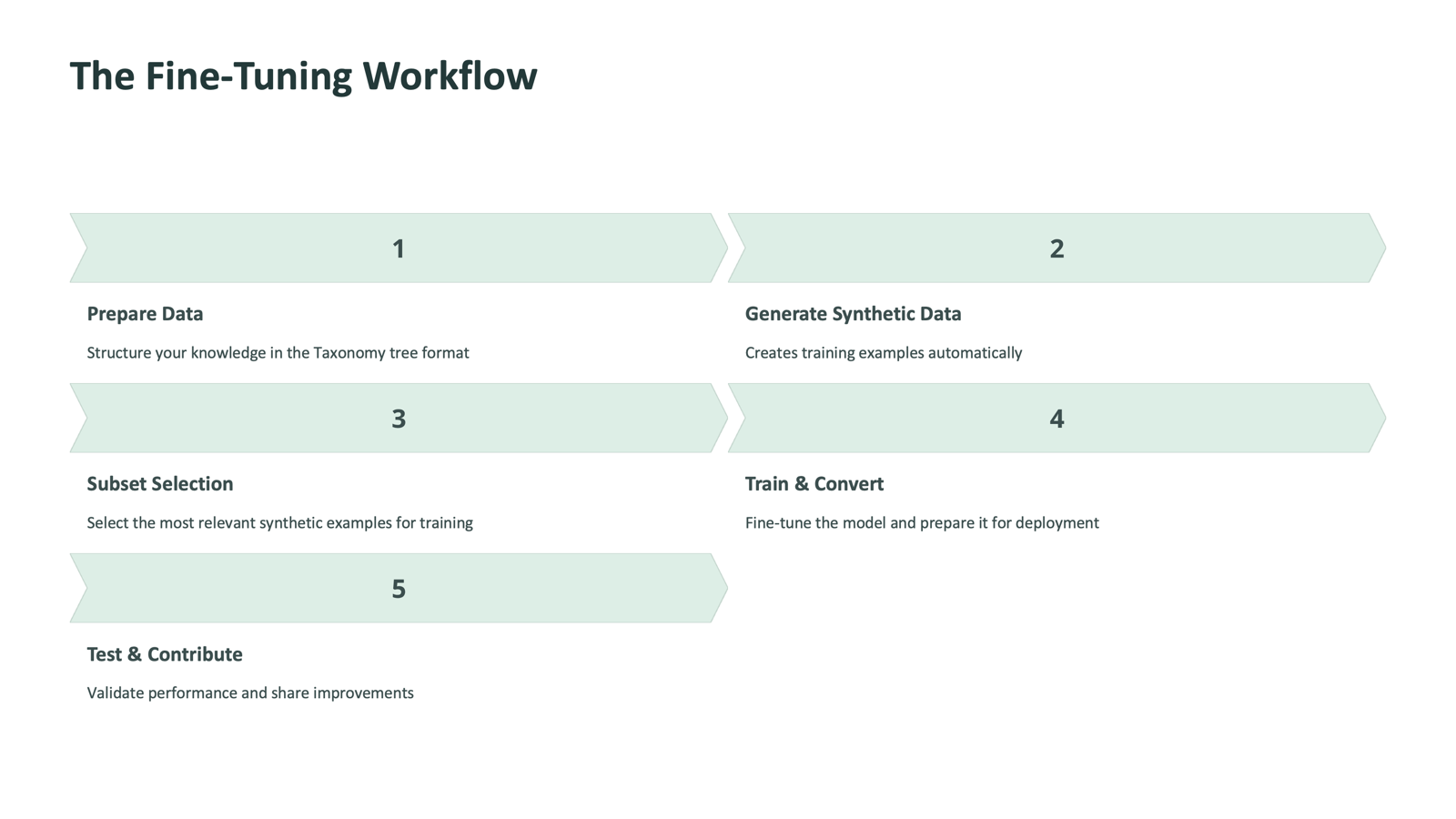

To understand how synthetic data fits into the development process, consider the LLM customization workflow shown in Figure 1.

The process begins with generating synthetic data, then mixing and filtering it. The model then goes through supervised fine-tuning (SFT) followed by reinforcement learning (RL) before producing a domain-tuned model ready for deployment.

Among these steps, the most time-consuming is synthetic data generation. SDG Hub was built specifically to make this step more efficient.

How SDG Hub works

SDG Hub is organized around two core concepts: blocks and flows. These abstractions make it easier to build, connect, and scale different components of a synthetic data pipeline.

Blocks: The building units

Blocks are the smallest functional units of SDG Hub. Each block performs a specific transformation on data, such as generating examples, parsing outputs, or structuring responses. Every block follows a consistent pattern: take input, process it, and return output.

This standardization makes blocks composable. They can be reused across different projects, swapped for alternatives, or combined to create sophisticated workflows.
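The "take input, process it, return output" contract can be illustrated without SDG Hub at all. The sketch below is a hypothetical, dependency-free stand-in (the `TagParserBlock` class and its `generate` method are illustrative names, not SDG Hub's API). It declares the column it reads and the column it writes, and it maps a list of row dictionaries to a new list without mutating its input. It loosely mirrors what a tag-based parser such as `TextParserBlock` does in the YAML flow later in this article.

```python
from dataclasses import dataclass


@dataclass
class TagParserBlock:
    """Hypothetical block: copies the text between two tags into a new column."""

    input_col: str
    output_col: str
    start_tag: str
    end_tag: str

    def generate(self, rows: list[dict]) -> list[dict]:
        out = []
        for row in rows:
            text = row[self.input_col]
            start = text.find(self.start_tag)
            end = -1
            if start != -1:
                start += len(self.start_tag)
                end = text.find(self.end_tag, start)
            # Leave the output empty when the tags are missing instead of failing the row.
            parsed = text[start:end].strip() if end != -1 else ""
            # Build a new row so the input data is never mutated in place.
            out.append({**row, self.output_col: parsed})
        return out


parser = TagParserBlock("raw_summary", "summary", "[SUMMARY]", "[/SUMMARY]")
rows = [{"raw_summary": "Notes... [SUMMARY] Revenue grew. [/SUMMARY]"}]
print(parser.generate(rows)[0]["summary"])  # Revenue grew.
```

Because every block shares one interface, a block like this can be swapped for an alternative or chained with others without changing the code around it.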

```python
from sdg_hub.core.blocks import LLMChatBlock, JSONStructureBlock

# Create block instances with their configuration
chat_block = LLMChatBlock(
    block_name="question_answerer",
    model="openai/gpt-4o",
    input_cols=["question"],
    output_cols=["answer"],
    prompt_template="Answer this question: {question}",
)

structure_block = JSONStructureBlock(
    block_name="json_structurer",
    input_cols=["summary", "entities", "sentiment"],
    output_cols=["structured_analysis"],
    ensure_json_serializable=True,
)
```

Flows: Chaining blocks into pipelines

Flows are the orchestration layer that connects multiple blocks into a working system. They determine how data moves through the pipeline, when components run, and how results are validated.
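To make the orchestration idea concrete, here is a hypothetical plain-Python runner (not SDG Hub's `Flow` class; `Step`, `validate`, and `run` are illustrative names). Each step declares the columns it reads and the column it writes. The runner checks the whole chain before executing anything, so a missing column is caught up front instead of after an expensive model call, and then applies the steps in order. The second step is a stand-in for an LLM so the sketch runs offline.

```python
from dataclasses import dataclass
from typing import Callable

Row = dict[str, str]


@dataclass
class Step:
    """Hypothetical pipeline step: reads `inputs`, writes `output`."""

    name: str
    inputs: list[str]
    output: str
    fn: Callable[..., str]


def validate(steps: list[Step], columns: set[str]) -> None:
    """Fail fast if a step needs a column that neither the input nor an earlier step provides."""
    available = set(columns)
    for step in steps:
        missing = [c for c in step.inputs if c not in available]
        if missing:
            raise ValueError(f"step {step.name!r} is missing columns {missing}")
        available.add(step.output)


def run(steps: list[Step], rows: list[Row]) -> list[Row]:
    """Validate the whole chain, then apply each step to every row in order."""
    if not rows:
        return rows
    validate(steps, set(rows[0]))
    for step in steps:
        rows = [{**r, step.output: step.fn(*(r[c] for c in step.inputs))} for r in rows]
    return rows


steps = [
    Step("build_prompt", ["text"], "prompt", lambda t: f"Summarize: {t}"),
    # Stand-in for an LLM call so the sketch runs without a model server.
    Step("fake_llm", ["prompt"], "summary", lambda p: p.upper()),
]
print(run(steps, [{"text": "q2 revenue rose"}])[0]["summary"])  # SUMMARIZE: Q2 REVENUE ROSE
```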

Flows can be defined in Python or YAML, offering both flexibility and readability. YAML-based flows are particularly useful because they allow for declarative definitions that include metadata, validation, and model recommendations.

Users can validate flows, debug them step by step, or execute them asynchronously at scale. Here is an example YAML flow that chains multiple blocks in sequence:

```yaml
blocks:
  # Extract Summary
  - block_type: "PromptBuilderBlock"
    block_config:
      block_name: "build_summary_prompt"
      input_cols:
        - "text"
      output_cols: "summary_prompt"
      prompt_config_path: "summarize.yaml"
  - block_type: "LLMChatBlock"
    block_config:
      block_name: "generate_summary"
      input_cols: "summary_prompt"
      output_cols: "raw_summary"
      max_tokens: 1024
      temperature: 0.3
      async_mode: true
  - block_type: "TextParserBlock"
    block_config:
      block_name: "parse_summary"
      input_cols: "raw_summary"
      output_cols: "summary"
      start_tags:
        - "[SUMMARY]"
      end_tags:
        - "[/SUMMARY]"
```

With these fundamentals in place, creating a custom flow requires only a few clear steps.

Creating your own flow

Creating a custom SDG Hub flow takes just a few steps:

- Explore the built-in library of available blocks.

- Write any new blocks needed for your domain or data type.

- Define a flow in YAML or Python, chaining the blocks together.

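- Run your new pipeline with `flow.generate()`, the same call used for built-in flows.

For step 2, a custom block subclasses `BaseBlock`, registers itself with `BlockRegistry`, and implements `generate`: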

```python
from typing import Any

from datasets import Dataset

from sdg_hub.core.blocks.base import BaseBlock
from sdg_hub.core.blocks.registry import BlockRegistry


@BlockRegistry.register(
    "MyCustomBlock",  # Block name for discovery
    "custom",  # Category
    "Description of my block",  # Description
)
class MyCustomBlock(BaseBlock):
    """Custom block that performs specific processing."""

    def generate(self, samples: Dataset, **kwargs: Any) -> Dataset:
        """Implement your custom processing logic here."""
        # TODO: Add custom block generate logic here.
        # Until then, pass the samples through unchanged so the block is valid.
        return samples
```

Once the fundamentals of SDG Hub are in place, the next step is applying it to real-world enterprise data.

Customizing LLMs for domain expertise

The real power of this toolkit comes when we use it to solve problems that require domain-specific expertise.

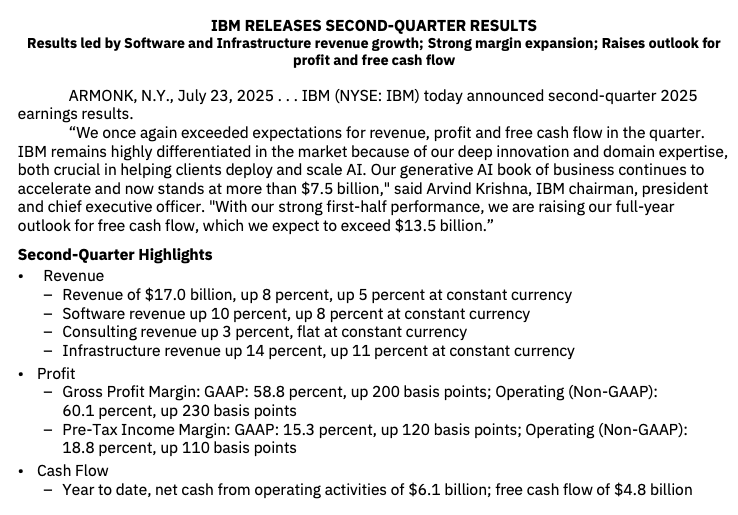

Imagine building a finance assistant for IBM. The goal is to train a model to interpret IBM's quarterly reports, as shown in Figure 2, and answer questions such as:

“Name two business lines that showed year-over-year revenue growth but turned negative at constant currency in Q2 2025, and report both percentage changes.”

A base model will not have this knowledge, since it was trained before these financials existed. Even retrieval-augmented generation may struggle to combine the right information for a multi-step query.

By generating synthetic question-and-answer pairs from IBM's reports, we can fine-tune a model to respond accurately to this type of query.

From raw documents to domain-tuned models

Turning enterprise documents into training-ready data is a three-step process: processing the raw content, generating synthetic examples, and preparing the model for fine-tuning. Each step builds on the last to ensure that data moves smoothly from unstructured formats to structured, domain-specific examples that can be used to train or adapt a large language model.

Step 1: Process the documents

Enterprise data often exists in formats that are difficult for LLMs to interpret, such as PDFs, Word documents, or scanned reports. These formats contain valuable information but lack the structure needed for downstream processing.

Docling provides a simple solution. It converts raw enterprise documents into structured Markdown that is consistent and easy for models to consume. Install it with a single command, `pip install docling`, and your documents can be transformed into clean, machine-readable text that is ready for synthetic data generation.
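A minimal sketch of this step, assuming Docling is installed: `DocumentConverter().convert()` parses a file, and `export_to_markdown()` returns its Markdown text. The `split_by_heading` helper is hypothetical and not part of Docling or SDG Hub. It splits the Markdown at headings so that each section can become one input row for generation. The file name and the `document` column name in the usage comment are placeholders; check which input columns your chosen flow actually expects.

```python
import re


def pdf_to_markdown(path: str) -> str:
    """Convert a PDF, DOCX, or similar file to Markdown with Docling."""
    # Imported lazily so the chunking helper below works without Docling installed.
    from docling.document_converter import DocumentConverter

    result = DocumentConverter().convert(path)
    return result.document.export_to_markdown()


def split_by_heading(markdown: str, min_chars: int = 1) -> list[str]:
    """Hypothetical helper: split Markdown into chunks that each start at a heading."""
    # Zero-width split just before every line that starts with 1-6 '#' and a space.
    parts = re.split(r"(?m)^(?=#{1,6} )", markdown)
    # Drop empty or very short fragments.
    return [p.strip() for p in parts if len(p.strip()) >= min_chars]


# Example usage (requires `pip install docling` and a real file):
# md = pdf_to_markdown("quarterly_report.pdf")
# rows = [{"document": chunk} for chunk in split_by_heading(md, min_chars=200)]
```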

Step 2: Generate synthetic data using SDG Hub

Once the documents have been processed, the next step is to generate synthetic data using SDG Hub. The workflow is designed to be intuitive and consists of three parts: discovering available flows, configuring a teacher model, and generating structured outputs.

The first step is to explore the available flows in SDG Hub, as shown in the following code snippet. These are prebuilt pipelines for common data generation tasks. For example, there are ready-made templates that can generate question-and-answer pairs directly from processed documents. These flows act as blueprints for fast, reliable setup.

```python
from sdg_hub import FlowRegistry, Flow

# Auto-discover all available flows
FlowRegistry.discover_flows()

# Every flow gets a deterministic ID
flow_id = "small-rock-799"

# Use the ID to look up the flow's YAML file and load it
flow_path = FlowRegistry.get_flow_path(flow_id)
flow = Flow.from_yaml(flow_path)
```

Next, configure your teacher model, as shown in the following code block. This model is the engine that drives data creation. You can use an open source model such as Llama 3, or a hosted model like GPT, depending on your setup and requirements. The teacher model is responsible for producing the high-quality examples that will later be used to fine-tune your target model.

```python
# Discover recommended models
default_model = flow.get_default_model()

# Configure model settings at runtime
flow.set_model_config(
    model=f"hosted_vllm/{default_model}",
    api_base="http://localhost:8000/v1",
    api_key="your_key",
)
```

Finally, call the generate function on your dataset. SDG Hub takes care of the orchestration process, runs the teacher model, structures the output, and produces clean, validated data that can be used immediately for training.

```python
# Generate high-quality QA pairs.
# `dataset` holds the processed document text in the input columns the flow expects.
result = flow.generate(dataset)
```

Behind the scenes, SDG Hub manages validation, schema alignment, and monitoring, ensuring that each run produces consistent and scalable results. This workflow allows data engineers and researchers to go from raw text to training-ready examples with just a few simple steps.

Data subset selection for efficient training

After generating synthetic data, the next step is to identify the most useful portion for training. Using all available data can be inefficient since many samples are redundant or add limited value. Selecting only the most informative subsets makes training faster and more cost-effective while maintaining model quality.

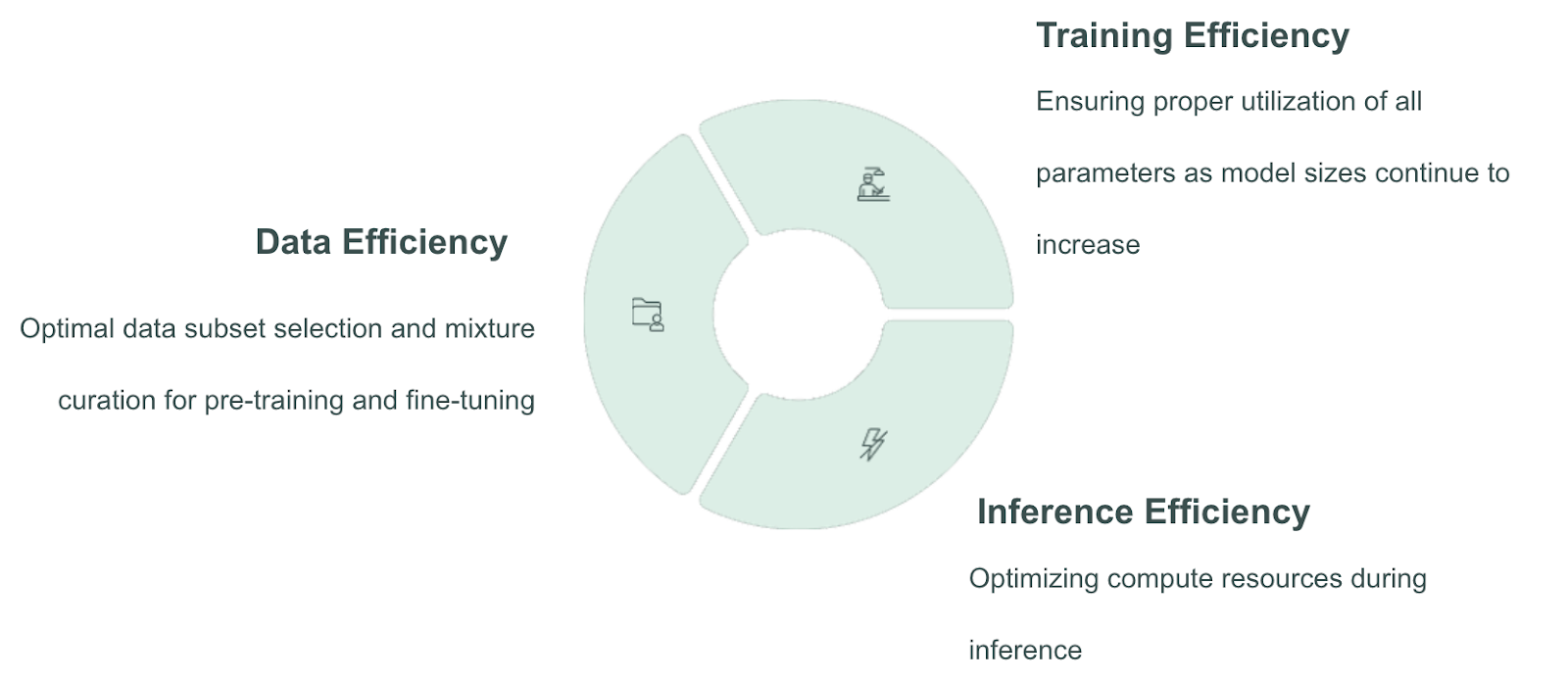

This work, done in collaboration with IBM Research, explores how to improve efficiency across three dimensions: data, training, and inference. As shown in Figure 3, focusing on data efficiency by selecting the right subset before training often provides the greatest benefits in both speed and compute savings.

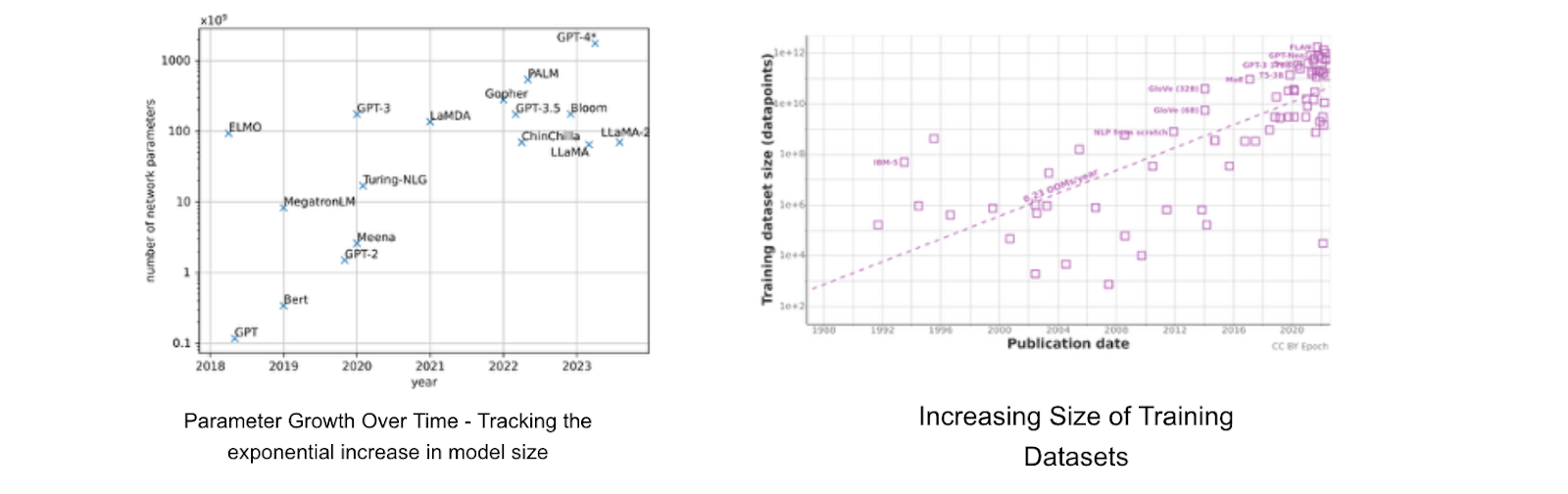

Scaling LLMs: A quest for peak performance

Large language models continue to grow: both model sizes and the amount of data used to train them have increased dramatically. Figure 4 shows how parameter counts and dataset volumes have expanded side by side. This exponential scaling creates two major challenges.

First, larger datasets require far more compute power to process. Pretraining or fine-tuning models with billions of parameters becomes increasingly expensive. Second, as datasets grow, they often include redundant examples that add little value but consume significant resources.

Improving training efficiency requires identifying and removing that redundancy. By selecting only the most informative examples, we can achieve the same or better model performance while significantly reducing cost and compute time.

As models and datasets expand, redundant data and escalating compute requirements make intelligent subset selection critical.

Use cases for data subset selection

Data subset selection provides a structured way to improve model efficiency and relevance. There are three main use cases where it delivers the most value.

Use case 1: Enhancing efficiency with data subset selection

Data subset selection can make the training recipe more efficient. If you can train a large language model on only 10 percent of your training data, training can run roughly ten times faster and use correspondingly less compute. The goal is to reduce the amount of data while maintaining the same level of model performance after training on the selected subset.
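The "roughly ten times" figure follows from a widely used rule of thumb (an approximation from the scaling-law literature, not a measurement from this work): training a dense transformer with $N$ parameters on $D$ tokens takes about $6ND$ floating-point operations, so for a fixed model, compute scales linearly with the amount of data.

$$
C \approx 6ND
\qquad\Longrightarrow\qquad
\frac{C_{\text{full}}}{C_{\text{subset}}} \approx \frac{6N \cdot D}{6N \cdot 0.1\,D} = 10
$$

The realized speedup is usually somewhat lower, since embedding the data and running the selection algorithm add their own cost.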

Use case 2: Targeted data subset selection

Subset selection can help you turn a general-purpose large language model into a domain expert. You choose the portion of data that is most relevant to your domain or to the task you care about, and use that subset to fine-tune the model so it is tailored to the deployment environment that matters most.
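One simple way to implement targeted selection, shown here as an illustrative sketch rather than the method used in this work, is to embed a handful of examples from the target domain, average them into a centroid, and keep the candidates whose embeddings are most similar to that centroid. The function names and the toy 2-D vectors are hypothetical; real embeddings come from an embedding model and have hundreds of dimensions.

```python
import numpy as np


def _normalize(x: np.ndarray) -> np.ndarray:
    """Scale vectors to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def select_targeted(candidates: np.ndarray, domain_examples: np.ndarray, k: int) -> np.ndarray:
    """Return indices of the k candidates most similar to the domain centroid."""
    centroid = _normalize(_normalize(domain_examples).mean(axis=0))
    scores = _normalize(candidates) @ centroid  # one cosine score per candidate
    return np.argsort(-scores)[:k]  # highest similarity first


candidates = np.array([[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]])
finance_examples = np.array([[1.0, 0.05], [0.95, 0.0]])
print(select_targeted(candidates, finance_examples, k=2).tolist())  # [0, 2]
```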

Use case 3: Complementary data selection

Subset selection can be used to choose a dataset that complements the data you already have, adding novel information when you continue training your model. It involves identifying data that fills knowledge gaps or introduces examples that were not represented in the original training corpus. It can also be used to exclude data that may contain bias or harmful content.
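A complementary-selection heuristic can be sketched under our own assumptions (this is not the article's method, and `select_complementary` and its `max_sim` threshold are hypothetical): score each candidate by its highest cosine similarity to anything already in the training set, keep only candidates below a novelty threshold, and order them from most to least novel.

```python
import numpy as np


def _normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def select_complementary(candidates: np.ndarray, existing: np.ndarray, max_sim: float) -> list[int]:
    """Return indices of candidates whose nearest existing example has cosine < max_sim,
    ordered from most novel to least novel."""
    # sims[i, j] = cosine similarity between candidate i and existing example j
    sims = _normalize(candidates) @ _normalize(existing).T
    nearest = sims.max(axis=1)  # how close each candidate is to the current data
    order = np.argsort(nearest)  # lowest similarity (most novel) first
    return [int(i) for i in order if nearest[i] < max_sim]


existing = np.array([[1.0, 0.0], [0.9, 0.1]])
candidates = np.array([[0.95, 0.05], [0.0, 1.0], [0.5, 0.5]])
print(select_complementary(candidates, existing, max_sim=0.9))  # [1, 2]
```

Candidate 0 is nearly identical to existing data, so it is dropped; the two candidates pointing in new directions are kept.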

So far, we have covered why data subset selection is important and the three key use cases that guide when to apply it. Next, we describe how to select informative subsets using a practical optimization approach.

How to select informative data subsets

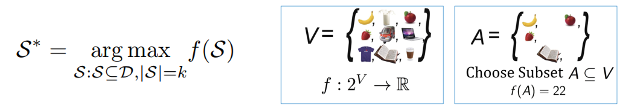

Selecting the right data for training is not guesswork. It can be framed as a formal optimization problem, shown in Figure 5: select the subset of data points that maximizes an objective function, or utility, which measures how informative or representative the subset is as a whole.

Instead of manually filtering data, this approach provides a quantitative way to select the best examples while keeping the subset within practical limits, such as a maximum dataset size or available compute budget.
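Written out, the problem takes roughly the following form. The notation is ours and may differ from Figure 5: $V$ is the full dataset, $S \subseteq V$ the selected subset, $f$ the utility function, and $k$ the maximum subset size. A compute budget can be expressed instead with a per-example cost $c_i$ and a total budget $B$.

$$
S^{\star} = \arg\max_{S \subseteq V} f(S)
\quad \text{subject to} \quad |S| \le k
\quad \Big(\text{or } \textstyle\sum_{i \in S} c_i \le B\Big)
$$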

Solving this optimization exactly is computationally infeasible for large datasets, because the number of candidate subsets grows combinatorially with the dataset size. To address this, researchers use submodular optimization, which offers an efficient, theoretically sound way to approximate the best subset.

Submodular optimization framework

Submodular functions (Figure 6) have a diminishing-returns property: adding a new data point provides less incremental benefit as the subset grows. This property enables greedy algorithms to find high-quality subsets efficiently.
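Formally, a set function $f$ over the dataset $V$ is submodular when adding an element $v$ to a smaller set $A$ helps at least as much as adding it to a larger set $B$ that contains $A$. For functions that are also nonnegative and monotone (adding elements never lowers $f$), the classic result of Nemhauser, Wolsey, and Fisher (1978) shows that greedily adding the element with the largest marginal gain, $k$ times, yields a subset worth at least a $(1 - 1/e) \approx 63\%$ fraction of the best possible size-$k$ subset.

$$
f(A \cup \{v\}) - f(A) \;\ge\; f(B \cup \{v\}) - f(B)
\qquad \text{for all } A \subseteq B \subseteq V,\; v \in V \setminus B
$$

$$
f(S_{\text{greedy}}) \;\ge\; \left(1 - \tfrac{1}{e}\right) \max_{S \subseteq V,\; |S| \le k} f(S)
$$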

By optimizing a submodular function, we can select diverse, representative examples that collectively cover the entire dataset while avoiding redundancy. This approach balances efficiency and accuracy, making it practical for large-scale model training.

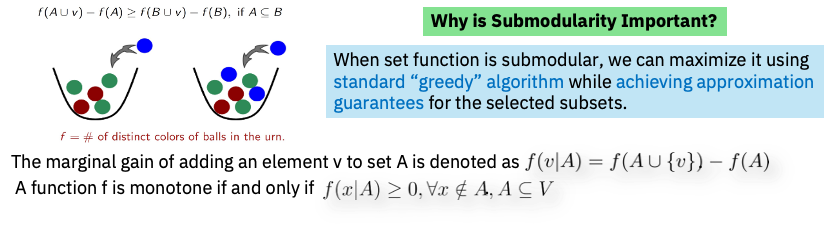

Embedding and similarity computation

Once the optimization framework is established, the next step is to represent each training example numerically. Each data point is converted into an embedding vector, which captures its semantic meaning in a high-dimensional space. Pairwise similarity between samples can then be computed, as shown in Figure 7.

This similarity information allows the optimization algorithm to identify which samples are redundant and which provide unique information. The result is a subset of examples that collectively reflect the diversity of the full dataset.
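A minimal sketch of the similarity step, assuming the embeddings have already been produced by some embedding model and stacked into a NumPy array with one row per example. Normalizing each row to unit length makes the dot product equal to cosine similarity, so the whole pairwise matrix comes from a single matrix multiplication. The rescaling to $[0, 1]$ is our own choice, made because facility-location objectives assume non-negative similarities.

```python
import numpy as np


def similarity_matrix(embeddings: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity, rescaled from [-1, 1] to [0, 1]."""
    unit = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    cosine = unit @ unit.T  # (n, n) matrix; cosine[i, j] compares examples i and j
    # Shift and scale so every entry is non-negative.
    return (cosine + 1.0) / 2.0


emb = np.array([[1.0, 0.0], [0.0, 2.0], [-3.0, 0.0]])
S = similarity_matrix(emb)
# Vector length does not matter; opposite directions map to 0, orthogonal ones to 0.5.
```

A dense $n \times n$ matrix grows quadratically with dataset size, so very large datasets are typically handled with sparse nearest-neighbor graphs or by selecting within partitions.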

Facility-location optimization

The final step is to apply a facility-location function, which is a specific form of submodular function. Maximizing it favors a subset that represents the entire dataset by covering all regions of the embedding space.

Intuitively, each chosen data point acts like a “facility” serving similar examples nearby. A subset scores highly only when every example has a close selected neighbor, so maximizing this function keeps coverage of the dataset comprehensive while eliminating repetitive samples. See Figure 8.
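The facility-location function scores a subset $S$ by how well each point $i$ is served by its most similar selected point: $f(S) = \sum_{i \in V} \max_{j \in S} s_{ij}$. Below is a compact greedy maximizer, sketched under our own assumptions (a dense, non-negative similarity matrix `sim` and a simple size budget `k`); it illustrates the technique and is not the implementation used in the experiments. It tracks, for every point, its best similarity to the current subset, so all marginal gains come from one vectorized pass per step.

```python
import numpy as np


def facility_location_greedy(sim: np.ndarray, k: int) -> list[int]:
    """Greedily pick k indices maximizing f(S) = sum_i max_{j in S} sim[i, j].

    Assumes `sim` is an (n, n) non-negative similarity matrix.
    """
    n = sim.shape[0]
    coverage = np.zeros(n)  # coverage[i] = best similarity of point i to the chosen set
    selected: list[int] = []
    for _ in range(min(k, n)):
        # gains[j] = f(S + {j}) - f(S) for every candidate column j
        gains = np.maximum(sim, coverage[:, None]).sum(axis=0) - coverage.sum()
        gains[selected] = -np.inf  # never pick the same point twice
        best = int(np.argmax(gains))
        selected.append(best)
        coverage = np.maximum(coverage, sim[:, best])
    return selected


# Toy example: points 0-2 form one cluster, points 3-5 another.
sim = np.array([
    [1.0, 0.9, 0.8, 0.1, 0.1, 0.1],
    [0.9, 1.0, 0.9, 0.1, 0.1, 0.1],
    [0.8, 0.9, 1.0, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 1.0, 0.8, 0.7],
    [0.1, 0.1, 0.1, 0.8, 1.0, 0.8],
    [0.1, 0.1, 0.1, 0.7, 0.8, 1.0],
])
print(facility_location_greedy(sim, k=2))  # [1, 4]: one central point per cluster
```

After picking point 1, adding another point from the same cluster barely raises coverage, so the second pick jumps to the other cluster: the diminishing-returns behavior in action.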

Experimental results

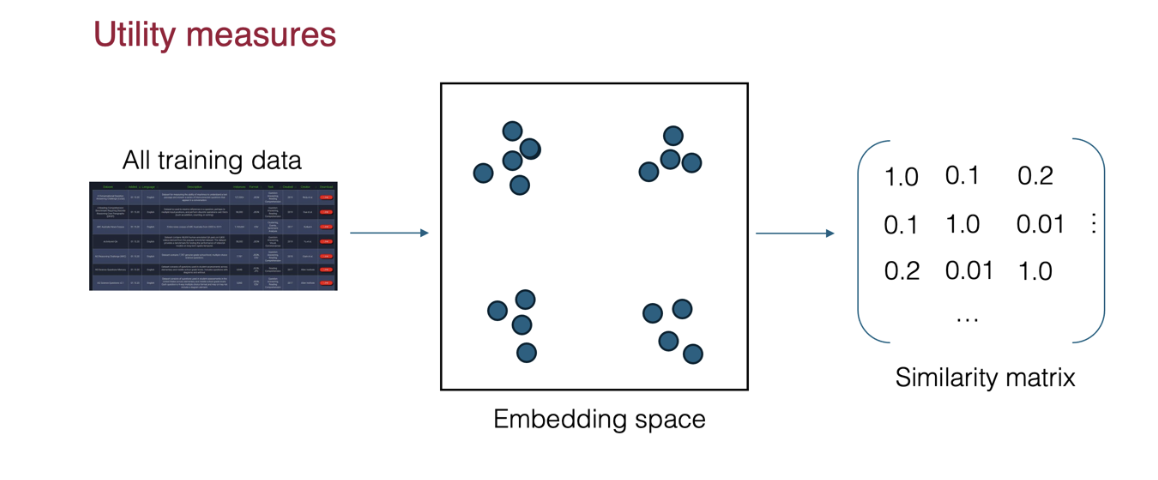

To evaluate the effectiveness of data subset selection, researchers applied this approach using InstructLab, an open source framework for large language model training and evaluation.

In this experiment, only 50 percent of the available training data was selected using the submodular optimization method described earlier. The reduced dataset was then used to fine-tune the model and tested on two standard benchmarks: MMLU (Massive Multitask Language Understanding) and MT-Bench.

As shown in Figure 9, the model trained on the optimized subset achieved nearly the same level of accuracy as the one trained on the full dataset. However, it required only half the training data, resulting in a twofold improvement in training speed. This demonstrates that by selecting the most representative and diverse examples, teams can maintain model quality while significantly lowering compute costs and time.

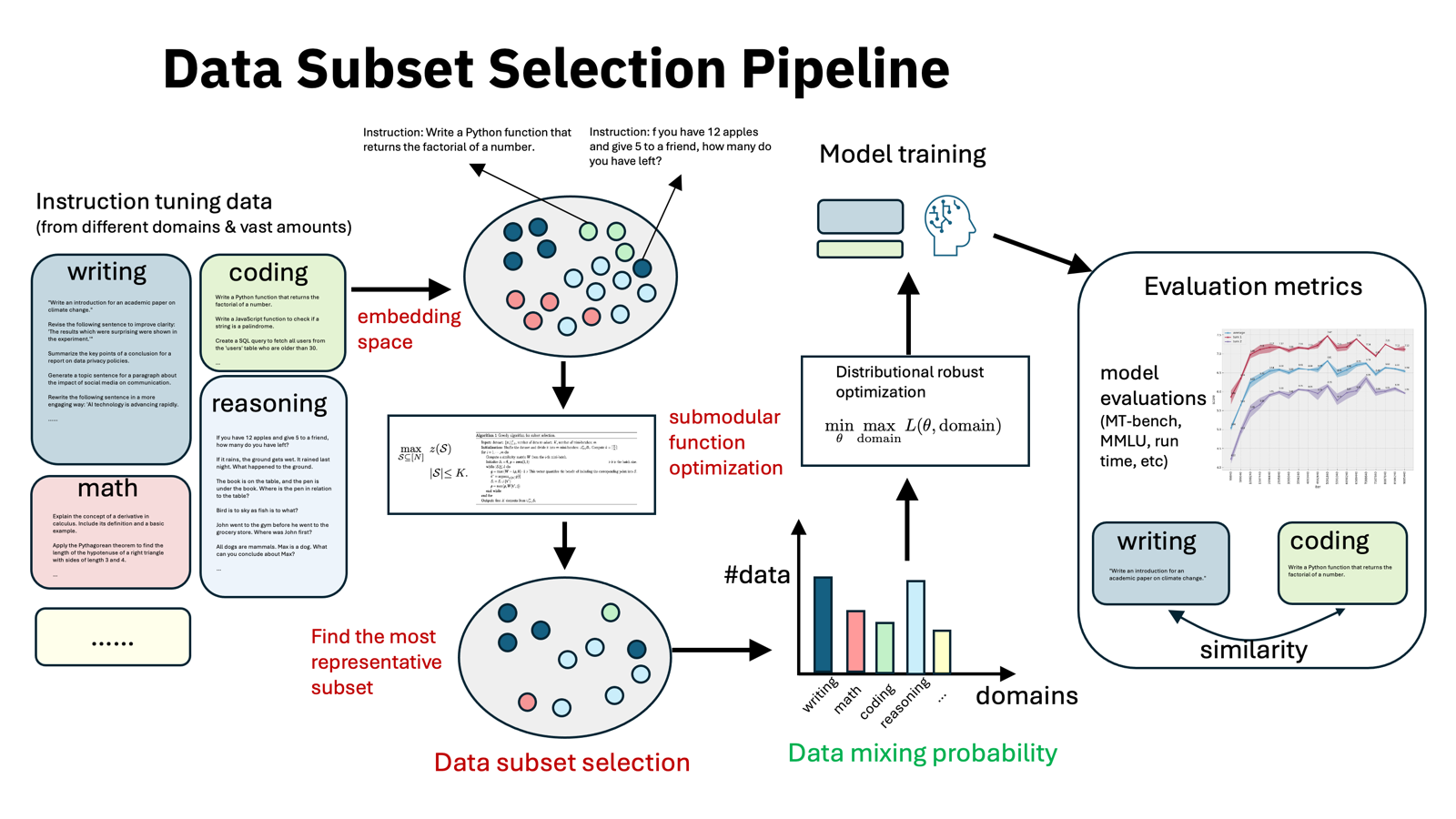

The full training pipeline

The combined process of generating, selecting, and training is summarized in Figure 10. SDG Hub produces synthetic data, subset selection identifies the most useful samples, and the refined dataset is used to fine-tune the model.

Visualizing the workflow

The complete synthetic data pipeline is illustrated in Figure 11. This visualization shows how the full process fits together, from data generation to fine-tuning and evaluation.

The workflow begins with SDG Hub, which generates instruction-tuning data from multiple domains. Each data point produced by the hub is then mapped into an embedding space using an embedding function. This step enables the algorithm to measure similarity and diversity among samples.

Next, the data subset selection algorithm runs by optimizing the submodular function described earlier. The output is a carefully chosen subset of data that is both representative and diverse.

After subset selection, the resulting dataset is divided into domain-specific partitions. From each domain, samples are drawn for training the target model. These domain-specific subsets are then used to fine-tune any open source large language model using the established training recipe.
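The article does not specify how many samples are drawn from each domain. One simple assumption, sketched below with a hypothetical `allocate_budget` helper, is to split a total budget across domains in proportion to their sizes, using largest-remainder rounding so the per-domain counts add up exactly to the budget. Each domain's share could then be filled by running subset selection within that domain.

```python
from collections import Counter


def allocate_budget(domains: list[str], budget: int) -> dict[str, int]:
    """Split `budget` across domains in proportion to their sample counts.

    Uses largest-remainder rounding so the allocations sum exactly to the
    budget (capped at the number of available samples).
    """
    counts = Counter(domains)
    total = sum(counts.values())
    budget = min(budget, total)
    if budget == 0:  # also covers an empty input
        return {d: 0 for d in counts}
    exact = {d: budget * c / total for d, c in counts.items()}
    alloc = {d: int(x) for d, x in exact.items()}  # floor of each share
    leftover = budget - sum(alloc.values())
    # Give the remaining slots to the domains with the largest fractional parts.
    for d in sorted(exact, key=lambda d: exact[d] - alloc[d], reverse=True)[:leftover]:
        alloc[d] += 1
    return alloc


labels = ["finance"] * 50 + ["legal"] * 30 + ["medical"] * 20
print(allocate_budget(labels, budget=12))  # {'finance': 6, 'legal': 4, 'medical': 2}
```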

Finally, the fine-tuned model is evaluated on existing benchmarks to validate performance, efficiency, and alignment improvements.

Conclusion

Synthetic data generation and data subset selection are redefining how organizations train and optimize language models. With SDG Hub, teams can generate structured, domain-specific data, integrate enterprise knowledge securely, and scale production workflows efficiently.

By coupling these capabilities with submodular data selection, organizations can cut training costs, eliminate redundancy, and focus resources on the most informative data. Together, these open source tools enable a more efficient, transparent, and customizable approach to AI development, empowering teams to build smarter, domain-aware models at scale.

Check out the SDG Hub project on GitHub.