When developers start a new application or project, they often have to learn a framework and adopt its best practices first. Wouldn’t it be easier if there were a standard template that scaffolds the basic code for you, with everything you need to get up and running quickly? In AI development, where you deploy large language models (LLMs) with your applications, it is even more important to get familiar with the basics quickly. The software templates in Red Hat Developer Hub help with this.

This article demonstrates how you can easily create and deploy applications to your image repository or a platform like Red Hat OpenShift AI.

Introduction to Red Hat Developer Hub

Red Hat Developer Hub is an internal developer platform (IDP) built on Backstage, which enables engineers, teams, and organizations to increase productivity by consolidating elements of the development process into a single portal.

Red Hat Developer Hub provides software templates that streamline the process of creating new resources, such as websites and applications, giving you the ability to load code skeletons, insert variables, and publish the template to a repository. There are many open source or third-party software templates available, but you can also create your own custom software templates to meet your business needs.

Installing Red Hat Developer Hub

You can install your instance of Red Hat Developer Hub using the OpenShift OperatorHub. Alternatively, there is an easy-to-use Red Hat Developer Hub installer at redhat-ai-dev/ai-rhdh-installer, which also installs and configures the Argo CD and Tekton plug-ins for the GitOps and Pipelines requirements of the AI software templates.

Installing Red Hat Developer Hub with the installer is as easy as executing two commands:

helm upgrade --install ai-rhdh ./chart --namespace ai-rhdh --create-namespace

bash ./configure.sh

Follow the instructions in the installer README to install your GitHub application and provide the GitHub application details during the installation.

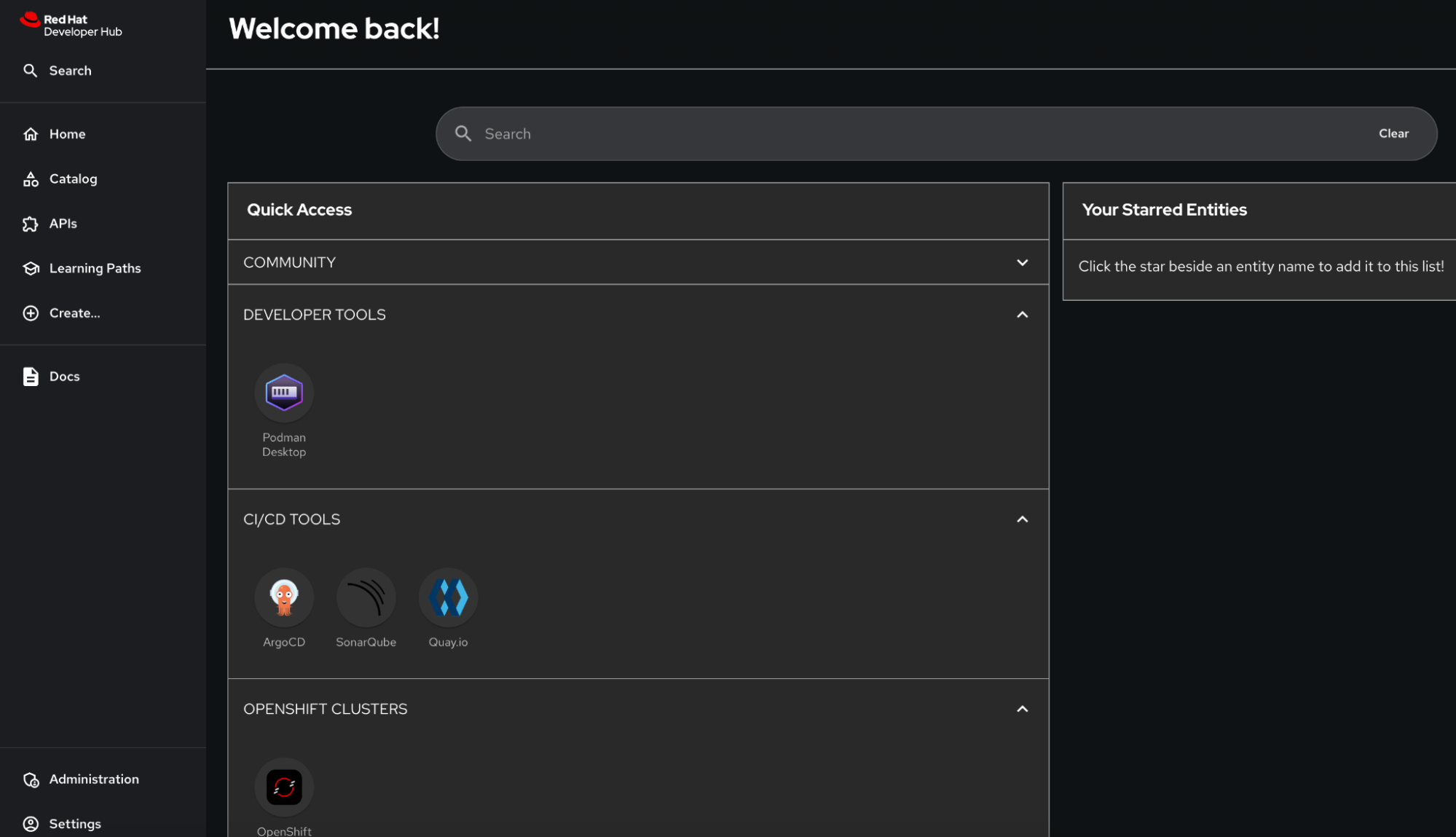

Once the Argo CD, Tekton pipelines, and Red Hat Developer Hub installations are configured, you can view the Red Hat Developer Hub portal (Figure 1) using its route URL. The Argo CD dashboard should also be accessible via its own route URL. If you already have an existing Red Hat Developer Hub and Argo CD installation, refer to the installer configuration guide for the manual steps.

Importing software templates

You can import any software templates, including our AI software templates, by clicking Create → Register Existing Component. You can use the software templates location link to import the AI software templates. The installer from the previous section already imports these templates into Red Hat Developer Hub by default.

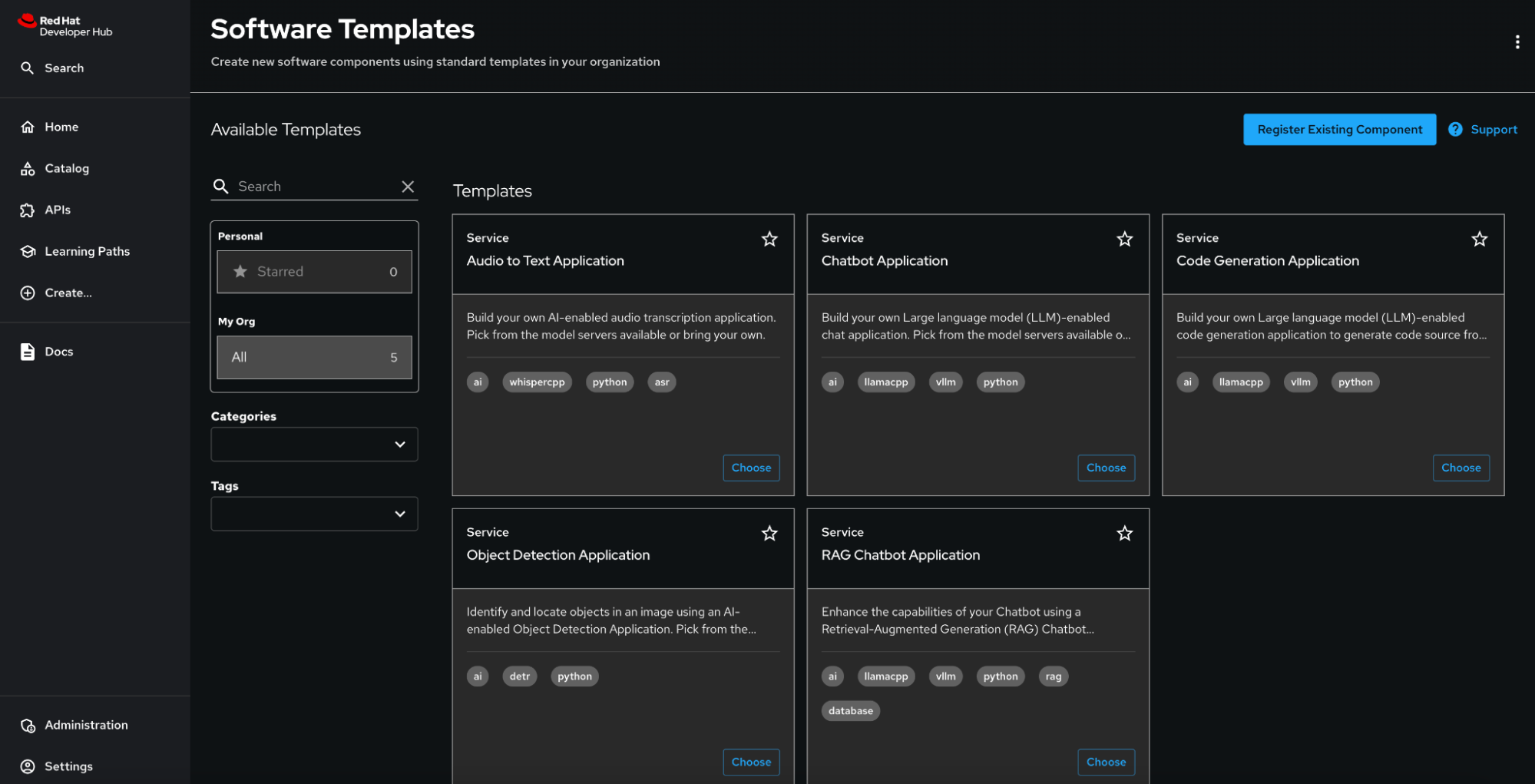

The five templates are described briefly below and shown in Figure 2:

- Audio to Text Application: An AI-enabled audio transcription application. Upload an audio file to be transcribed.

- Chatbot Application: A large language model (LLM)-enabled chat application. The bot replies with AI-generated responses.

- Code Generation Application: An LLM-enabled code generation application. This specialized bot helps with code-related queries.

- Object Detection Application: A DEtection TRansformer (DETR) model application. Upload an image to identify and locate objects in the image.

- RAG Chatbot Application: A retrieval-augmented generation (RAG) Streamlit chat application with a vector database. Upload a file containing relevant information so the chatbot can draw on it for more accurate responses.

Creating a component

Choose a template to create a component. For this example, we will select the RAG Chatbot Application. This chatbot application uses RAG to improve the accuracy of its answers by drawing on a document that you provide. Retrieval-augmented generation (RAG) is a method for getting better answers from a generative AI application by linking an LLM to an external resource.
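To make the idea concrete, here is a minimal sketch of the retrieve-then-prompt flow behind RAG. It is not the template's implementation: the template uses a vector database, whereas this sketch substitutes a simple word-overlap score for the similarity search, and the function names are hypothetical. The prompt wording mirrors the rag_app.py prompt shown later in this article.

```python
import re

# Prompt wording borrowed from the rag_app.py example later in this article.
PROMPT_TEMPLATE = (
    "Answer the question based only on the following context:\n"
    "{context}\n\n"
    "Question: {input}\n"
)


def tokenize(text: str) -> set[str]:
    """Lowercase a string and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, chunks: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k chunks that share the most words with the question.

    Word overlap is a stand-in (an assumption of this sketch) for the
    embedding similarity search that a vector database would perform.
    """
    question_words = tokenize(question)
    ranked = sorted(
        chunks,
        key=lambda chunk: len(question_words & tokenize(chunk)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, chunks: list[str]) -> str:
    """Fill the prompt template with the retrieved context and the question."""
    context = "\n".join(retrieve(question, chunks))
    return PROMPT_TEMPLATE.format(context=context, input=question)


if __name__ == "__main__":
    documents = [
        "InstructLab is a model-agnostic open source AI project that "
        "facilitates contributions to Large Language Models (LLMs).",
        "Streamlit is a Python library for building web apps.",
    ]
    # The resulting prompt is what would be sent to the LLM.
    print(build_prompt("What is InstructLab?", documents))
```

The LLM itself is unchanged in this flow; only the prompt it receives is enriched with the retrieved text.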

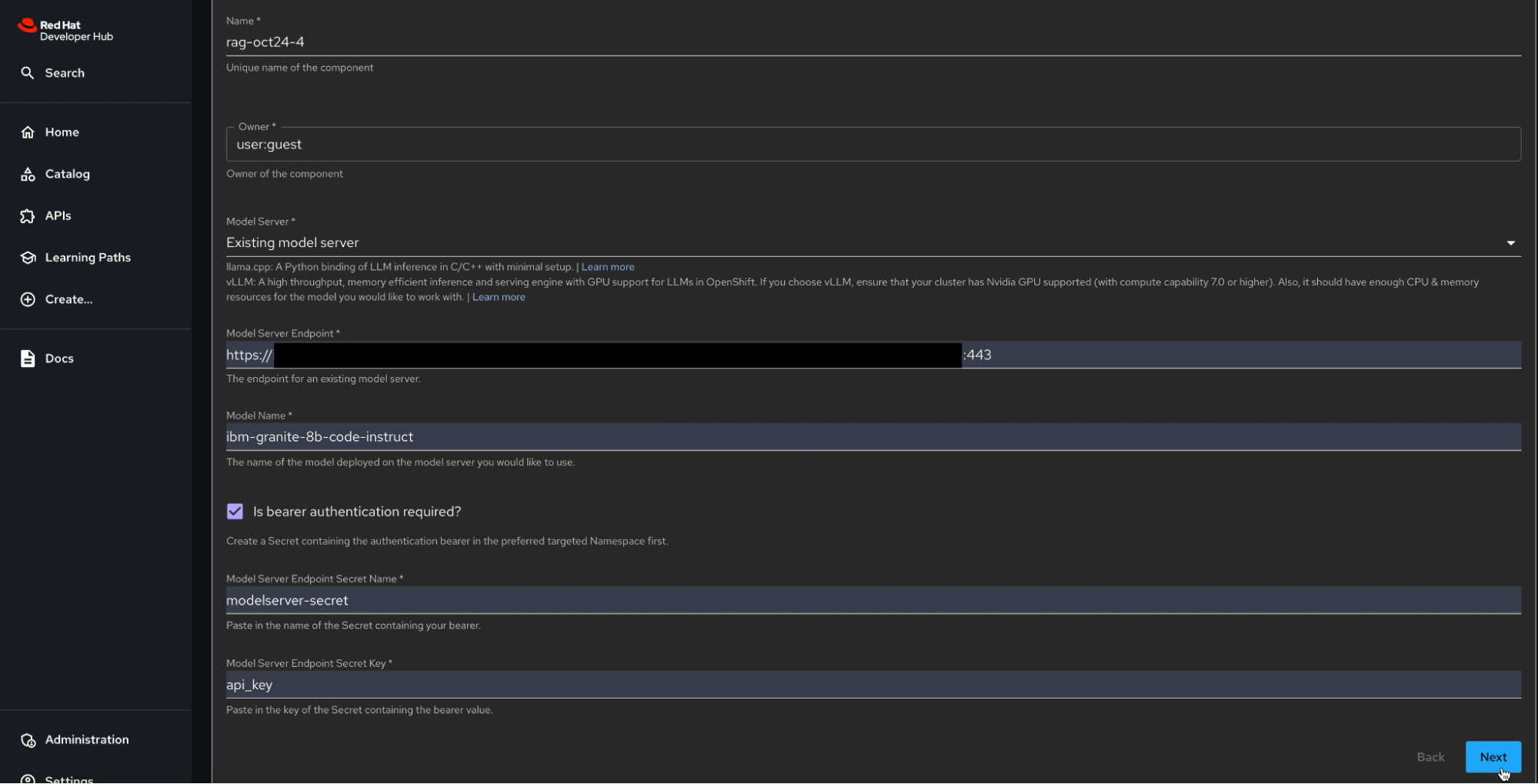

On the first page, provide the application information and select the model server with the model that comes with the template. In this case, we can choose between the predefined llama.cpp and vLLM model servers. The llama.cpp model server uses a Python binding for llama.cpp, which runs LLM inference in C/C++ with minimal setup. vLLM is a high-throughput, memory-efficient inference and serving engine for LLMs with GPU support in OpenShift. If you choose vLLM, ensure that your cluster has nodes with NVIDIA GPUs; for more information, see the NVIDIA GPU Operator on Red Hat OpenShift Container Platform documentation. If you select either of these pre-packaged model servers, skip specifying an existing model server and continue to the Application Repository Information page.

You can also provide your own model server by specifying the Model Server Endpoint and the Model Name deployed on the server. If the model server endpoint requires authentication, a Kubernetes secret with the authentication details is required.
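Before entering your own endpoint, it can help to check how the Model Server Endpoint and Model Name fit together in a request. The sketch below builds, but does not send, such a request. It assumes the server exposes the OpenAI-compatible /v1/chat/completions route (as llama.cpp's Python server and vLLM can) and accepts a bearer token; your server may differ. The endpoint, model name, and token are placeholders, and build_chat_request is a hypothetical helper.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(endpoint: str, model_name: str, question: str,
                       token: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a chat completion request.

    Assumption: the model server exposes the OpenAI-compatible
    /v1/chat/completions route and, if secured, accepts a bearer token.
    """
    body = {
        "model": model_name,
        "messages": [{"role": "user", "content": question}],
    }
    headers = {"Content-Type": "application/json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical endpoint, model name, and token; replace with your own.
    request = build_chat_request(
        "https://model-server.example.com",
        "my-model",
        "What is InstructLab?",
        token="changeme",
    )
    print(request.get_method(), request.full_url)
    # To actually send the request: urllib.request.urlopen(request)
```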

If you need to create the Kubernetes secret for the model server, create it in the same project or namespace that you will be deploying the application in; if the project or namespace does not yet exist, create it. Create a key-value Kubernetes secret in this project or namespace and provide your model server authentication details. The secret key can be a name of your choice and the credentials must be provided in the value.
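As a rough illustration, the following sketch generates a key-value Secret manifest that you could pipe to `oc apply -f -`. The secret name, namespace, key, and token value are all placeholders, and build_secret_manifest is a hypothetical helper, not part of the template.

```python
import base64
import json


def build_secret_manifest(name: str, namespace: str, key: str, value: str) -> dict:
    """Return an Opaque key-value Secret manifest as a dictionary.

    Kubernetes expects values under `data` to be base64-encoded.
    """
    encoded = base64.b64encode(value.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {key: encoded},
    }


if __name__ == "__main__":
    # All names and the token below are placeholders for illustration.
    manifest = build_secret_manifest(
        name="model-server-auth",
        namespace="rag-demo",
        key="api-token",
        value="changeme",
    )
    # JSON is accepted by `oc apply -f -` and `kubectl apply -f -`.
    print(json.dumps(manifest, indent=2))
```

In this example, you would enter model-server-auth as the secret name and api-token as the secret key in the template form.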

Specify the name of the secret you created and its key in the Model Server Endpoint Secret Name and Model Server Endpoint Secret Key fields, respectively. In the next Deployment Information stage, you will have to specify the same project or namespace. See Figure 3.

You can then provide the Application Repository Information, such as the GitHub or GitLab server, repository owner, and repository name. The AI software templates create two repositories for you: one stores the scaffolded application source along with the Tekton pipeline definitions, and the other is a GitOps repository that contains the Kubernetes manifests and resources for deploying the application, model server, and database, along with the services and other relevant configurations.

Provide the information for the GitHub or the GitLab repository for your Red Hat Developer Hub instance. The Deployment Information page requires the Image Registry, Image Organization, and an Image Name. Provide the same Deployment Namespace where you created the key-value secret earlier. This is where the Kubernetes and OpenShift resources are created.

Select Create Workbench for OpenShift AI if you want to create a workbench in Red Hat OpenShift AI for your application. Note that this requires OpenShift AI to be installed on your cluster. OpenShift AI lets you bring all your AI-enabled applications to a platform where you can scale across cloud environments and leverage AI tools like Jupyter, scikit-learn, and PyTorch. See the article Red Hat OpenShift AI installation and setup for more information.

You can also view detailed information on the component in its associated TechDocs by clicking View TechDocs from the overview tab. The TechDocs cover a variety of subjects, such as Pipelines, GitOps, and OpenShift AI.

Accessing the component in OpenShift Container Platform

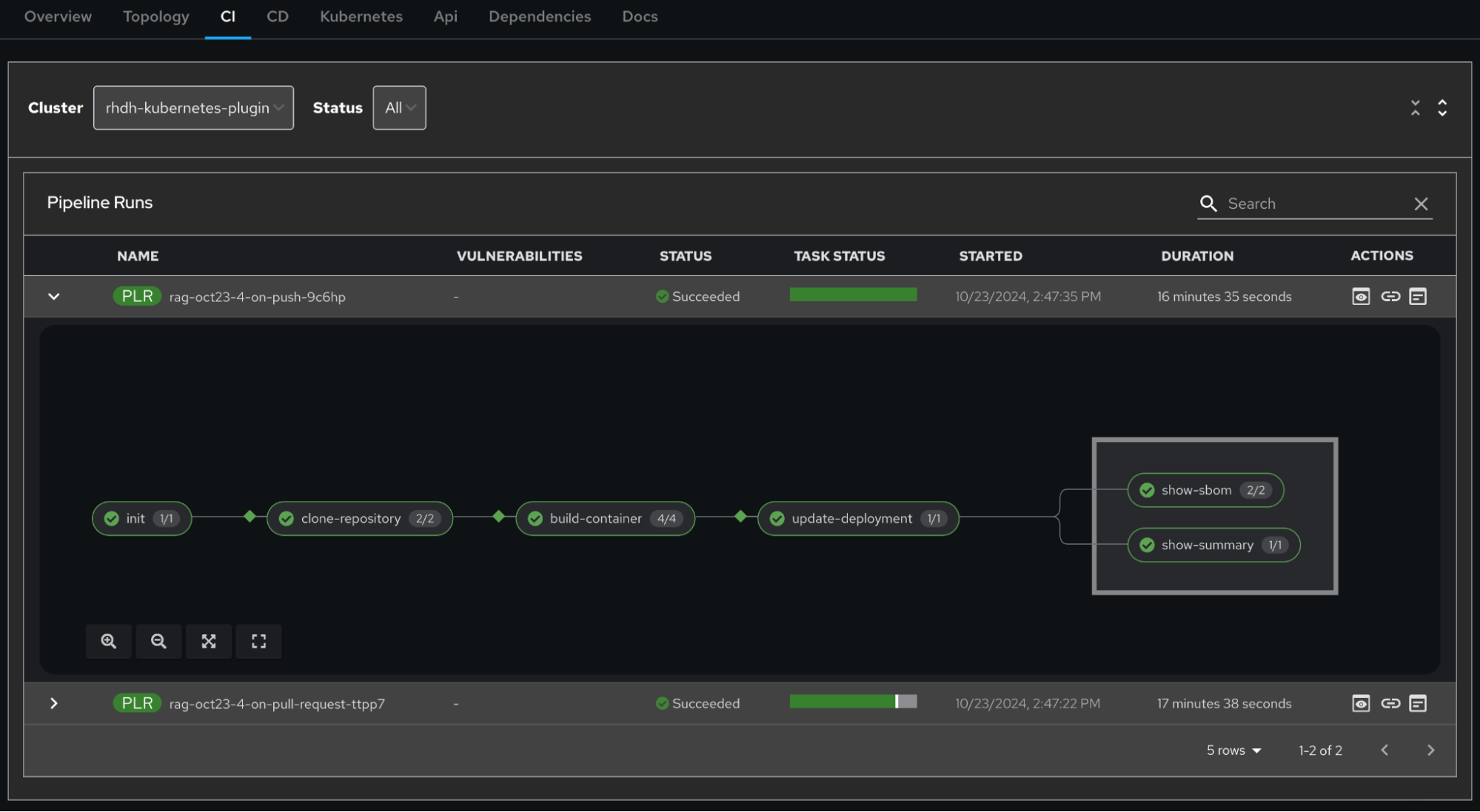

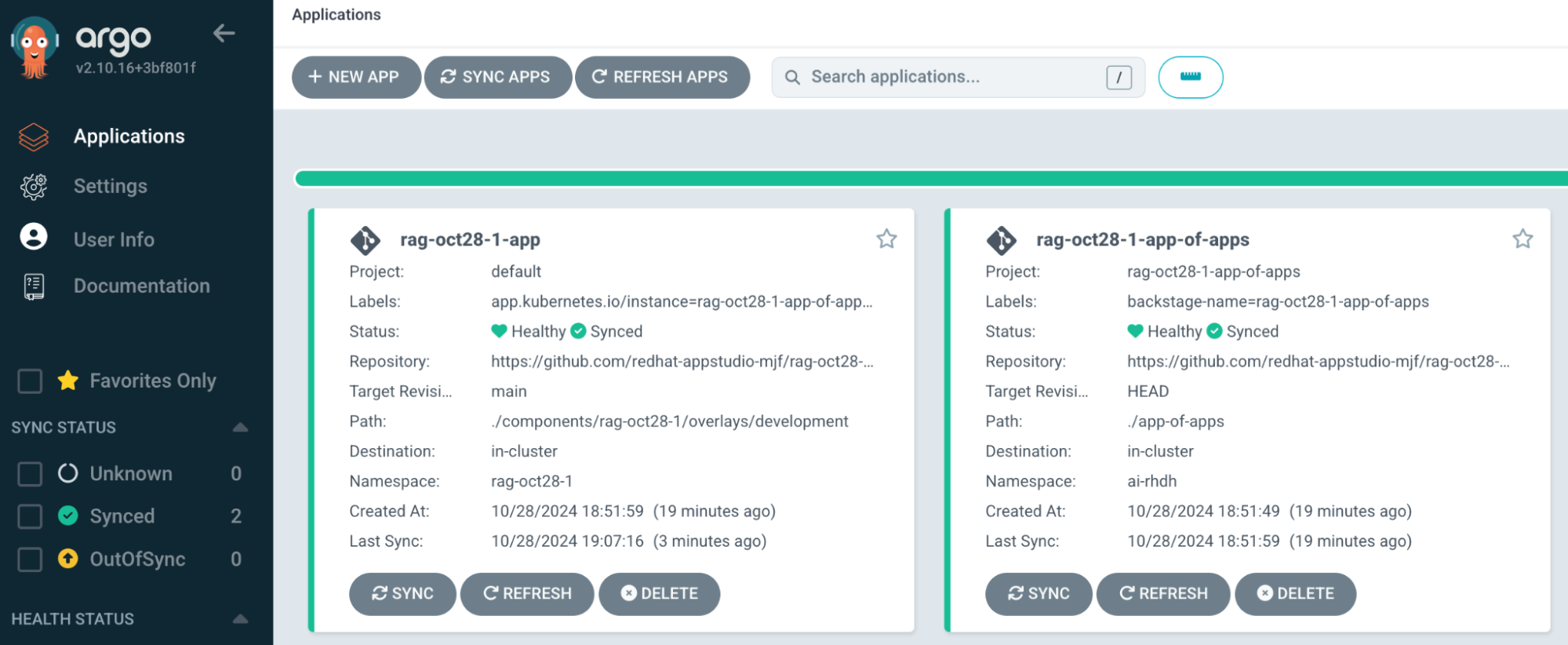

If you navigate to the CI tab of your catalog component, you will see the PipelineRuns for your application (Figure 4). These PipelineRuns execute Tekton tasks that clone the application repository, build the application container, and update the GitOps deployment with the new container image that is hosted on your image registry. You can also see the syncing of the Kubernetes resources in the Argo CD dashboard for your application (Figure 5).
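Conceptually, the last of those steps amounts to rewriting the image reference in the GitOps repository so that Argo CD picks up the change. The sketch below illustrates that idea only; the actual Tekton task may use a different mechanism, and set_image, the manifest, and the image names are hypothetical.

```python
import re


def set_image(manifest_text: str, new_image: str) -> str:
    """Replace the value of every `image:` field in a YAML manifest.

    A regex keeps this sketch dependency-free; it does not parse YAML and
    assumes the manifest references a single application image.
    """
    pattern = re.compile(
        r"^([ \t]*(?:-[ \t]+)?image:[ \t]*).*$", flags=re.MULTILINE
    )
    # A function replacement avoids backslash handling in the new value.
    return pattern.sub(lambda match: match.group(1) + new_image, manifest_text)


if __name__ == "__main__":
    # Hypothetical deployment fragment and image names.
    deployment = (
        "spec:\n"
        "  containers:\n"
        "    - name: app\n"
        "      image: quay.io/example/rag-app:old\n"
    )
    print(set_image(deployment, "quay.io/example/rag-app:abc123"))
```

Committing a change like this to the GitOps repository is what lets Argo CD detect a difference and sync the cluster.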

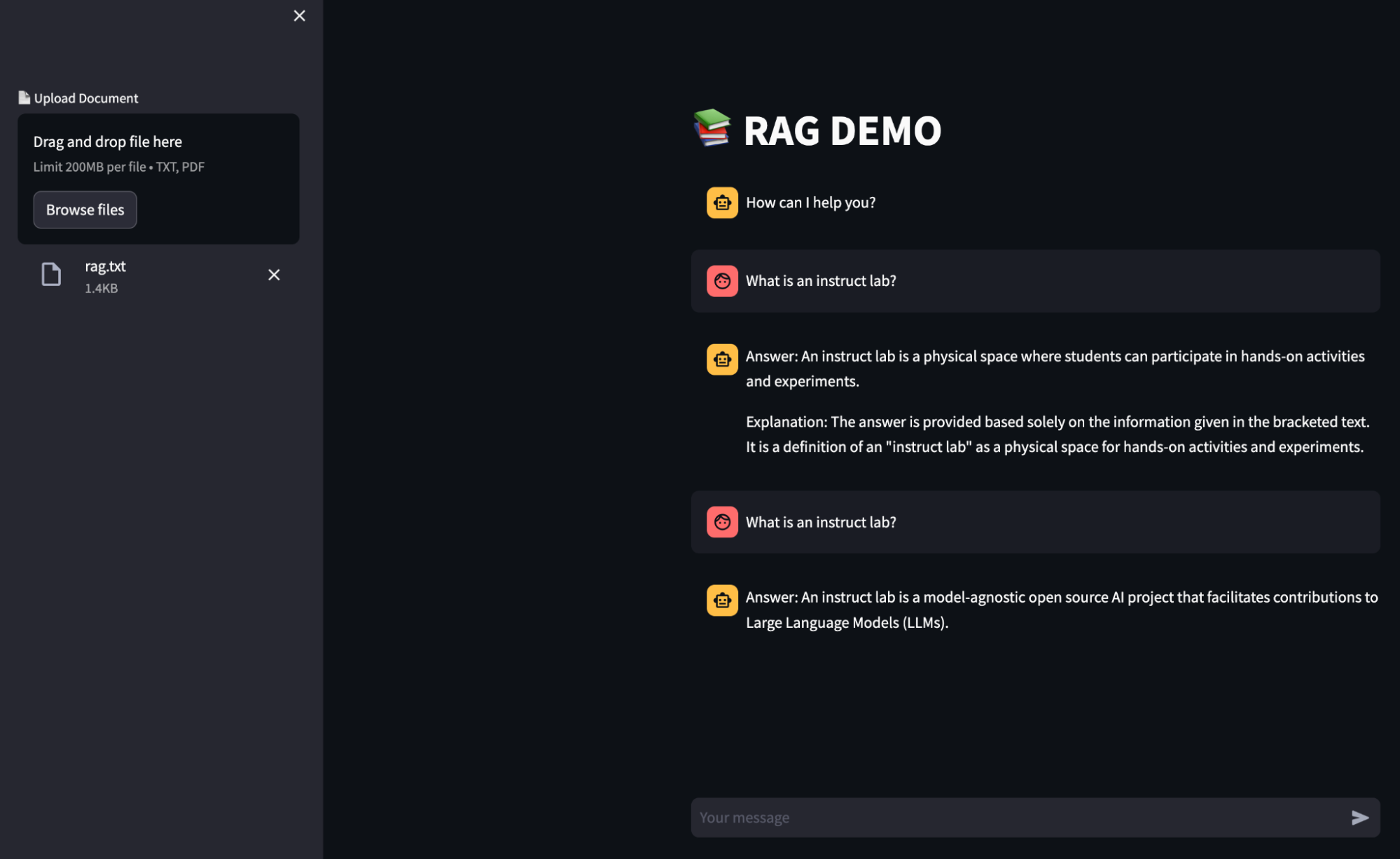

You can access the application endpoint by clicking on the Route in the Topology tab. When the application opens in the browser, let's ask a simple question: What is InstructLab? You will notice that the chatbot gives a very generic answer, and comparing it with the InstructLab project shows that it is not accurate.

For our next step, copy some of the InstructLab information and save it to a text file. For instance, use the following text from the InstructLab docs:

InstructLab is a model-agnostic open source AI project that facilitates contributions to Large Language Models (LLMs).

We are on a mission to let anyone shape generative AI by enabling contributed updates to existing LLMs in an accessible way.

From your RAG application, click Browse and upload the saved text file. Now ask the RAG chatbot the same question; you will notice that the chatbot gives a more accurate answer this time, as illustrated in Figure 6. This is because the RAG application retrieves relevant information from our chosen source of truth and passes it to the model along with the question. This can be beneficial if we want custom or topic-specific expertise when querying an LLM.

The Tekton definition watches the application source repository for Git events, such as pull requests and pushes, and starts new PipelineRuns in response. To see this for yourself, open the rag_app.py file and submit a simple change.

As an example, in rag_app.py, update the application chat prompt so that the chatbot responds as if it were a pirate. Append Provide all responses as if you are a pirate to the prompt variable as shown below and save the changes:

prompt = ChatPromptTemplate.from_template("""Answer the question based only on the following context:

{context}

Question: {input}

Provide all responses as if you are a pirate

"""

)

Open a pull request and merge the changes. You will see that the PipelineRun completes successfully and the GitOps deployment is updated. In the Argo CD dashboard, once the application and the Kubernetes resources are synced, you can refresh your application in the browser to view the changes. The chatbot now responds to questions in pirate speak.

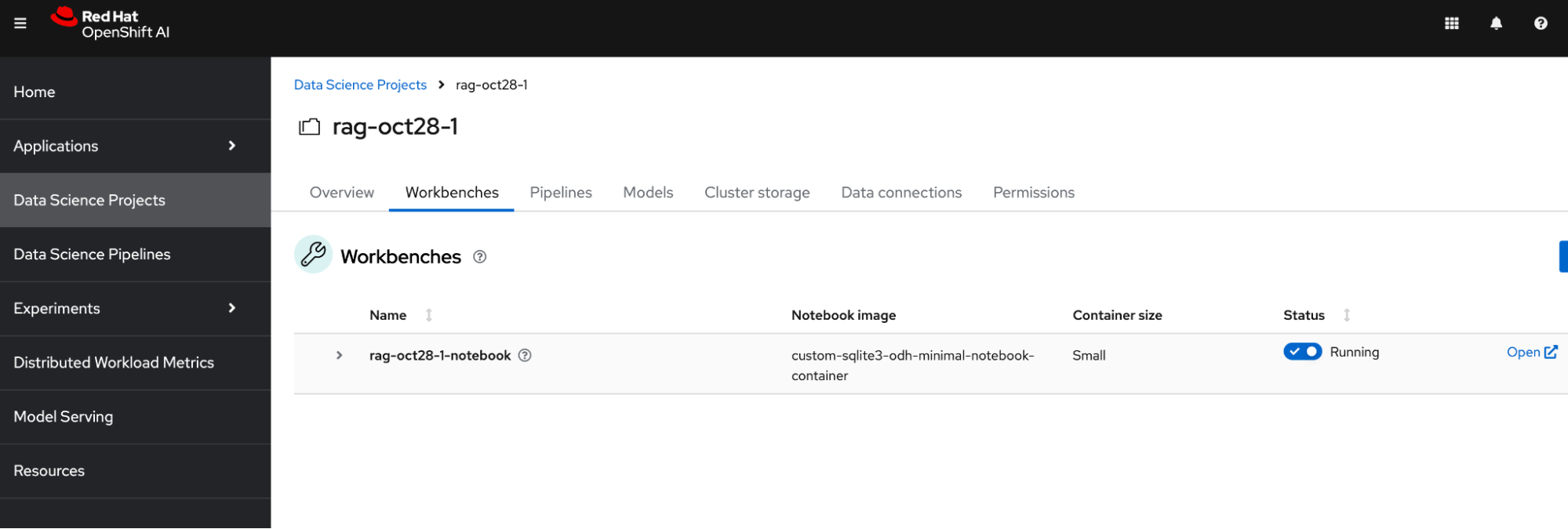

OpenShift AI

To access your application on the OpenShift AI platform and try it out in a Jupyter notebook, go to Data Science Projects on your OpenShift AI dashboard and click the project associated with your component. Click the Workbenches tab and open the workbench. See Figure 7.

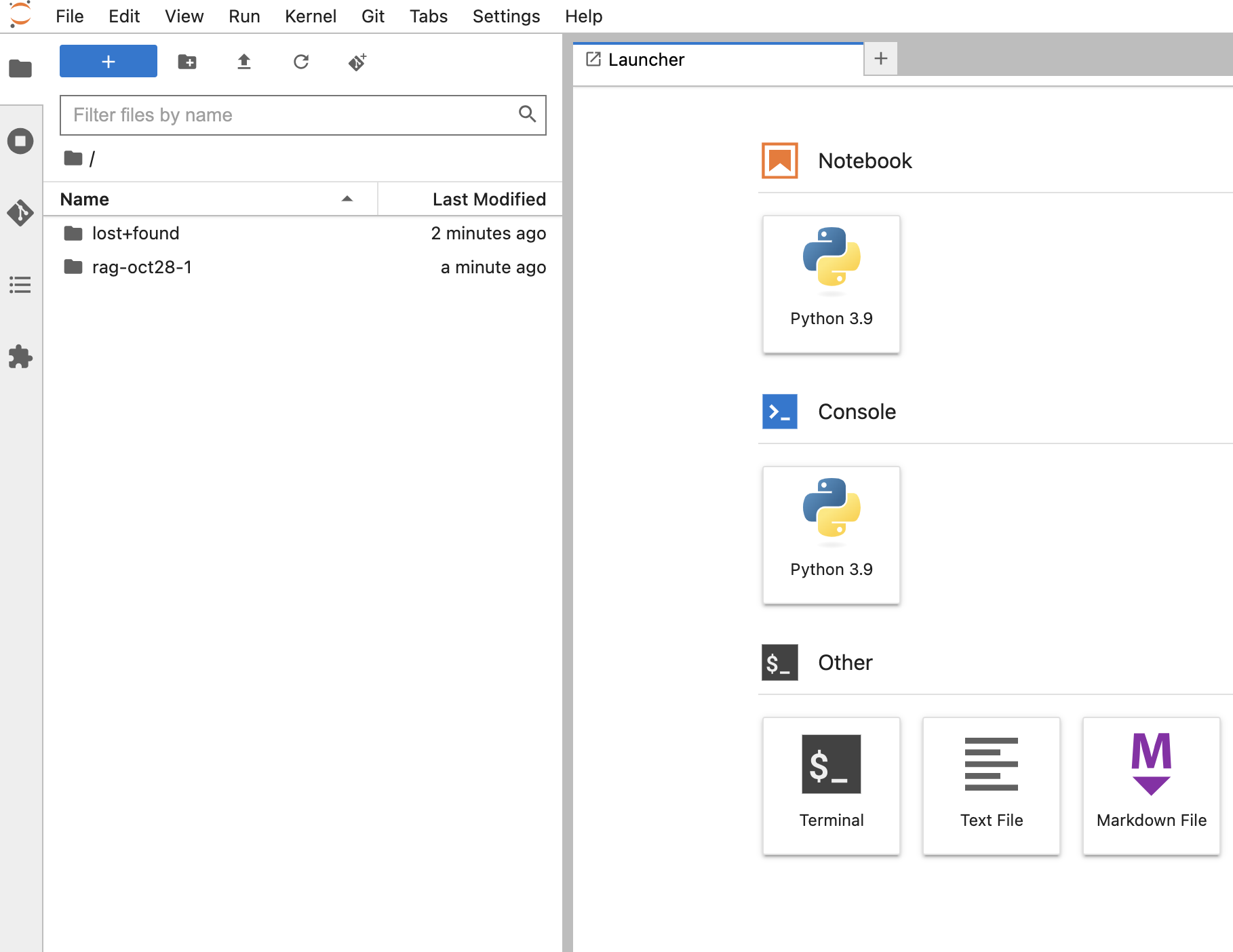

In JupyterLab (Figure 8), open the terminal and go to the base directory of your application. Install the dependencies and run your application. You can find these instructions in docs/rhoai.md, which is accessible from the Jupyter notebook, from the TechDocs in Red Hat Developer Hub mentioned earlier, or from the source repository on GitHub.

pip install --upgrade -r requirements.txt

streamlit run rag_app.py

Once the application is running, as per the docs/rhoai.md instructions, open a terminal window on your machine and use oc port-forward to forward the sample application's notebook Pod to your local machine. You can now access the application via your machine’s browser on localhost.
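The exact command is in docs/rhoai.md; as a hedged sketch, the helper below assembles an `oc port-forward` invocation. It assumes the application listens on Streamlit's default port, 8501, and the Pod and namespace names are placeholders. port_forward_command is a hypothetical helper.

```python
import shlex


def port_forward_command(pod: str, namespace: str,
                         local_port: int = 8501,
                         remote_port: int = 8501) -> list[str]:
    """Build the `oc port-forward` argument list for a notebook Pod.

    8501 is Streamlit's default port; adjust it if your application
    is configured to listen elsewhere.
    """
    return [
        "oc", "port-forward", f"pod/{pod}",
        f"{local_port}:{remote_port}",
        "--namespace", namespace,
    ]


if __name__ == "__main__":
    # Placeholder Pod and namespace names.
    command = port_forward_command("my-workbench-0", "rag-demo")
    print(shlex.join(command))
    # To run it: subprocess.run(command, check=True)  (blocks until interrupted)
```

With the defaults above, the application would then be reachable at http://localhost:8501 for as long as the port-forward is running.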

Conclusion

This article walked through using software templates to scaffold code skeletons for AI software projects, so that they can be reused across your enterprise or environment. These AI software templates streamline the development process, especially if the AI application requires a large language model: you can focus on your application's business needs while the AI software template delivers a skeleton for the LLM. You can also customize and extend existing software templates according to your business requirements, for example by updating the code scaffold or bringing your own LLM for your AI application.

Last updated: November 15, 2024