This article outlines how to use Red Hat Developer Hub to catalog an organization's AI assets. Cataloging AI assets is particularly useful for platform engineers who want to consolidate their organization's list of approved models while enforcing access and usage restrictions, and for AI developers who want a straightforward way to consume AI models.

The catalog structure that this post outlines is based on real model servers and models in use by our development team (Developer Tools and AI), and represents ongoing investigations and research into how organizations can best manage their AI assets.

Introduction to Red Hat Developer Hub catalog

Red Hat Developer Hub is an internal developer platform (IDP) based on Backstage, which enables development teams and organizations to increase productivity by consolidating elements of the development process into a single portal. Its software catalog, based on the Backstage catalog, serves as a central location for the applications, APIs, and software resources used within a team.

The entities defined by the catalog include:

- Components: Represent deployed software, typically ones that expose some kind of API.

- Resources: Represent files, databases, and other resources that may be deployed somewhere.

- APIs: Represent the APIs exposed by software, typically linked to one or more component or resource entities.

Each catalog entity is defined in a catalog-info.yaml file and can carry metadata about what it models, such as a brief description, filterable tags, and ownership information. You can use TechDocs to extend the information stored in the catalog.
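As a rough illustration of what such a file can look like, the following sketch writes a minimal Component entity to catalog-info.yaml. The field layout follows the upstream Backstage entity format; the entity name, tag, and owning group are hypothetical placeholders rather than values from our catalog.

```shell
# Write a minimal catalog entity to catalog-info.yaml.
# The name, tag, and owner below are hypothetical placeholders.
cat > catalog-info.yaml <<'EOF'
apiVersion: backstage.io/v1alpha1
kind: Component
metadata:
  name: example-service                # hypothetical entity name
  description: A short summary shown in the catalog.
  tags:                                # filterable tags
    - example
  annotations:
    backstage.io/techdocs-ref: dir:.   # build TechDocs from docs next to this file
spec:
  type: service
  lifecycle: experimental
  owner: group:default/example-team    # hypothetical owning group
EOF
```

The techdocs-ref annotation is what ties TechDocs to the entity; it assumes the documentation sources live alongside the catalog-info.yaml file.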

For more information about the Backstage catalog and the full list of entities it defines, see the upstream Backstage documentation on the catalog format.

Red Hat Developer Hub and AI models

At Red Hat, we have been looking at how development teams can manage and use their AI assets in the future. As part of our first approach, we've looked at how Red Hat Developer Hub can be used by platform engineers to manage the assets, and answer a number of questions that an AI developer might have. Some of these questions might be:

- What models have been approved for enterprise use by my organization?

- What is each model and API designed or tuned for?

- How do I download the model, and how do I run it?

- How can I browse the API, and how can I access it?

- Are there any training or ethical considerations?

Through its software catalog and catalog entities, developers and platform engineers can use Red Hat Developer Hub to record and share the details of an organization's AI assets, including large language models (LLMs), AI servers, and their APIs. In addition, platform engineers can use TechDocs to curate important information about those assets and answer the questions highlighted above. For example, a TechDoc might cover how to sign up for a model service, or the usage restrictions that apply to a specific model.

Our approach to modeling AI models in Red Hat Developer Hub

We've developed a standard approach for cataloging AI assets in Red Hat Developer Hub, based around native Backstage catalog entities. Our approach was based upon models and model servers in use by our team for development purposes, and how we wished to manage our development team's models.

- Model servers are represented in the catalog as Components with type model-server.

- The catalog entry contains information such as name, description, access and API URLs, and authentication status.

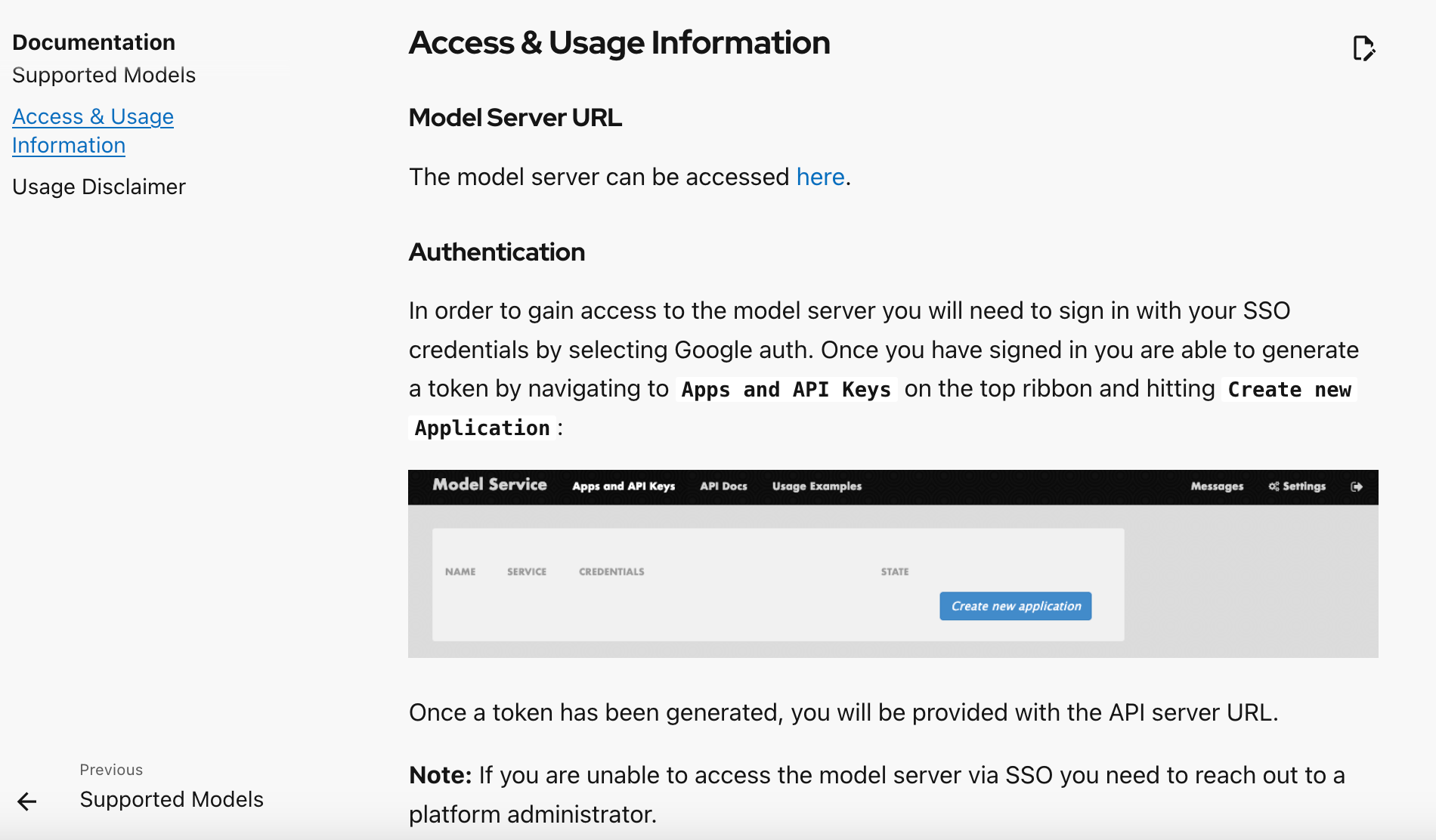

- TechDocs provide additional information, such as how to access the model server, obtain an API key, and usage examples.

- For the full list of metadata and TechDocs, and where they're stored, see the Model Server Metadata.

- Models are represented in the catalog as Resources with type ai-model.

- The catalog entry contains information such as model name, a brief description, download and access URLs, model type, tags, license, and author.

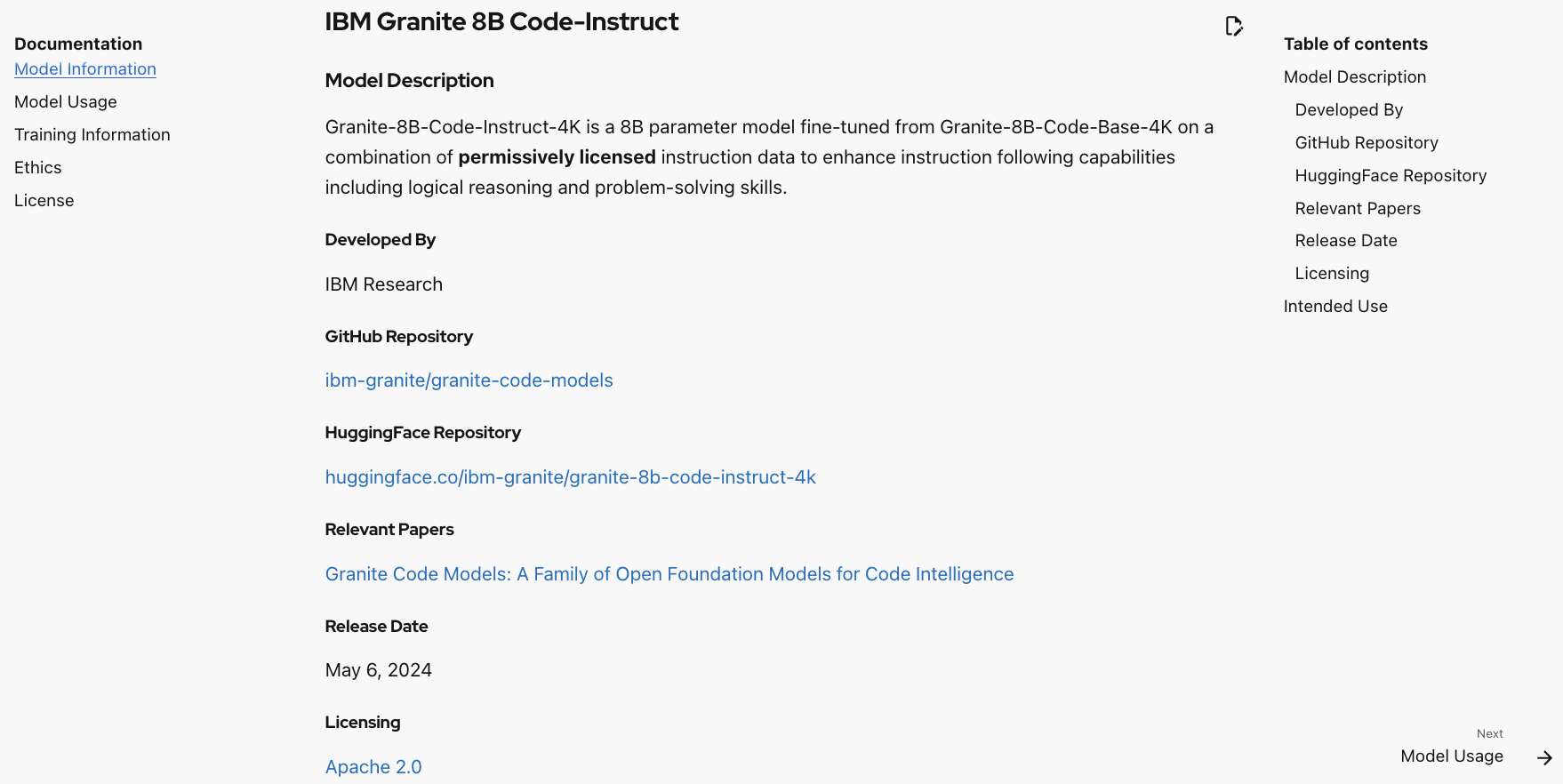

- TechDocs provide additional information such as a basic model card, approved use cases, training and ethical considerations.

- For the full list of metadata and TechDocs, and where they're stored, see the Model Metadata.

- Model server APIs are represented in the catalog as APIs. They contain the API schema for the model server, providing a visualized representation of the API, along with the ability to test the individual endpoints.

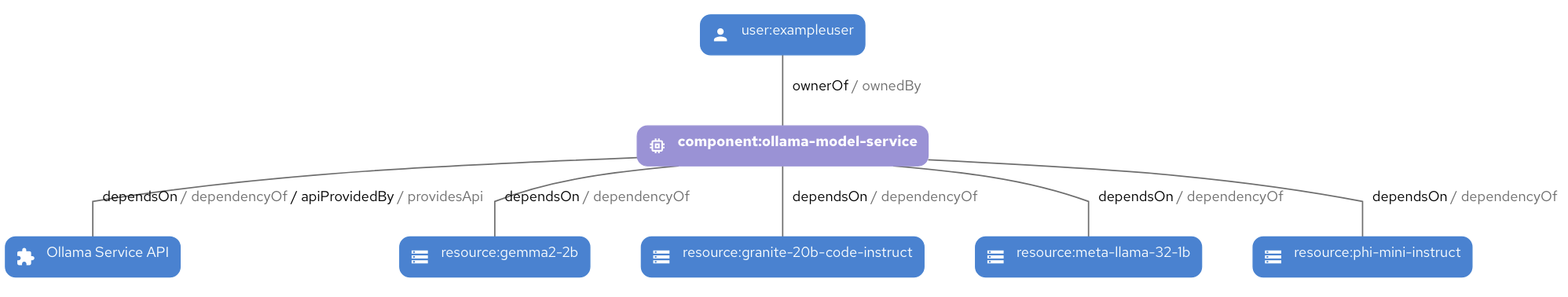

Figure 1 shows an example dependency graph of a sample model catalog in Red Hat Developer Hub, based on the previously mentioned structure.
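To make the structure above more concrete, here is a minimal sketch of a catalog-info.yaml that defines one model server, one model, and one API, linked through the standard Backstage providesApis and dependsOn relations. This is not a copy of our catalog files: the entity names (other than the model name used later in this article), owner, links, and the trimmed OpenAPI definition are all hypothetical placeholders.

```shell
# Sketch only: entity names, owner, and URLs below are hypothetical placeholders.
mkdir -p model-catalog
cat > model-catalog/catalog-info.yaml <<'EOF'
# Model server: a Component with type model-server
apiVersion: backstage.io/v1alpha1
kind: Component
metadata:
  name: example-model-service
  description: Example single-model inference service.
  tags:
    - model-server
  links:
    - url: https://model-service.example.com
      title: Access URL
  annotations:
    backstage.io/techdocs-ref: dir:.
spec:
  type: model-server
  lifecycle: experimental
  owner: group:default/example-team
  providesApis:
    - example-model-service-api
  dependsOn:
    - resource:ibm-granite-8b-code-instruct
---
# Model: a Resource with type ai-model
apiVersion: backstage.io/v1alpha1
kind: Resource
metadata:
  name: ibm-granite-8b-code-instruct
  description: Code-instruct LLM served by the example model service.
  tags:
    - llm
  links:
    - url: https://models.example.com/ibm-granite-8b-code-instruct
      title: Download URL
spec:
  type: ai-model
  owner: group:default/example-team
---
# Model server API: an API entity holding the schema
apiVersion: backstage.io/v1alpha1
kind: API
metadata:
  name: example-model-service-api
  description: API exposed by the example model service.
spec:
  type: openapi
  lifecycle: experimental
  owner: group:default/example-team
  definition: |
    openapi: 3.0.3
    info:
      title: Example model service API
      version: "1.0"
    paths:
      /v1/completions:
        post:
          summary: Generate a text completion
          responses:
            "200":
              description: Completion result
EOF
```

The providesApis and dependsOn entries on the Component are what produce the edges in a dependency graph like the one described above. Keeping all three entities in one file means a single import registers the whole model catalog.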

Example walkthrough

Based on the model catalog structure, we have created two example model catalogs, representing the two model servers that our development team uses:

- Developer model service: A vLLM-based single model service deployed through Red Hat OpenShift AI, serving IBM granite-code 8b, with Red Hat 3scale API Management acting as an API gateway.

- Ollama model service: An Ollama-based multi-model service running on Red Hat OpenShift, serving a variety of large language models (LLMs).

Each example has its own catalog-info.yaml file, along with corresponding TechDocs for the models and model servers.

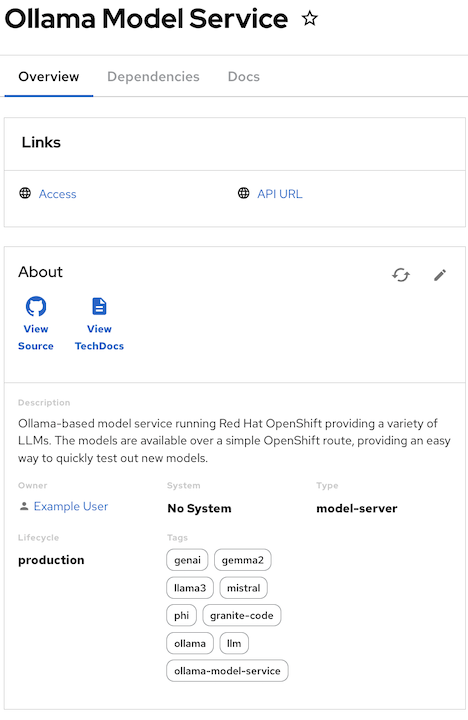

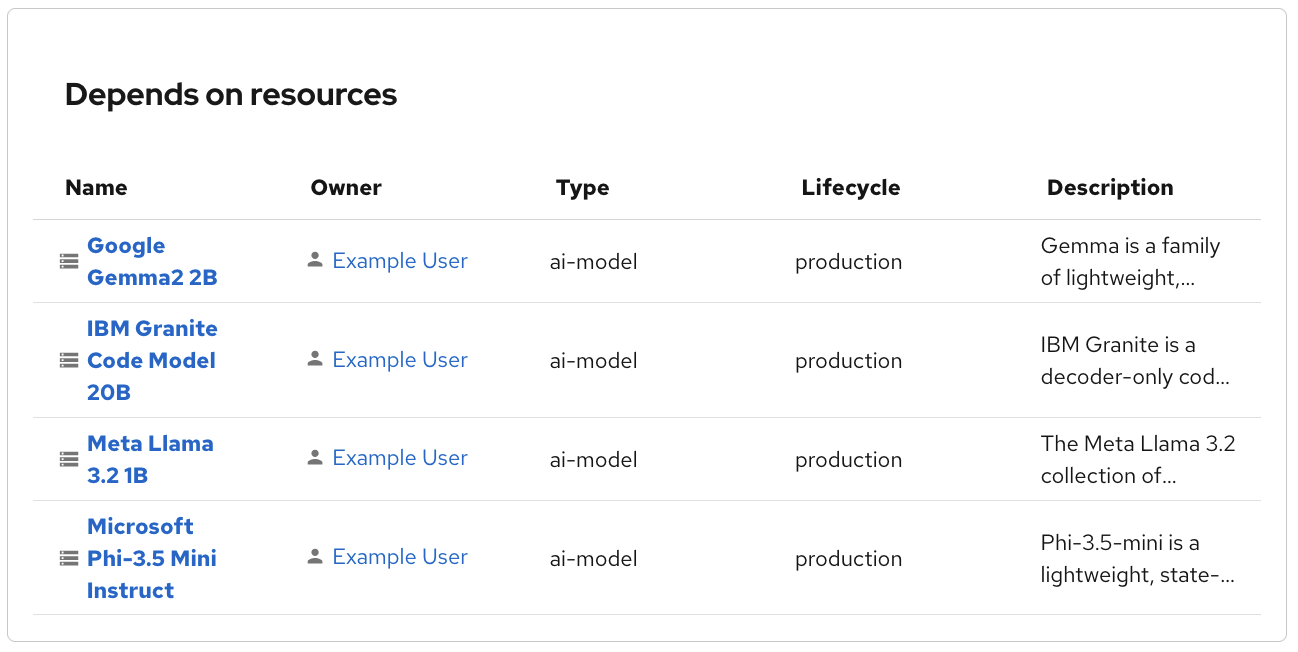

Once the examples are imported into Red Hat Developer Hub, you can view each model server component in the catalog. Click a model server to see more information about it, such as its URLs and the models that are running within it, as shown in Figures 2 and 3.

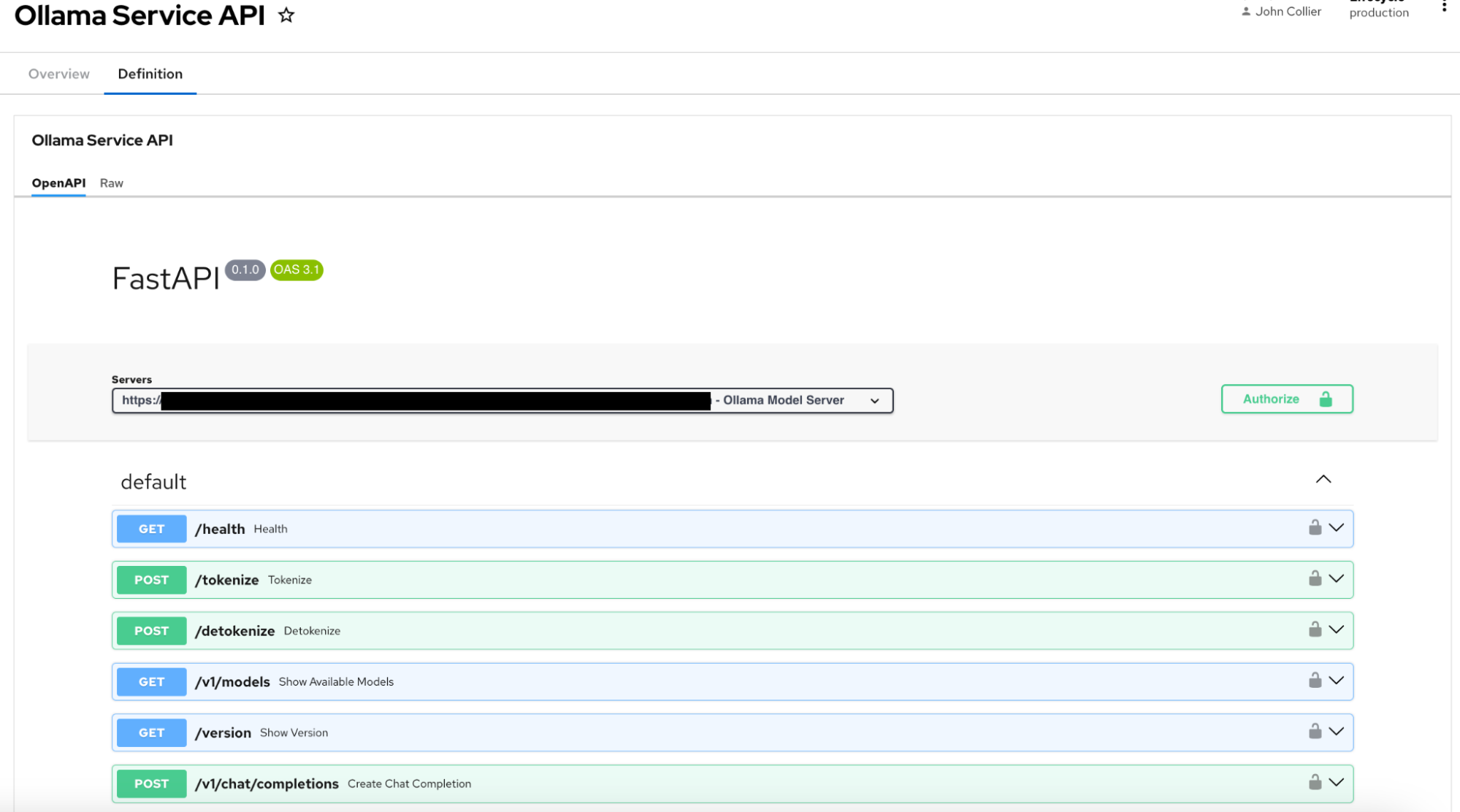

The API entity associated with each model server provides an option to visualize and test the API provided by the model server (Figure 4).

Viewing a model resource in the catalog presents a view similar to that of the model servers, with details such as useful links, a brief description, and filterable tags, as shown in Figure 5.

The TechDocs for the model catalog provide a basic model card, and additional information such as usage restrictions, ethical considerations, and training information for the model. See Figure 6.

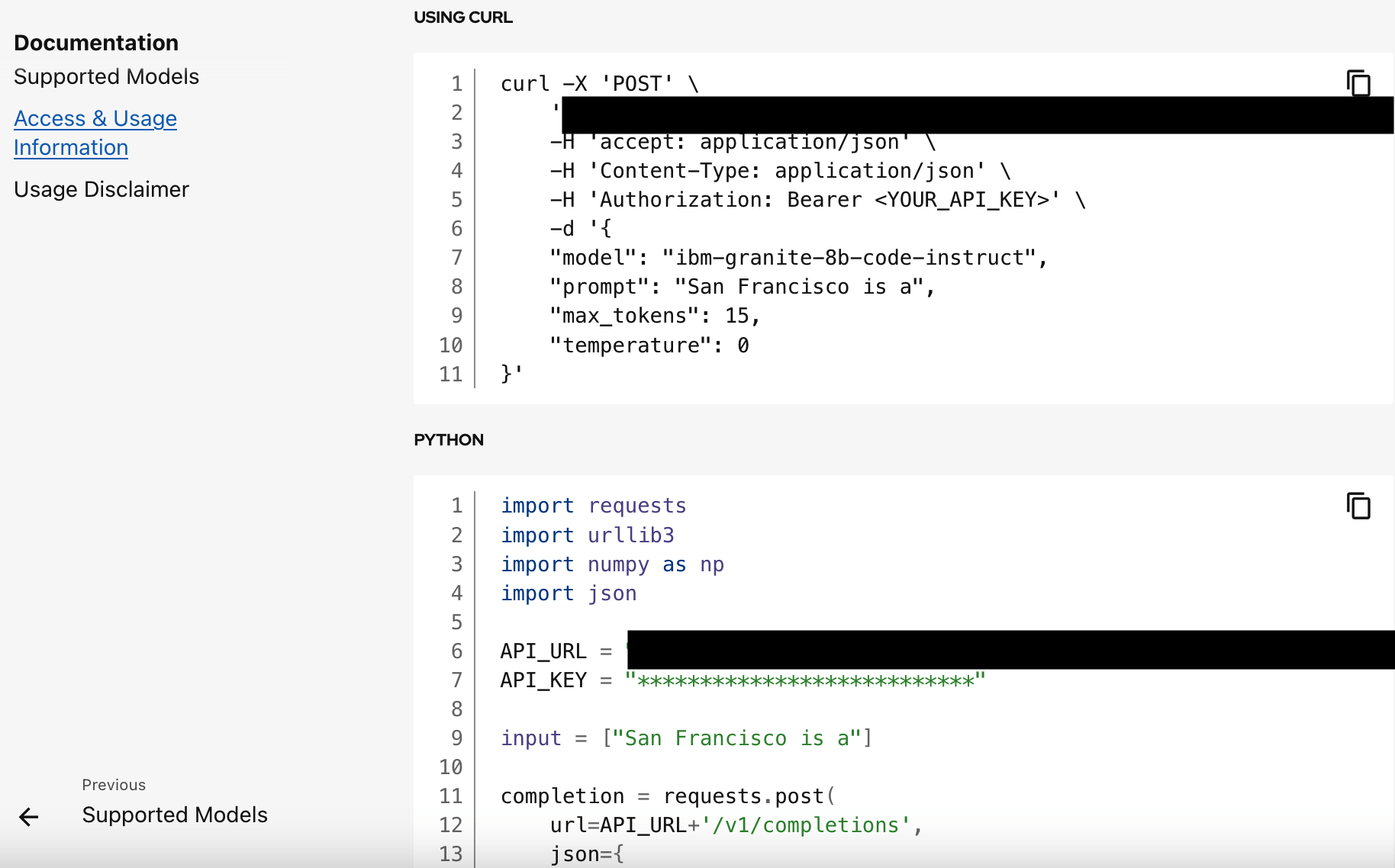

Finally, each model server component also has TechDocs that provide information on how to sign up and access the model server and how to generate an API key (Figure 7), along with some usage examples (Figure 8).

After following the steps to retrieve an API URL and key for the model service, an AI developer can easily consume the API, whether by calling it directly or through tools and software templates.

Example using the model server API with curl:

    curl -X 'POST' \
      'https://***************************:443/v1/completions' \
      -H 'accept: application/json' \
      -H 'Content-Type: application/json' \
      -H 'Authorization: Bearer ***************************' \
      -d '{
        "model": "ibm-granite-8b-code-instruct",
        "prompt": "San Francisco is a",
        "max_tokens": 15,
        "temperature": 0
      }'

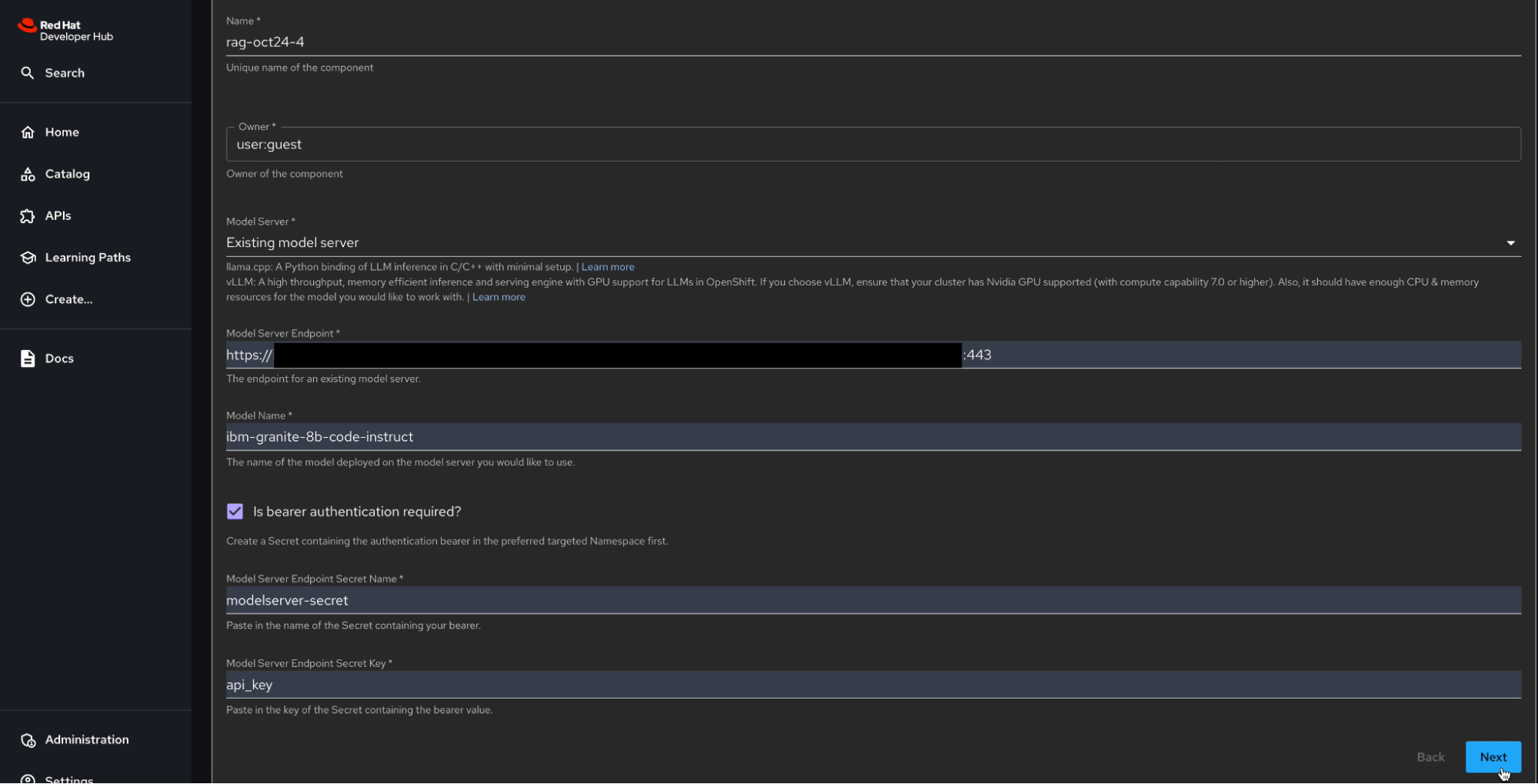

    {"id":"cmpl-4e41ef0012404c4da7b342efcf3d55eb","object":"text_completion","created":1730135332,"model":"ibm-granite-8b-code-instruct","choices":[{"index":0,"text":" city in the U.S. state of California and the county seat","logprobs":null,"finish_reason":"length","stop_reason":null}],"usage":{"prompt_tokens":4,"total_tokens":19,"completion_tokens":15}}

Figure 9 shows an example of consuming the model server API in the catalog from within a software template.

Importing our examples

If you are interested in trying out our catalog, you can import each of the following catalog-info.yaml files into your Red Hat Developer Hub instance, with no plug-ins or additional setup required:

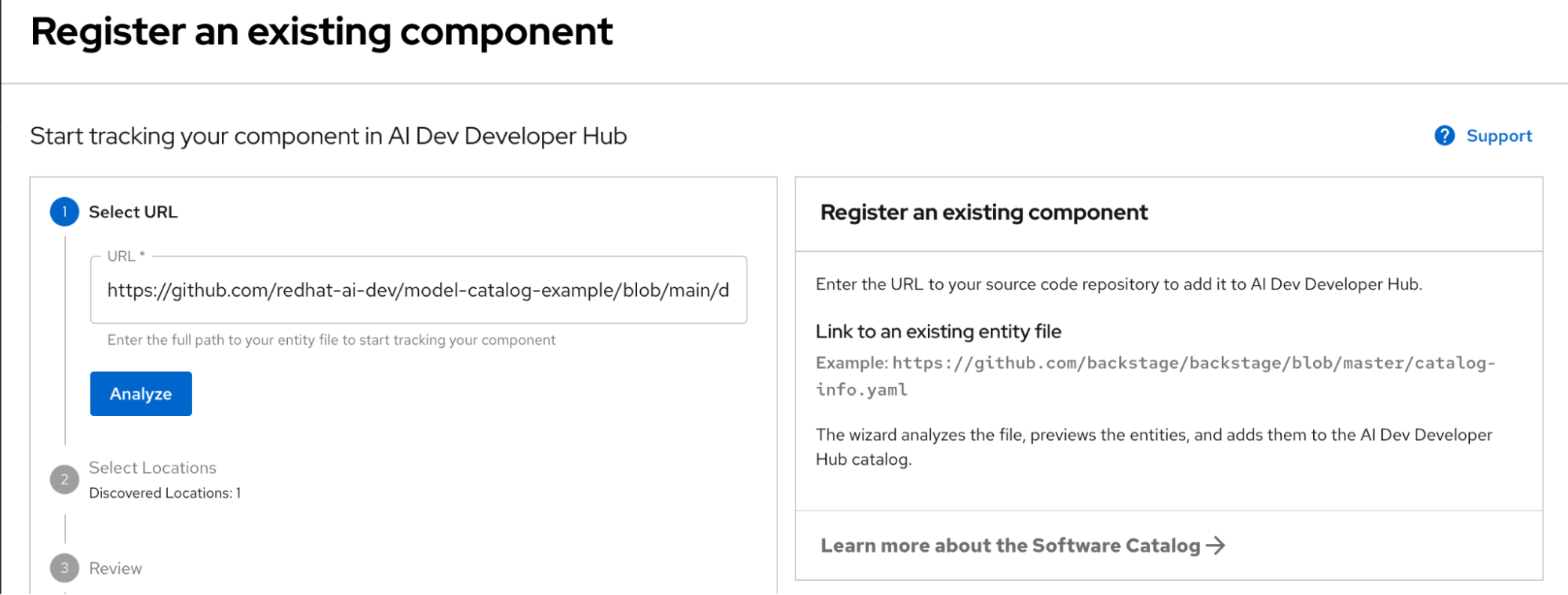

To import into Red Hat Developer Hub, simply copy the catalog-info.yaml URL, navigate to the catalog, and click Create → Register Existing Component. When prompted, paste the URL and hit Import. The model catalog example will then be imported into your catalog in Red Hat Developer Hub. See Figure 10.
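As an alternative to the UI flow, upstream Backstage also supports registering catalog locations statically through the catalog.locations section of its app-config. The sketch below writes such a configuration fragment to a file. The file name and target URL are hypothetical placeholders, and how the fragment is supplied to your Red Hat Developer Hub instance depends on how it is deployed, so treat this as a starting point rather than a tested procedure.

```shell
# Sketch only: the file name is arbitrary and the target URL is a hypothetical
# placeholder for wherever your catalog-info.yaml is hosted.
cat > app-config.model-catalog.yaml <<'EOF'
catalog:
  locations:
    - type: url
      target: https://github.com/example-org/model-catalog/blob/main/catalog-info.yaml
      rules:
        # Allow the three entity kinds used by the model catalog structure
        - allow: [Component, Resource, API]
EOF
```

A static location is reloaded with the instance configuration, which can be convenient when the model catalog should be present for every user without a manual import.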

If you are interested in modifying our examples or replicating the two model servers we have deployed as part of our examples, you can find instructions to do so in the following READMEs:

Conclusion

With the model catalog structure defined, we're hoping that it will form a solid foundation for integrations between Red Hat Developer Hub and AI-related systems. With the current model structure, we've shown how it's possible for platform engineers to define a central location for their team's models and model servers, while providing a location for answers to many common questions that AI developers might have when working with AI models. Finally, looking beyond the two examples we've provided based on our current use cases, we're hoping that the structure we've developed will prove flexible enough for a variety of model and model server types in use today.
