In prior articles, we introduced a new technology called bootable containers, discussed how we can debug image mode for Red Hat Enterprise Linux (RHEL), and explored how to containerize and manage applications on top of image mode for RHEL. This article gives a comprehensive view of how to set up CI/CD pipelines for automating the build process when working with image mode for RHEL.

Today, many developers use GitHub and GitLab to manage their source code and CI/CD activities, so we will focus on these two environments in this article and present some best practices and tools. In a subsequent article, we will take a closer look at testing image mode for RHEL at scale.

Note: There is a plethora of resources for diving into the world of image mode for RHEL and bootable containers. The Fedora community provides an excellent getting started guide along with hands-on videos and technical documentation. You might also enjoy this hands-on lab, which guides you through the process of working with image mode for RHEL.

Updating an image mode for RHEL system

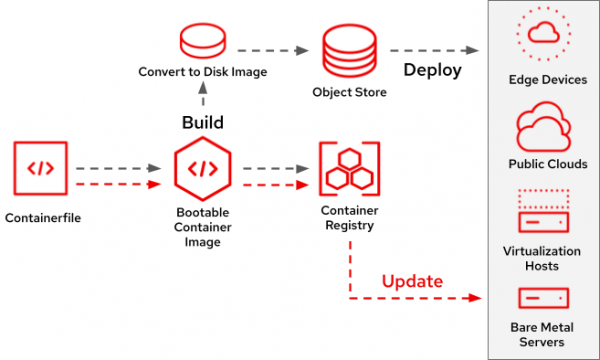

The lifecycle of a bootc image includes a number of steps. Similar to an application container, a bootc image is built from a Containerfile with standard container build tools. A common workflow for installing the contents of a bootc image on a physical or virtual machine is to convert the container image into a disk image and push it to an object store, such as Amazon S3, from which it is eventually deployed in the target environment.

The bootc-image-builder tool is responsible for this conversion and supports a wide range of disk-image formats, such as qcow2, raw, AMI, ISO, VMDK for vSphere, and more. bootc-image-builder can easily be integrated into any existing build pipeline, and it is also used by the Podman Desktop bootc extension to manage and build disk images. This workflow is illustrated in Figure 1.

Updating an image mode for RHEL system follows a pattern similar to that of an application container: build a new image and push it to a registry. Once the image is published, bootc can pull the updated image from the registry onto the deployed system and reboot into it.
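As a rough sketch of this update cycle, the following script assembles the commands involved and prints them instead of running them. It assumes Podman as the build tool and uses a hypothetical image name (`quay.io/example/my-bootc:1.0`); it also assumes the deployed system already tracks that image, so that `bootc upgrade --apply` picks up the new build and reboots into it.

```python
import shlex

# Hypothetical image reference; replace with your own registry and repository.
IMAGE = "quay.io/example/my-bootc:1.0"


def update_commands(image: str, context: str = ".") -> dict[str, list[list[str]]]:
    """Return the commands for one update cycle, grouped by where they run."""
    return {
        # Run in the pipeline: build the new image and publish it.
        "pipeline": [
            ["podman", "build", "-t", image, context],
            ["podman", "push", image],
        ],
        # Run on the deployed system: fetch the update and reboot into it.
        "host": [
            ["bootc", "upgrade", "--apply"],
        ],
    }


if __name__ == "__main__":
    for location, commands in update_commands(IMAGE).items():
        for argv in commands:
            print(f"[{location}] {shlex.join(argv)}")
```

Keeping the commands as argument lists makes it straightforward to hand them to `subprocess.run` later without any shell quoting concerns.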

Dependency management and image digests

Dependency management plays a crucial role in automating and securing such pipelines. There are a number of tools in this space that can help us manage dependencies, whether it be libraries or code dependencies, parent images used in Containerfiles, or updates to infrastructure tools, such as GitHub Actions. Dependency management tools track the versions of the dependencies and update them whenever a new version is made available by opening a pull or merge request against the repository. Two of the most prominent dependency management tools in use today are Dependabot and Renovate Bot, both of which have an excellent integration with code-hosting platforms, such as GitHub or GitLab.

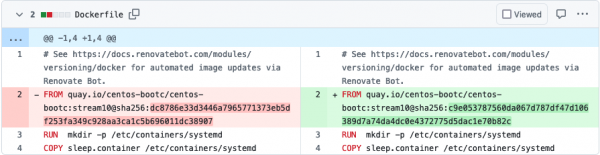

These tools come in very handy when working with image mode for RHEL as they can be used to automatically update the parent images used in Containerfiles. For this reason, we highly recommend referencing parent images by a static tag and by digest (e.g., rhel-bootc:9.5@sha256:...). The tag tells the dependency tools which major and minor version of the image to track. The digest identifies the exact image content, so any update published for that major and minor version shows up as a new digest that the tools can propose.

Using a digest has a number of advantages:

- Builds are reproducible. A tag can be changed at any point in time, so building the very same Containerfile might yield different results depending on the content included within the build. This ambiguity is not possible when referencing an image by digest where the container registry will return the image with the exact matching digest.

- Using a digest is also slightly more secure, as an attacker cannot substitute another image. The digest is verified when the image is pulled, so any change in the metadata or the layers would be detected.

- Using a digest lets the dependency management bots described above take full control over updating the parent images. Digest updates are easy for machines, while the tag lets humans see at a glance which image is being used. Using both gives the best of both worlds.
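To make the tag-plus-digest format concrete, here is a minimal sketch that splits an image reference into name, tag, and digest, and rebuilds a pinned reference. The helper names (`split_reference`, `pin`) are hypothetical, the parsing is deliberately simplified compared to what container tools accept, and the digest is an all-zero placeholder rather than a real image digest.

```python
def split_reference(ref: str) -> tuple[str, str, str]:
    """Split 'name[:tag][@digest]' into (name, tag, digest).

    Missing parts are returned as empty strings.
    """
    name_and_tag, _, digest = ref.partition("@")
    last_slash = name_and_tag.rfind("/")
    colon = name_and_tag.rfind(":")
    # A colon before the last slash belongs to a registry port, not a tag.
    if colon > last_slash:
        return name_and_tag[:colon], name_and_tag[colon + 1:], digest
    return name_and_tag, "", digest


def pin(ref: str, digest: str) -> str:
    """Return the reference with its tag kept and the given digest attached."""
    name, tag, _ = split_reference(ref)
    tagged = f"{name}:{tag}" if tag else name
    return f"{tagged}@{digest}"


if __name__ == "__main__":
    # Placeholder digest for illustration only.
    placeholder = "sha256:" + "0" * 64
    pinned = pin("rhel-bootc:9.5", placeholder)
    print(pinned)
    print(split_reference(pinned))
```

A dependency bot performs essentially the `pin` step on each update: the tag stays readable for humans, and only the digest changes in the pull request.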

Once configured, the bot will open pull requests against the Git repository so that dependencies are updated automatically. It is a very hands-off experience, as all that is needed is to review the proposed changes (see Figure 2) and click the merge button. The entire OS can then be built and tested as a fully automated workflow. What a time to be alive!

Building container images

Building the container image is a core step of the CI/CD pipeline. Once built, the image can be used in subsequent pipeline stages, including converting it to disk images, pushing it to a container registry, and eventually testing it in various ways. As with dependency management, there are a number of tools available for building images, and, as is so often the case, there is no right or wrong way. It all depends on your requirements. So, let’s review some of the tooling options available.

- GitHub Actions provides a comprehensive set of CI/CD tools that integrate well into the GitHub ecosystem. It also offers a marketplace to easily browse and install extensions. There are many extensions that can be used to build containers, such as those based on Buildah or Docker, and other extensions that can integrate with CI frameworks, such as CircleCI, Cirrus CI, or Jenkins. The choice is yours.

- Red Hat Quay is a container registry that supports so-called Build Triggers, which, among other things, can initiate the build of Containerfiles/Dockerfiles. Once a pull request is merged, the build trigger associated with a particular branch will fire and build the specific container image. Such build triggers can come in handy during the release process.

- Build activities are executed on what are commonly called “Runners”, which range from cloud vendor-provided infrastructure to machines that you run and manage yourself. Both GitHub and GitLab provide solutions for both approaches, while other frameworks, such as Cirrus CI, favor a “bring your own” execution model.

The list above is by no means comprehensive. Our intention is to give an overview of the available tools so that you can make an informed decision about the solution most suitable for your environment and use case.
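Whatever tool runs the build, the step itself usually boils down to one build command with a traceable tag. The sketch below assumes Podman and tags the image with the commit SHA taken from the predefined `GITHUB_SHA` (GitHub Actions) or `CI_COMMIT_SHA` (GitLab CI) environment variable. The repository name and the 12-character short SHA are illustrative choices, not requirements.

```python
import os
import shlex


def commit_sha(env: dict[str, str]) -> str:
    """Return the commit SHA exposed by GitHub Actions or GitLab CI, else 'dev'."""
    return env.get("GITHUB_SHA") or env.get("CI_COMMIT_SHA") or "dev"


def build_command(
    repository: str,
    env: dict[str, str],
    containerfile: str = "Containerfile",
    context: str = ".",
) -> list[str]:
    """Assemble a 'podman build' command tagged with the short commit SHA."""
    tag = commit_sha(env)[:12]
    return [
        "podman", "build",
        "--file", containerfile,
        "--tag", f"{repository}:{tag}",
        context,
    ]


if __name__ == "__main__":
    # Hypothetical repository; prints the command instead of running it.
    print(shlex.join(build_command("quay.io/example/my-bootc", dict(os.environ))))
```

Tagging each build with the commit SHA makes it easy to trace an image back to the exact source revision before a release tag is applied.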

Container registries

In case you haven’t already picked one, at some point you will need to choose a container registry for hosting your container images. Again, there are a lot of choices on the market. Depending on your needs and requirements, you might run a self-hosted registry of your own or use one of the many public registries available. The most prominent public ones are Docker Hub, Red Hat Quay, GitHub Container Registry, GitLab Container Registry, and those of the individual public cloud providers.

To push to a registry, the build pipeline needs to be extended with a push step. This step requires authenticating against the registry with our credentials. There are various ways to do that, and they generally depend on the framework being used. Just be aware that this is a security-sensitive step where you risk leaking your credentials to the public. The documentation of the various registries, CI/CD frameworks, and tools involved generally does a great job of guiding users through setting up a secure pipeline.
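One common way to reduce the risk of leaking credentials is to keep the password out of the command line and feed it to `podman login` via `--password-stdin`. The following sketch assumes the CI system injects the credentials as the environment variables `REGISTRY_USER` and `REGISTRY_PASSWORD`; those variable names, like the image and registry names, are hypothetical.

```python
import subprocess
from typing import Callable, Mapping


def login_command(registry: str, username: str) -> list[str]:
    """Assemble a 'podman login' command that reads the password from stdin."""
    return ["podman", "login", "--username", username, "--password-stdin", registry]


def push(
    image: str,
    registry: str,
    env: Mapping[str, str],
    run: Callable = subprocess.run,
) -> None:
    """Log in with credentials from the environment, then push the image."""
    username = env["REGISTRY_USER"]
    password = env["REGISTRY_PASSWORD"]
    # The password travels over stdin, so it never appears in the argument
    # list, the process table, or an echoed command line in the job log.
    run(login_command(registry, username), input=password.encode(), check=True)
    run(["podman", "push", image], check=True)


if __name__ == "__main__":
    # Prints the login command only; call push(...) inside a real pipeline.
    print(" ".join(login_command("quay.io", "ci-bot")))
```

In a real job, `push("quay.io/example/my-bootc:1.0", "quay.io", os.environ)` would perform both steps, with the secrets supplied by the CI platform's secret store.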

Managing Red Hat subscriptions

When using a Red Hat Enterprise Linux bootc image, we need to make sure that our build environment has a registered Red Hat subscription. There are numerous ways to apply subscriptions, and the right one usually depends on the environment. Matt Micene has written a comprehensive guide, with examples for GitHub Actions, that we highly recommend reviewing.

If you do not have a Red Hat subscription yet, we recommend joining the Red Hat Developer program to get a Red Hat Developer Subscription for Individuals. It is a no-cost benefit of the Red Hat Developer program that includes access to Red Hat Enterprise Linux and other Red Hat products for development use. It is an offering designed for individual developers. If you are using Podman Desktop, you can get a subscription in just a couple of clicks, as described in the following article.

Best practices for tagging and versioning images for clients

As outlined previously, building and publishing container images is an integral part of updating image mode for RHEL hosts. For automation and CI/CD pipelines, we recommend making use of digests to be intentional and explicit about which specific image version is used during the build. But how should the produced images be tagged and versioned?

When it comes to publishing and releasing our own images, we need to make sure that clients can easily consume them. Choosing the right tagging and versioning scheme for our images will pay off in the long term, as it affects many aspects of managing workloads. Semantic versioning is an expressive strategy for tagging images. It acts like a contract with consumers and uses the format X.Y.Z, where incrementing each component has a very specific (semantic) meaning:

- X: A major version bump with backwards incompatible changes.

- Y: A minor version bump with new functionality and backwards compatible changes.

- Z: A patch version bump with backwards compatible bug fixes and no new functionality.

Using semantic versioning has a number of advantages over less expressive versioning schemes, such as relying on the latest tag. First, it is obvious which version of a given image is used. Second, consumers of the image can pin to major and/or minor versions. For instance, using image:2.3 will pin to version 2.3 and consume all patch updates (e.g., 2.3.1, 2.3.2, etc.). Using image:2 will pin to major version 2, including all minor and patch versions (e.g., 2.1, 2.2, etc.). Using image:2.3.1 would pin to this exact version and not include future patch versions. A less expressive tag such as latest could include any version and does not necessarily give consumers any guarantees of stability and compatibility.
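The pinning behavior can be sketched in a few lines. The function names below are hypothetical: `floating_tags` lists the tags a release pipeline would publish for one X.Y.Z version, and `resolve` shows which published version a consumer ends up with for a given pin, assuming the floating tags always point at the newest matching release.

```python
def floating_tags(version: str) -> list[str]:
    """Return the tags to publish for an X.Y.Z release, most specific first."""
    major, minor, patch = version.split(".")
    for part in (major, minor, patch):
        if not part.isdigit():
            raise ValueError(f"not a numeric X.Y.Z version: {version!r}")
    return [f"{major}.{minor}.{patch}", f"{major}.{minor}", major]


def resolve(pin: str, published: list[str]) -> str:
    """Return the newest published X.Y.Z version that matches the pin."""
    prefix = tuple(int(p) for p in pin.split("."))
    candidates = [tuple(int(p) for p in v.split(".")) for v in published]
    matching = [c for c in candidates if c[: len(prefix)] == prefix]
    if not matching:
        raise LookupError(f"no published version matches {pin!r}")
    # Tuples compare numerically, so 2.10.0 correctly sorts after 2.9.0.
    return ".".join(str(p) for p in max(matching))


if __name__ == "__main__":
    published = ["2.2.9", "2.3.1", "2.3.2", "3.0.0"]
    print(floating_tags("2.3.2"))
    for pin in ("2", "2.3", "2.3.1"):
        print(pin, "->", resolve(pin, published))
```

Comparing versions as integer tuples rather than strings avoids the classic pitfall where "2.10.0" sorts before "2.9.0".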

Building disk images

At this point, we have set up a pipeline for managing dependencies and building bootc images, which may have already been pushed to a container registry. The next step in the pipeline is to convert the bootc container image into a so-called disk image that we can use to bootstrap and provision virtual or physical machines in a target environment. The target environment could really be anything, from public cloud, to on-prem, to some far edge deployments somewhere in the Arctic.

Converting a bootc container image to a disk image can easily be automated with the bootc-image-builder (BIB) tool. BIB itself is shipped in the form of a container image, so it fits well into a CI/CD pipeline. It supports the majority of disk image formats, including AMI, qcow2, VMDK, raw, vhd, anaconda-iso, and more. BIB has a feature-rich configuration that allows for customizing the disk image further by adding users, SSH keys, and kernel arguments, and by managing partitions. For more details on the capabilities provided by BIB, refer to the Red Hat documentation and the upstream repository.
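As an illustration, the sketch below assembles a BIB invocation modeled on the example in the upstream repository's README. It assumes the upstream builder image (`quay.io/centos-bootc/bootc-image-builder:latest`), a hypothetical bootc image name, and that the bootc image is already present in the root user's local container storage. Check the BIB documentation for the options that apply to your version.

```python
import shlex

# Upstream builder image; RHEL users would typically use the builder image
# from the Red Hat registry instead (assumption, see the Red Hat docs).
BUILDER = "quay.io/centos-bootc/bootc-image-builder:latest"

# Type names corresponding to the formats listed above.
KNOWN_TYPES = {"ami", "qcow2", "vmdk", "raw", "vhd", "anaconda-iso"}


def bib_command(
    image: str,
    image_type: str = "qcow2",
    output_dir: str = "./output",
    builder: str = BUILDER,
) -> list[str]:
    """Assemble a 'podman run' command that converts a bootc image to a disk image."""
    if image_type not in KNOWN_TYPES:
        raise ValueError(f"unexpected disk image type: {image_type!r}")
    return [
        "sudo", "podman", "run", "--rm", "--privileged",
        "--security-opt", "label=type:unconfined_t",
        # Disk images are written to this directory on the host.
        "-v", f"{output_dir}:/output",
        # Gives the builder access to images in local container storage.
        "-v", "/var/lib/containers/storage:/var/lib/containers/storage",
        builder,
        "--type", image_type,
        image,
    ]


if __name__ == "__main__":
    # Hypothetical image name; prints the command instead of running it.
    print(shlex.join(bib_command("quay.io/example/my-bootc:1.0")))
```

A customization file for users, SSH keys, or kernel arguments can be mounted into the builder container and passed with `--config`, as described in the BIB documentation.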

Once the disk images have been built, they can be pushed to so-called object stores to accomplish two key goals:

- Storage of the disk images.

- Deployment on the desired machines.

The target environment and use case usually determine which kinds of stores can be used. If the target environment is AWS, Amazon S3 is an ideal object storage solution.

Summary

In this article, we outlined how to set up a CI/CD pipeline for image mode for RHEL. The choice of tools and the number of alternative frameworks can be overwhelming at times, so we highlighted the most prominent tools in use. We further highlighted the importance of referencing parent images by digest and the critical role of using a meaningful tagging and versioning scheme, such as semantic versioning.

In a future article, we will dive deeper into how we can extend the pipeline with test cases to build a comprehensive, production-ready, and fully automated pipeline.

Last updated: January 13, 2025