Image mode for Red Hat Enterprise Linux (RHEL) is designed to simplify deploying and maintaining operating systems (OS). Effectively, we use the same tools and patterns that Linux containers made popular on the application side of the house to manage complete operating system images. If you’re into containers, immutable infrastructure, CI/CD, and GitOps-driven environments, this technology is a natural fit. We’d even argue that if you manage a very conservative "ITIL-style" environment, you’re going to love this too.

Fun fact: this technology grew out of OpenShift, where Red Hat Enterprise Linux CoreOS is managed by the cluster. A container-native experience for managing and modifying cluster nodes is not only a perfect fit there, it also works incredibly well for workloads running on Red Hat OpenShift Virtualization. We extended the idea to Linux systems running outside the control of a cluster, and so far the results have been incredible. In this article, we look at four use cases where image mode can streamline your OS and its operations.

Single-application systems

The first use case we’ll take a closer look at is single-application systems, depicted in Figure 1.

In this pattern, you run a single application on a single operating system, and it is one of the most common deployment patterns we see in enterprise IT today. Those of us who lived through the meteoric rise of virtualization saw firsthand the shift of multi-tenancy from the OS level to the hypervisor level. This style of isolation brought countless benefits that are still desirable today, hence its dominance in both the data center and the cloud.

Now, consider the primary management touchpoints here. At a high level, we have the virtualization, operating system, and application levels. Sure, there’s still networking, security, and so on, but let’s stick to these for the time being. Now imagine an appliance experience for these systems, where we collapse the OS and application into a single unit so that we can cleanly test every update, make Patch Tuesday disruptions a thing of the past, and *simplify* how these systems are built and run.

It turns out there are many other benefits as well. Once we move the OS to a bootable container, we gain consistency and better reproducibility. Sure, there are other ways to achieve this with Linux systems, but historically they have been much slower to build, have had a higher barrier to entry, or both. The consistency also makes headaches around system drift a thing of the past. Perhaps the best feature is the A/B update model: if we ever do run into a problem, we can roll back to the previous image, with no snapshotting or storage trickery needed for the OS. Figures 2 and 3, illustrated by Kelsea Mann, showcase this process.
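To make the A/B idea concrete, here is a toy Python model of it. The class `ABHost` and its methods are hypothetical names for illustration only; they are not the bootc API or any Red Hat tool. The sketch assumes a host keeps at most one staged image and one previous image, and it applies a rollback immediately, whereas a real system switches images at the next boot.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ABHost:
    """Toy model of an A/B image-based host (hypothetical, for illustration only)."""

    booted: str                     # image currently running
    staged: Optional[str] = None    # new image written alongside, waiting for a reboot
    previous: Optional[str] = None  # last known-good image, kept as the rollback target

    def stage(self, image: str) -> None:
        # Write the update next to the running image; the running system is untouched.
        self.staged = image

    def reboot(self) -> None:
        # Booting into the staged image keeps the old one around for rollback.
        if self.staged is not None:
            self.previous, self.booted, self.staged = self.booted, self.staged, None

    def roll_back(self) -> None:
        # Swap back to the previous image.
        # Simplification: a real host switches images on its next boot.
        if self.previous is None:
            raise RuntimeError("no previous image to roll back to")
        self.booted, self.previous = self.previous, self.booted
```

For example, a host booted on `os:v1` that stages `os:v2` and reboots ends up running `os:v2` with `os:v1` kept as `previous`; calling `roll_back()` returns it to `os:v1`. Because the old image is never overwritten in place in this model, rolling back only means pointing the host at it again.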

Potential workload targets: Application servers (JBoss EAP, Tomcat, Node.js), web servers and proxies (nginx, httpd, HAProxy, Caddy, lighttpd, etc.), databases, commercial off-the-shelf (COTS) apps, etc.

If this list of workloads struck a nerve and you thought, "Hey, it’s 2024, those applications should be running in containers!", we agree. But remember that massive numbers of systems and workloads still being maintained predate modern container infrastructure. Image mode can serve as the perfect entry point for moving these systems into a container workflow.

Container hosts

Another use case is with container hosts (Figure 4).

One of the most profound concepts from Linux containers is the ability to decouple applications and their dependencies from the underlying operating system. This model allows each to be maintained separately, often by different teams, and reduces, or even completely eliminates, conflicts between the two. It has proven incredibly successful and is often viewed as the evolution of the virtual machine.

For workloads that are already containerized, image mode for RHEL can help users gain a number of operational efficiencies, including:

- Common infrastructure across apps and OS: Use any OCI container engine, registry, and build environment to create both OS and application containers. That’s right, no new infrastructure or tools are necessary for environments already using containers.

- Common operational process: Perhaps more important are the organizational and cultural implications of bringing the app and infrastructure sides of the house together operationally. Containers follow a simple build, ship, run model, which we can now apply across the IT landscape.

- Increased flexibility: Compared to previous container-optimized operating systems, like CoreOS Container Linux, we can now more easily add drivers and software to the OS when required. The result is an immutable OS with transactional updates that still includes exactly the software your organization needs to meet its operational and security requirements.

Potential workload targets: Any underlying container infrastructure (Podman hosts, OpenShift/Kubernetes nodes), CI infrastructure (GitHub runners, Jenkins nodes, etc.)

Edge appliances

"The edge" encompasses a vast array of workload types and environmental challenges. One commonality is that the bells and whistles found in a "lights-out" data center don’t exist here; in the worst case, we may not even have reliable electricity or connectivity. We also typically don’t have enough infrastructure to take advantage of the types of APIs and services available in cloud environments. (If you do have the hardware footprint to support it, you should look at OpenShift’s edge deployments, including MicroShift.)

For edge environments with a minimal footprint, combining the previous two use cases solves many of the infrastructure and operational challenges we see in this space. Image mode for RHEL makes it easy to create and update "appliance-style" systems running traditional applications, containerized applications, or both at the same time. Because our OS images come from a container registry, it’s easy to scale out deployments that are consistent and repeatable (Figure 5).

By default, image mode allows updates to happen automatically based on registry tags. Imagine a pipeline where each update passes rigorous CI testing and, upon passing, is promoted in the container registry. If desired, entire fleets of systems can then automatically download and apply this update, giving us a completely hands-free management experience. It’s also easy to have nodes spread their updates over a chosen window of time so the registry doesn’t hit unmanageable peaks.
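As a sketch of how a fleet might spread its updates, the Python below assigns each node a deterministic delay inside a chosen window, based on a hash of its node ID. The functions `update_offset` and `scheduled_update` are hypothetical and are not part of image mode; they only illustrate staggering registry pulls after a tag is promoted.

```python
import hashlib
from datetime import datetime, timedelta


def update_offset(node_id: str, window: timedelta) -> timedelta:
    """Hypothetical helper: a deterministic per-node delay in [0, window)."""
    window_seconds = int(window.total_seconds())
    if window_seconds <= 0:
        raise ValueError("window must be at least one second")
    digest = hashlib.sha256(node_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash onto the window; a given node always gets the same slot.
    return timedelta(seconds=int.from_bytes(digest[:8], "big") % window_seconds)


def scheduled_update(node_id: str, promoted_at: datetime, window: timedelta) -> datetime:
    # Each node picks up the newly promoted tag at its own offset after promotion.
    return promoted_at + update_offset(node_id, window)
```

Hashing, rather than drawing a fresh random delay each time, keeps every node in the same slot from one rollout to the next, which makes rollout timing predictable. On a real host, systemd timers offer a comparable knob in `RandomizedDelaySec=`.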

Users in this space also benefit from image-based updates that provide a familiar A/B approach to updating edge servers and devices. Rollbacks provide peace of mind for remote environments that lack personnel to assist when needed. Support for air-gapped and disconnected, intermittent, and limited (DIL) environments is enhanced by our automated installer and the ability to perform offline updates with container images.

Potential workload targets: Point-of-sale systems, kiosks, gateways, etc.

AI/ML stacks

The fourth use case addresses AI/ML stacks and workloads (see Figure 6).

These workloads commonly require carefully versioned dependencies that span all layers of the stack, and this is an incredibly fast-moving and dynamic space. While a lot of this complexity can be managed with a combination of traditional container images and an orchestration platform, image mode helps by curating the delivery of the whole stack. In fact, this is exactly how Red Hat delivers Red Hat Enterprise Linux AI: we have a series of containers for frameworks, models, and InstructLab, all presented in a final, bootable container image with the corresponding accelerator stack. Not only is this a good model for generative AI (gen AI) stacks, it also works well for predictive and inference-based workloads.

Image mode is powerful for managing these stacks across their life cycle. Yes, image mode gives us a quick win on "hello world" style tests, but the value goes far beyond that. Versioning components in AI/ML stacks can be complex because they change at very different rates. Higher-level frameworks and models evolve quickly and often need to change at a much faster pace than many production environments are used to handling. For these fast-moving layers, we can always create new *complete stack* images, but users may also want to take advantage of application containers and update select components independently of the rest of the image at runtime. For slower-moving parts of the stack that are more deeply tied to the kernel and accelerators, we can carefully version them with the operating system image. This flexibility allows users to cope with highly dynamic environments and keep up with changing requirements.

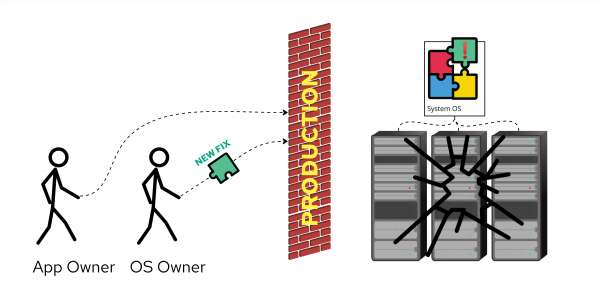

We also gain further operational stability by assembling these pieces at build time, rather than patching in production. This model helps handle multi-vendor RPM repositories whose releases lag behind one another. The scenario where a new kernel version ships ahead of a matching third-party kernel module or driver can be caught every time. Users no longer risk an RPM dependency problem or, worse, rebooting a production system only to discover that a module no longer loads and the system is in a degraded state. The beauty of image mode is that we handle all of this during the container build process, which lets us catch misalignments before they surface in production.
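The build-time check described above can be sketched as a simple gate that fails the build when any third-party kernel module package was built for a different kernel than the one going into the image. Everything here is hypothetical: the function names, the package names and version strings in the example, and how the kernel and module versions are collected (for instance, from package metadata inside the image being built). Exact string matching is also a simplification, since some modules remain compatible across kernel updates.

```python
from __future__ import annotations


def misaligned_kmods(kernel: str, kmods: dict[str, str]) -> list[str]:
    """Return the kernel-module packages not built for `kernel`, sorted by name.

    `kmods` maps a (hypothetical) module package name to the kernel version it targets.
    """
    return sorted(name for name, built_for in kmods.items() if built_for != kernel)


def build_gate(kernel: str, kmods: dict[str, str]) -> None:
    """Fail the (hypothetical) image build step instead of shipping a mismatched image."""
    mismatched = misaligned_kmods(kernel, kmods)
    if mismatched:
        raise RuntimeError(
            f"kernel {kernel} has no matching module build for: {', '.join(mismatched)}"
        )
```

Run as a step in the image build, a check like this stops the pipeline on a mismatch instead of letting it surface as a degraded system after a production reboot.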

Potential workload targets: Nodes for both training and inferencing, e.g., RHEL AI.

Conclusion

These use cases build upon one another, but they are by no means the only areas where image mode for RHEL is valuable. Any use case where the exact components of a system can be preassembled outside of production is a potential fit. We invite you to explore the possibilities with us: start experimenting with image mode and see what bootable containers can do for your teams and organization. As a next step, get hands-on with our intro lab, then move on to our day-2 lab to go deeper.