Welcome back to part two of this series. In the previous article, we defined edge and looked at use cases and constraints. Now let’s talk about Red Hat Edge platforms and how they can be used to address them.

Platforms overview

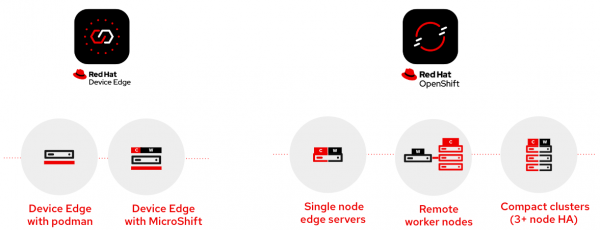

No one solution fits all needs. Red Hat offers a wide range of platforms to address different requirements and constraints. We distinguish between two main categories: Red Hat Device Edge and Red Hat OpenShift. Each category supports several deployment topologies, so you can match the platform to your requirements.

Figure 1 depicts the options, sorted left to right by growing sizes, hardware, and resource requirements.

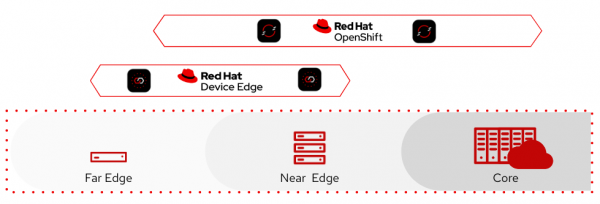

Please note: it is not “either/or”–a mixture of the two Red Hat products can help address many of these requirements and constraints. There is intentional overlap between them to allow you to start on one side and grow into the other. For example, you can start on the far edge device side and connect those to the cloud, or reach out from the hybrid cloud to edge (Figure 2).

Red Hat Device Edge

Red Hat Device Edge is targeted to small form factor, field-deployed edge devices, meaning that it is designed to operate at far edge deployments with very limited hardware and software resources, in hard to reach locations, and with only intermittent connectivity and utilities. It allows solution builders to take full control of hardware, operating systems, and application platforms to create a tailor-made, highly optimized, and specialized solution that solves their specific needs using their specific methods. After all, it’s open source!

Red Hat Device Edge offers both lightweight Kubernetes using Red Hat build of MicroShift (an enterprise-ready distribution of the Red Hat-led open source community project) and Red Hat Enterprise Linux (RHEL) to meet the needs of field-deployed devices. Through this single platform, users choose the capabilities that meet their needs–they can deploy the operating system (OS) and later add Kubernetes orchestration using MicroShift, or deploy both the OS and MicroShift at the same time.

The main features of Red Hat Device Edge are:

- Image generation. This allows IT to build an operating system that contains exactly the bits and bytes needed, but nothing more. That image is built once and then distributed to all the edge locations, which reduces time spent deploying and minimizes errors inherent in manual workflows.

- Transactional updates with intelligent rollbacks. Updates of the operating system and workload can be staged on the system and applied by rebooting into the new version. The update is therefore atomic: it cannot leave the system in a half-applied state. Compare this, for example, to a traditional yum update, which might fail partway through applying patches to the running system. Health checks (for the operating system and the applications) validate that the update succeeded and automatically revert to the last working state in case of errors.

- Efficient over-the-air updates. New updates require only the delta changes to be transferred over the network, minimizing the amount of data that needs to be transferred to hundreds or thousands of remote nodes.
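
The staged update and rollback behavior described above can be sketched as a toy model. This is only an illustration of the idea, not the real ostree tooling: the `Device` class, its `stage` and `reboot` methods, and the health-check callback are all hypothetical names made up for this sketch.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Device:
    """Toy model of a device that switches between whole OS versions."""
    booted: str                           # version currently running
    staged: Optional[str] = None          # version downloaded but not yet active
    history: List[str] = field(default_factory=list)

    def stage(self, version: str) -> None:
        # Staging only records the new version; the running system is untouched.
        self.staged = version

    def reboot(self, health_check: Callable[[str], bool]) -> str:
        """Boot the staged version and fall back if the health check fails."""
        if self.staged is None:
            return self.booted
        previous, candidate = self.booted, self.staged
        self.staged = None
        self.booted = candidate
        if health_check(candidate):
            self.history.append(f"updated {previous} -> {candidate}")
        else:
            # Revert to the last working version as a whole.
            self.booted = previous
            self.history.append(f"rolled back {candidate} -> {previous}")
        return self.booted


device = Device(booted="1.0")
device.stage("1.1")
device.reboot(lambda version: False)   # simulate a failing health check
print(device.booted, device.history)
```

The point of the sketch is that the device is always running either the old version or the new one, never a mixture, and a failed health check returns it to the old version without manual intervention.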

Red Hat Device Edge workloads can be traditional RPM-based applications, virtual machines using KVM, containers, or Kubernetes-based. It comes in two variants to run containerized workloads: with Podman or with the Red Hat build of MicroShift.

Red Hat Device Edge with Podman

Using Red Hat Device Edge with Podman is the smallest possible deployment, with minimum system requirements of 1 core, 1.5 GiB of RAM, and 10 GiB of disk. Workloads can be RPM-based, virtual machines (KVM), or containerized. Basic container orchestration is done using systemd (e.g., starting containers in the right sequence, applying updates, and ensuring containers keep running).

Using RHEL image builder and ostree-based deployments, everything can be included in a single image that is then distributed to the edge locations. This works really well for airgapped, disconnected, or offline sites. For example, the image can be installed on a USB stick, which is brought to the device and then booted or installed within minutes. The same goes for updates: you can put the new ostree commit onto the USB stick.

An additional benefit of RHEL image builder is that resolving and downloading dependencies happens just once, during build time at the central location instead of in every single edge installation. That saves bandwidth and time/CPU cycles during deployment at the edge site.

Red Hat Device Edge with Podman is best used for rather static workloads with only a couple of containers (rule of thumb: 1-5 containers) and updates that can tolerate workload interruption. You can do automatic updates with Podman, but there will be a brief interruption when the old pod is stopped and the new pod is started. It fits when no complex container orchestration is needed (e.g., no dynamic adding of components, no rolling updates, no pod scaling, no workload isolation).

For example, Red Hat Device Edge with Podman can help facilitate Passenger Information Systems in rail cars (transportation application), software defined control systems (industrial application), and digital signage that may change regularly, with or without refreshed data (retail application).

To manage this kind of configuration, we recommend Red Hat Ansible Automation Platform.

Red Hat Device Edge with Red Hat build of MicroShift

Either at deployment or later, users can add Kubernetes orchestration by augmenting Red Hat Device Edge with Red Hat build of MicroShift. The minimum system requirements are 2 cores, 2 GB of RAM, and 10 GB of disk. It supports the full Kubernetes API, but not the additional features provided by OpenShift, such as the developer tooling. The goal is to support workload portability. To accomplish this, the Red Hat build of MicroShift design starts with a clean slate, then adds only the components needed to achieve the goal, such as etcd, container networking, and storage. This results in a different experience compared to OpenShift.
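
As a quick illustration of how the two sets of minimum requirements compare, the following sketch checks a device's hardware against the figures quoted in this article. The `Minimums` class and `variants_that_fit` function are hypothetical helpers, and the sketch treats GiB and GB as the same unit for simplicity. These are platform minimums only; the workload itself needs resources on top.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Minimums:
    cores: int
    ram_gb: float
    disk_gb: float


# Figures as quoted in this article (GiB/GB difference ignored in this sketch).
MINIMUMS = {
    "podman": Minimums(cores=1, ram_gb=1.5, disk_gb=10),
    "microshift": Minimums(cores=2, ram_gb=2, disk_gb=10),
}


def variants_that_fit(cores: int, ram_gb: float, disk_gb: float) -> List[str]:
    """Return the Device Edge variants whose minimums this hardware meets."""
    return [
        name
        for name, m in MINIMUMS.items()
        if cores >= m.cores and ram_gb >= m.ram_gb and disk_gb >= m.disk_gb
    ]


print(variants_that_fit(cores=1, ram_gb=2, disk_gb=16))
print(variants_that_fit(cores=4, ram_gb=8, disk_gb=32))
```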

Also worth mentioning is that the Red Hat build of MicroShift, in contrast to OpenShift, does not actively manage the underlying OS and is not as tightly coupled with it. While this provides more degrees of freedom for tailor-made applications, it places additional responsibility on the solution builder to take care of the operating system.

Red Hat Device Edge with Red Hat build of MicroShift is ideal when a modern microservices-based solution needs to be scaled down to a small form-factor, field-deployed edge device while keeping Kubernetes-based management capabilities (the Kubernetes API and tools built on it, such as Helm). We also recommend it for container workloads that must run at edge locations within the minimum size requirements, microservices-based solutions (rule of thumb: more than a handful of containers), dynamic workloads where users frequently add components or applications, rolling updates of workloads, and cases where full control of the underlying OS is needed.

For example, it helps facilitate IoT Gateway/Condition Monitoring (industrial application), Point of Sale (POS)/Point of Information Systems and loss prevention using machine vision (retail application), and quickly changing workload profiles during/between missions of forward deployed units, e.g., using different machine learning algorithms/models (defense application).

To manage these configurations, we recommend Red Hat Ansible Automation Platform and Red Hat Advanced Cluster Management for Kubernetes.

When to use Kubernetes

Red Hat Device Edge is one platform, with two options for managing containers: Podman and MicroShift. This raises the question: “When do I need MicroShift/Kubernetes?”

Red Hat build of MicroShift adds Kubernetes container orchestration capabilities to Red Hat Device Edge. So should you use Kubernetes? It depends on a couple of factors. Here are some guiding questions, with advice depending on the answer.

What's the architecture of your solution?

With a rather monolithic application, made of only a couple of containers, Podman with systemd orchestration is probably good enough. Rule of thumb: if only a handful of containers are required, use Podman. For microservices/event-driven architectures with more than 10 microservices in containers, Kubernetes usually is the right choice.

What type of container scheduling do you need?

Keep in mind that Kubernetes was invented to schedule large numbers of containers across large numbers of nodes. If you have only 3 containers running on a single node, you probably don't need Kubernetes. Critical applications, however, require container management for resilience and self-healing, so that the application stays available and can scale with the traffic.

How mature is your development organization with containers and Kubernetes?

If you have just started the journey, try to avoid the additional complexity of Kubernetes. You can step up your game later. Also, do you really need a microservices architecture? You trade lower development complexity for higher runtime complexity. So maybe running just 2-3 monolithic containers, but being able to roll out updates every three weeks, is a better fit.

Do you need to scale from very small via intermediate to large scale solutions?

Do you run the same containers at the edge and also in the core? Then Kubernetes is a must-have, because that is the standard API for declarative deployments in all the different scenarios. How dynamic is the workload? Do you want to add or let your customers add additional components that rely on base services during solution lifetime? That is an indicator in favor of Kubernetes.

How frequently do you update your workload (e.g., for new features and functionality)?

Can you do a rolling update without interruption? (The workload must support this!) If you are in the cloud, using a dynamic 3-week to 3-month cadence, Kubernetes with rolling updates is a must-have.

How frequently do you update the base system (e.g., OS security patches)?

If you want to update as little as possible, Red Hat Device Edge with Podman best supports long-term stability. Keep in mind that you still should apply security patches on a regular basis, for example monthly or quarterly. Don't rely on the assumption that your network is secure.
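
The guiding questions above can be condensed into a small decision helper. This is a rough sketch of the rules of thumb in this article, not an official sizing tool: the `Answers` fields, the `suggest` function, and the threshold constant are names chosen for illustration, and real decisions will weigh more factors than these.

```python
from dataclasses import dataclass
from typing import List, Tuple

HANDFUL = 5  # the article's rule of thumb: 1-5 containers suit Podman


@dataclass
class Answers:
    containers: int
    needs_rolling_updates: bool
    same_containers_in_core: bool
    components_added_over_lifetime: bool
    team_new_to_kubernetes: bool


def suggest(a: Answers) -> Tuple[str, List[str]]:
    """Return a suggested container runtime and the reasons behind it."""
    reasons: List[str] = []
    if a.containers > HANDFUL:
        reasons.append("more than a handful of containers")
    if a.needs_rolling_updates:
        reasons.append("rolling updates without interruption")
    if a.same_containers_in_core:
        reasons.append("same containers at the edge and in the core")
    if a.components_added_over_lifetime:
        reasons.append("components added during solution lifetime")
    if not reasons:
        return "podman", ["small, static workload"]
    if a.team_new_to_kubernetes:
        # Not a blocker, but a cost to plan for.
        reasons.append("caution: team is new to Kubernetes")
    return "microshift", reasons


print(suggest(Answers(3, False, False, False, True)))
print(suggest(Answers(12, True, False, False, False)))
```

Any single indicator in favor of Kubernetes tips this sketch toward MicroShift. In practice you may want to weight the answers, for example by letting team maturity defer the move to Kubernetes.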

Conclusion

This article covered Red Hat Device Edge in detail. It is the edge solution for small form factor deployments at the far edge. It provides full control for tailor-made solutions, with optional Kubernetes container orchestration capabilities when required.

In the next article, we will explore OpenShift topologies.

Read Part 3: Red Hat OpenShift's flexibility: Our topologies for your topographies

Last updated: September 12, 2024