No matter how much you know about Apache Camel, Camel K is designed to simplify how you connect systems in Kubernetes and Red Hat OpenShift, and it comes with cloud-native Kafka integration. This article helps you discover the power and simplicity of Camel K and opportunities for using it in ways you might not have previously considered.

About Camel K: Camel K lives in Apache Camel, the most popular open source community project aimed at solving all things integration. Camel K simplifies working with Kubernetes environments so you can get your integrations up and running in a container quickly. See the end of this article for a list of resources for learning more about Camel K and the latest general availability (GA) release.

Challenges in cross-team collaboration

Teams in organizations frequently connect online to discuss their shared objectives and tasks. They also sometimes need to connect with other teams within the organization to resolve questions and concerns and agree on the right way forward. Figure 1 is an overview of this cross-functional collaboration between teams.

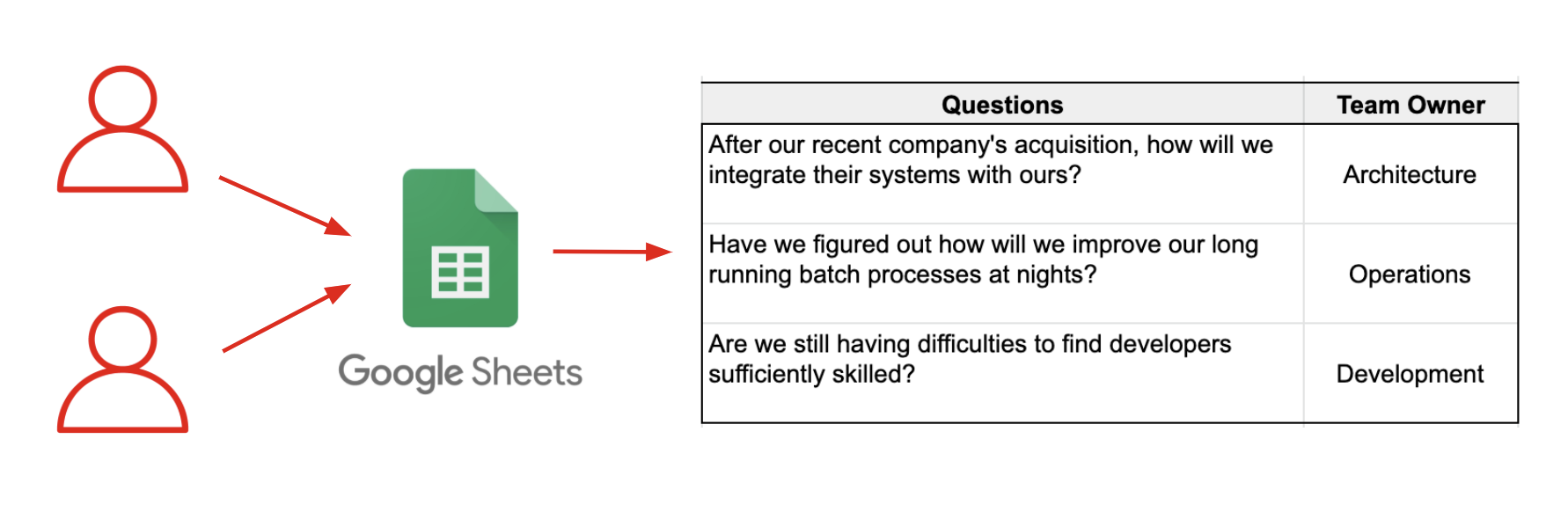

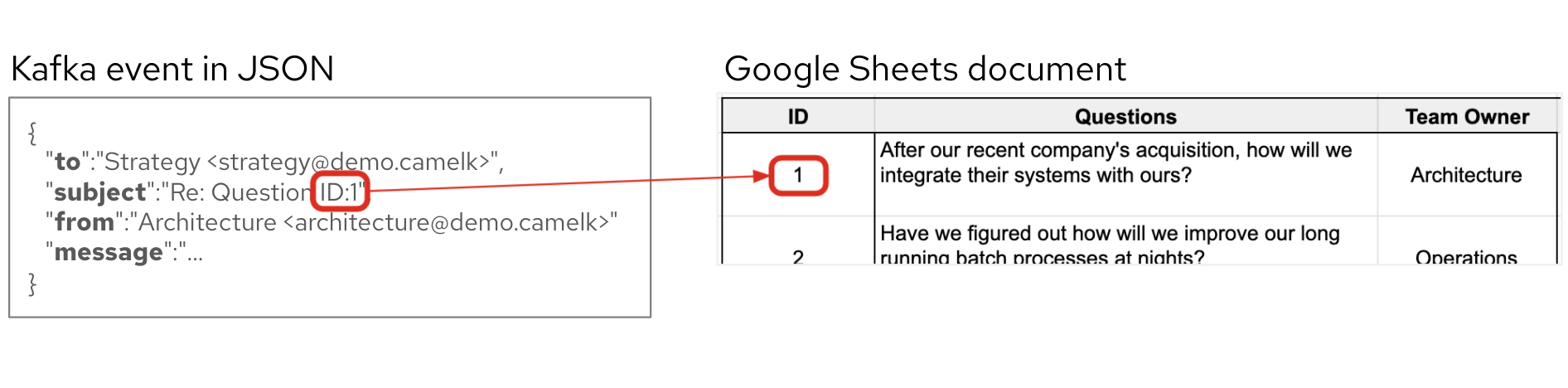

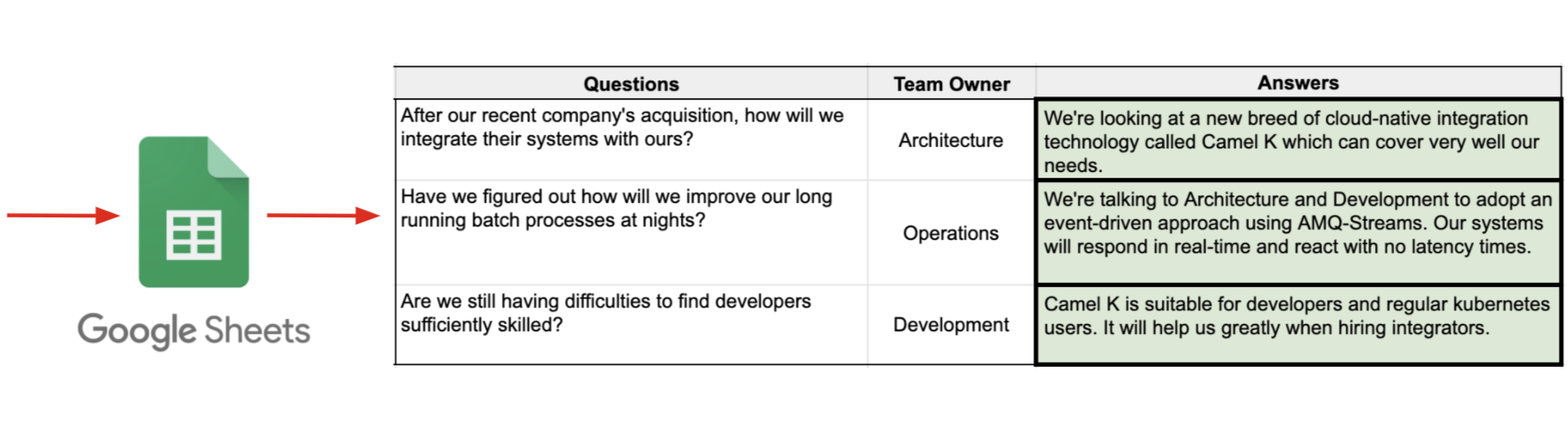

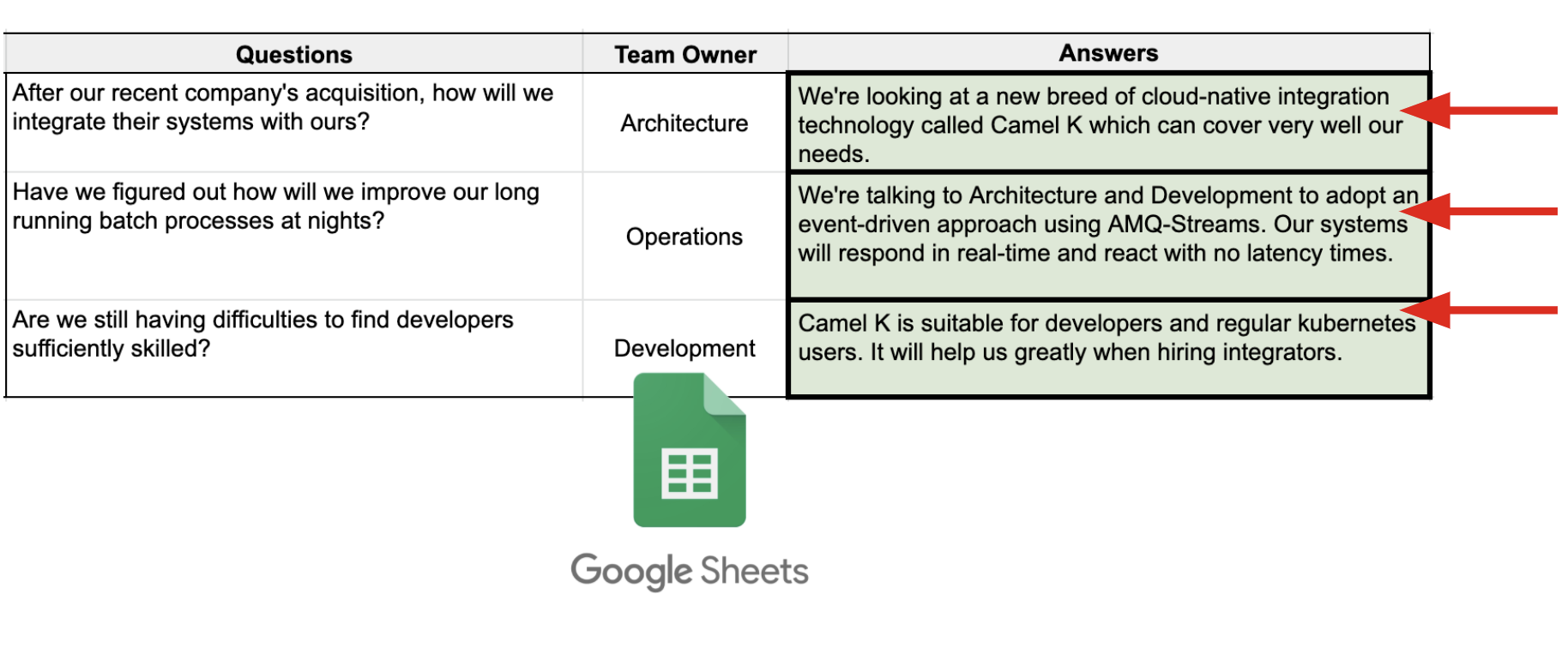

As a collaboration tool, teams frequently use Google spreadsheets to document open questions, as shown in Figure 2. Getting these questions answered requires finding the right individuals outside the team who can help complete the document. Contacting and communicating across teams is time-consuming and not always easy.

Automating cross-team interactions

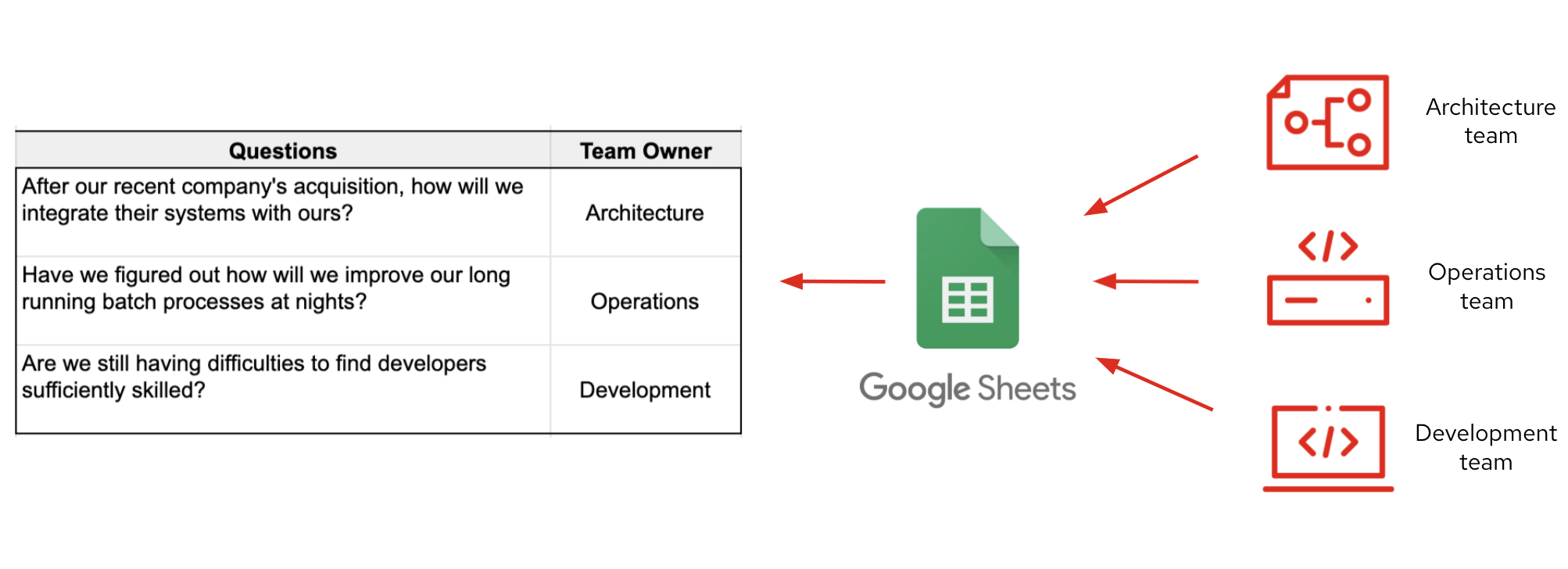

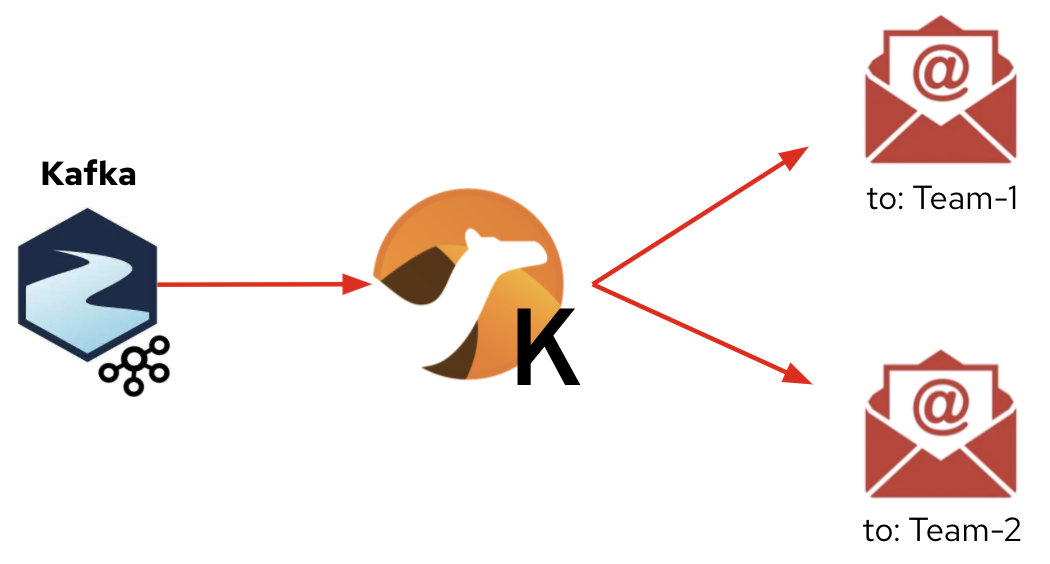

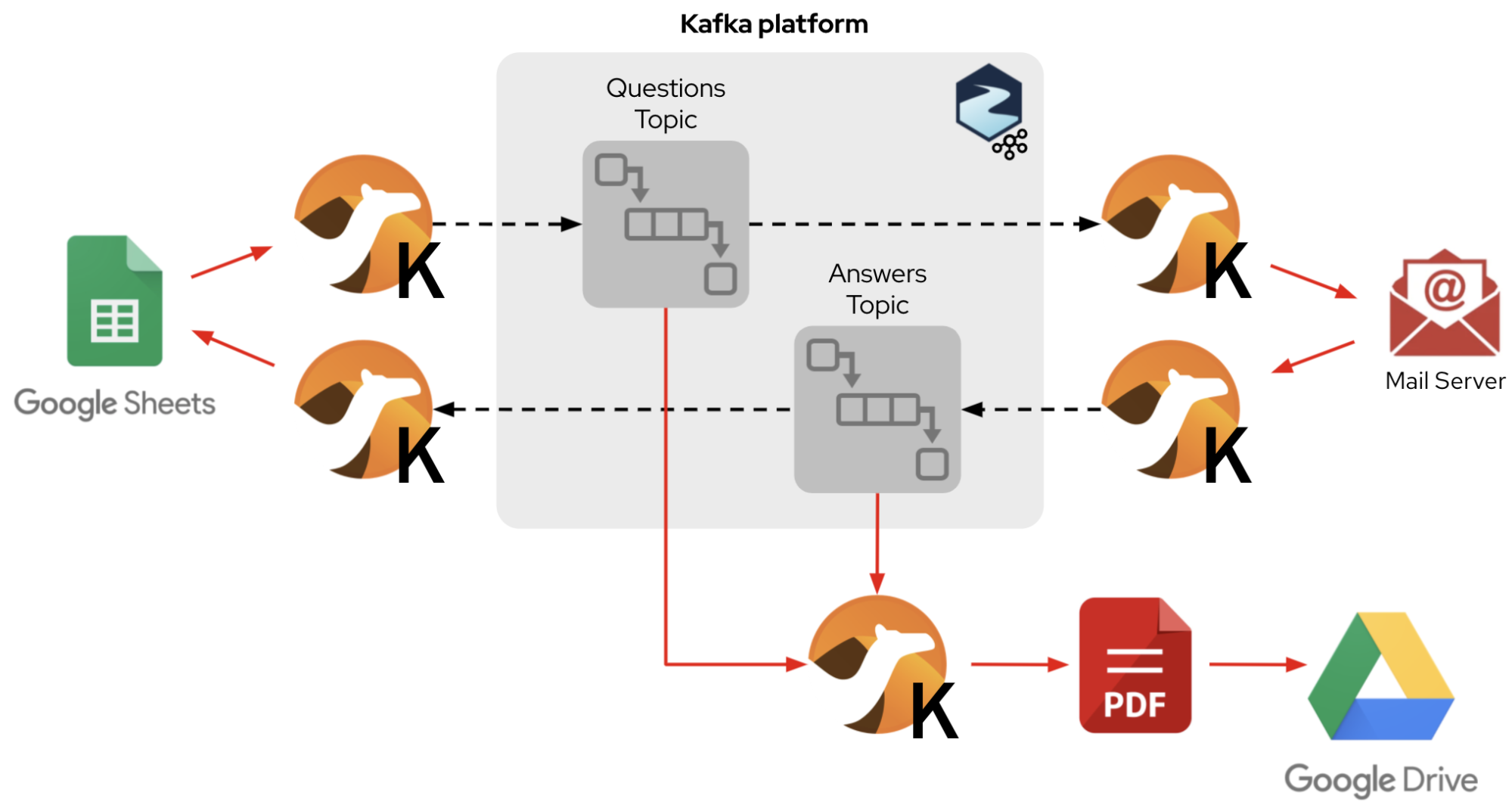

For our scenario, consider an organization that would like to automate the process of sending out questions for cross-team collaboration. The system would distribute the questions to the relevant teams and collect their responses back, as shown in Figure 3.

We can use Camel K and Kafka, running on a Kubernetes platform, to solve this scenario. Camel K provides great agility, rich connectivity, and mature building blocks to address common integration patterns. Kafka brings an event-based backbone and keeps a record of all the cross-team interactions.

Let's walk through the stages of the integration.

Stage 1: Data as a stream

Camel K introduces the concept of KameletBindings. In short, these are no-code configurable definitions that typically bind two Camel K connectors. We use them to open an integration path so that information flows from a source to a destination (the sink).

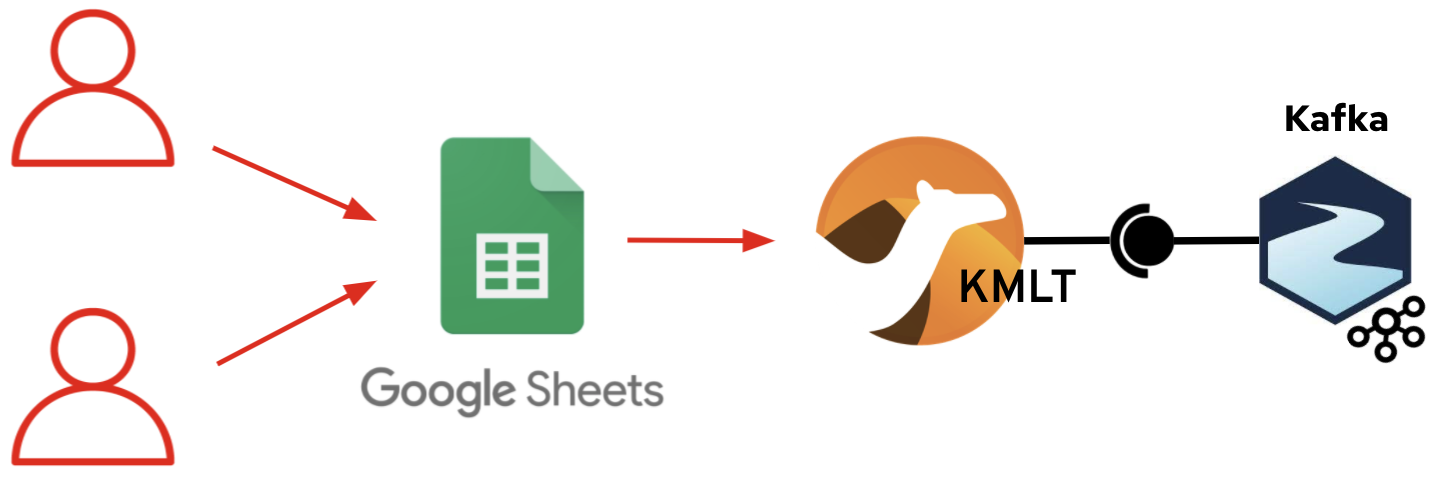

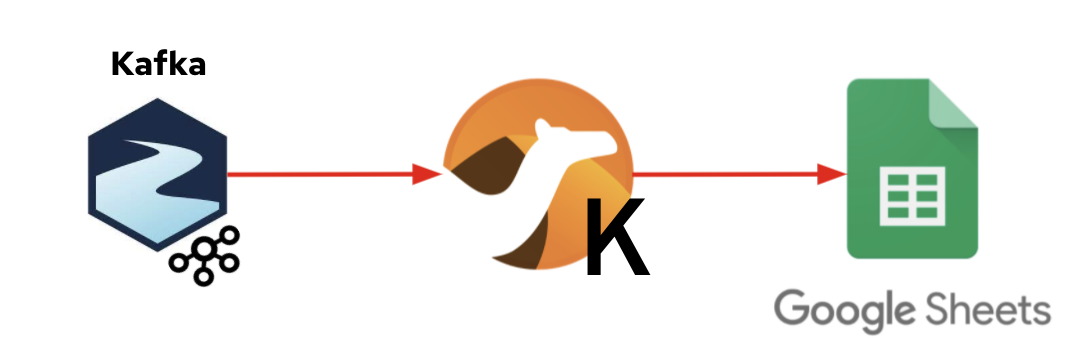

In our simulated scenario, any regular Kubernetes user can define a KameletBinding that includes two connectors and enables the data flow shown in Figure 4.

In this data flow, the KameletBinding captures and forwards the information in a Google Sheets document to Kafka. This binding only requires configuring a source and a sink in a YAML definition, as follows:

    apiVersion: camel.apache.org/v1alpha1
    kind: KameletBinding
    metadata:
      name: stage-1-sheets2kafka
      namespace: demo-camelk
    spec:
      source:
        ref:
          kind: Kamelet
          apiVersion: camel.apache.org/v1alpha1
          name: google-sheets-source
        properties:
          accessToken: "the-token"
          applicationName: "the-app-name"
          clientId: "the-client-id"
          clientSecret: "the-client-secret"
          index: "the-index"
          refreshToken: "the-refresh-token"
          spreadsheetId: "the-spreadsheet-id"
          range: "the-range"
          delay: 500000
      sink:
        ref:
          apiVersion: kafka.strimzi.io/v1beta1
          kind: KafkaTopic
          name: questions

The following command shows an example of how to create this Camel K integration using the Kubernetes (kubectl) or OpenShift (oc) client:

    oc apply -f kameletbindings/stage-1-sheets2kafka.yaml

When the KameletBinding is created via the web console or command-line interface (CLI), Camel K automatically triggers a build and deploys a container that immediately starts the data transfer from Google Sheets to the Kafka platform.

Stage 2: Data distribution

The questions in the spreadsheet have now been ingested into Kafka, one event per question. Our next mission is to deliver them to the designated teams via email, as illustrated in Figure 5.

Each Kafka message includes three pieces of information, as the following sample from the spreadsheet shows:

| 1 | After our recent company's acquisition, how will we integrate their systems with ours? | Architecture |

We apply the message router enterprise integration pattern (EIP). Note that we're using the third column in the spreadsheet ("Architecture") as the routing key to deliver each message to its designated team.

Because this integration requires routing logic, we implement it with Camel's domain-specific language (DSL) instead of defining a KameletBinding. Only one Camel K source file is required. Its core logic looks as follows:

Note: The team is extracted from the array’s position 2 using the syntax ${body[2]}.

    .choice()
        .when(simple("${body[2]} == 'Development'"))
            .setProperty("mail-to", constant("development@demo.camelk"))
            .to("direct:send-mail")
        .when(simple("${body[2]} == 'Architecture'"))
            .setProperty("mail-to", constant("architecture@demo.camelk"))
            .to("direct:send-mail")
        .when(simple("${body[2]} == 'Operations'"))
            .setProperty("mail-to", constant("operations@demo.camelk"))
            .to("direct:send-mail")
        .otherwise()
            .log("Message discarded: team is unknown.");

The Camel K code consumes events using the Kafka connector, applies the message routing logic shown, and then pushes the data via the mail component connected to the mail server.
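The routing decision itself can be sketched in plain Java, without Camel, to make the logic easy to test in isolation. This is only an illustration: the `TeamRouter` class and its `mailTo` method are hypothetical names, and we assume each event body is a three-element row whose third element (index 2) is the team, as in the sample above.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Plain-Java sketch of the message router decision (hypothetical helper, not part of the demo).
class TeamRouter {

    // Routing table: team name (third spreadsheet column) -> team mailbox.
    private static final Map<String, String> MAILBOXES = Map.of(
            "Development", "development@demo.camelk",
            "Architecture", "architecture@demo.camelk",
            "Operations", "operations@demo.camelk");

    // Returns the mailbox for a row, or empty when the team is missing or unknown
    // (the case the Camel route logs as "Message discarded").
    static Optional<String> mailTo(List<String> row) {
        if (row == null || row.size() < 3) {
            return Optional.empty();
        }
        String team = row.get(2);
        if (team == null) {
            return Optional.empty();
        }
        return Optional.ofNullable(MAILBOXES.get(team));
    }

    public static void main(String[] args) {
        List<String> row = List.of(
                "1", "How will we integrate their systems with ours?", "Architecture");
        System.out.println(mailTo(row).orElse("Message discarded: team is unknown."));
    }
}
```

A lookup table keeps the sketch short. The `choice()` block in the route expresses the same decision declaratively, which is easier to extend with per-team processing steps.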

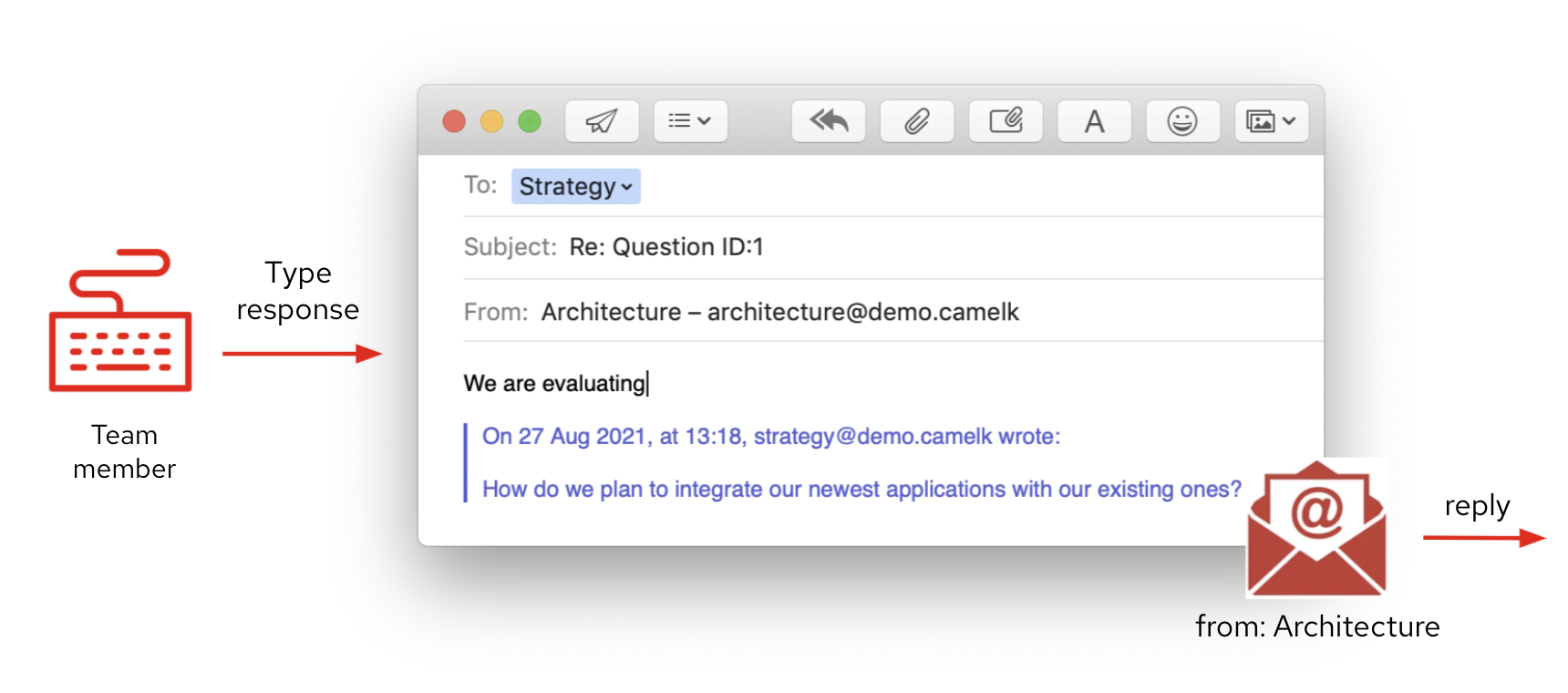

Each team receives an email in their inbox containing the question directed to them. Any member of that team can pick up the message and reply with an answer, as shown in Figure 6.

Running this Camel K component takes one command in the CLI. Here is an example of how to run it on Kubernetes:

    kamel run stage-2-kafka2mail -d camel-jackson

Stage 3: Data collection

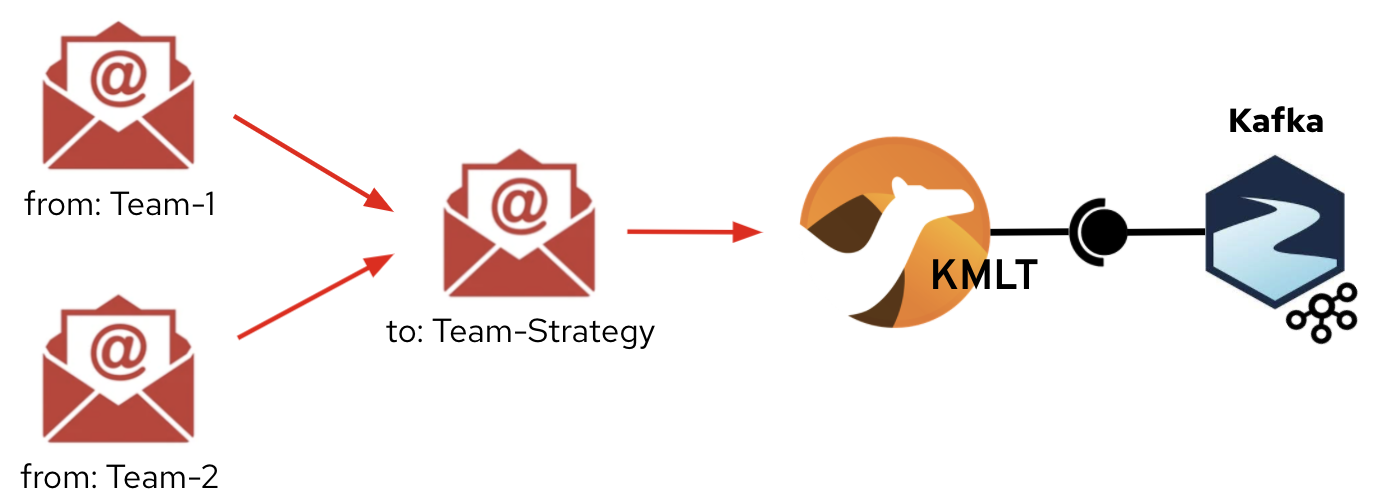

So far, we have successfully distributed the questions on behalf of the Strategy team. Teams answering the questions will direct their replies to this team's inbox. The next integration step in the chain (Figure 7) will channel email replies back to Kafka, thus enabling it to keep a history of events containing cross-team interactions.

Again, this piece does not require any coding or experience with Camel. To establish this data flow, we can define a KameletBinding as follows:

    apiVersion: camel.apache.org/v1alpha1
    kind: KameletBinding
    metadata:
      name: stage-3-mail2kafka
      namespace: demo-camelk
    spec:
      source:
        ref:
          kind: Kamelet
          apiVersion: camel.apache.org/v1alpha1
          name: mail-imap-insecure-source
        properties:
          host: "standalone.demo-mail.svc"
          port: "3143"
          username: "strategy"
          password: "demo"
      steps:
      - ref:
          kind: Kamelet
          apiVersion: camel.apache.org/v1alpha1
          name: mail-to-json-action
      sink:
        ref:
          apiVersion: kafka.strimzi.io/v1beta1
          kind: KafkaTopic
          name: answers

The following command shows an example of how to create this Camel K integration using oc. The same command would work with kubectl:

    oc apply -f kameletbindings/stage-3-mail2kafka.yaml

When the KameletBinding is created (via the web console or CLI), Camel K automatically triggers a build and deploys a container. The container immediately starts collecting email responses and pushing them to the Kafka platform.

Stage 4: Push to cloud service

The piece that closes the circle is setting up the data flow from Kafka to the Google Sheets document. It requires the necessary intelligence to correctly place each response into the corresponding cell in the spreadsheet, as shown in Figure 8.

A Camel DSL implementation fits the purpose here. It extracts from the Kafka event the correlation information that indicates where in the spreadsheet to push the answer, as shown in Figure 9.

The Kafka event represents (in JSON format) the email reply. One of its fields, the email "subject," contains the key value that identifies the question in the Google Sheets document. Camel K extracts the ID and uses it when invoking the Google API.
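The correlation step can be sketched in plain Java. Everything specific here is an assumption made for illustration, not taken from the demo: we assume the subject carries the question ID as its first run of digits (for example "Re: Question 1"), that row 1 of the sheet is a header, and that answers go in column D. The `SubjectCorrelation` class and its methods are hypothetical names.

```java
import java.util.OptionalInt;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Plain-Java sketch of extracting the question ID from an email subject (hypothetical helper).
class SubjectCorrelation {

    // Assumption: the first run of up to nine digits in the subject is the question ID.
    private static final Pattern QUESTION_ID = Pattern.compile("(\\d{1,9})");

    // Returns the question ID found in the subject, or empty if there is none.
    static OptionalInt questionId(String subject) {
        if (subject == null) {
            return OptionalInt.empty();
        }
        Matcher matcher = QUESTION_ID.matcher(subject);
        return matcher.find()
                ? OptionalInt.of(Integer.parseInt(matcher.group(1)))
                : OptionalInt.empty();
    }

    // Assumption: question N sits in sheet row N + 1 (row 1 is a header)
    // and answers are written to column D.
    static String answerCell(int questionId) {
        return "D" + (questionId + 1);
    }

    public static void main(String[] args) {
        OptionalInt id = questionId("Re: Question 1");
        if (id.isPresent()) {
            System.out.println("Write the answer to cell " + answerCell(id.getAsInt()));
        } else {
            System.out.println("No question ID in subject; reply cannot be correlated.");
        }
    }
}
```

Returning an empty `OptionalInt` for subjects without an ID forces the caller to decide what to do with replies that cannot be correlated, rather than writing them to the wrong cell.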

After processing all the responses, the Strategy team can advance to the next stage and decide on their next strategic move (Figure 10).

Running this Camel K component takes one command in the CLI. Here's an example of how to run it on Kubernetes:

    kamel run stage-4-kafka2sheets -d camel-jackson

Stage 5: Stream post-processing

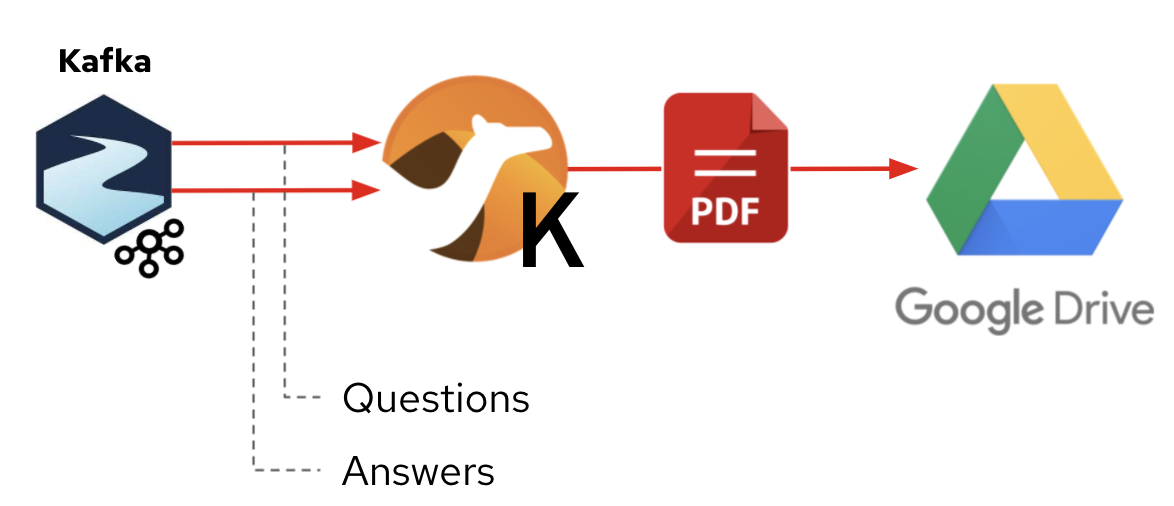

The Kafka backbone keeps a historical record of all the events flowing in both directions: both questions and answers. It enables systems to subscribe to and (for example) replay and process event streams. Teams can then use this capability to generate valuable information for other departments in the organization.

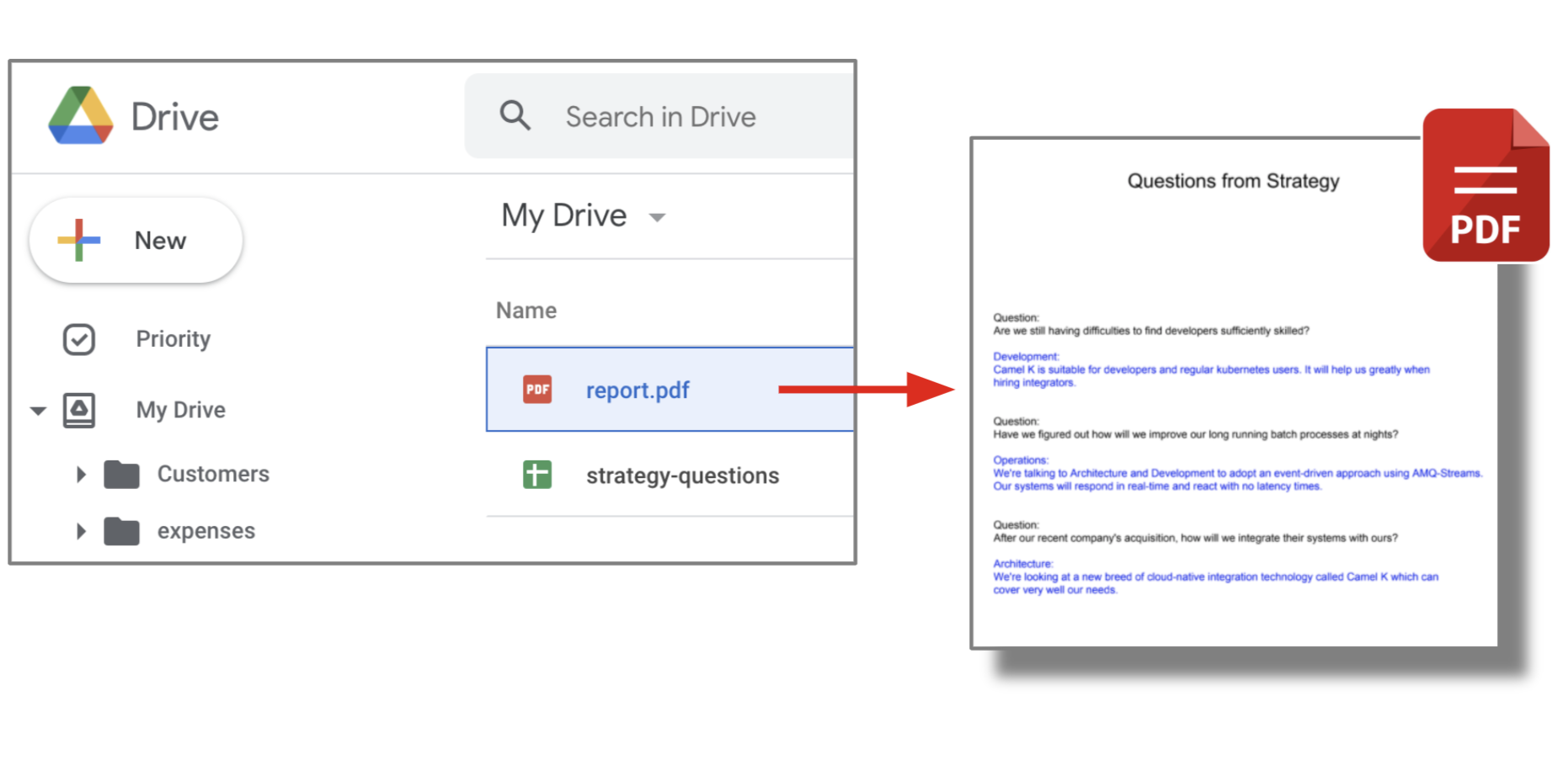

In our example, we use a Camel K unit to consume from both Kafka topics: questions and answers. We then render a report in PDF format that will be made available to anyone in the organization who is interested in cross-functional interactions related to strategy. Figure 11 illustrates the integration.

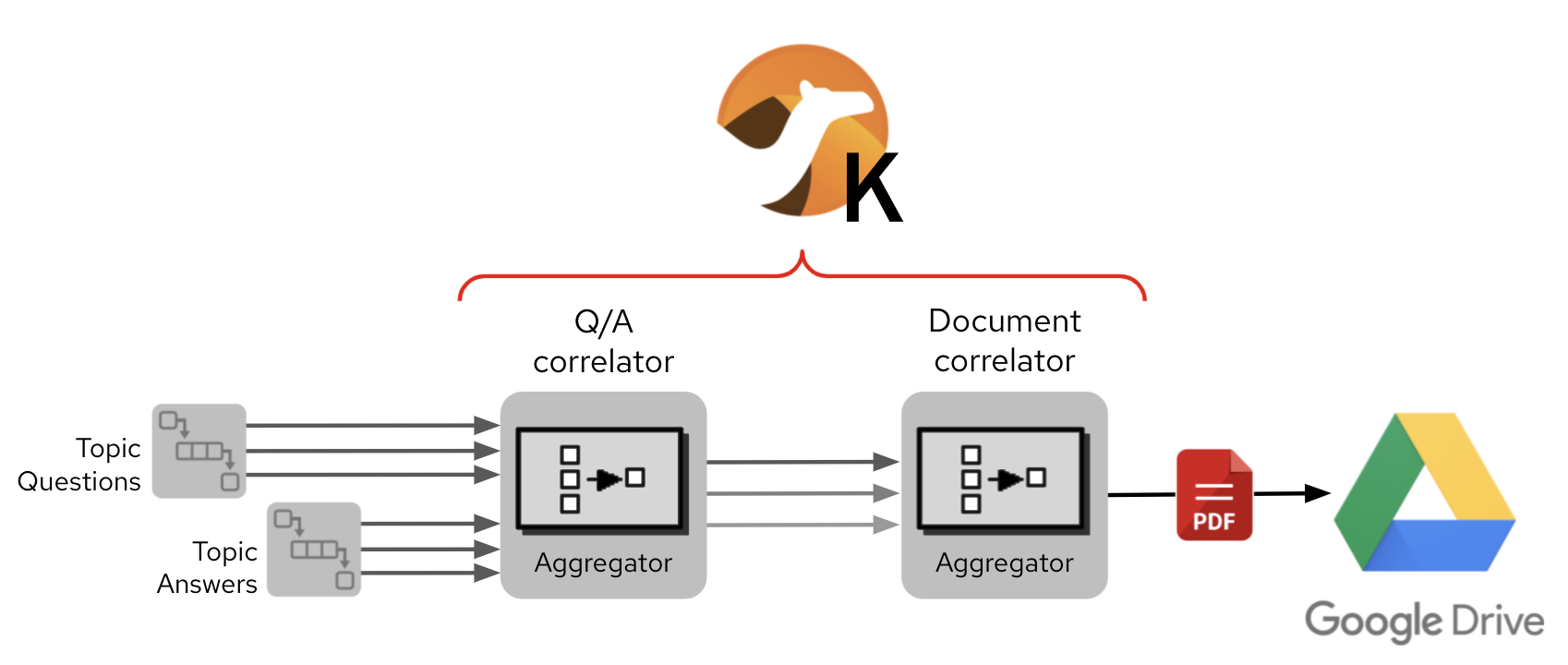

This Camel K implementation uses the aggregator EIP, which identifies all the related Kafka events and merges them to produce a PDF report that is then uploaded to Google Drive.

The process involves two aggregators. The first one, the Q/A correlator, pairs each question with its corresponding answer into a unit. The second aggregator, the Document correlator, combines all of the questions and answers that belong to the same spreadsheet. The Camel PDF component renders the resulting document. Figure 12 illustrates the process flow.

This logic might be a less trivial processing flow for the ordinary Kubernetes user, but it's familiar territory for an experienced integrator making good use of Camel’s functionality. The following code snippet highlights the main Camel K functionality:

    // Consumer 1: questions stream
    from("kafka:questions")
        // [data extraction here]
        .to("direct:process-stream-events");

    // Consumer 2: answers stream
    from("kafka:answers")
        // [data extraction here]
        .to("direct:process-stream-events");

    // Q/A correlator: pairs each question with its answer
    from("direct:process-stream-events")
        .aggregate(header("correlation"), new QAStrategy())
            .completionSize(2)
        .to("direct:aggregate-document-qas");

    // Document correlator: groups the Q/A pairs that belong to the same spreadsheet
    from("direct:aggregate-document-qas")
        .aggregate(header("correlation"), new DocStrategy())
            .completionSize(3)
        .to("direct:process-document");

    // Renders the PDF and uploads it to Google Drive
    from("direct:process-document")
        .to("pdf:create")
        .to("google-drive://drive-files/insert");

Note: This code snippet only shows the most relevant parts of the integration. You can find the complete integration detail in the GitHub repository for this article.

In total, we required five Camel routes to perform the execution:

- Two Kafka consumers (questions and answers).

- Two aggregators.

- One processor to create the PDF and upload it to Google Drive.
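To make the aggregator behavior concrete, here is a plain-Java sketch of size-based completion, the mechanism behind `completionSize(2)` in the Q/A correlator. It is a simplification under stated assumptions: the `SizeAggregator` class is a hypothetical name, it collects items into a list instead of merging Camel exchanges through an aggregation strategy, and it keeps pending groups in memory only.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Plain-Java sketch of an aggregator that completes a group on size (hypothetical helper).
class SizeAggregator<T> {

    private final int completionSize;
    // Groups still waiting for more items, keyed by correlation key.
    private final Map<String, List<T>> pending = new HashMap<>();

    SizeAggregator(int completionSize) {
        if (completionSize < 1) {
            throw new IllegalArgumentException("completionSize must be >= 1");
        }
        this.completionSize = completionSize;
    }

    // Adds an item under its correlation key. Returns the completed group once
    // completionSize items with that key have arrived; otherwise returns empty.
    Optional<List<T>> add(String correlationKey, T item) {
        List<T> group = pending.computeIfAbsent(correlationKey, key -> new ArrayList<>());
        group.add(item);
        if (group.size() < completionSize) {
            return Optional.empty();
        }
        pending.remove(correlationKey); // the next item with this key starts a new group
        return Optional.of(group);
    }

    public static void main(String[] args) {
        SizeAggregator<String> qaCorrelator = new SizeAggregator<>(2);
        System.out.println(qaCorrelator.add("1", "question 1")); // still waiting for the answer
        System.out.println(qaCorrelator.add("2", "question 2")); // different key, separate group
        System.out.println(qaCorrelator.add("1", "answer 1"));   // second item for key "1" completes the pair
    }
}
```

The Document correlator follows the same idea with a different correlation key and a completion size of 3, so one parameterized class covers both stages in this sketch.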

Running this Camel K component takes one command in the CLI. Here is an example of how to run it on Kubernetes:

    kamel run stage-5-pdf2drive -d camel-jackson -d camel-pdf

Summary of the integration

The overall automation contains a total of five Camel K pieces. Four of them stream data in and out of the Kafka platform: they first distribute the questions from the Strategy team to other departments and then return all answers to the originating spreadsheet. A fifth Camel K process replays both Kafka streams to produce a public report of team interactions.

When this integration is implemented, the Strategy team can see all the answers popping up on their screens in no time, as shown in Figure 13. (This assumes a perfect world where teams answer quickly.)

Anyone with sufficient access privileges to Google Drive, where Camel K uploads the report, can open the PDF document and inspect its contents, as shown in Figure 14.

Watch a video demonstration

Watch the following video to see an execution of the use case described in this article:

Conclusion

You've seen in this article that Camel K makes it easy to move data between cloud services and corporate systems via stream-based messaging.

Whatever you want to call them—cloud-native microservices, mediations, integrations, automations, enablers, etc.—Camel K has taken a giant leap forward in running them effortlessly. Its DNA empowers developers to implement event-driven architectures, and traditional synchronous flows, with or without serverless capabilities. Camel K offers connectivity to Kubernetes-native platforms (like Knative and Strimzi), with over 200 connectors and EIP building blocks.

Crucially, Camel K has widened its audience by introducing no-code building blocks. Anyone can use these to rapidly deploy data flows that connect virtually any data source to any data target. For example, users on Kafka platforms can now enjoy supercharged connectivity with simple configure-and-run KameletBindings.

This article showed you how Camel K resolves integrations with great elegance and simplicity. While this example was relatively simple, Camel K can solve integrations of the highest complexity, where its heritage of Apache Camel's rich, out-of-the-box functionality excels.

Next steps with Camel K

See the following resources to learn more about Camel K and the current GA release:

- A good place to start learning about Camel K is the Camel K landing page on Red Hat Developer.

- See Six reasons to love Camel K for an overview of the highlights of using Camel K.

- Get a hands-on introduction to Camel K with our collection of interactive tutorials.

- Learn more about Camel K in Apache Camel.

- Be sure to visit the GitHub repository for the cross-team collaboration demo featured in this article.

- Review what you learned by watching the video of the cross-team scenario implementation.