In this article, we will explain how to enable NVIDIA DOCA Platform Framework with Red Hat OpenShift. We'll dive into how this combination provides hardware-enabled networking for containerized workloads, then demonstrate how this approach can improve performance, security, and resource utilization.

Performance challenges in modern AI workloads

Modern containerized environments face growing network performance challenges, particularly as workloads become more demanding and complex. Traditional network architectures where CPUs handle networking, storage, and security functions are becoming bottlenecks, especially with the rise of data-intensive applications like AI/ML and high-throughput data processing.

Inferencing has rapidly evolved from single-shot query responses from a pre-trained foundation model to something much more sophisticated and powerful. A simple query can now generate dozens of agentic queries that access confidential data across the entire enterprise. AI agents plan and orchestrate tasks using hundreds of containerized inference microservices, operating in concert as part of powerful AI blueprints.

With AIs talking to AIs in complex ensembles, there is simply no way to manually address scaling, security, and performance imperatives of modern AI workloads. Delivering secure, performant infrastructure at scale must use the automation, multitenancy, and acceleration delivered by solutions such as Red Hat OpenShift, NVIDIA BlueField DPUs, and the NVIDIA DOCA Platform Framework (DPF).

Data processing units (DPUs) have emerged as a powerful solution for network offloading. Unlike traditional NICs, DPUs incorporate dedicated processing capabilities that can offload entire networking stacks from host CPUs, freeing those resources for application workloads. NVIDIA DPF extends this concept by providing a comprehensive orchestration framework for DPU deployment and management in Kubernetes environments. Service Function Chaining (SFC) with DPF enables the integration of multiple services such as telemetry, routing, or security on a single DPU, unlocking a flexible, multivendor ecosystem for accelerated network service delivery.

Understanding the NVIDIA BlueField-3 DPU architecture

The NVIDIA BlueField-3 DPU represents a new class of programmable processors that combines network interface capabilities with a powerful Arm-based computing subsystem. Each BlueField-3 DPU includes:

- 16 Arm Cortex-A78 cores

- 32GB onboard DDR5 memory

- PCIe Gen5 x16 interface

- Dual 200Gb/s network ports based on NVIDIA ConnectX-7 NIC technology

- Hardware accelerators for networking, security, and storage functions

What makes NVIDIA BlueField-3 DPUs fundamentally different from NICs is their ability to run a complete operating system and services as an independent compute node.

DOCA Platform Framework

The DPF serves as the orchestration layer for NVIDIA BlueField-3 DPUs, providing seamless integration with Kubernetes environments.

DPF together with OpenShift brings cloud-native constructs to DPU management, allowing administrators to define DPU infrastructure as code through Kubernetes custom resources. This approach enables consistent, repeatable deployment and management of DPU-enabled infrastructure.

Architecture: A tale of two clusters

The foundation of our DPF integration with OpenShift is a dual-cluster architecture that creates clear separation between application workloads and infrastructure services. This approach delivers significant benefits in terms of performance, security, and resource utilization.

Physical infrastructure

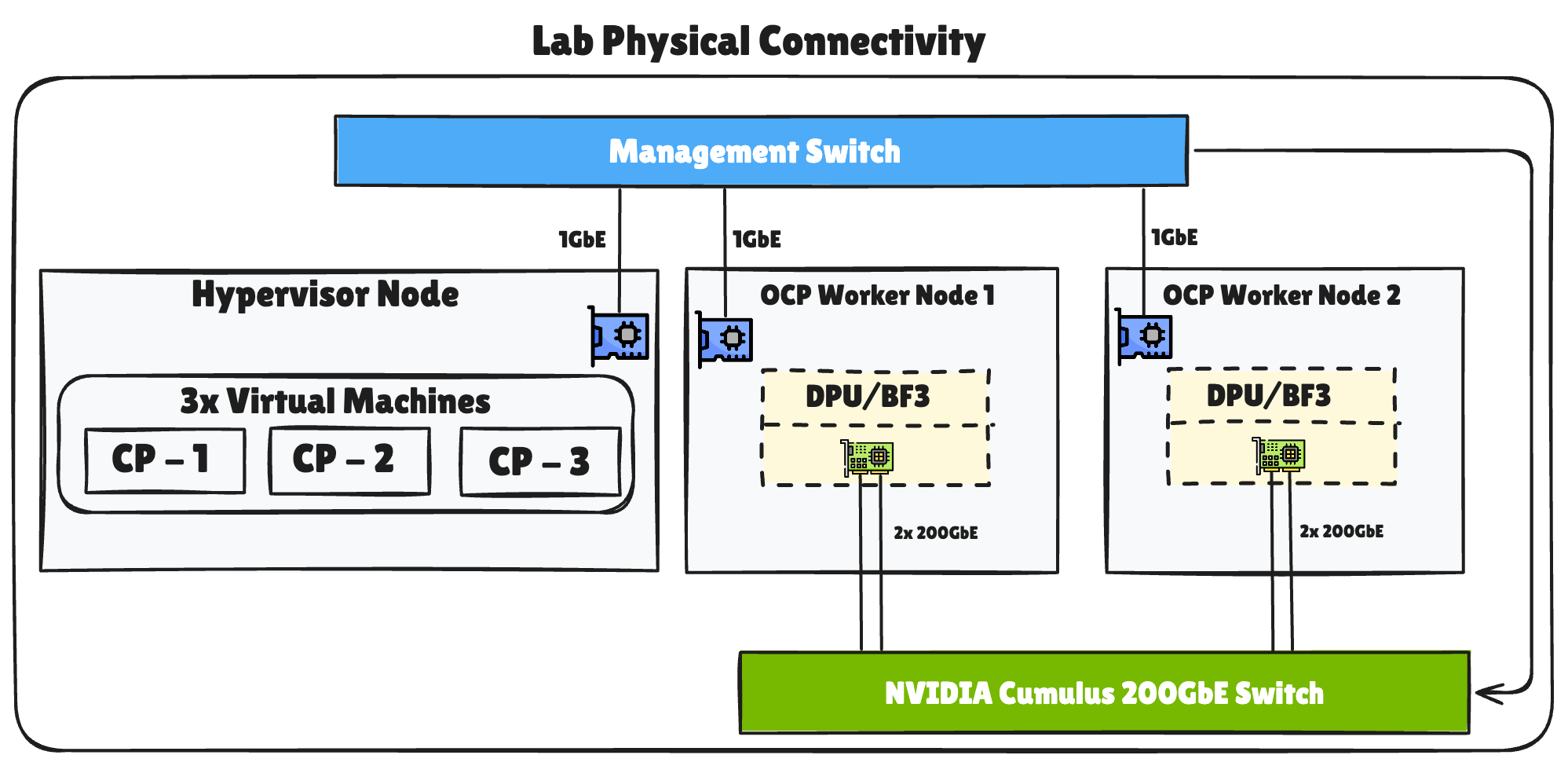

Our deployment environment consists of three main components, as illustrated in Figure 1:

- Hypervisor node: Hosts three virtual machines that serve as the control plane nodes for the management OpenShift cluster.

- Worker nodes: Two physical servers, each equipped with an NVIDIA BlueField-3 DPU installed in a PCIe slot.

- Network infrastructure: A management switch for control plane traffic (1GbE) and a high-speed NVIDIA Cumulus switch (200GbE) for accelerated workload traffic.

The NVIDIA BlueField-3 DPUs have dual 200GbE ports connected to the high-speed switch, creating a fully redundant and aggregated data network. This physical setup completely isolates management traffic from data traffic while providing substantial bandwidth for demanding workloads, because both east-west (E/W) and north-south (N/S) traffic is accelerated.

High-level architecture

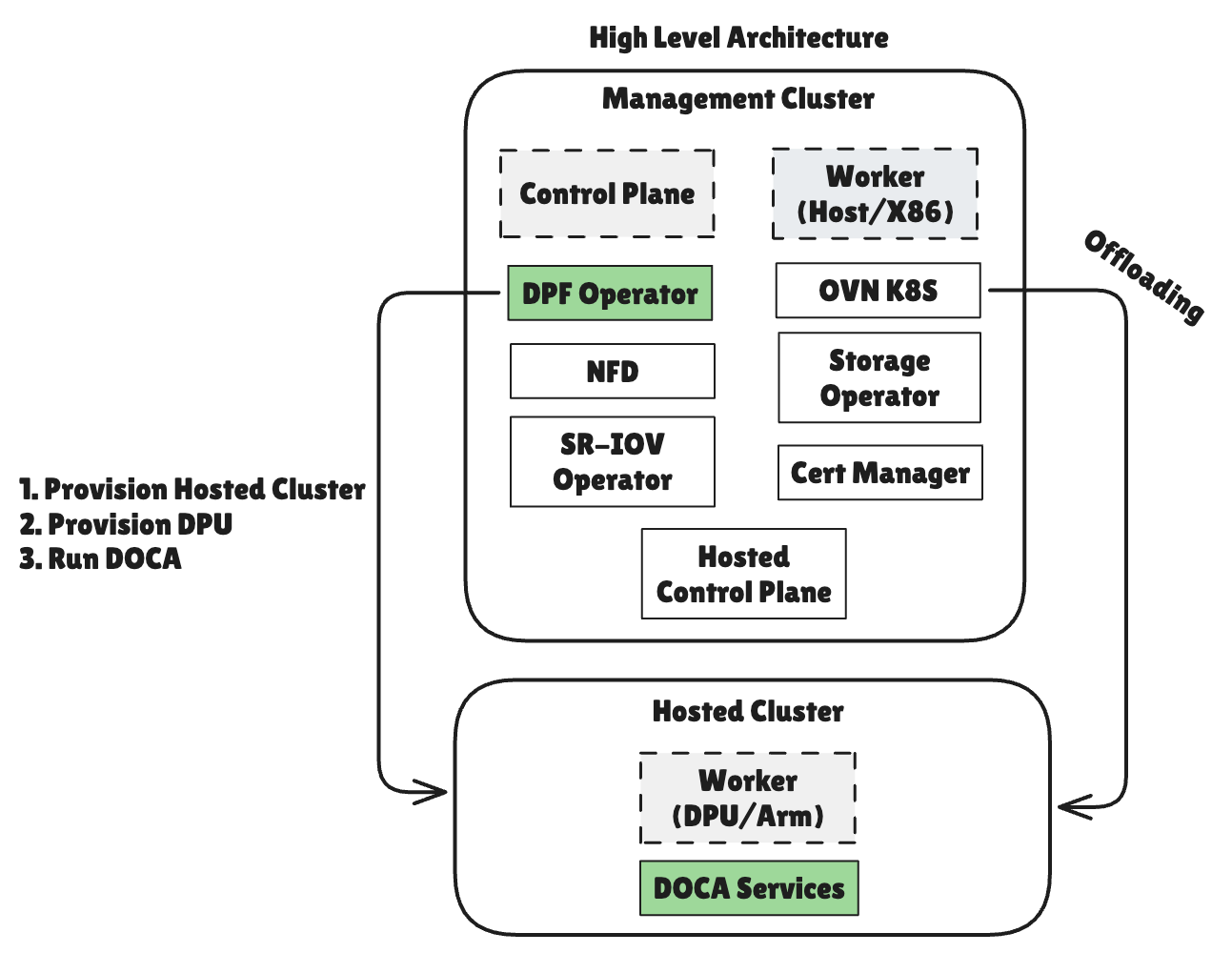

The DPF deployment consists of two logically separate OpenShift clusters, as shown in Figure 2:

- Management cluster: Running on x86 hardware, this cluster hosts application workloads and the control plane components that manage the DPU infrastructure.

- Hosted cluster: Running on the Arm cores of NVIDIA BlueField-3 DPUs, this cluster is dedicated to networking functions and infrastructure services.

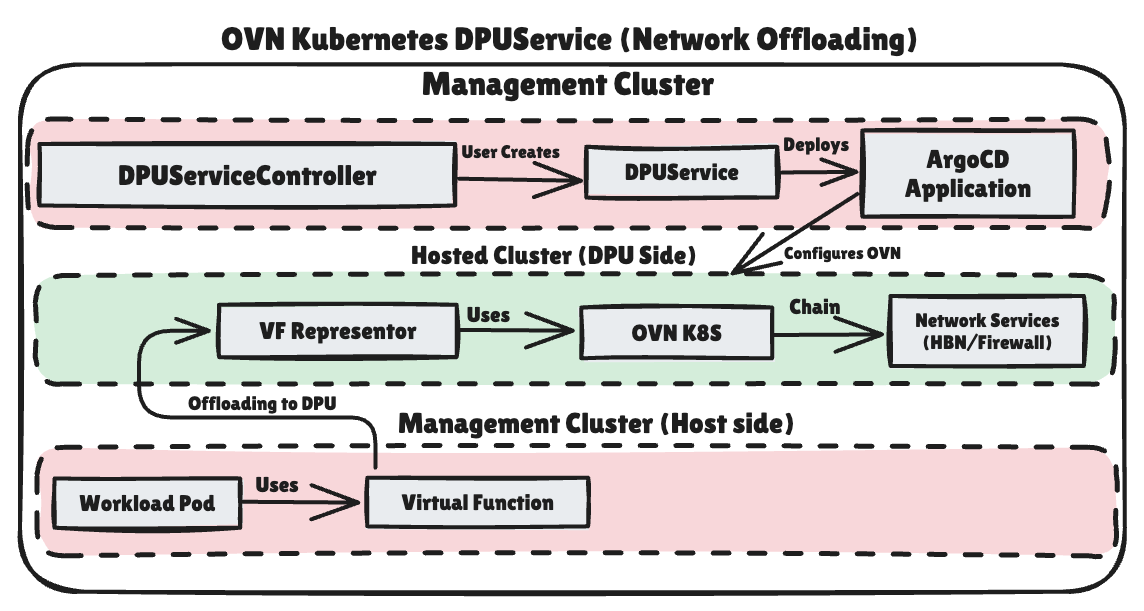

With provisioned NVIDIA BlueField-3 DPUs, we can deploy various networking services using the DPUService resource:

- An administrator creates a DPUService for networking solutions including OVN-Kubernetes, host-based networking (HBN), DOCA Telemetry Service (DTS), and others.

- The DPUServiceController creates an Argo CD application targeting the hosted cluster.

- Argo CD deploys the selected networking components on the BlueField-3 DPU.

- On the hosted cluster side, these networking services use virtual function (VF) representors to connect with the physical network.

- On the management cluster side, workload pods use virtual functions to communicate with the network.
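The DPUService flow above follows the usual Kubernetes controller pattern: compare the desired state with what already exists, then converge. The Python sketch below models only that idea. The DPUService and ArgoApplication classes, their fields, and the "<name>-app" naming scheme are hypothetical simplifications, not the actual DPF or Argo CD APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DPUService:
    """Hypothetical, simplified stand-in for a DPUService custom resource."""
    name: str
    chart: str  # hypothetical reference to the service's deployment source


@dataclass(frozen=True)
class ArgoApplication:
    """Hypothetical, simplified stand-in for an Argo CD Application."""
    name: str
    source: str
    destination: str  # cluster the application is deployed to


def reconcile(services, existing_app_names, hosted_cluster):
    """Return (applications to create, application names to delete).

    Each DPUService maps to exactly one application targeting the hosted
    cluster; applications with no matching DPUService are pruned.
    """
    desired = {
        f"{svc.name}-app": ArgoApplication(f"{svc.name}-app", svc.chart, hosted_cluster)
        for svc in services
    }
    to_create = [app for name, app in desired.items() if name not in existing_app_names]
    to_delete = sorted(name for name in existing_app_names if name not in desired)
    return to_create, to_delete


if __name__ == "__main__":
    services = [DPUService("ovn", "ovn-chart"), DPUService("hbn", "hbn-chart"),
                DPUService("dts", "dts-chart")]
    create, delete = reconcile(services, {"hbn-app", "old-app"}, "dpu-hosted-cluster")
    print([app.name for app in create])  # ['ovn-app', 'dts-app']
    print(delete)                        # ['old-app']
```

Because the function is driven purely by desired versus existing state, running it again after the changes are applied yields nothing to do, which is what makes the declarative model safe to re-run.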

This architecture offloads all OVN processing from the host CPU to the NVIDIA BlueField-3 DPU, improving performance and freeing host resources for application workloads.

Network flow between host and NVIDIA BlueField-3 DPU

When a workload running on an x86 node needs to communicate over the network, instead of processing network packets in the host's kernel, the traffic is delegated to the NVIDIA BlueField-3 DPU. The NVIDIA BlueField-3 DPU's dedicated resources handle the entire networking stack, including overlay encapsulation, security policies, and routing decisions.

This architecture enables several critical benefits:

- Host CPUs focus exclusively on application workloads.

- Network processing happens on dedicated hardware with specialized accelerators.

- Security boundaries between network infrastructure and applications are hardened.

- Networking functions can scale independently from application workloads.

Network components

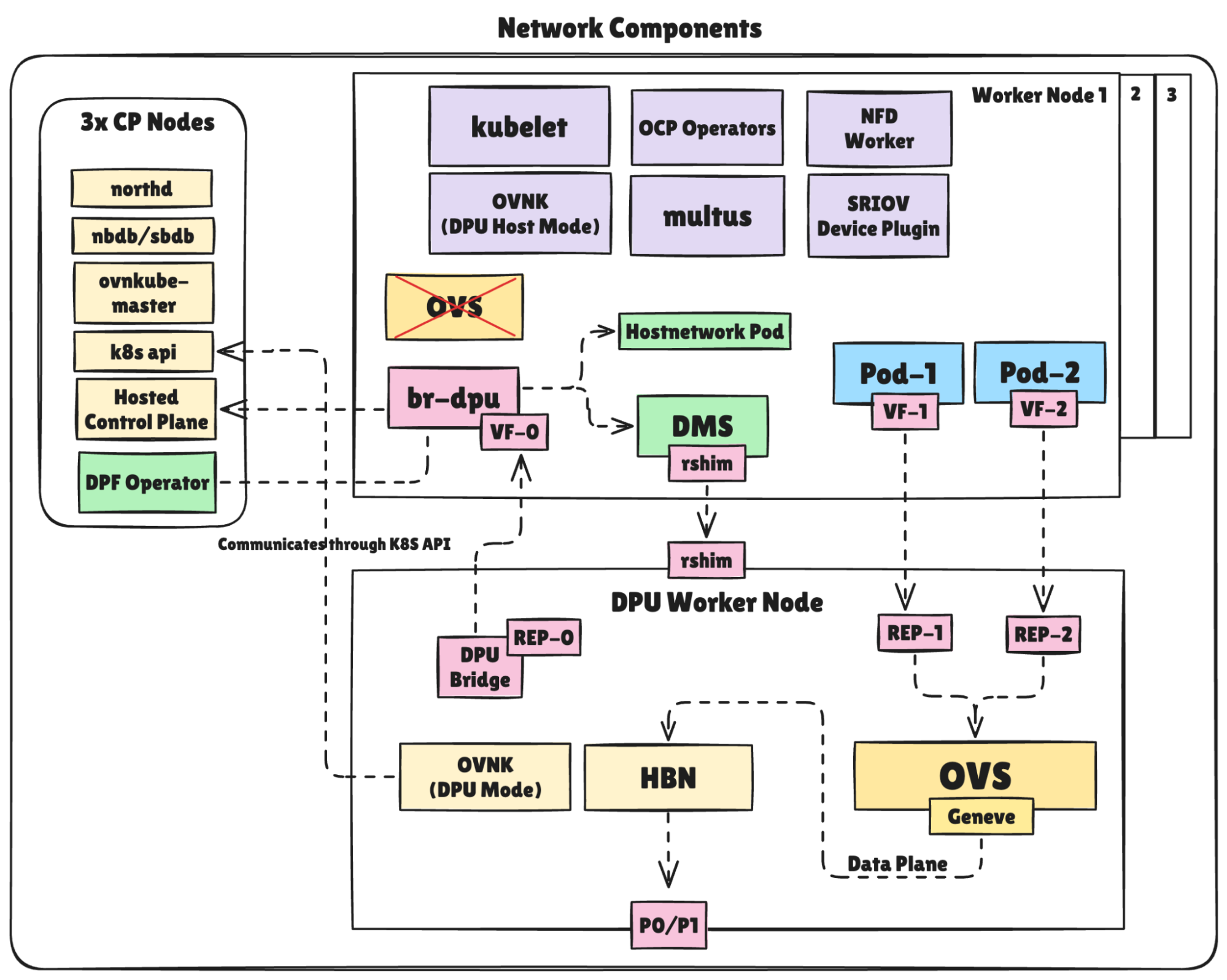

Figure 3 illustrates the complete network architecture spanning both the management and DPU clusters. On the left, we see the control plane nodes running core Kubernetes and OVN components. The worker node (top right) runs application pods with virtual functions and has OVS disabled, because its networking is handled by the NVIDIA BlueField-3 DPU.

The DPU worker node (bottom) handles all network processing with key components:

- OVN-Kubernetes (OVNK) in DPU mode processes overlay networking.

- Host-based networking (HBN) handles routing.

- The DPU bridge connects VF representors to the host, enabling communication between the host and the DPU.

- The physical ports (P0/P1) connect to the data center network.

The rshim interface provides management access to the NVIDIA BlueField-3 DPU, while the Hostnetwork pod handles network configuration and the DOCA Management Service (DMS) handles DPU provisioning.

OpenShift and NVIDIA DPF integration journey

1. Creating an OpenShift cluster

Our journey began with deploying a new OpenShift cluster using the Assisted Installer, which provides a streamlined, web-based approach particularly well-suited for advanced networking scenarios like this one.

Assisted Installer overview

The Assisted Installer simplifies OpenShift deployment through a user-friendly web interface that guides administrators through the installation process. It offers several advantages:

- User-friendly UI: Intuitive interface that eliminates the need for complex CLI commands.

- Automated discovery: Automatically detects and validates host configurations.

- Flexible networking: Supports customizable network configurations, including advanced setups.

- Real-time validation: Provides immediate feedback on potential issues before installation starts.

- Simplified Day 2 operations: Easily add new nodes to existing clusters.

For a detailed walkthrough of the Assisted Installer process, you can refer to the Red Hat documentation.

We successfully deployed a cluster with three control plane nodes.

Custom configuration

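We applied a MachineConfig that disables the Open vSwitch (OVS) services on the worker nodes we would add later, because on those nodes networking is handled by the NVIDIA BlueField-3 DPU instead of the host.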
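As a sketch of what such a MachineConfig could look like, the Python below builds one as a dictionary and prints it as JSON, which `oc apply -f -` accepts. The object name, the choice of the worker role, and the three OVS unit names are assumptions for illustration. Check which OVS units your OpenShift release ships before masking anything, and note that targeting the worker role affects every node in that machine config pool.

```python
import json

# Assumed unit names for the host OVS services; verify them on your nodes.
OVS_UNITS = ("openvswitch.service", "ovs-vswitchd.service", "ovsdb-server.service")


def disable_ovs_machineconfig(role="worker", units=OVS_UNITS):
    """Build a MachineConfig that disables and masks the given systemd units."""
    return {
        "apiVersion": "machineconfiguration.openshift.io/v1",
        "kind": "MachineConfig",
        "metadata": {
            "name": f"99-{role}-disable-ovs",  # hypothetical name
            "labels": {"machineconfiguration.openshift.io/role": role},
        },
        "spec": {
            "config": {
                "ignition": {"version": "3.2.0"},
                "systemd": {
                    # mask=True prevents the unit from being started at all,
                    # not just from starting at boot.
                    "units": [{"name": u, "enabled": False, "mask": True} for u in units]
                },
            }
        },
    }


if __name__ == "__main__":
    # Pipe this output to `oc apply -f -` to create the object.
    print(json.dumps(disable_ovs_machineconfig(), indent=2))
```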

2. Installing required operators

With our OpenShift cluster deployed, we needed to install several operators to support DPF:

- DPF Operator: Orchestrates the entire NVIDIA BlueField-3 DPU ecosystem.

- Node Feature Discovery (NFD): Identifies nodes with NVIDIA BlueField-3 DPUs.

- SR-IOV Operator: Manages virtual functions for network interfaces.

- Cert Manager: Handles webhook certificates.

- Storage Operator: Provisions persistent storage.

The SR-IOV Network Operator is particularly important, as it discovers and manages the ConnectX network interfaces embedded in the NVIDIA BlueField-3 DPU. We configured it with a policy using the externallyManaged: true setting, which delegates VF management to the DPF Operator's host networking component rather than the SR-IOV Operator itself.
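A policy of that kind might look like the following sketch, again built in Python and emitted as JSON. The apiVersion, kind, namespace, and field names follow the SR-IOV Network Operator's SriovNetworkNodePolicy resource. The resource name, PF interface name, VF count, and the use of the k8s.ovn.org/dpu-host label as the node selector are hypothetical placeholders for your environment. Vendor ID 15b3 is the PCI vendor ID for NVIDIA (Mellanox) network devices.

```python
import json


def externally_managed_policy(pf_name="ens1f0np0", num_vfs=16, resource_name="bf3_vfs"):
    """Build an SriovNetworkNodePolicy whose VFs are created by another component."""
    return {
        "apiVersion": "sriovnetwork.openshift.io/v1",
        "kind": "SriovNetworkNodePolicy",
        "metadata": {"name": "bf3-vfs", "namespace": "openshift-sriov-network-operator"},
        "spec": {
            "resourceName": resource_name,                 # hypothetical resource name
            "nodeSelector": {"k8s.ovn.org/dpu-host": ""},  # assumed selector and value
            "numVfs": num_vfs,
            "nicSelector": {"vendor": "15b3", "pfNames": [pf_name]},  # hypothetical PF name
            "deviceType": "netdevice",
            # The operator discovers and advertises the VFs but does not create
            # them; in this setup DPF's host networking component manages them.
            "externallyManaged": True,
        },
    }


if __name__ == "__main__":
    print(json.dumps(externally_managed_policy(), indent=2))
```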

3. Adding worker nodes

We used the Assisted Installer to add two machines, each with a single NVIDIA BlueField-3 DPU, as worker nodes. Using the Assisted Installer's NMState configuration capability, we added a configuration that creates the br-dpu bridge on the management NIC, as DPF requires.

After the workers joined the cluster, we observed a dpf-dpu-detector pod that provides DPU feature flags to the NFD operand. Workers need the k8s.ovn.org/dpu-host label for OVNK in DPU mode to run, although OVNK will fail continuously until the NVIDIA BlueField-3 DPUs are provisioned. We also applied an OVNK injector webhook that adds a VF resource to each pod we want to run on the workers.
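A mutating webhook like this one returns a JSON Patch (RFC 6902) that the API server applies to the incoming pod. The Python sketch below shows only the patch-building step, for the pod's first container. The resource name openshift.io/bf3_vfs is hypothetical, and the actual DPF injector may choose containers and resource names differently. A slash inside a JSON Pointer key must be escaped as ~1 (RFC 6901), which is why the escaping helper exists.

```python
def escape_pointer(key):
    """Escape a key for use in a JSON Pointer path (RFC 6901)."""
    return key.replace("~", "~0").replace("/", "~1")


def vf_injection_patch(pod, resource="openshift.io/bf3_vfs", count="1"):
    """Return JSON Patch ops that add a VF request and limit to the first container."""
    base = "/spec/containers/0/resources"
    resources = pod["spec"]["containers"][0].get("resources")
    if not resources:
        # No (or empty) resources block: add it whole.
        return [{"op": "add", "path": base,
                 "value": {"requests": {resource: count}, "limits": {resource: count}}}]
    ops = []
    for section in ("requests", "limits"):
        current = resources.get(section)
        if current is None:
            ops.append({"op": "add", "path": f"{base}/{section}", "value": {resource: count}})
        elif resource not in current:
            ops.append({"op": "add", "path": f"{base}/{section}/{escape_pointer(resource)}",
                        "value": count})
    return ops


if __name__ == "__main__":
    pod = {"spec": {"containers": [{"name": "app", "resources": {"requests": {"cpu": "1"}}}]}}
    for op in vf_injection_patch(pod):
        print(op)
    # {'op': 'add', 'path': '/spec/containers/0/resources/requests/openshift.io~1bf3_vfs', 'value': '1'}
    # {'op': 'add', 'path': '/spec/containers/0/resources/limits', 'value': {'openshift.io/bf3_vfs': '1'}}
```

Injecting into a single container matters: each container that requests the resource would be allocated its own VF.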

4. Setting up DPF components

After installing the operators, we deployed the DPF system components by creating a DPFOperatorConfig object, which tells the DPF Operator what type of hosted cluster to use. In this setup, we used Kamaji for the DPU control plane and specified parameters for DPU provisioning.

Next, we created a DPUCluster object to define the Kubernetes cluster that would run on the NVIDIA BlueField-3 DPUs.

The DPF Operator processed this configuration and automatically:

- Created a Kamaji TenantControlPlane.

- Deployed the DPU cluster control plane components (etcd, API server, etc.).

- Set up a load balancer for the DPU cluster control plane.

- Generated a kubeconfig for accessing the DPU cluster.

5. Provisioning the NVIDIA BlueField-3 DPUs

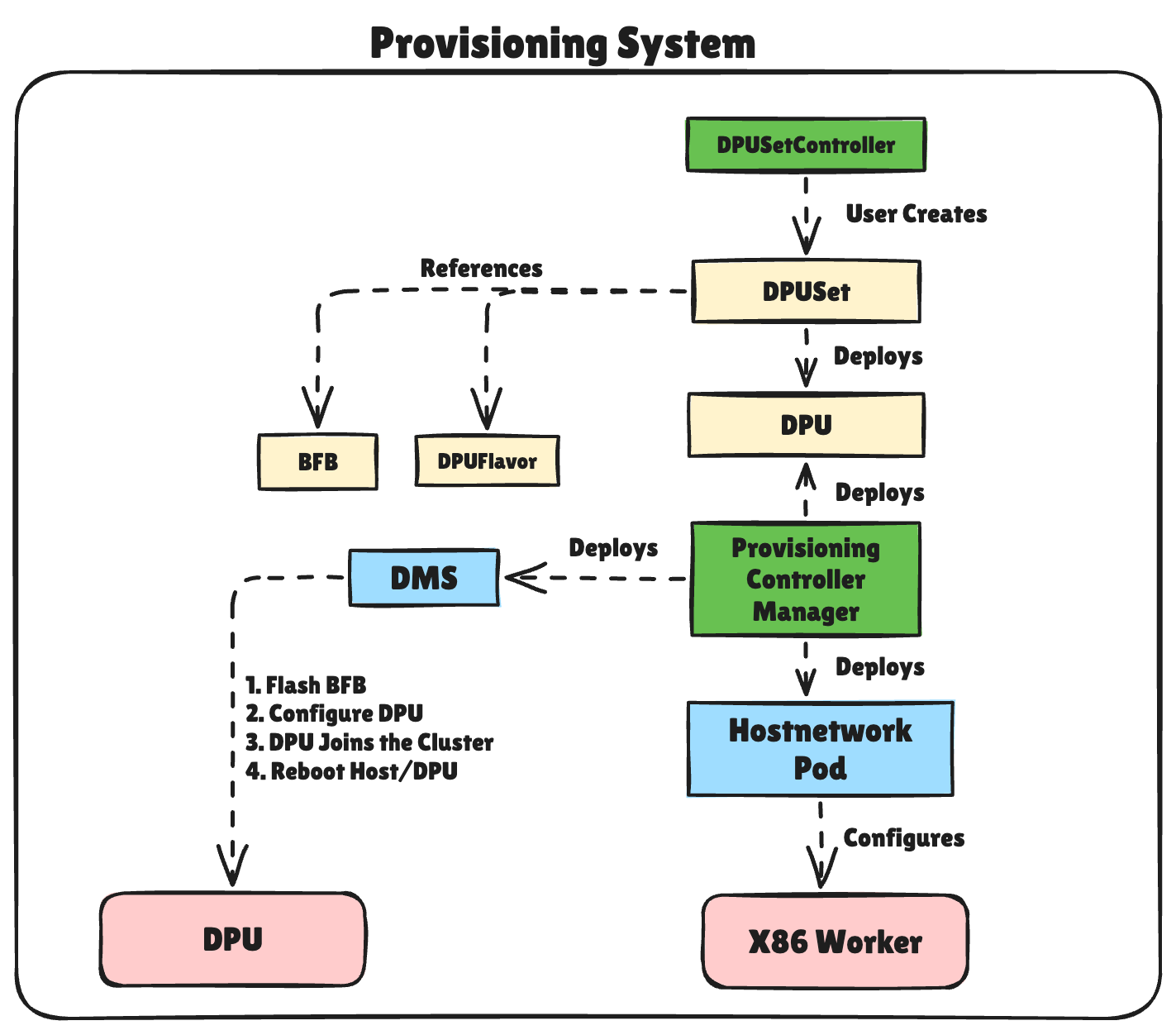

With the infrastructure in place, we provisioned the NVIDIA BlueField-3 DPUs by creating three objects:

- DPUFlavor: Defines the configuration to apply to the NVIDIA BlueField-3 DPU.

- BFB: Provides the URL of the BlueField bootstream (BFB) image to flash.

- DPUSet: Determines which hosts should have their NVIDIA BlueField-3 DPUs provisioned, and with which configuration.

These components are shown in Figure 4.

The DPF Operator detected the worker nodes whose labels matched the DPUSet and proceeded to:

- Create a DPU object for each eligible worker.

- Deploy DOCA Management Service (DMS) pods to flash the BFB to the NVIDIA BlueField-3 DPUs.

- Configure networking between the hosts and NVIDIA BlueField-3 DPUs.

- Reboot the NVIDIA BlueField-3 DPUs and their hosts.

- Join the NVIDIA BlueField-3 DPUs to the hosted cluster.
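The selection step can be pictured as a label-selector match followed by one DPU object per matching node. This Python sketch models only that step, using Kubernetes matchLabels semantics (every listed key must be present with the same value). The label key, the "<node>-dpu" naming scheme, and the flavor and BFB names are hypothetical, and the flashing, reboot, and join steps that DPF performs afterward are not modeled.

```python
def matches(labels, match_labels):
    """matchLabels semantics: every key must exist with an equal value."""
    return all(k in labels and labels[k] == v for k, v in match_labels.items())


def plan_dpus(nodes, match_labels, flavor, bfb):
    """Return one DPU description per node whose labels match the DPUSet selector."""
    return [
        {"name": f"{node}-dpu", "node": node, "flavor": flavor, "bfb": bfb}
        for node, labels in sorted(nodes.items())
        if matches(labels, match_labels)
    ]


if __name__ == "__main__":
    selector = {"example.com/bluefield-3": "true"}  # hypothetical label
    nodes = {
        "worker-0": {"example.com/bluefield-3": "true", "node-role.kubernetes.io/worker": ""},
        "worker-1": {"example.com/bluefield-3": "true", "node-role.kubernetes.io/worker": ""},
        "control-plane-0": {"node-role.kubernetes.io/control-plane": ""},
    }
    for dpu in plan_dpus(nodes, selector, flavor="example-flavor", bfb="example-bfb"):
        print(dpu["name"], "->", dpu["node"])
    # worker-0-dpu -> worker-0
    # worker-1-dpu -> worker-1
```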

At this point, both NVIDIA BlueField-3 DPUs had joined the hosted cluster as worker nodes, and the x86 worker nodes had become Ready in the management cluster.

6. Deploying network services to NVIDIA BlueField-3 DPUs

With our NVIDIA BlueField-3 DPUs provisioned and joined to the hosted cluster, we deployed two key services to handle network processing, as shown in Figure 5:

- OVN-Kubernetes: To provide container networking and handle pod-to-pod communication.

- Host-based networking (HBN): To implement BGP-based routing between nodes.

These services were deployed using DPUService resources that defined the services to run on the NVIDIA BlueField-3 DPUs. The DPUService controller and Argo CD handled the deployment of these services to the DPU cluster, creating the necessary pods and configurations.

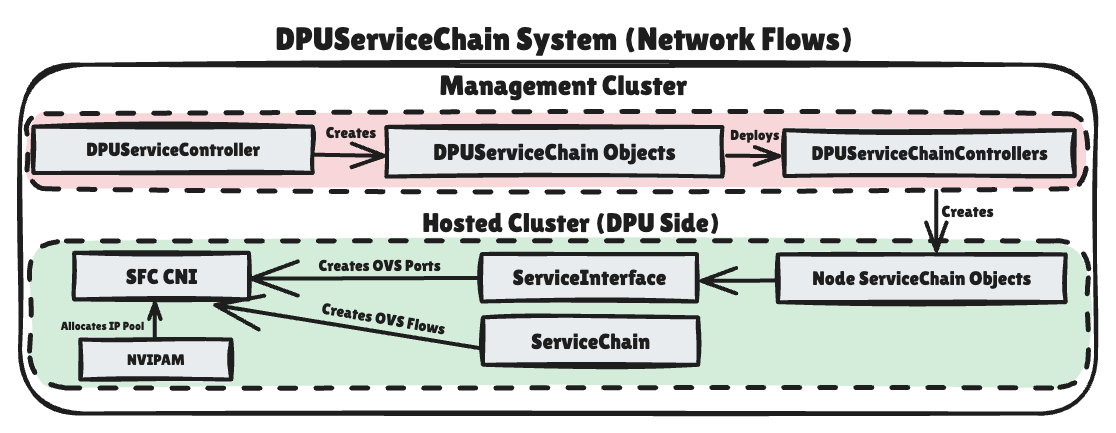

7. Service Function Chaining

After deploying the basic network services on our NVIDIA BlueField-3 DPUs, we implemented Service Function Chaining (SFC) to enable sophisticated network topologies. This functionality is a crucial differentiator for DPF, allowing us to connect OVN, HBN, and physical interfaces in a hardware-accelerated path.

Service Function Chain implementation

The DPF Service Chain system uses a controller-based architecture that spans both the management and DPU clusters:

- In the management cluster, administrators create DPUServiceChain objects.

- The DPUServiceChainController translates these into configuration for the hosted cluster.

- On the DPU side, the Service Interface and ServiceChain components:

  - Create OVS ports for each service.

  - Configure flow rules to direct traffic through the chain.

  - Apply QoS policies as needed.

In our deployment, we created two specific service chains to enable our traffic flow:

- OVN-to-HBN Chain: Connects container networking (OVN) to the HBN service, allowing pod traffic to reach the BGP routing layer.

- HBN-to-Fabric Chain: Links the HBN service to both physical uplink ports (p0 and p1), enabling ECMP routing to the network.

These chains create a complete pipeline from containers to the physical network, with the entire pipeline accelerated in hardware:

- Pod VF → OVN: No service chain is needed for this hop. The pod VFs use the OVN port, so the "head" of the chain leads directly to br-int on the NVIDIA BlueField-3 DPU, where OVN processes the traffic and carries E/W traffic over Geneve tunnels.

- OVN → HBN: Processed traffic from OVN is directed to HBN's app_sf interface for routing decisions.

- HBN → physical uplinks: HBN directs traffic to the appropriate uplink (p0/p1) based on ECMP and BGP routing decisions.
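The hop sequence above, plus the ECMP choice at the last hop, can be modeled in a few lines. The Python sketch below is illustrative only: it uses CRC32 over a single 5-tuple as a stand-in for the hardware and kernel hash functions that actually select the uplink, it ignores encapsulation (for E/W traffic the real hash is taken over the outer Geneve packet), and the hop labels are descriptive strings rather than real port names. What it does show is the ECMP property that matters: every packet of a flow hashes to the same uplink, which avoids reordering, while different flows spread across p0 and p1.

```python
import zlib
from typing import NamedTuple


class Flow(NamedTuple):
    src_ip: str
    dst_ip: str
    proto: str
    src_port: int
    dst_port: int


UPLINKS = ("p0", "p1")


def ecmp_uplink(flow, uplinks=UPLINKS):
    """Pick an uplink by hashing the 5-tuple (CRC32 stands in for the real hash)."""
    key = "|".join(str(field) for field in flow).encode()
    return uplinks[zlib.crc32(key) % len(uplinks)]


def chain_path(flow):
    """Return the hops a pod's egress packet takes through the chains described above."""
    return ["pod VF", "br-int (OVN)", "HBN app_sf", ecmp_uplink(flow)]


if __name__ == "__main__":
    flow = Flow("10.128.2.10", "10.128.3.144", "tcp", 40000, 5201)  # hypothetical flow
    print(" -> ".join(chain_path(flow)))
```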

This architecture enables traffic to flow seamlessly through sophisticated networking topologies with hardware acceleration at each step, providing both the flexibility of software-defined networking and the performance benefits of hardware acceleration.

The value of Service Function Chaining

Service Function Chaining delivers significant business advantages for modern cloud environments:

- Operational automation: SFC eliminates the manual configuration traditionally required to connect network services. Network administrators can define service chains declaratively through Kubernetes resources rather than configuring individual devices or software components.

- Dynamic service provisioning: Network, security, storage, telemetry, and other services can be deployed, modified, and removed without disrupting the underlying infrastructure. This enables rapid adaptation to changing application requirements and traffic patterns.

- Resource optimization: By chaining services together efficiently, network traffic follows optimized paths that reduce unnecessary processing. In our implementation, traffic flows directly from container networking to BGP routing without additional hops.

- Application-specific networking: Different applications can leverage different service chains based on their requirements. For example, data-intensive workloads can use paths optimized for throughput, while latency-sensitive applications can use more direct routes.

- Simplified troubleshooting: The well-defined service chain model provides clarity about how traffic flows through the system, making it easier to identify and resolve networking issues. In our implementation, clearly defined OVS flows make traffic patterns more observable.

With DPF's implementation of Service Function Chaining, we've achieved these benefits while maintaining full hardware acceleration through the ConnectX ASIC, combining the flexibility of software-defined networking with the performance advantages of specialized hardware.

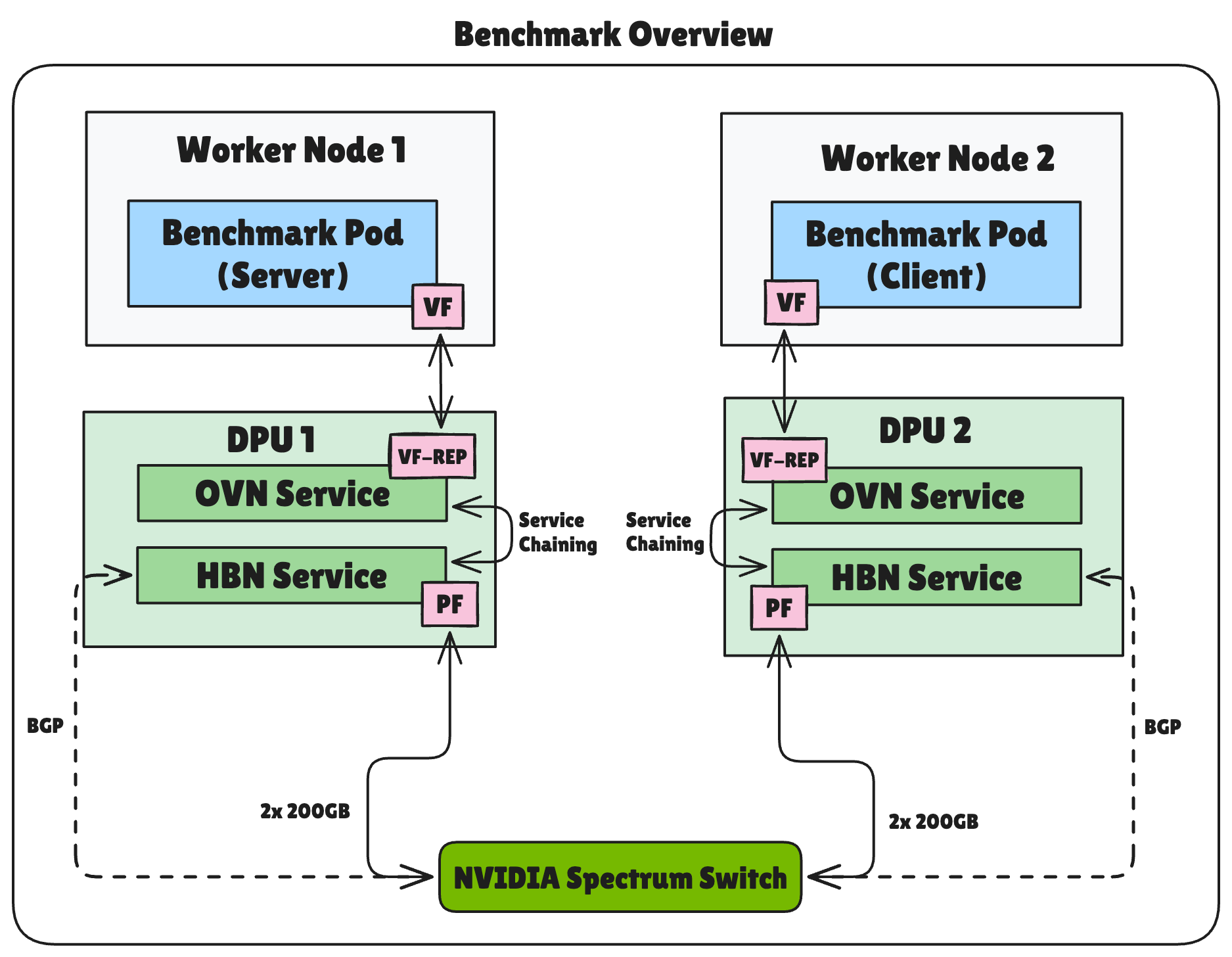

8. Benchmark

To demonstrate the benefits of NVIDIA BlueField-3 DPU offloading, we conducted network performance tests between pods running on separate worker nodes. These tests quantify the throughput advantages gained through hardware acceleration.

Our test environment consisted of two worker nodes, each with an NVIDIA BlueField-3 DPU. As shown in Figure 6, the test pods used SR-IOV virtual functions to communicate with the network, with VF representors on the NVIDIA BlueField-3 DPUs. The host-based networking containers implemented BGP routing over dual 200GbE physical interfaces connected to an NVIDIA Cumulus switch, enabling line-rate testing between pods. See Figure 7.

We selected two types of benchmarks to evaluate different aspects of network performance:

- RDMA testing: We used ib_write_bw to measure raw bandwidth through RDMA over Converged Ethernet (RoCE), specifically targeting the hardware acceleration capabilities of the BlueField-3 DPU.

- TCP/IP testing: With iperf3 running multiple parallel streams, we assessed TCP/IP performance as experienced by typical containerized applications.

RDMA performance:

```
### RDMA Test (ib_write_bw Client) ###
$ ib_write_bw -R -F --report_gbits --qp 8 -D 30 10.128.3.144
----------------------------------------------------------------
 #bytes   #iterations   BW peak[Gb/sec]   BW average[Gb/sec]   MsgRate[Mpps]
 65536    11710083      0.00              383.72               0.731883
```

- Average bandwidth: 383.72 Gb/sec

- Payload size: 65,536 bytes

- Throughput ratio: 95.93% of the theoretical maximum (400 Gb/sec, two 200GbE ports)
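The reported figures are internally consistent, which is a useful sanity check on any ib_write_bw run: average bandwidth should equal message rate times message size. The short Python calculation below reproduces the 383.72 Gb/sec and 95.93% figures from the output above. Treat the 400 Gb/sec ceiling as raw line rate: RoCE, UDP, IP, and Ethernet headers consume part of it, so payload bandwidth cannot quite reach 100%.

```python
def rdma_bandwidth_gbps(msg_rate_mpps, msg_size_bytes):
    """Payload bandwidth in Gb/s from message rate (millions/s) and message size (bytes)."""
    bits_per_second = msg_rate_mpps * 1e6 * msg_size_bytes * 8
    return bits_per_second / 1e9


LINE_RATE_GBPS = 2 * 200  # two 200GbE ports

bw = rdma_bandwidth_gbps(0.731883, 65536)
print(f"Average bandwidth: {bw:.2f} Gb/sec")           # Average bandwidth: 383.72 Gb/sec
print(f"Throughput ratio:  {bw / LINE_RATE_GBPS:.2%}")  # Throughput ratio:  95.93%
```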

TCP/IP performance:

```
### Iperf Test (iperf3 Client) ###
$ ./iperf_client.sh 10.128.3.144 33-62 30
Results for port 5309:
Bandwidth: 8.804 Gbit/sec
Results for port 5311:
Bandwidth: 13.361 Gbit/sec
Results for port 5313:
Bandwidth: 8.800 Gbit/sec
Results for port 5315:
Bandwidth: 8.784 Gbit/sec
Results for port 5317:
Bandwidth: 15.693 Gbit/sec
Results for port 5319:
Bandwidth: 8.793 Gbit/sec
Results for port 5321:
Bandwidth: 8.785 Gbit/sec
Results for port 5323:
Bandwidth: 9.245 Gbit/sec
Results for port 5325:
Bandwidth: 10.461 Gbit/sec
Total Bandwidth across all streams: 354.924 Gbit/sec
### Iperf Test (iperf3 Client) Complete ###
```

- Total aggregated bandwidth: 354.924 Gbit/sec across all streams

- Individual stream bandwidth: 8.8 to 15.7 Gbit/sec

- Effective utilization: about 88.7% of the theoretical maximum (400 Gb/sec)
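To summarize per-stream results like these, a small parser helps. The Python sketch below assumes iperf_client.sh prints its results in exactly the format shown above (the script itself is not shown in this article). It extracts each stream's bandwidth and the reported total, then computes utilization of that total against the 400 Gb/sec line rate.

```python
import re

STREAM_RE = re.compile(r"Results for port (\d+):\s*Bandwidth:\s*([\d.]+) Gbit/sec")
TOTAL_RE = re.compile(r"Total Bandwidth across all streams:\s*([\d.]+) Gbit/sec")


def parse_iperf_summary(text):
    """Return ({port: Gbit/s}, reported total in Gbit/s, or None if absent)."""
    streams = {int(port): float(bw) for port, bw in STREAM_RE.findall(text)}
    total = TOTAL_RE.search(text)
    return streams, float(total.group(1)) if total else None


SAMPLE = """Results for port 5309:
Bandwidth: 8.804 Gbit/sec
Results for port 5317:
Bandwidth: 15.693 Gbit/sec
Total Bandwidth across all streams: 354.924 Gbit/sec"""

streams, total = parse_iperf_summary(SAMPLE)
print(min(streams.values()), max(streams.values()))  # 8.804 15.693
print(f"Utilization: {total / 400:.1%}")              # Utilization: 88.7%
```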

What makes these results particularly significant is the comparison to traditional networking architectures. Without NVIDIA BlueField-3 DPU offloading, networking processed in the host kernel typically achieves only a fraction of this performance, because software-based packet processing is limited by available CPU cycles.

Most importantly, these performance levels were achieved with minimal host CPU utilization since network processing was handled by the NVIDIA BlueField-3 DPUs. This architecture frees CPU resources for application workloads, improving overall cluster efficiency and application performance.

The near-line-rate performance demonstrates how DPU-enabled networking can transform containerized environments, especially for data-intensive workloads that require high-throughput networking.

Conclusion

The integration of NVIDIA DOCA Platform Framework with Red Hat OpenShift demonstrates the transformative potential of DPU-enabled networking for containerized environments. Through our implementation, we've seen how this architecture provides several key benefits:

- Performance improvements: Our benchmarks show near-line-rate throughput, with RDMA tests reaching 383.72 Gb/sec average bandwidth and iperf3 tests achieving a total bandwidth of 354.924 Gbit/sec across all streams.

- Enhanced security posture: The dual-cluster architecture creates clear separation between application workloads and network infrastructure, hardening security boundaries and reducing attack surfaces.

- Operational simplicity: Despite the sophisticated underlying architecture, DPF provides a familiar Kubernetes-based management experience through custom resources and operators.

- Future-ready infrastructure: This approach lays the groundwork for additional capabilities as NVIDIA BlueField-3 DPU technology continues to evolve, positioning organizations to better support increasingly demanding workloads like AI/ML.

Organizations seeking to optimize their containerized infrastructure for high-throughput networking should consider the DPU-enabled approach. The combination of Red Hat OpenShift's enterprise-grade container platform with NVIDIA BlueField DPUs and NVIDIA DOCA Platform Framework provides a powerful solution that delivers both performance and manageability.

As NVIDIA BlueField-3 DPU technology and the DOCA Platform Framework continue to evolve, we anticipate even more capabilities being offloaded from host systems, further enhancing the efficiency and security of containerized environments.