Red Hat Developer Blog

Here's our most recent blog content. Explore our featured monthly resource as well as our most recently published items. Don't miss the chance to learn more about our contributors.

Learn how to fine-tune AI pipelines in Red Hat OpenShift AI 3.3. Use Kubeflow...

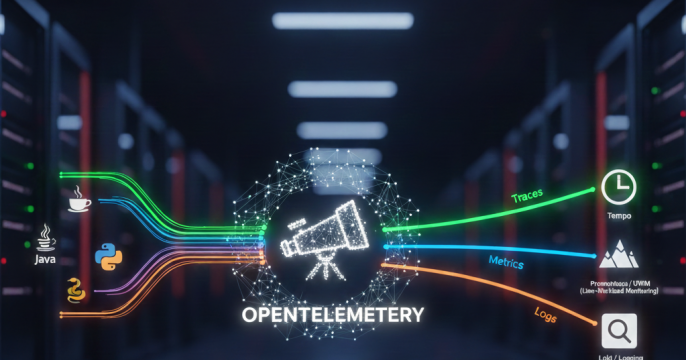

Learn about the Red Hat build of OpenTelemetry and its auto-instrumentation...

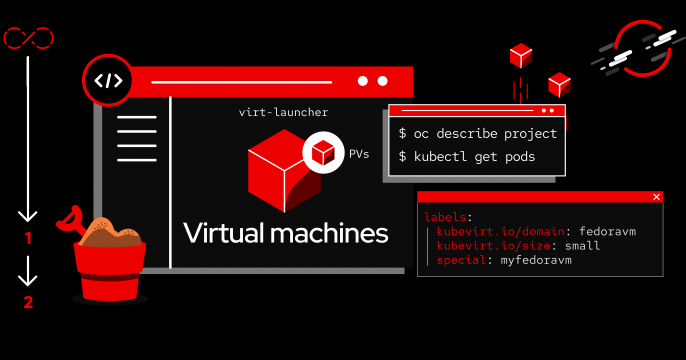

Learn how to simplify your transition from VMware vSphere 8 with Red Hat...

Learn how to migrate managed clusters from one Red Hat Advanced Cluster...

Build better RAG systems with SDG Hub. Generate high-quality...

Explore big versus small prompting in AI agents. Learn how Red Hat's AI...

OpenShift networking moves to real routing (CUDN/BGP), eliminating NAT and...

Learn how ATen serves as PyTorch's C++ engine, handling tensor operations...

Learn how to streamline Red Hat Enterprise Linux image creation and...