Deploying enterprise-grade runtime components into Kubernetes can be daunting. You might wonder:

- How do I fetch a certificate for my app?

- What's the syntax for autoscaling resources with the Horizontal Pod Autoscaler?

- How do I link my container with a database and with a Kafka cluster?

- Are my metrics going to Prometheus?

- Also, how do I scale to zero with Knative?

Operators can help with all of those needs and more. In this article, I introduce three Operators—Runtime Component Operator, Service Binding Operator, and Open Liberty Operator—that work together to help you deploy containers like a pro.

Runtime Component Operator: A personal assistant for your microservices

What better way to upgrade your microservice to executive status than to provide it with a personal assistant? Besides sounding cool, it will make your Kubernetes deployment a lot easier.

To get started, go to OperatorHub.io. Note the Operator categories in the drop-down list to the left of your screen. Click the link for Application Runtime Operators, then filter that to just the two Auto-Pilot entries under Capability Level. (These are Level 5, the highest level on OperatorHub.) You will see two options: Runtime Component Operator and Open Liberty Operator.

The two tools are related: Runtime Component Operator is the upstream project on which Open Liberty Operator is built. I'll introduce Runtime Component Operator first. Later in the article, I'll introduce a couple of Open Liberty Operator's unique features.

Overview of Runtime Component Operator

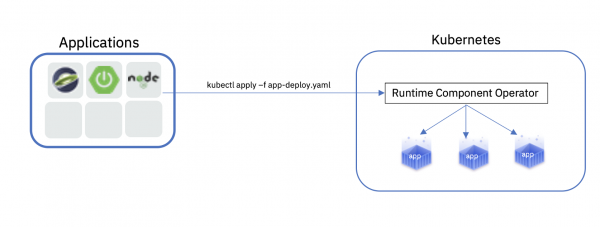

As shown in Figure 1, Runtime Component Operator lets you deploy any runtime container—Liberty, JBoss, Quarkus, Node.js, Spring Boot, and so on.

Runtime Component Operator can assist you with any of the following everyday deployment activities:

- Creating and deploying k8s resources.

- Toggling to create Knative or serverless resources.

- Deploying an image from an image stream (including integration with BuildConfig).

- Autoscaling with Horizontal Pod Autoscaler.

- Managing resource constraints.

- Integrating with Prometheus (it's built-in).

- Integrating with a certificate manager.

- Creating routes with Transport Layer Security (TLS).

- Implementing TLS between microservices.

- Probing for readiness or liveness.

- Setting up environment variables.

- Setting up easy two-step volume persistence.

- Integrating with kAppNav and Red Hat OpenShift's topology view.

- Binding app-to-app services.

- Binding app-to-resource services.

And that's just the shortlist. See the Runtime Component Operator User Guide for more details.
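
To make a few of these items concrete, here is a minimal sketch that combines autoscaling, resource constraints, a readiness probe, an environment variable, and persistent storage in a single RuntimeComponent. The field names reflect the v1beta1 schema as described in the Runtime Component Operator User Guide; the image name, probe path, and all values are placeholders, so verify them against the Operator version you install.

```yaml
apiVersion: app.stacks/v1beta1
kind: RuntimeComponent
metadata:
  name: my-app
  namespace: test
spec:
  # Placeholder image; substitute your own runtime container.
  applicationImage: quay.io/my-repo/my-app:1.0
  # Horizontal Pod Autoscaler settings.
  autoscaling:
    minReplicas: 1
    maxReplicas: 3
    targetCPUUtilizationPercentage: 50
  # The autoscaler needs a CPU request to measure utilization against.
  resourceConstraints:
    requests:
      cpu: 200m
      memory: 256Mi
    limits:
      cpu: 500m
      memory: 512Mi
  # Readiness probe; the path is an assumption about the application.
  readinessProbe:
    httpGet:
      path: /health/ready
      port: 9080
    initialDelaySeconds: 5
    periodSeconds: 10
  env:
    - name: DB_NAME
      value: "database"
  # Two-step persistence: a size and a mount path.
  storage:
    size: 1Gi
    mountPath: "/logs"
```

Keeping everything in one custom resource means the Operator, rather than a set of hand-written Deployment, Service, HorizontalPodAutoscaler, and PersistentVolumeClaim manifests, is responsible for keeping the pieces consistent.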

Next, let's look at how Runtime Component Operator helps you solve real-world problems.

Mutual TLS trust

Let's say that you wanted to deploy two or more microservices that have established mutual TLS trust. You plan on using certificates issued by a cluster certificate authority (CA). You could try to set up the deployment manually, or you could simply use a configuration like this:

    apiVersion: app.stacks/v1beta1
    kind: RuntimeComponent
    metadata:
      name: my-app
      namespace: test
    spec:
      applicationImage: registry.connect.redhat.com/ibm/open-liberty-samples:springPetClinic
      service:
        port: 9080
        certificate: {}
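
A similar certificate element can also be attached to a route, which covers the "routes with TLS" item from the earlier list. The sketch below is an assumption-laden illustration: it presumes a certificate manager is installed and that a ClusterIssuer named self-signed exists, and the host name, organization, and duration are hypothetical values.

```yaml
apiVersion: app.stacks/v1beta1
kind: RuntimeComponent
metadata:
  name: my-app
  namespace: test
spec:
  applicationImage: registry.connect.redhat.com/ibm/open-liberty-samples:springPetClinic
  service:
    port: 9080
  # Expose the service through a route.
  expose: true
  route:
    # Hypothetical host name for illustration only.
    host: my-app.example.com
    # TLS terminates at the router; traffic to the pod stays on port 9080.
    termination: edge
    # Certificate request handed to the certificate manager.
    certificate:
      duration: 8760h0m0s
      organization:
        - Example Org
      issuerRef:
        # Assumed to exist in the cluster already.
        name: self-signed
        kind: ClusterIssuer
```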

Image streams

For another example, let's say that you want to deploy an image stream (perhaps as the result of a BuildConfig) using Knative. This simple configuration achieves it:

    apiVersion: app.stacks/v1beta1
    kind: RuntimeComponent
    metadata:
      name: my-app
      namespace: test
    spec:
      applicationImage: my-namespace/my-image-stream:1.0
      createKnativeService: true
      expose: true

With one toggle (createKnativeService), you can switch from using vanilla Kubernetes resources to Knative (serverless) resources. The expose element automatically creates a route for your microservice.

Connecting RESTful services

Now let's say that you want to connect two or more RESTful services. Not a problem. Just let Runtime Component Operator know that you want to expose or consume a service, like this:

    apiVersion: app.stacks/v1beta1
    kind: RuntimeComponent
    metadata:
      name: my-provider
      namespace: test
    spec:
      applicationImage: appruntime/samples:service-binding-provider
      service:
        port: 9080
        provides:
          category: openapi
          context: /my-context

In this case, the YAML deploys the providing microservice and makes it bindable, so that other microservices can request the binding:

    apiVersion: app.stacks/v1beta1
    kind: RuntimeComponent
    metadata:
      name: my-consumer
      namespace: test
    spec:
      applicationImage: appruntime/samples:service-binding-consumer
      service:
        port: 9080
        consumes:
        - category: openapi
          name: my-provider

That's all you need to do. Runtime Component Operator will inject the requested binding information into the consuming microservice.

That's impressive, but what if you needed to bind to resources that Runtime Component Operator didn't own? Next, I'll show you how to use the new Service Binding Specification and Red Hat Service Binding Operator for just that scenario.

Service Binding Specification: Bring order to your bindings

A few months ago, a new community formed to standardize service bindings within Kubernetes. This collaboration has resulted in the first release candidate of the Service Binding Specification.

This specification focuses on the following questions related to service bindings:

- How do we make something bindable?

- What binding schema should a bindable service expose?

- How do we request a binding?

- How is the binding data injected or mounted into the microservice?

The specification expands each of these topics in detail—for example, it thoroughly illustrates the data model based on x-descriptors and annotations.

Red Hat Service Binding Operator

A specification is useful for creating a consistent path for service producers and consumers to follow. Having a reference implementation is even better. Red Hat Service Binding Operator works together with Runtime Component Operator to improve service binding in Kubernetes deployments.

Service Binding Operator is at the center of the binding: It watches for and manages incoming ServiceBindingRequest custom resources (CRs). These are YAML artifacts that describe the services we are binding.

Out-of-the-box, Service Binding Operator processes specification-compliant annotations from bindable services. It also has a very useful autoDetect mode that allows it to walk through owned Secrets, ConfigMaps, Routes, and so on. It can then expose the binding data extracted from those resources. This "zero code change" approach is a great way to work with existing services, even before they adhere to the new Service Binding Specification.

Runtime Component Operator's role is to detect (automatically or via a reference) a ServiceBindingRequest that links to one of its deployed microservices. It will then inject or mount the corresponding information.

Check out the "Service Binding" section of the Runtime Component Operator User Guide for more information about deploying Runtime Component Operator and Service Binding Operator together.

A quick demonstration

If you want to see how these two Operators work together, check out our sample application: Binding a PostgreSQL Operator database to a Spring Boot application. The PostgreSQL database is managed by its own Operator, and the Spring Boot app is deployed and managed by Runtime Component Operator using Service Binding Operator.

In the demo, we start by deploying a Database CR, which is used by the PostgreSQL Operator to create our database.

Next, we deploy a ServiceBindingRequest CR that gets processed by the Service Binding Operator.

Last, we deploy our RuntimeComponent CR (in this case, a Spring Boot microservice), which is managed by the Runtime Component Operator.
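
For orientation, the ServiceBindingRequest in the second step might look roughly like the sketch below. It assumes the apps.openshift.io/v1alpha1 API used by early Service Binding Operator releases. The resource names (my-app, my-db) and the Database CRD group and version are hypothetical stand-ins, not values taken from the sample, so consult the sample repository for the real manifests.

```yaml
apiVersion: apps.openshift.io/v1alpha1
kind: ServiceBindingRequest
metadata:
  name: my-binding-request
  namespace: test
spec:
  # The application that should receive the binding data.
  applicationSelector:
    group: app.stacks
    version: v1beta1
    resource: runtimecomponents
    resourceRef: my-app   # hypothetical RuntimeComponent name
  # The backing service that supplies the binding data.
  backingServiceSelector:
    group: postgresql.baiju.dev   # assumed CRD group of the PostgreSQL Operator
    version: v1alpha1
    kind: Database
    resourceRef: my-db    # hypothetical Database CR name
```

The request names both ends of the binding by group, version, and resource name, which lets the two Operators locate the database and the microservice without either one knowing about the other in advance.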

Future enhancements

Future enhancements will include being able to generate a ServiceBindingRequest CR from a given RuntimeComponent CR. Figure 2 shows the pattern we want to achieve:

Figure 2. Service Binding Operator generates a ServiceBindingRequest CR from a given RuntimeComponent CR.

The application code could then safely navigate all of its bindings via the top-level SERVICE_BINDINGS environment variable, which would look something like this:

    SERVICE_BINDINGS = {
      "bindingKeys": [
        {
          "name": "KAFKA_USERNAME",
          "bindAs": "envVar"
        },
        {
          "name": "KAFKA_PASSWORD",
          "bindAs": "volume",
          "mountPath": "/platform/bindings/secret/"
        }
      ]
    }

Open Liberty Operator: Optimize your deployments

At the beginning of this article, I noted that Runtime Component Operator is the upstream project for Open Liberty Operator. Everything you've seen so far, in terms of capabilities and bindings, applies to Open Liberty Operator.

Even better, if you know the runtime is Open Liberty, you can use a configuration in Open Liberty Operator for features that are specific to that runtime. We'll look at two of these capabilities.

Liberty's SSO integration

Open Liberty Operator supports Liberty's single sign-on (SSO) Social Media Login feature. Because of this, it can delegate authenticating an application user to an external provider such as Google, Facebook, LinkedIn, Twitter, GitHub, or any OpenID Connect (OIDC) or OAuth 2.0 client.

Your first step is to enable the Social Media Login in your code. Once you have done that, you can create the appropriate social media login secrets (such as a GitHub clientID) and configure your OpenLibertyApplication deployment to wire it all together. You can find detailed information and code samples in the Open Liberty Operator User Guide.
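
As a rough sketch of that wiring for GitHub, the two resources below pair a Secret with an OpenLibertyApplication. The Secret naming convention, the key format, and the sso.github.hostname field are assumptions based on the Open Liberty Operator User Guide, and the image, port, and credential values are placeholders.

```yaml
# Secret holding the GitHub OAuth credentials (placeholder values).
apiVersion: v1
kind: Secret
metadata:
  # Assumed naming convention: <OpenLibertyApplication name>-olapp-sso
  name: my-app-olapp-sso
  # Same namespace as the OpenLibertyApplication below.
  namespace: test
type: Opaque
stringData:
  # Assumed key format: <provider>-clientId and <provider>-clientSecret
  github-clientId: replace-with-client-id
  github-clientSecret: replace-with-client-secret
---
apiVersion: openliberty.io/v1beta1
kind: OpenLibertyApplication
metadata:
  name: my-app
  namespace: test
spec:
  # Placeholder image with the Social Media Login feature enabled.
  applicationImage: quay.io/my-repo/my-app:1.0
  service:
    port: 9443
  sso:
    github:
      hostname: github.com
```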

Day-2 Operations

The Day-2 Operations feature is a popular Open Liberty runtime feature for gathering traces and triggering JVM dumps. In Open Liberty Operator, each of these actions has its own specialized custom resource definition (CRD), which keeps the user experience minimal.

Here's a quick look at how you would set a trace:

    apiVersion: openliberty.io/v1beta1
    kind: OpenLibertyTrace
    metadata:
      name: example-trace
    spec:
      podName: my-pod
      traceSpecification: "*=info:com.ibm.ws.webcontainer*=all"
      maxFileSize: 20
      maxFiles: 5

As you can see, the code is straightforward and intuitive for Open Liberty users. Visit the Open Liberty Operator User Guide for complete configuration details.
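
Triggering a JVM dump follows the same pattern. The sketch below assumes the OpenLibertyDump kind and its include list as described in the Open Liberty Operator User Guide; the pod name is a placeholder, and the accepted dump types should be checked against your Operator version.

```yaml
apiVersion: openliberty.io/v1beta1
kind: OpenLibertyDump
metadata:
  name: example-dump
spec:
  # Name of the running pod to collect the dump from (placeholder).
  podName: my-pod
  # Dump types to collect; assumed values.
  include:
    - thread
    - heap
```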

Ready to get started?

Both Runtime Component Operator and Open Liberty Operator are available from the Red Hat OpenShift OperatorHub. You can quickly install them cluster-wide, or you could use a namespace-scoped installation via the Operator Lifecycle Manager (OLM) framework. Both Operators are open source, so there are no usage restrictions. Grab your favorite runtime component and give it a try with one of these Operators—you'll notice the enterprise boost right away.

When you are ready to move into a production scenario and want support, I encourage you to check out Red Hat Runtimes, which contains Open Liberty.

Last updated: June 22, 2023