In the past few years, the popularity and adoption of containers have skyrocketed, and the Kubernetes container orchestration platform has been widely adopted as well. With these changes, a new set of challenges has emerged when dealing with applications deployed on Kubernetes clusters in the real world. One challenge is how to handle communication between multiple clusters that might be on different networks (even private ones), behind firewalls, and so on.

One possible solution to this problem is to use a Virtual Application Network (VAN), which is sometimes referred to as a Layer 7 network. In a nutshell, a VAN is a logical network that is deployed at the application level and introduces a new layer of addressing for fine-grained application components with no constraints on the network topology. For a much more in-depth explanation, please read this excellent article.

So, what is Skupper? In the project's own words:

Skupper is a layer seven service interconnect. It enables secure communication across Kubernetes clusters with no VPNs or special firewall rules.

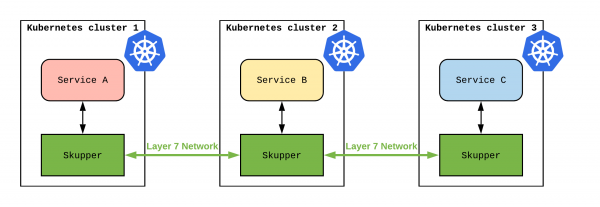

Skupper is a VAN implementation. Its high-level architecture is depicted in Figure 1:

Once Skupper is installed in each Kubernetes cluster and connected with the others, Service A from cluster one can communicate with Service B from cluster two, and with Service C from cluster three (and the other way around). The clusters do not need to be all public or on the same infrastructure. They can be behind a firewall, or even on private networks not accessible from outside (providing that they can reach outside to connect themselves to the skupper VAN).

Skupper architecture

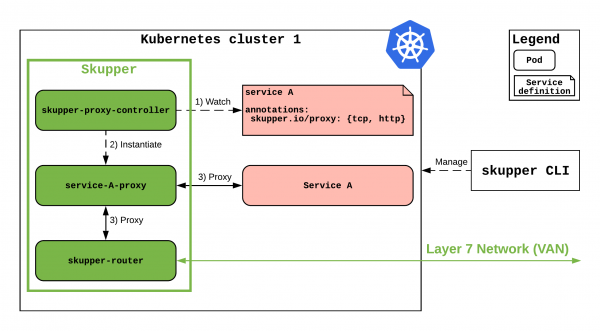

Given the overall architecture, how does the green Skupper component in Figure 1 work? Figure 2 lists the Skupper components for Kubernetes cluster one and describes the interactions between them:

The first thing to notice is that Skupper can be managed using the dedicated skupper CLI command. Next is that when you first install Skupper in a Kubernetes and OpenShift cluster, two components are deployed: skupper-router and skupper-proxy-controller. The former is an instance of Apache Qpid, an open source Advanced Message Queuing Protocol (AMQP) router that is responsible for creating the VAN. Qpid helps Kubernetes and OpenShift clusters communicate by tunneling HTTP and TCP traffic into AMQP.

If you are familiar with the Operator pattern, think of `skupper-proxy-controller` as an Operator: it watches for services annotated with `skupper.io/proxy` and, for each of them, instantiates a `service-*-proxy` pod. That pod tunnels the protocol spoken by Service A (i.e., HTTP or TCP) into AMQP, and the `skupper-router` does the rest.
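To make the Operator analogy concrete, here is a toy bash sketch of the filtering decision the controller makes for each service. It is not Skupper's code and does not talk to a cluster: it reads a hypothetical `<service-name> <annotation-value>` list, where `-` means the service has no `skupper.io/proxy` annotation, and prints the services that would get a proxy pod. The service names in the example are also hypothetical.

```bash
#!/usr/bin/env bash
# Toy illustration, NOT Skupper's implementation: the controller only creates
# a proxy for services that carry the skupper.io/proxy annotation.
# Input (hypothetical format): one "<service-name> <annotation-value>" pair per
# line, where "-" stands for "no skupper.io/proxy annotation".
# Output: "<service-name> <protocol>" for each service that would be proxied.
services_to_proxy() {
  local name proto
  while read -r name proto; do
    [ -z "$name" ] && continue       # ignore blank lines
    [ "$proto" = "-" ] && continue   # not annotated: leave it alone
    echo "$name $proto"              # annotated: would get a proxy pod
  done
}

# Example with hypothetical services: only the annotated ones are selected.
services_to_proxy <<'EOF'
echo tcp
frontend http
database -
EOF
# prints:
# echo tcp
# frontend http
```

The real controller reacts to watch events from the Kubernetes API rather than reading a list, but the selection rule is the same idea: the annotation, not the service itself, is what opts a service into the VAN.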

Use case: A local service exporter with Skupper

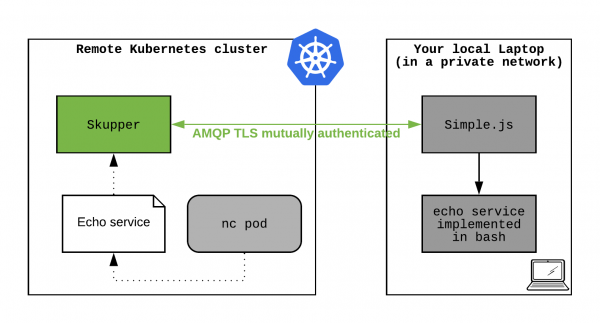

Another challenge that comes with developing cloud-native applications coding and testing effectively in a hybrid environment. Some of the services that compose your application might be, at development time, hosted locally on your laptop (or in your dev environment), while others might be deployed on a remote Kubernetes cluster. How would you easily make your local services accessible to the remote cluster without setting up a VPN or another "complicated" network configuration?

One option is to leverage the Skupper mechanism to export a local service running outside of Kubernetes to a remote Kubernetes cluster. Figure 3 sketches what we want to achieve—a simple TCP echo service running on our laptop that is usually connected to a firewalled private network:

We want to access this service from a pod running on the remote Kubernetes cluster that has no network access to the laptop network.

Note: A number of manual steps are needed at the moment because this use case is not yet implemented as a `skupper` CLI subcommand. There is an open issue tracking this feature; once it is implemented, all of the following steps will be replaced by a single `skupper` CLI invocation.

Let us look at the steps you will need to follow (for now):

- Start by downloading the latest version of the `skupper` CLI from https://github.com/skupperproject/skupper-cli/releases. Make it executable and add it to your `PATH`.
- Log in to your remote cluster with `oc` or `kubectl`.
- Install Skupper on the remote cluster:

```bash
$ skupper init --id public
```

- Make the `skupper-router` accessible from outside the cluster by exposing the `skupper-messaging` service through an OpenShift route with TLS passthrough:

```bash
$ oc apply -f - << EOF
kind: Route
apiVersion: route.openshift.io/v1
metadata:
  name: skupper-messaging
spec:
  to:
    kind: Service
    name: skupper-messaging
    weight: 100
  port:
    targetPort: amqps
  tls:
    termination: passthrough
    insecureEdgeTerminationPolicy: None
  wildcardPolicy: None
EOF
```

- Create a service on the cluster representing the local echo service. This service has no implementation of its own (its `dummy: selector` matches no pods); its `skupper.io/proxy: tcp` annotation is what tells Skupper to proxy it:

```bash
$ oc apply -f - << EOF
kind: Service
apiVersion: v1
metadata:
  name: echo
  annotations:
    skupper.io/proxy: tcp
spec:
  ports:
  - protocol: TCP
    port: 2000
    targetPort: 2000
  selector:
    dummy: selector
EOF
```

- Clone the `skupper-proxy` project:

```bash
$ git clone git@github.com:skupperproject/skupper-proxy.git
```

- Go to that project's `bin/` directory:

```bash
$ cd skupper-proxy/bin
```

- Extract the certificates needed to mutually authenticate your connection:

```bash
$ oc extract secret/skupper
```

- Modify `connection.json` to look like this:

```json
{
  "scheme": "amqps",
  "host": "",
  "port": "443",
  "verify": false,
  "tls": {
    "ca": "ca.crt",
    "cert": "tls.crt",
    "key": "tls.key",
    "verify": false
  }
}
```

where `host` must be set to the hostname of the `skupper-messaging` route, which you can obtain with:

```bash
$ oc get route skupper-messaging -o=jsonpath='{.spec.host}{"\n"}'
```

- In a separate shell that you keep running, start a simple TCP echo service on local port 2000 (this assumes a netcat flavor such as Nmap's `ncat`, which supports the `-k` and `-c` options):

```bash
$ nc -l 2000 -k -c 'xargs -n1 echo'
```

- Connect to the Skupper instance running on the remote cluster. Note that the port must match the one used by the local echo service in the previous step (i.e., 2000), and `echo` must match the name of the service you created on the cluster earlier:

```bash
$ node ./simple.js 'amqp:echo=>tcp:2000'
```
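The manual `connection.json` edit in the steps above can be scripted. The following is a minimal bash sketch, assuming the same field values shown in that step; the helper name `write_connection_json` is hypothetical and not part of `skupper-proxy`. It writes the file for a given router host and refuses to run with an empty host.

```bash
#!/usr/bin/env bash
# Hypothetical helper, not part of skupper-proxy: writes connection.json with
# the values from the manual step, filling in the router host.
# Assumes ca.crt, tls.crt and tls.key were extracted into the same directory
# with "oc extract secret/skupper".
write_connection_json() {
  local host="$1"
  local out="${2:-connection.json}"
  if [ -z "$host" ]; then
    echo "usage: write_connection_json <route-host> [output-file]" >&2
    return 1
  fi
  cat > "$out" <<EOF
{
  "scheme": "amqps",
  "host": "${host}",
  "port": "443",
  "verify": false,
  "tls": {
    "ca": "ca.crt",
    "cert": "tls.crt",
    "key": "tls.key",
    "verify": false
  }
}
EOF
}

# Usage, from skupper-proxy/bin after logging in with oc:
#   write_connection_json "$(oc get route skupper-messaging -o=jsonpath='{.spec.host}')"
```

Failing on an empty host matters here: if `oc` is not logged in or the route is missing, the command substitution yields nothing, and writing `"host": ""` would only surface later as a confusing connection error from `simple.js`.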

Now everything is set. Go to the remote cluster, open a shell in a pod that has the Netcat command `nc` installed (such as `busybox:latest`), and connect to the `echo` service on port 2000:

```bash
$ nc echo 2000
```

Type a word, and you should see it echoed back as it travels through the Skupper proxy pod for `echo` -> `skupper-router` -> `simple.js` on your laptop -> the TCP echo service on your laptop, and back!
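The server side of the echo service is just `xargs -n1 echo`, which runs `echo` once per whitespace-separated word of its input. You can check that transformation locally, without `nc` or a cluster; this bash sketch wraps it in a hypothetical `echo_words` function:

```bash
#!/usr/bin/env bash
# The echo service runs this pipeline for each connection: xargs splits its
# input on whitespace and, because of -n1, runs echo once per word.
echo_words() {
  xargs -n1 echo
}

printf 'hello skupper\n' | echo_words
# prints:
# hello
# skupper
```

So a multi-word line typed into `nc` comes back one word per line. Also note that xargs gives quotes and backslashes special meaning, so input such as `don't` makes xargs report an error instead of echoing it; stick to plain words when testing.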

Conclusions and next steps

This project is still under heavy development and improves every day. Check out its progress on the official site or directly in the code on GitHub. In particular, here is the issue that tracks all of the work involved in supporting the local service exporter use case directly as a Skupper CLI command.

Last updated: June 29, 2020