Large language models (LLMs) today rely on synthetic data at every stage: pre-training with billions of synthetic tokens (e.g., Cosmopedia), instruction-tuning with synthetic supervised fine-tuning (SFT) datasets (e.g., LAB, Tülu 3, Orca), and evaluation with benchmarks powered by LLM-as-a-judge (e.g., MT-Bench, AlpacaEval).

Why use synthetic data?

- Strong open models. Open-source models now rival closed-source performance on many tasks, making them effective teacher models.

- Cheap and fast. Human-labeled instruction data is expensive to collect, while synthetic data generation pipelines scale quickly.

- Diverse and controllable. Human expertise is limited and hard to scale; synthetic data enables targeted coverage of the domains and skills you need.

Synthetic data has shifted from nice-to-have to fundamental. But most teams are still hacking together one-off scripts, which slows innovation, hurts reproducibility, and makes scaling difficult.

That’s why we built SDG Hub.

Introducing SDG Hub

SDG Hub is an open framework to build, compose, and scale synthetic data pipelines with modular blocks. The following table summarizes its key capabilities.

| What it does | Why it matters |
| --- | --- |
| Build from reusable blocks (LLM-powered or traditional) | Replace ad hoc scripts with a repeatable framework |
| Compose flows in Python or YAML | Scale data generation with asynchronous execution and monitoring |
| Automatically discover data generation algorithms | Extend with custom blocks to fit your domain |

Blocks: The building units

At the core of SDG Hub are blocks: self-contained, reusable units that each transform data in a specific way. Every block shares a consistent interface (Input → Process → Output), so you can stack blocks together to form complex flows.
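To make the Input → Process → Output contract concrete, here is a conceptual sketch in plain Python. It is not SDG Hub's implementation: `ToyBlock` and `stack` are hypothetical names, records are plain dicts, and a string-uppercasing lambda stands in for an LLM call.

```python
from dataclasses import dataclass
from typing import Callable

Record = dict[str, object]


@dataclass
class ToyBlock:
    """Hypothetical block: reads input_cols, transforms them, writes output_cols."""
    name: str
    input_cols: list[str]
    output_cols: list[str]
    fn: Callable[..., tuple]

    def __call__(self, records: list[Record]) -> list[Record]:
        out = []
        for rec in records:
            # Input: pull only the declared columns
            args = [rec[col] for col in self.input_cols]
            # Process: apply this block's transformation
            results = self.fn(*args)
            # Output: copy the record and add the declared output columns
            new_rec = dict(rec)
            new_rec.update(zip(self.output_cols, results))
            out.append(new_rec)
        return out


def stack(*blocks: ToyBlock) -> Callable[[list[Record]], list[Record]]:
    """Compose blocks left to right: each block's output feeds the next."""
    def run(records: list[Record]) -> list[Record]:
        for block in blocks:
            records = block(records)
        return records
    return run


build_prompt = ToyBlock("build_prompt", ["question"], ["prompt"],
                        lambda q: (f"Answer this question: {q}",))
fake_llm = ToyBlock("fake_llm", ["prompt"], ["answer"], lambda p: (p.upper(),))

pipeline = stack(build_prompt, fake_llm)
rows = pipeline([{"question": "what is sdg?"}])
print(rows[0]["answer"])  # ANSWER THIS QUESTION: WHAT IS SDG?
```

Because every block reads and writes named columns, blocks can be swapped or reordered freely as long as each block's input columns exist by the time it runs.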

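In SDG Hub, an LLM-powered block and a traditional block can be defined like this:

```python
from sdg_hub.core.blocks import LLMChatBlock, JSONStructureBlock

# LLM-powered block: fills the prompt template with each question and stores the model's reply
chat_block = LLMChatBlock(
    block_name="question_answerer",
    model="openai/gpt-4o",
    input_cols=["question"],
    output_cols=["answer"],
    prompt_template="Answer this question: {question}"
)

# Traditional block: combines several columns into one structured JSON column
structure_block = JSONStructureBlock(
    block_name="json_structurer",
    input_cols=["summary", "entities", "sentiment"],
    output_cols=["structured_analysis"],
    ensure_json_serializable=True
)
```

From blocks to flows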

Flows are pipelines built by chaining blocks. They act as an orchestration layer that combines multiple blocks into a single pipeline, and you can define them in Python or YAML. Flows provide optimized execution with asynchronous parallelism, along with debugging support and dry-run validation. The following YAML defines the blocks of a summarization flow:

blocks:

- block_type: "PromptBuilderBlock"

block_config:

block_name: "build_summary_prompt"

input_cols: ["text"]

output_cols: ["summary_prompt"]

- block_type: "LLMChatBlock"

block_config:

block_name: "generate_summary"

input_cols: ["summary_prompt"]

output_cols: ["raw_summary"]

max_tokens: 1024

temperature: 0.3

async_mode: true

- block_type: "TextParserBlock"

block_config:

block_name: "parse_summary"

input_cols: ["raw_summary"]

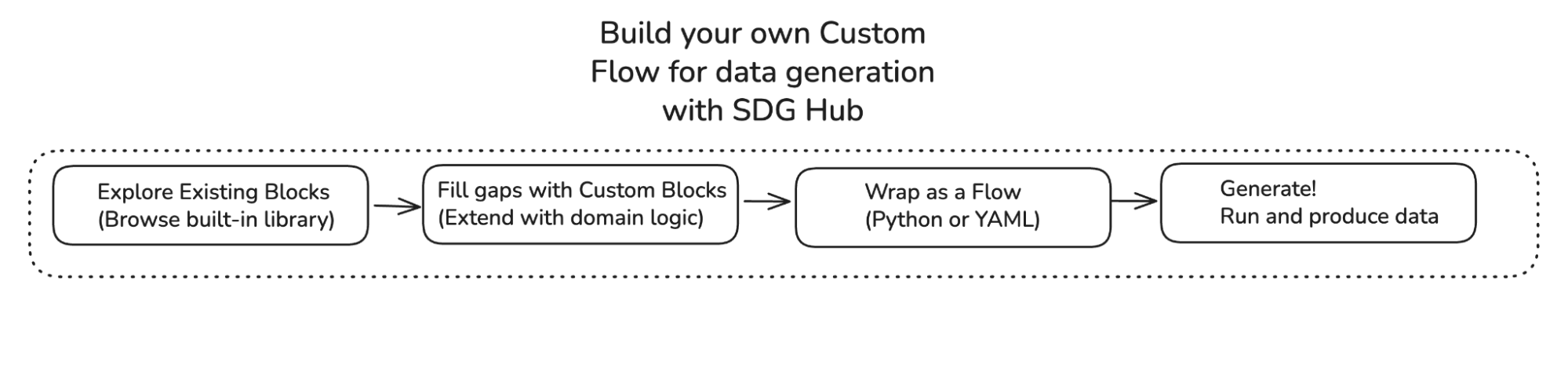

output_cols: ["summary"]SDG Hub offers pre-built flows for common use cases such as knowledge tuning. Users can also build their own custom flows (see Figure 1).
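The dry-run validation and asynchronous parallelism described for flows can be pictured with a short plain-Python sketch. This is an illustration under stated assumptions, not SDG Hub's implementation: `validate_flow`, `run_llm_block`, and `fake_llm` are hypothetical, the configs are plain dicts mirroring the YAML blocks above, and a stub coroutine replaces a real model call.

```python
import asyncio

# Plain-dict mirror of the three YAML blocks above (only the fields needed here)
FLOW = [
    {"block_name": "build_summary_prompt", "input_cols": ["text"], "output_cols": ["summary_prompt"]},
    {"block_name": "generate_summary", "input_cols": ["summary_prompt"], "output_cols": ["raw_summary"]},
    {"block_name": "parse_summary", "input_cols": ["raw_summary"], "output_cols": ["summary"]},
]


def validate_flow(flow: list[dict], dataset_cols: set[str]) -> list[str]:
    """Dry run: report blocks whose input columns will not exist, without calling any model."""
    available = set(dataset_cols)
    errors = []
    for cfg in flow:
        missing = [c for c in cfg["input_cols"] if c not in available]
        if missing:
            errors.append(f"{cfg['block_name']}: missing columns {missing}")
        # Assume each block produces its declared outputs for the blocks after it
        available.update(cfg["output_cols"])
    return errors


async def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a network-bound LLM request."""
    await asyncio.sleep(0.01)
    return f"summary of: {prompt}"


async def run_llm_block(prompts: list[str], max_concurrency: int = 8) -> list[str]:
    """Issue requests concurrently, at most max_concurrency at a time; output order matches input."""
    sem = asyncio.Semaphore(max_concurrency)

    async def one(prompt: str) -> str:
        async with sem:
            return await fake_llm(prompt)

    return list(await asyncio.gather(*(one(p) for p in prompts)))


print(validate_flow(FLOW, {"text"}))      # []
print(validate_flow(FLOW, {"document"}))  # ["build_summary_prompt: missing columns ['text']"]
answers = asyncio.run(run_llm_block(["doc A", "doc B", "doc C"]))
print(answers)  # ['summary of: doc A', 'summary of: doc B', 'summary of: doc C']
```

The validation pass catches a misnamed column before any tokens are spent, and the semaphore bounds how many requests are in flight at once, which is one common way to stay within a model server's rate limits.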

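To fit your own domain, you can also write custom blocks by subclassing BaseBlock and registering them with BlockRegistry:

```python
from sdg_hub.core.blocks.base import BaseBlock
from sdg_hub.core.blocks.registry import BlockRegistry

# Define a custom block and register it with a name, category, and description
@BlockRegistry.register("MyCustomBlock", "custom", "Description of my block")
class MyCustomBlock(BaseBlock):
    def generate(self, samples, **kwargs):
        # Add domain-specific processing logic here
        return samples
```

Applications of SDG Hub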

SDG Hub has several applications:

- Customizing LLMs on domain knowledge: Fine-tune open-weight models with synthetic, domain-rich data. Flows are model-agnostic: we provide a recommended teacher model, but you can swap in any teacher model.

- Reasoning data generation: Use reasoning-capable teacher models (for example, GPT-OSS, Qwen, DeepSeek) to generate datasets with reasoning traces. This helps models learn step-by-step reasoning in complex tasks.

- Multilingual data generation for knowledge tuning: SDG Hub offers a pre-built flow for data generation in Japanese. Users can build other flows for various target languages.
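For the reasoning use case, a teacher's inline chain of thought usually has to be separated from its final answer before it becomes a training record. The sketch below assumes the `<think>...</think>` convention used by reasoning models such as DeepSeek-R1 and Qwen3 (other teachers may use different formats); `split_reasoning` is a hypothetical helper, not an SDG Hub block.

```python
import re

# Assumed format: an optional <think>...</think> block followed by the final answer
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> dict[str, str]:
    """Split a teacher completion into a reasoning trace and a final answer."""
    match = THINK_RE.search(raw)
    if match is None:
        # No trace found: treat the whole completion as the answer
        return {"reasoning": "", "answer": raw.strip()}
    reasoning = match.group(1).strip()
    answer = raw[match.end():].strip()
    return {"reasoning": reasoning, "answer": answer}


record = split_reasoning("<think>\n12 * 3 = 36, plus 4 is 40.\n</think>\nThe answer is 40.")
print(record["reasoning"])  # 12 * 3 = 36, plus 4 is 40.
print(record["answer"])     # The answer is 40.
```

Keeping the trace and the answer in separate columns lets you decide later whether to train on both or on the answer alone.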

Getting started

SDG Hub provides pre-built flows for common tasks like knowledge tuning, composable pipelines for custom generation and filtering, and example notebooks that show end-to-end use cases. The notebooks also cover document pre-processing, to ingest any type of document, and data mixing, to produce training-ready datasets for different models. SDG Hub recommends default teacher models along with other compatible ones, and you can experiment with any teacher model and swap it into new or existing flows.
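Data mixing combines several generated datasets in chosen proportions. A minimal sketch, assuming each dataset is a list of dict records and the mix is defined by relative weights; `mix_datasets` and the example weights are hypothetical and not SDG Hub's mixing utility.

```python
import random


def mix_datasets(sources: dict[str, list[dict]], weights: dict[str, float],
                 total: int, seed: int = 0) -> list[dict]:
    """Draw about `total` records across sources in proportion to `weights`."""
    rng = random.Random(seed)  # seeded so the mix is reproducible
    weight_sum = sum(weights.values())
    mixed = []
    for name, records in sources.items():
        # Number of records this source contributes (rounded per source)
        n = round(total * weights[name] / weight_sum)
        if n <= len(records):
            picked = rng.sample(records, n)      # without replacement
        else:
            picked = rng.choices(records, k=n)   # upsample a small source
        # Tag each record with its origin for later auditing
        mixed.extend({**r, "source": name} for r in picked)
    rng.shuffle(mixed)
    return mixed


knowledge = [{"q": f"k{i}"} for i in range(100)]
reasoning = [{"q": f"r{i}"} for i in range(20)]
mixed = mix_datasets({"knowledge": knowledge, "reasoning": reasoning},
                     {"knowledge": 7, "reasoning": 3}, total=100, seed=42)
print(len(mixed))  # 100
```

Because counts are rounded per source, awkward weights can leave the total off by a record or two; a largest-remainder allocation would make it exact.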

What’s next

Future updates for SDG Hub will bring new algorithms for synthetic data generation and filtering, synthetic data generation for evaluating retrieval-augmented generation (RAG) pipelines, and teacher model evaluation to compare the quality of the LLMs used to generate synthetic data.

Synthetic data is everywhere in the LLM pipeline. With SDG Hub, you can generate it in a way that is modular, scalable, and production-ready. Its composable building blocks take you from raw documents to structured data and, finally, to instruction datasets.

With Red Hat AI 3, you can run SDG Hub's pre-built, validated pipelines or your own custom pipelines on Red Hat OpenShift AI. This capability is available as a Technology Preview feature with a supported build of the SDG Hub Python library.

Check out this video to learn more about new ways to build data with SDG Hub: