Sometimes mistakes are opportunities, and wasting half of your LLM performance benchmarking budget, as we discovered in part 1, was one heck of an opportunity. In part 2, we covered how we built the ideal metric to define success (the Soft-C-Index metric) and our augmented dataset.

Now, we're finally ready to build the oversaturation detector (OSD) itself.

The first time we tried to build our OSD was before we had our 4,506-run dataset, so we tried to model the oversaturation problem theoretically. We designed a complex, elegant algorithm based on our whiteboard assumptions. Once we had real data, our entire theoretical model broke. When put to the test, our "smart" modeled algorithm was one of the worst performers. It was time to throw the theory out and follow the data.

Building an oversaturation detector

We adopted a process of iterative error analysis. The loop is simple:

1. Start with a simple baseline algorithm.

2. Evaluate it and find where it fails (the errors).

3. Form a hypothesis about why it failed.

4. Create a simple new rule to fix that one error.

5. Go back to step 2.

We started by analyzing the errors. Here's what the data showed us.

Insight 1: The messy start

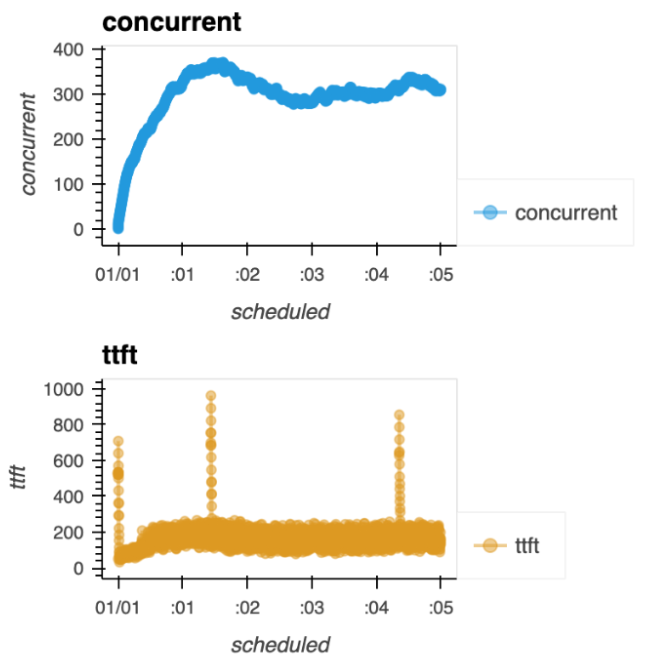

Our first baseline algorithms were a mess, constantly firing false alerts (stopping good runs) in the first minute. We looked at the Time to First Token (TTFT) and total concurrent requests plots of these good runs to see why.

The clue

Look at the concurrent (blue) and TTFT (yellow) plots in Figure 1. The first minute is erratic and noisy before it ramps up and stabilizes. Our algorithm was mistaking this initial chaos for real saturation.

The fix

We built our first rule: ignore the initial phase, specifically the first 25% of requests. This simple rule dramatically reduced our false alerts.
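The warm-up rule is simple to express in code. The following is a minimal sketch, assuming each run is available as a list of per-request records with a start time; the `Request` type and the `drop_warmup` helper are hypothetical names for illustration, not part of the detector described here. Rounding the cutoff up with `math.ceil` is also our assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    """Hypothetical per-request record (assumption, not the detector's real schema)."""
    start_s: float  # seconds since the benchmark started
    ttft_s: float   # time to first token, in seconds


def drop_warmup(requests: list[Request], fraction: float = 0.25) -> list[Request]:
    """Discard the earliest `fraction` of requests (by start time) so the
    noisy ramp-up phase cannot trigger an alert."""
    ordered = sorted(requests, key=lambda r: r.start_s)
    cutoff = math.ceil(len(ordered) * fraction)  # rounding up is our assumption
    return ordered[cutoff:]


reqs = [Request(start_s=float(i), ttft_s=0.1 * i) for i in range(8)]
kept = drop_warmup(reqs)
print(len(kept), kept[0].start_s)  # 6 2.0
```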

Insight 2: The indistinguishable shoot-up

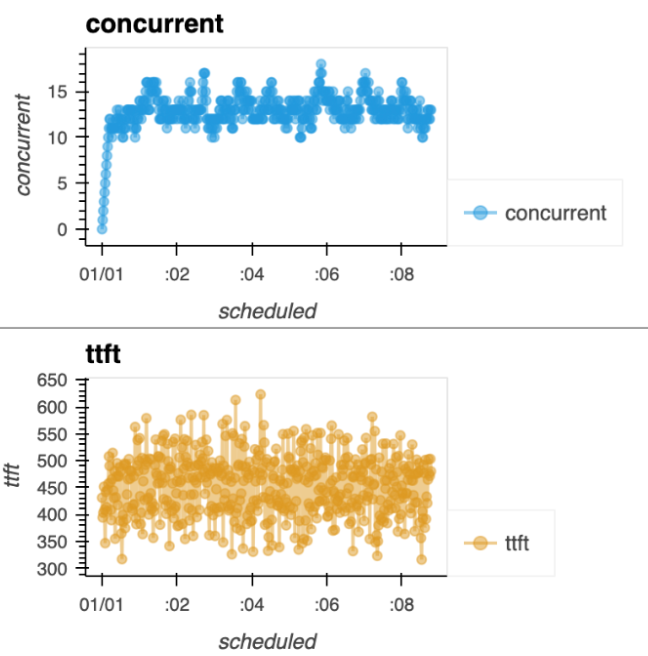

Our next error analysis showed we were still flagging good runs, even after the 25% fix.

The clue

We found a strange "shoot-up" phase: before the very first request is completed, the number of concurrent requests increases perfectly linearly (Figure 2). During this brief window, a good run and a bad run look identical.

The fix

We added a grace period. Before it can raise an alert, the algorithm must now wait at least 30 seconds and until the median TTFT exceeds 2.5 seconds, which shows the server is actually delaying responses.
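A minimal sketch of the grace-period check, assuming the detector knows the elapsed benchmark time and the TTFT values of the requests that have received their first token so far. The function name `grace_period_over` is hypothetical; the 30-second and 2.5-second thresholds come from the text above.

```python
import statistics

MIN_ELAPSED_S = 30.0     # wait at least 30 seconds (from the text)
MIN_MEDIAN_TTFT_S = 2.5  # median TTFT must exceed 2.5 seconds (from the text)


def grace_period_over(elapsed_s: float, ttfts_s: list[float]) -> bool:
    """Hypothetical helper: True once alerts are allowed, i.e. enough time has
    passed AND the median TTFT shows the server is really delaying responses."""
    if elapsed_s < MIN_ELAPSED_S or not ttfts_s:
        return False
    return statistics.median(ttfts_s) > MIN_MEDIAN_TTFT_S


print(grace_period_over(12.0, [3.0, 4.0, 5.0]))  # False: too early
print(grace_period_over(45.0, [0.4, 0.6, 0.9]))  # False: TTFT still healthy
print(grace_period_over(45.0, [2.0, 3.0, 4.0]))  # True: median 3.0 s > 2.5 s
```

Using the median rather than the mean keeps a handful of slow outlier requests from ending the grace period on their own.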

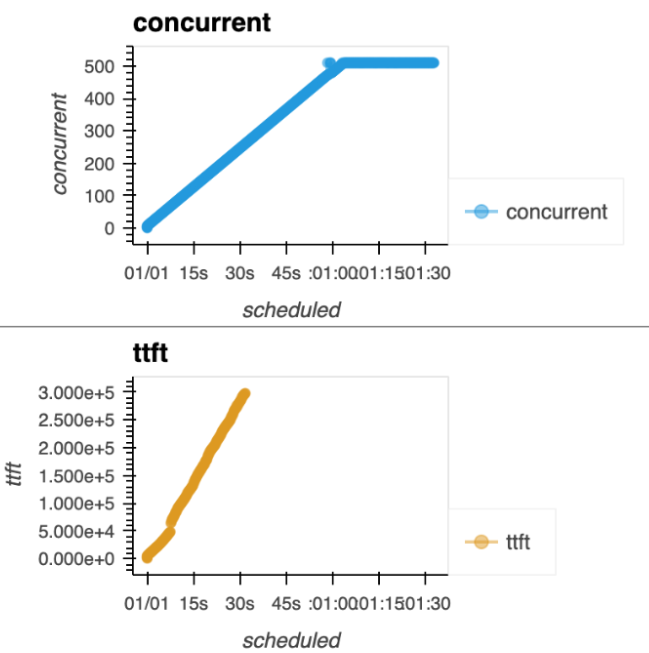

Insight 3: The "true panic" signal

With our false alerts now mostly fixed, we had the opposite problem: we were missing real saturation.

The clue

The graph in Figure 3 is the "panic" we described in part 1. Look at the concurrent (blue) plot and the TTFT (yellow) plot. They aren't just messy; both are climbing steadily and relentlessly upward. This is the true signal of oversaturation.

The fix

We added our final, primary rule. An alert is only raised when both TTFT and request concurrency show a consistently positive slope (which we measure using confidence intervals).
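The exact confidence-interval construction isn't spelled out here, so the following is only one plausible sketch: fit an ordinary least-squares line of each metric against elapsed time and require the whole 95% confidence interval of the slope to lie above zero. The normal-approximation interval (z = 1.96) and the function names `slope_ci`, `consistently_rising`, and `should_alert` are assumptions made for illustration.

```python
import math

Z_95 = 1.96  # assumption: two-sided 95% normal quantile (a t-quantile is stricter for small n)


def slope_ci(xs: list[float], ys: list[float], z: float = Z_95) -> tuple[float, float]:
    """OLS slope of ys against xs with a normal-approximation confidence interval."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need at least 3 paired points")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("xs must not all be equal")
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    # Residual variance uses n - 2 degrees of freedom (slope and intercept were fitted).
    sse = sum((y - mean_y - slope * (x - mean_x)) ** 2 for x, y in zip(xs, ys))
    se = math.sqrt(sse / (n - 2) / sxx)
    return slope - z * se, slope + z * se


def consistently_rising(xs: list[float], ys: list[float]) -> bool:
    """True only when the entire slope interval lies above zero."""
    low, _ = slope_ci(xs, ys)
    return low > 0


def should_alert(times_s: list[float], ttfts_s: list[float], concurrency: list[float]) -> bool:
    """Primary rule: TTFT and concurrency must both be consistently rising."""
    return consistently_rising(times_s, ttfts_s) and consistently_rising(times_s, concurrency)


times = [float(t) for t in range(10)]
noise = [0.1 if t % 2 else -0.1 for t in range(10)]  # small alternating jitter
rising_ttft = [1.0 + 0.5 * t + e for t, e in zip(range(10), noise)]
rising_conc = [10.0 + 3.0 * t for t in range(10)]
flat_conc = [50.0 + 10 * e for e in noise]  # noisy but flat: alternates 49 / 51
print(should_alert(times, rising_ttft, rising_conc))  # True
print(should_alert(times, rising_ttft, flat_conc))    # False
```

Requiring the interval's lower bound, rather than the fitted slope itself, to be positive is what makes the check robust to noise: the flat series above has a slightly positive fitted slope by chance, yet its interval still straddles zero.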

Our final, "handcrafted" algorithm

And that's it. Our best algorithm isn't a complex model; it's a set of three simple, hard-won rules:

- Ignore the first 25% of requests to get past the "messy" start.

- Wait at least 30 seconds, and until the median TTFT exceeds 2.5 seconds, to get past the "indistinguishable shoot-up."

- Alert only if both TTFT and concurrency show a consistently positive slope.
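To illustrate how the three rules compose, here is a hypothetical streaming detector that re-checks them each time a request is recorded. It assumes each sample carries the elapsed time, that request's TTFT, and the concurrency at that moment, with samples arriving in time order. It applies the 25% warm-up cut to the requests observed so far, takes the median over the post-warm-up TTFTs, and uses a normal-approximation slope interval; all of these, and the class and method names, are illustrative assumptions rather than the actual implementation.

```python
import math
import statistics
from dataclasses import dataclass, field


@dataclass
class OversaturationDetector:
    """Hypothetical streaming detector that combines the three rules."""

    warmup_fraction: float = 0.25   # rule 1: ignore the first 25% of requests
    min_elapsed_s: float = 30.0     # rule 2: wait at least 30 seconds...
    min_median_ttft_s: float = 2.5  # ...and until median TTFT exceeds 2.5 s
    z: float = 1.96                 # rule 3: assumed normal-approximation 95% CI
    # Each sample: (elapsed seconds, TTFT in seconds, concurrent requests).
    samples: list[tuple[float, float, float]] = field(default_factory=list)

    def add(self, elapsed_s: float, ttft_s: float, concurrency: float) -> bool:
        """Record one request and return True if an alert should be raised."""
        self.samples.append((elapsed_s, ttft_s, concurrency))
        return self.check()

    def check(self) -> bool:
        # Rule 1: drop the first 25% of the requests observed so far.
        cutoff = math.ceil(len(self.samples) * self.warmup_fraction)
        kept = self.samples[cutoff:]
        if len(kept) < 3:
            return False  # too few points for a slope interval
        times, ttfts, conc = (list(col) for col in zip(*kept))
        # Rule 2: grace period on elapsed time and median TTFT.
        if times[-1] < self.min_elapsed_s:
            return False
        if statistics.median(ttfts) <= self.min_median_ttft_s:
            return False
        # Rule 3: both slopes must have a confidence interval entirely above zero.
        return self._rising(times, ttfts) and self._rising(times, conc)

    def _rising(self, xs: list[float], ys: list[float]) -> bool:
        """True if the lower bound of the OLS slope interval is positive."""
        n = len(xs)
        mx, my = sum(xs) / n, sum(ys) / n
        sxx = sum((x - mx) ** 2 for x in xs)
        if sxx == 0:
            return False
        slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
        sse = sum((y - my - slope * (x - mx)) ** 2 for x, y in zip(xs, ys))
        se = math.sqrt(sse / (n - 2) / sxx)
        return slope - self.z * se > 0


# Synthetic oversaturating run: one request every 2 s, TTFT and concurrency both climbing.
detector = OversaturationDetector()
for i in range(30):
    t = 2.0 * i
    if detector.add(elapsed_s=t, ttft_s=0.5 + 0.1 * t, concurrency=5 + t):
        print(f"alert at {t:.0f}s")  # alert at 32s
        break
```

In this synthetic run, both metrics climb from the start, so the slope rule would pass almost immediately; the alert is held back until the 30-second and 2.5-second grace-period conditions are both satisfied.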

This simple, rules-based algorithm was the winner.

Did it work?

Yeah! A couple of months ago, we needed to run another suite of LLM performance benchmarks, and this time we were equipped with an oversaturation detector. It promptly stopped every oversaturated benchmark, reducing our costs by more than a factor of 2 and letting us run twice as many tests on the same infrastructure.

Conclusion

And with that, we've wrapped up this three-part technical deep dive.

In part 1, you learned how oversaturation can ruin your LLM benchmarking day. In part 2, we discussed how to build an evaluation metric (Soft-C-Index). In this final part 3, you saw how to use data-driven detective work to build an algorithm from scratch. We wanted to share our learning journey with you, and we appreciate you taking that journey with us.

What's next?

We're working on integrating this algorithm into the Red Hat AI ecosystem to make LLM benchmarking more efficient and cost-effective for our customers. Stay tuned!