In today's fast-paced digital landscape, businesses collect vast amounts of data through customer interactions, product sales, SEO clicks, and more. However, the true value of this data lies in its ability to drive business intelligence. In this article, let's see how an event-driven architecture can leverage the capabilities of an AI/ML engine to unlock the true potential of data and transform it into valuable business intelligence.

Data is gold dust

Data is a critical business asset, especially organized data that businesses can use to drive critical decisions. With the right tools, data can help businesses make decisions with confidence and add value for the organization and its customers. AI/ML makes data productive by automating the extraction of value from it.

Respond to data and events in real-time

There is no disputing that AI/ML and data are critical for organizations. Imagine what we could achieve if we could respond to this data in real time!

Apache Kafka is a distributed streaming platform that excels at handling high-throughput, real-time data streams. With Kafka, you can ingest and process data as it is generated, which is crucial for real-time AI/ML applications that require immediate insights and decision-making. Because Kafka is distributed, it can also scale out, which is essential for AI/ML workloads that process massive datasets or handle concurrent data streams.

Events can trigger AI/ML processing, allowing you to react to changes in data and perform real-time analysis and decision-making. Kafka's messaging model makes building event-driven architectures much easier.

Simplify event-driven architectures with OpenShift Serverless

By bringing Red Hat OpenShift Serverless (Red Hat's offering based on Knative Eventing) into the mix, you can abstract away the semantics of streaming. Knative Eventing makes it easier for developers to integrate various event sources without handling each one manually. The systems involved communicate with each other using the standards-based CloudEvents format.

The story

Let me introduce a fictitious retail store that we'll call Globex, which would like to gain a deeper understanding of its customers, products, and overall market landscape.

One way to achieve this is to analyze the product reviews submitted by their customers on their e-commerce website (Figure 1). These reviews can provide deep insights into whether the customer's sentiment is positive, neutral, or negative. A dashboard can provide temporal sentiment analysis that can further help understand the impact of various events such as holiday seasons, changes to a product's pricing, discounts, etc., thereby helping to drive deep insights into customer behavior.

At the same time, they would also like to moderate the reviews for abusive/foul language. This step would set the stage to extend this moderation to prevent sensitive information such as credit card numbers from being published.

Let's now see how this use case can be implemented using an event-driven architecture.

The architecture and components

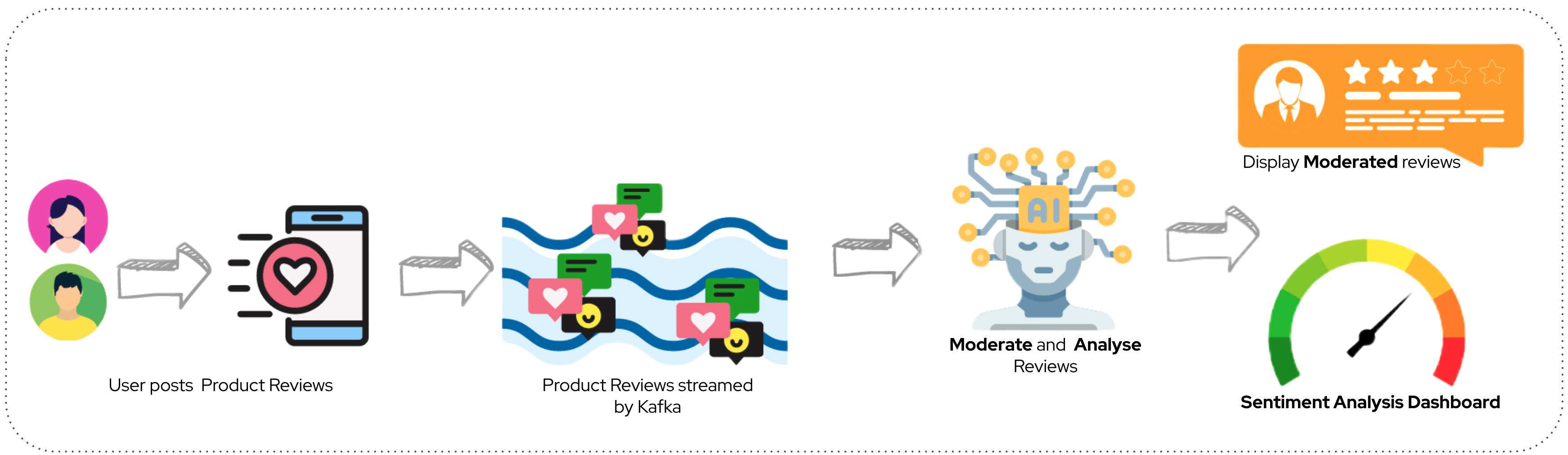

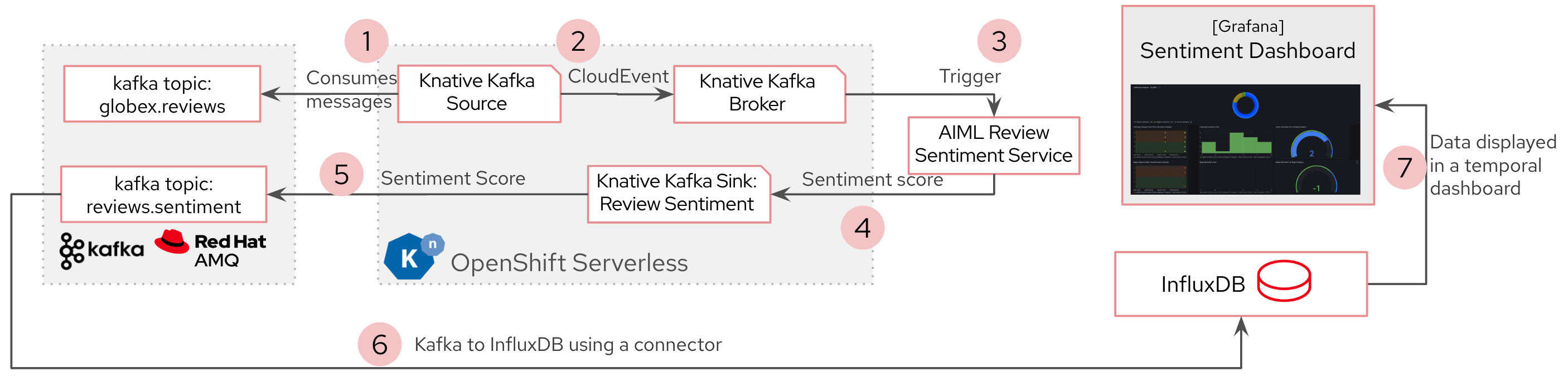

Let us step into each module as this story unfolds and look at the entire data journey through the system components to understand how the various pieces of technology come together to build a robust event-driven architecture, as shown in Figure 2.

The developer team decides to adopt an event-driven architecture pattern, with Apache Kafka for data streaming and Quarkus for building intelligent applications that use AI/ML to derive actionable decisions. Product reviews flow into Kafka as messages, which are then consumed by intelligent applications that use AI/ML to moderate each review and analyze its sentiment. The moderated messages are then persisted to be displayed on the e-commerce website, and the sentiment analysis is used to build a dashboard.

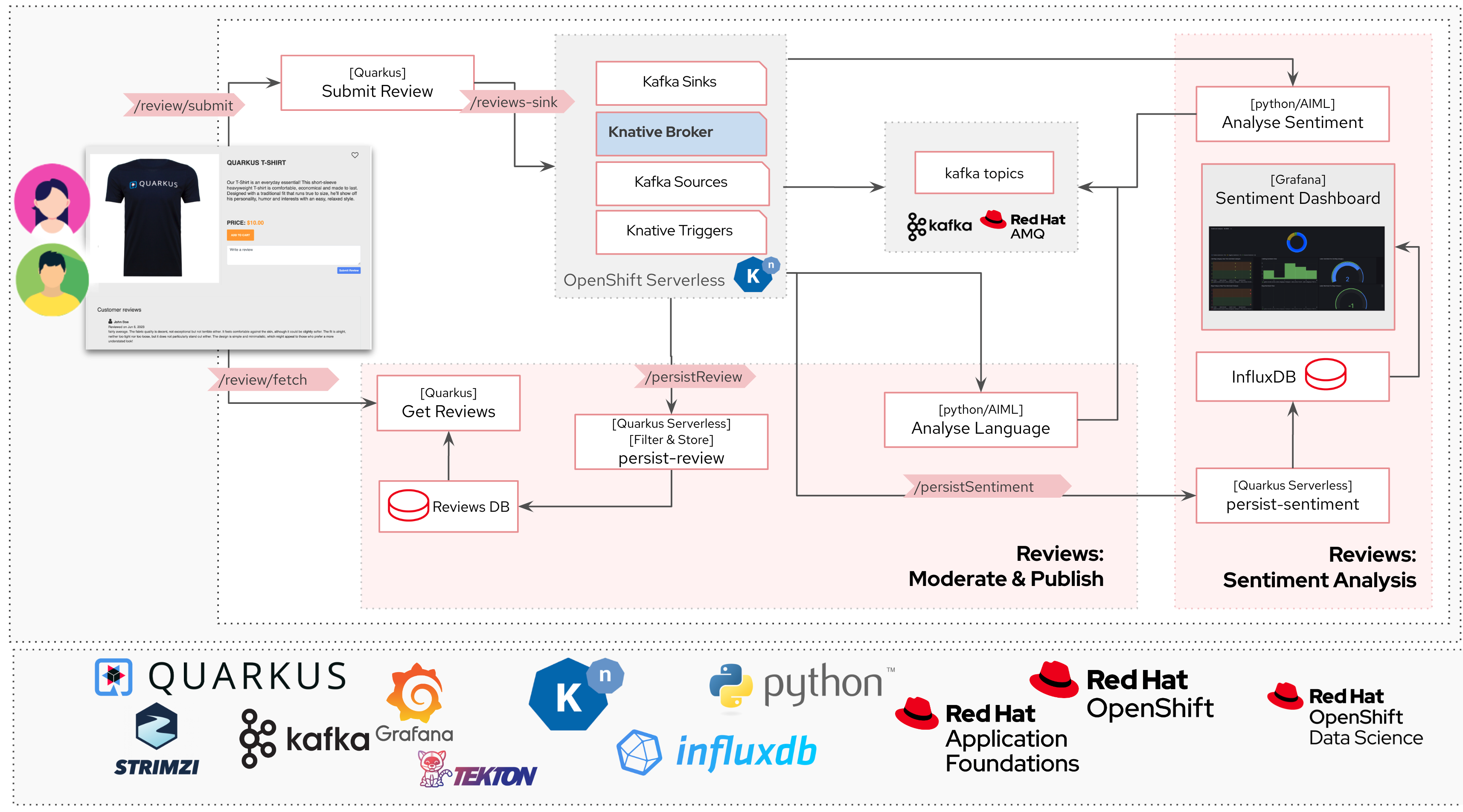

Figure 3 shows the overall architecture, which includes a number of open source components brought together to build this solution. We will look at data flow and analysis step-by-step.

Step 1: Product review submitted by user flows into Kafka

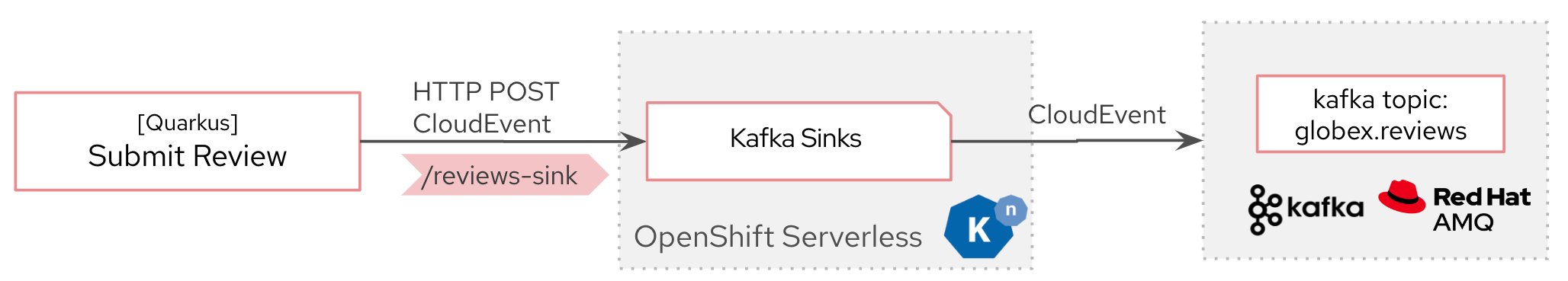

- Each product review submitted needs to be pushed into Kafka for further processing (see Figure 4). Typically, this would be done by a microservice, which would POST the review text to a Kafka producer that knows how to communicate with Kafka.

- With the adoption of Knative Eventing, this becomes a simple HTTP call to a sink implementation called KafkaSink.

- A KafkaSink (reviews-sink) receives incoming events via HTTP from the Submit Review service and sends events to a configurable Apache Kafka topic.

Tech details

Red Hat AMQ Streams is easily deployed on Red Hat OpenShift using Strimzi. The Kubernetes operators make setting this up very easy for the team.

The content of each product review must be submitted to the Kafka sink as a CloudEvent. CloudEvents is fast becoming the industry-standard specification for describing event data. A CloudEvent carries attributes such as source and type (ce-source and ce-type when sent as HTTP headers), which help route the events to the correct consumers. Quarkus has built-in support for CloudEvents, which makes it easy to adopt this specification.
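To make this concrete, here is a minimal sketch of what the Submit Review service might send to the KafkaSink. It uses the CloudEvents HTTP binary content mode, where the event attributes travel as ce-* headers and the review itself is the request body. The sink URL, the source and type values, and the review fields are assumptions made for illustration; they are not taken from the solution's code.

```python
import json
import urllib.request
import uuid
from datetime import datetime, timezone


def build_review_request(review, source, event_type):
    """Return (headers, body) for a binary-mode CloudEvent over HTTP."""
    headers = {
        # The four required CloudEvents attributes.
        "ce-specversion": "1.0",
        "ce-id": str(uuid.uuid4()),
        "ce-source": source,
        "ce-type": event_type,
        # Optional timestamp attribute in RFC 3339 format.
        "ce-time": datetime.now(timezone.utc).isoformat(),
        "content-type": "application/json",
    }
    body = json.dumps(review).encode("utf-8")
    return headers, body


def post_review(sink_url, review, source, event_type):
    """POST the review to the sink; sink_url is a hypothetical KafkaSink address."""
    headers, body = build_review_request(review, source, event_type)
    request = urllib.request.Request(
        sink_url, data=body, headers=headers, method="POST"
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

The service never touches a Kafka client library here: from its point of view, publishing a review is just an HTTP POST.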

At the end of this step, the product reviews, in the form of CloudEvents, are stored in Kafka topics with the help of the Knative Eventing sink.

Step 2: Reviews sent to the intelligent applications for moderation

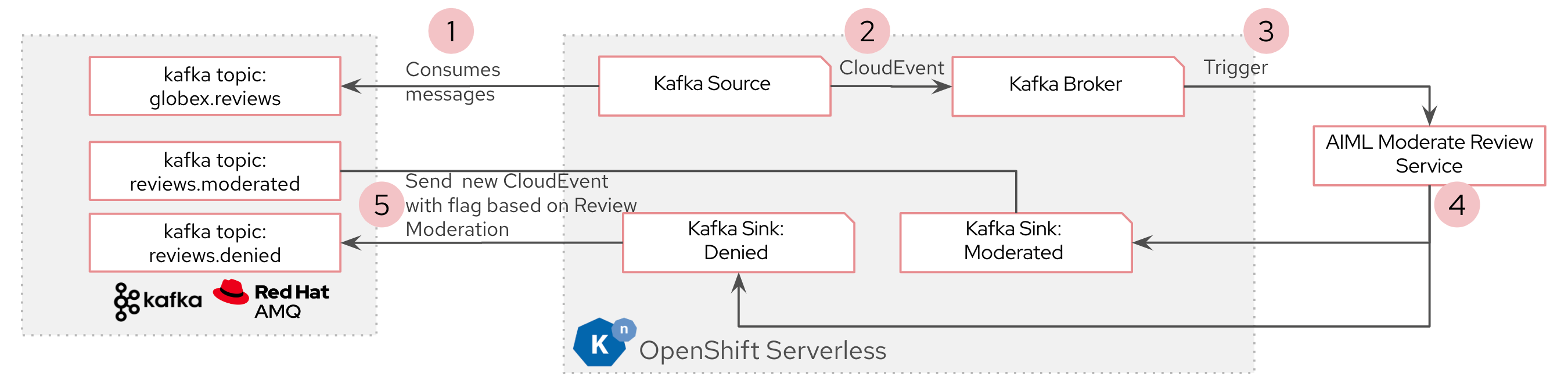

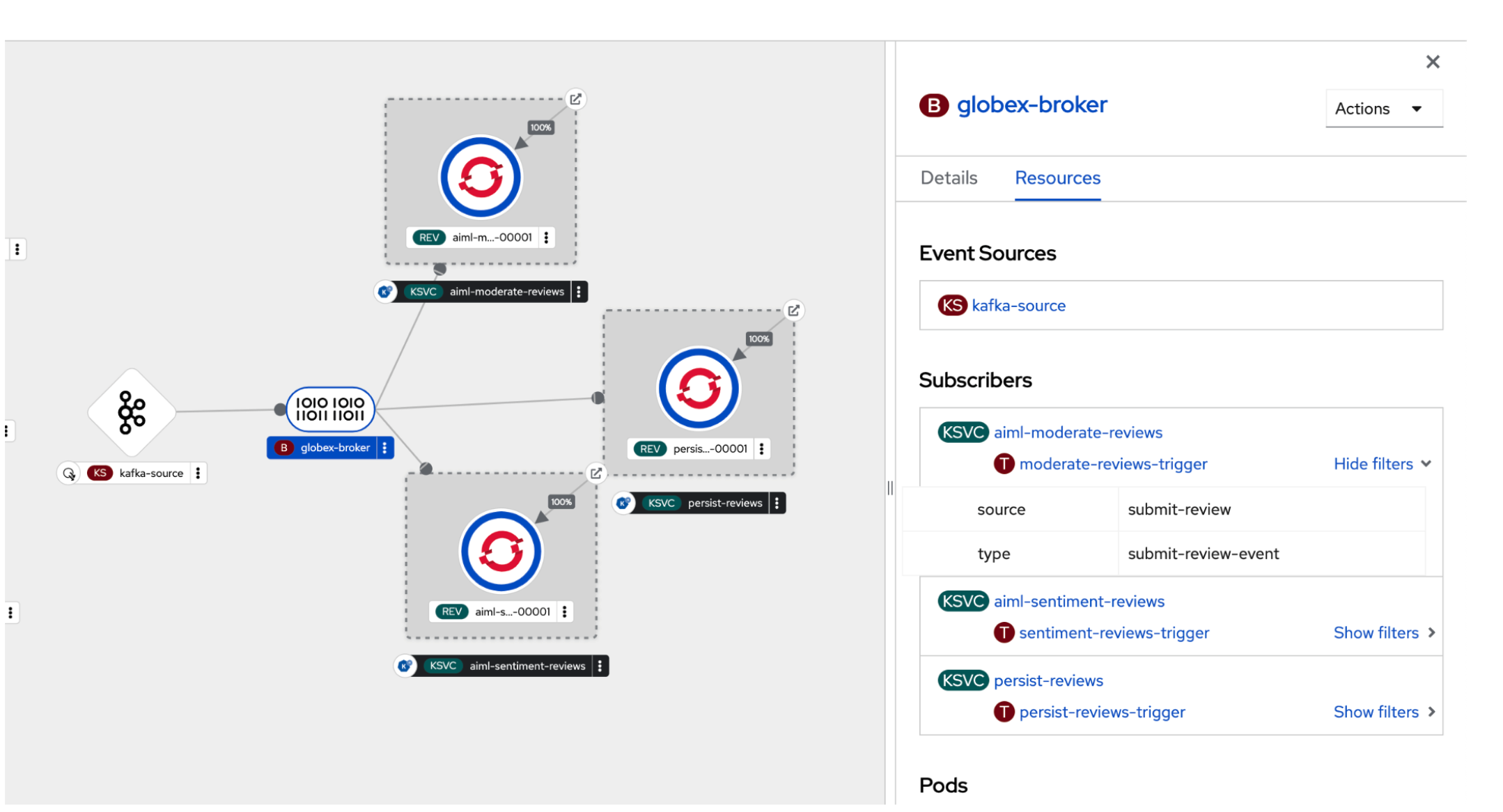

Now that the product review is in Kafka, it is ready to be moderated (Figure 5). The Knative broker globex-broker receives the CloudEvent (product review) from a Kafka source and sends it to the AI/ML Moderate Reviews Knative (Python) service using Knative's Kafka brokers and triggers. This service analyzes the review text and POSTs another CloudEvent carrying a flag (abusive or not) to a KafkaSink.

Tech details

KafkaSource reads messages stored in existing Apache Kafka topics and sends those messages as CloudEvents through HTTP to a Knative broker for Kafka (Figure 6). Knative brokers have a native integration with Kafka for storing and routing events. Based on the ce-source and ce-type attributes of the Kafka (CloudEvent) message, the broker can then trigger specific subscribers.
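The routing idea can be sketched in a few lines of Python. A Knative trigger declares a filter of attribute values, and an event is delivered to the subscriber only when every attribute in the filter matches exactly; an empty filter matches every event. This is a simulation of that matching rule, not Knative code, and the trigger names, subscribers, and type and source values below are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Trigger:
    name: str
    subscriber: str
    # Attribute filter, using CloudEvents attribute names (type, source).
    filter_attributes: dict = field(default_factory=dict)


def matching_subscribers(event_attributes, triggers):
    """Return the subscribers whose trigger filter matches the event exactly."""
    matched = []
    for trigger in triggers:
        wanted = trigger.filter_attributes
        if all(event_attributes.get(key) == value for key, value in wanted.items()):
            matched.append(trigger.subscriber)
    return matched


# Hypothetical triggers for the Globex broker.
TRIGGERS = [
    Trigger("moderate", "moderate-reviews",
            {"type": "review.submitted", "source": "submit-review"}),
    Trigger("sentiment", "review-sentiment",
            {"type": "review.submitted", "source": "submit-review"}),
    Trigger("persist", "persist-reviews",
            {"type": "review.moderated", "source": "moderate-reviews"}),
]
```

With these assumed triggers, a submitted review fans out to both the moderation and the sentiment services, while a moderated review reaches only the persistence service.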

The AI/ML Moderate Reviews service uses the Hate-speech-CNERG/english-abusive-MuRIL AI/ML model to identify whether the product review is abusive or not.
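The shape of the moderation step might look like the sketch below. The classifier is passed in as a plain function so that the example stays runnable without downloading a model; in the real service, that function would wrap the model named above (for example, through a Hugging Face text-classification pipeline, which is an assumption on my part). The review fields, the outgoing type and source values, and the keyword stub are all hypothetical.

```python
def moderate_review(review, is_abusive):
    """Return (attributes, data) for the CloudEvent emitted after moderation.

    is_abusive is any callable that takes the review text and returns a bool.
    """
    data = dict(review)  # copy, so the incoming event is left untouched
    data["abusive"] = bool(is_abusive(review["text"]))
    attributes = {
        # Hypothetical attribute values used to route the moderated event.
        "type": "review.moderated",
        "source": "moderate-reviews",
    }
    return attributes, data


def keyword_stub(text):
    """Stand-in classifier for illustration only; not the MuRIL model."""
    blocked = {"awfulword", "nastyword"}
    return any(word in blocked for word in text.lower().split())
```

Keeping the model behind a simple callable also makes it easy to swap in a different model later without changing the event-handling code.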

Step 3: Moderated reviews persisted in database

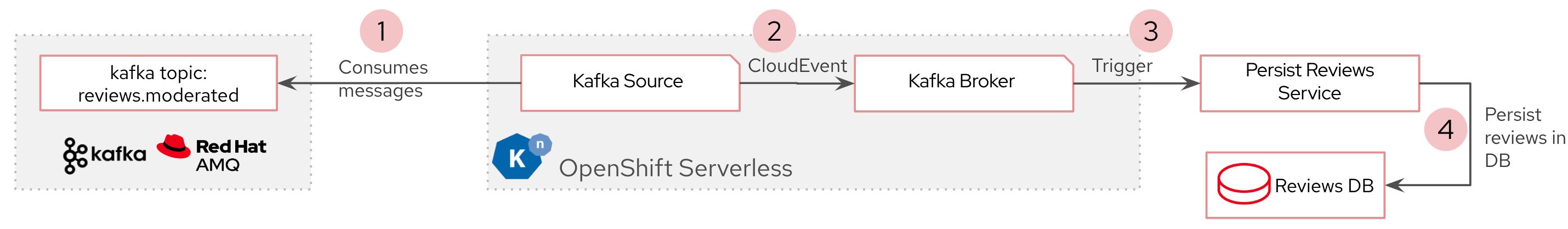

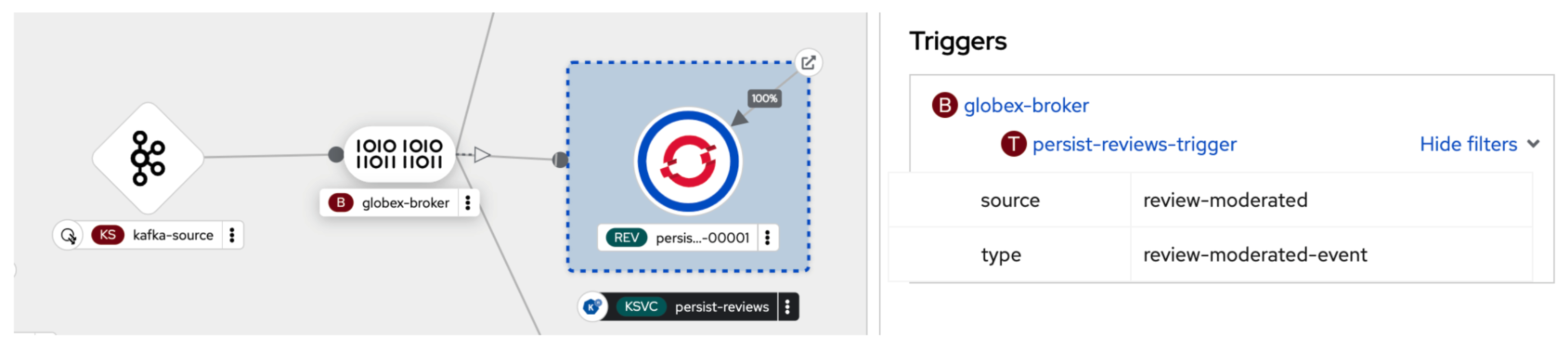

The moderated reviews need to be persisted in a database so that they can be displayed on the e-commerce portal (Figure 7). The Knative broker globex-broker triggers a call to the Persist Reviews Knative (Quarkus) service, which in turn stores the review in an appropriate database.

Tech details

The Persist Reviews service is written using Quarkus and Panache (based on Hibernate), and the reviews are persisted in PostgreSQL in this case. The dev team might consider using MongoDB instead of PostgreSQL because a product review and its details can be treated as a document. Figure 8 shows how the trigger is mapped to the ce-source and ce-type from the review CloudEvent.
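The actual service uses Quarkus, Panache, and PostgreSQL, but the persistence logic is easy to illustrate in Python. The sketch below uses an in-memory SQLite database purely as a stand-in so that it runs anywhere. The table layout, the column names, and the choice to hide abusive reviews from the storefront query are assumptions, not details from the solution.

```python
import sqlite3


def create_schema(conn):
    """Create a hypothetical review table."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS review (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            product_id TEXT NOT NULL,
            review_text TEXT NOT NULL,
            abusive INTEGER NOT NULL
        )
        """
    )


def persist_review(conn, review):
    """Store one moderated review and return its generated id."""
    with conn:  # commits on success, rolls back on error
        cursor = conn.execute(
            "INSERT INTO review (product_id, review_text, abusive) VALUES (?, ?, ?)",
            (review["product_id"], review["text"], int(review["abusive"])),
        )
    return cursor.lastrowid


def visible_reviews(conn, product_id):
    """Return the review texts that may be shown for a product (assumed rule)."""
    rows = conn.execute(
        "SELECT review_text FROM review "
        "WHERE product_id = ? AND abusive = 0 ORDER BY id",
        (product_id,),
    ).fetchall()
    return [row[0] for row in rows]
```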

Step 4: Sentiment analysis of each review and build dashboard

The submitted product reviews also need to be analyzed to identify a sentiment score (Figure 9). The Knative broker globex-broker triggers a call to the Review Sentiment Knative (Python) service, which assigns a score (from -1 to 4) depending on the tone of the review. A score of -1 means that the customer is extremely dissatisfied with the product, and a 4 is the top score for customer satisfaction.

Tech details

The Review Sentiment Knative (Python) service (Figure 10) uses an existing AI/ML model, nlptown/bert-base-multilingual-uncased-sentiment. InfluxDB is chosen as the time-series database, and Grafana integrates easily with InfluxDB to build excellent dashboards.
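One way a sentiment score could reach InfluxDB is as a point written in InfluxDB line protocol, which has the shape measurement,tags fields timestamp. The sketch below only formats such a line. The measurement name, the product_id tag, the integer score field, and the idea that the service writes line protocol directly are all assumptions for illustration.

```python
def _escape(value):
    """Escape the characters that are special in line protocol tags."""
    for char in (",", "=", " "):
        value = value.replace(char, "\\" + char)
    return value


def to_line_protocol(measurement, tags, score, timestamp_ns):
    """Format one sentiment point; score is written as an integer field."""
    tag_part = "".join(
        f",{_escape(key)}={_escape(str(value))}"
        for key, value in sorted(tags.items())
    )
    # The trailing "i" marks an integer field; the timestamp is in nanoseconds.
    return f"{measurement}{tag_part} score={int(score)}i {timestamp_ns}"
```

Because every point carries a timestamp, Grafana can chart the score over time, which is what enables the temporal sentiment analysis described earlier.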

Build a robust data pipeline with event-driven architecture without all the hassle

With a recipe that combines the power of AMQ Streams, OpenShift Serverless, and Quarkus (and, of course, the magic of AI/ML), businesses have an unprecedented opportunity to extract valuable real-time insights and make intelligent decisions from data flowing from various sources within the organization. Red Hat Application Foundations provides a comprehensive set of components that helps businesses build a robust event-driven architecture.

Coming up next

While this solution uses existing AI/ML models to moderate and analyze the product reviews, data scientists will want to train their own models. In a future article, we will take this solution a step further and deploy trained models on the Red Hat OpenShift Data Science platform and make them available as an API.

Red Hat OpenShift Data Science provides a fully supported environment to train, deploy, and monitor ML workloads, and to establish MLOps best practices on-premises and in the public clouds. This platform also enables data scientists to deploy the trained ML models to serve intelligent applications in production. Applications can send requests to the deployed model using its deployed API endpoint.

Try this solution pattern

This event-driven architecture solution (with deployment scripts, container images, and code) is available for you to try as a solution pattern. The solution pattern includes all the scripts to deploy the solution and a walk-through guide.

Solution patterns are fully coded and easily reproducible solutions to common use cases faced by organizations and can be used to inspire technical decision makers on how to achieve their goals with Red Hat's cloud-native application development and delivery platform.

Last updated: October 12, 2023