The ability to deploy and benchmark large language models (LLMs) in an air-gapped (disconnected) environment is critical for enterprises operating in highly regulated sectors. In this article, we walk through deploying the Red Hat AI Inference Server using vLLM and evaluating its performance with GuideLLM—all within a fully disconnected Red Hat OpenShift cluster.

We will use prebuilt container images, Persistent Volume Claims (PVCs) to mount models and tokenizers, and OpenShift-native Job resources to run benchmarks.

What is GuideLLM?

GuideLLM is an open source benchmarking tool designed to evaluate the performance of LLMs served through vLLM. It provides fine-grained metrics such as:

- Token throughput

- Latency (time-to-first-token, inter-token, request latency)

- Concurrency scaling

- Request-level diagnostics

GuideLLM uses the model’s own tokenizer to prepare evaluation prompts and supports running entirely in disconnected environments. The text corpus used to generate the requests is included in the GuideLLM image, so no additional datasets are required for benchmarking.

Why does GuideLLM need to use the model’s own tokenizer?

Different models use different tokenization schemes (e.g., byte-pair encoding, WordPiece, SentencePiece). These affect how a given input string is split into tokens and how long a sequence is in tokens versus characters. Metrics like "tokens per second" and "time to first token" depend directly on how many tokens are involved in a prompt or response. Using the model’s native tokenizer ensures that the prompt token count matches what the model actually receives and that the output token count reflects the true workload.
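As a small illustration of why the count matters, the sketch below computes tokens per second for a single 10-second response under two hypothetical token counts for the same text (500 and 400). The numbers are invented for the example, not measured. The point is that the same wall-clock time yields a different throughput figure depending on which tokenizer did the counting.

```shell
#!/usr/bin/env bash
# Illustrative only: the token count in the numerator changes the "tokens per second"
# figure even when the response and its wall-clock time are identical.

# tps TOKENS SECONDS -> tokens per second, one decimal place
tps() {
  awk -v t="$1" -v s="$2" 'BEGIN { printf "%.1f\n", t / s }'
}

# Hypothetical counts for the same 10-second response, as seen by two different tokenizers.
echo "model's own tokenizer: $(tps 500 10) tok/s"
echo "another tokenizer:     $(tps 400 10) tok/s"
```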

Architecture overview

This benchmark stack includes:

- OpenShift 4.14–4.18: Cluster running the GPU workloads

- Node Feature Discovery (NFD) and NVIDIA GPU Operator: To enable GPU scheduling

- Red Hat AI Inference Server (vLLM): Hosts and serves quantized LLMs

- GuideLLM: Deployed as a containerized job to evaluate inference performance

- Persistent volumes (PVCs):
  - Model weights (/mnt/models)
  - Tokenizer files (/mnt/tokenizer)
  - Benchmark results (/results)

Mirroring images for a disconnected OpenShift environment

In air-gapped OpenShift environments, public registries like quay.io and registry.redhat.io are not accessible at runtime. To ensure successful deployments, all required images must be mirrored into an internal registry that is accessible to the disconnected OpenShift cluster. Red Hat provides the oc-mirror tool to help generate a structured image set and transfer it across network zones.

This section outlines how to mirror the necessary images and configure OpenShift to trust and pull from your internal registry.

Prerequisites

- A reachable internal container registry, such as:
  - A local registry hosted inside OpenShift (e.g., registry.apps.<cluster-domain>)
  - A mirror registry set up per Red Hat’s disconnected installation docs

- oc-mirror and rsync installed on a connected machine

- Authentication credentials (e.g., a pull secret) for any source registries (like registry.redhat.io)

Identify required images

Here’s a list of images required for this disconnected deployment:

| Purpose | Source image |
|---|---|
| Red Hat AI Inference Server vLLM runtime | registry.redhat.io/rhaiis/vllm-cuda-rhel9:3.2.1-1756225581 |
| GuideLLM | quay.io/rh-aiservices-bu/guidellm:f1f8ca8 |
| UBI base for the temporary copy pods (any other minimal image can be used) | registry.access.redhat.com/ubi9/ubi:latest |

Create the ImageSet configuration

An ImageSet configuration is a YAML file used by the oc-mirror CLI tool to define which container images should be mirrored from public or external registries into a disconnected OpenShift environment.

Start by defining which images you want to mirror. For benchmarking, we need:

- GuideLLM container image

- Red Hat AI Inference Server image

```shell
cat << EOF > /mnt/local-images/imageset-config-custom.yaml
---
kind: ImageSetConfiguration
apiVersion: mirror.openshift.io/v1alpha2
storageConfig:
  local:
    path: ./
mirror:
  additionalImages:
  - name: quay.io/rh-aiservices-bu/guidellm:f1f8ca8
  - name: registry.redhat.io/rhaiis/vllm-cuda-rhel9:3.2.1-1756225581
  helm: {}
EOF
```

This YAML tells oc-mirror to collect the listed images and store them locally in the current directory.

Run oc-mirror on the internet-connected host

Change to the working directory and run:

```shell
cd /mnt/local-images
oc-mirror --config imageset-config-custom.yaml file:///mnt/local-images
```

This will:

- Download all required image layers

- Package them into tarballs and metadata directories (e.g., mirror_seq2_000000.tar)
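Because the tarballs cross a network boundary, it can be worth recording checksums on the connected host and verifying them after the transfer. The following is a minimal sketch using sha256sum from GNU coreutils. The SHA256SUMS file name and both function names are illustrative choices, not part of oc-mirror.

```shell
#!/usr/bin/env bash
# Sketch: record checksums of the oc-mirror tarballs before transfer and verify them after.
# Requires GNU coreutils sha256sum. File and function names are illustrative.

# record_checksums DIR -> writes DIR/SHA256SUMS covering every *.tar in DIR
record_checksums() {
  (cd "$1" && sha256sum -- *.tar > SHA256SUMS)
}

# verify_checksums DIR -> non-zero exit if any listed tarball is missing or altered
verify_checksums() {
  (cd "$1" && sha256sum --quiet -c SHA256SUMS)
}
```

Run record_checksums /mnt/local-images on the connected host. Because the rsync command copies the whole directory, SHA256SUMS travels with the tarballs, and verify_checksums /mnt/local-images can be run on the disconnected host before the images are loaded.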

Transfer mirror tarballs to the disconnected host

Use rsync or any file transfer method (USB, SCP, etc.) to move the mirrored content:

```shell
rsync -avP /mnt/local-images/ disconnected-server:/mnt/local-images/
```

Load the images into the internal registry

On the disconnected host:

```shell
cd /mnt/local-images
oc-mirror --from=/mnt/local-images/mirror_seq2_000000.tar docker://$(hostname):8443
```

This command unpacks the image layers and pushes them into the image registry running on the current node at port 8443.

Apply ImageContentSourcePolicy (ICSP)

To allow OpenShift to redirect image pulls to the internal mirror, create an ImageContentSourcePolicy (ICSP):

```shell
cat << EOF > /mnt/local-images/imageset-content-source.yaml
---
apiVersion: operator.openshift.io/v1alpha1
kind: ImageContentSourcePolicy
metadata:
  name: disconnected-mirror
spec:
  repositoryDigestMirrors:
  - mirrors:
    - registry-host:8443/rh-aiservices-bu/guidellm
    source: quay.io/rh-aiservices-bu/guidellm
  - mirrors:
    - registry-host:8443/rhaiis/vllm-cuda-rhel9
    source: registry.redhat.io/rhaiis/vllm-cuda-rhel9
EOF
oc apply -f /mnt/local-images/imageset-content-source.yaml
```

Warning: Replace registry-host in the mirror entries with the address or DNS name of your internal registry.

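This policy ensures that when a pod pulls quay.io/rh-aiservices-bu/guidellm by digest, OpenShift pulls it from your local mirror instead. Note that an ImageContentSourcePolicy only applies to pulls by digest, so images referenced by tag, as in the manifests in this article, are not redirected. Either reference the images by digest or, on OpenShift 4.13 and later, create an ImageTagMirrorSet for tag-based pulls (on those versions, ImageDigestMirrorSet also supersedes ImageContentSourcePolicy).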
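The redirect can be pictured as a prefix rewrite. The sketch below is a simplified model of that behavior, not how OpenShift implements it. It assumes each mirror path matches its source path under registry-host:8443 (the placeholder from the policy above) and, like an ImageContentSourcePolicy, rewrites only references pulled by digest, leaving tag references unchanged.

```shell
#!/usr/bin/env bash
# Simplified model of a digest mirror: "source=mirror" pairs, matched on the exact repository.
# registry-host:8443 is a placeholder; replace it with your internal registry.
MIRRORS=(
  "quay.io/rh-aiservices-bu/guidellm=registry-host:8443/rh-aiservices-bu/guidellm"
  "registry.redhat.io/rhaiis/vllm-cuda-rhel9=registry-host:8443/rhaiis/vllm-cuda-rhel9"
)

# mirror_ref IMAGE -> mirrored reference for digest pulls; any other reference is returned unchanged
mirror_ref() {
  local ref="$1" entry src dst
  for entry in "${MIRRORS[@]}"; do
    src="${entry%%=*}"
    dst="${entry#*=}"
    case "$ref" in
      # Only "<source>@sha256:..." references are rewritten, mirroring ICSP's digest-only scope
      "$src"@sha256:*) printf '%s\n' "${dst}${ref#"$src"}"; return 0 ;;
    esac
  done
  printf '%s\n' "$ref"
}
```

For example, mirror_ref registry.redhat.io/rhaiis/vllm-cuda-rhel9:3.2.1-1756225581 comes back unchanged, which is the tag-reference case to watch for.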

Now that the images are available in the disconnected environment, we can proceed to setting up persistent volumes for the model weights and tokenizer, and start running benchmarks.

Copying model weights into a disconnected OpenShift environment

In disconnected environments, model weights and tokenizer files must first be transferred from a connected system to a machine inside the disconnected environment. From there, they can be loaded into OpenShift using persistent volume claims (PVCs) and the oc CLI.
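Before loading anything into the cluster, it can help to check that the transferred directory is complete, because a missing file is easier to fix while the connected side is still at hand. This sketch assumes the common Hugging Face layout (config.json, tokenizer.json and *.safetensors weight files). The function name is hypothetical, and the file list should be adjusted for your model.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check a locally transferred model directory before copying it into PVCs.
# Assumed layout: config.json, tokenizer.json and one or more *.safetensors files.

# check_model_dir DIR -> prints each missing item; returns non-zero if anything is missing
check_model_dir() {
  local dir="$1" missing=0 f
  for f in config.json tokenizer.json; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $f"
      missing=1
    fi
  done
  # compgen -G succeeds only if the glob matches at least one file
  if ! compgen -G "$dir/*.safetensors" > /dev/null; then
    echo "missing: *.safetensors weight files"
    missing=1
  fi
  return "$missing"
}
```

For example, run check_model_dir ./local-models/llama before the oc rsync and oc cp steps.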

Persistent volume setup

This benchmarking stack requires the following PVCs:

- The model weights directory for vLLM serving.

- The tokenizer JSON/config files for generating test sequences.

- A volume to store benchmark results.

1. Model weights PVC

The model weights must be made available to the Red Hat AI Inference Server (vLLM) pod. Create a PVC large enough to store the weights, and then copy the weights to the PVC using a temporary model copy pod.
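To pick a size for the PVC, a rough estimate of the weight footprint is parameters × bits per weight ÷ 8. The sketch below applies that formula to a model of roughly 8 billion parameters. It is an approximation that ignores tensors kept at higher precision and file metadata, so treat the result as a lower bound rather than an exact size.

```shell
#!/usr/bin/env bash
# Back-of-the-envelope weight size: params * bits / 8 bytes, reported in MiB (rounded down).
# Assumption: real w4a16 checkpoints keep some tensors at 16-bit, so this underestimates.

# weights_mib PARAMS BITS -> approximate weight size in MiB
weights_mib() {
  echo $(( $1 * $2 / 8 / 1024 / 1024 ))
}

weights_mib 8000000000 4    # ~8B parameters at 4 bits; prints 3814
weights_mib 8000000000 16   # same model at 16 bits, for comparison; prints 15258
```

At about 3.7 GiB for the 4-bit weights, the 50Gi request leaves generous headroom.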

```shell
oc create -f - <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: llama-31-8b-instruct-w4a16
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 50Gi
EOF
```

Once the PVC is created, we can create a pod that temporarily mounts it so that we can copy data to it.

Note: In the following example, we're using a ubi9 image to create this temporary pod. Other images can be used if this image is not available in the disconnected environment.

```shell
oc create -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: model-copy-pod
spec:
  containers:
  - name: model-copy
    image: registry.access.redhat.com/ubi9/ubi:latest
    command: ["sleep", "3600"]
    volumeMounts:
    - name: models
      mountPath: /mnt/models
  volumes:
  - name: models
    persistentVolumeClaim:
      claimName: llama-31-8b-instruct-w4a16
EOF
```

Once the pod is ready, copy the model from its location on the disconnected server using the temporary pod, and then delete the pod.

```shell
# Wait for the copy pod to be ready before copying into it
oc wait --for=condition=Ready pod/model-copy-pod --timeout=120s
oc rsync ./local-models/llama/ model-copy-pod:/mnt/models/
oc delete pod model-copy-pod
```

2. Tokenizer PVC

GuideLLM requires access to the tokenizer used by the model. We will create a PVC to store the tokenizer and, as in the previous step, copy the files to the PVC using a temporary pod.

```shell
oc create -f - <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: llama-31-8b-instruct-w4a16-tokenizer
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF
```

Create a temporary pod to mount this PVC:

```shell
oc create -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: tokenizer-copy-pod
spec:
  containers:
  - name: tokenizer-copy
    image: registry.access.redhat.com/ubi9/ubi:latest
    command: ["sleep", "3600"]
    volumeMounts:
    - name: tokenizer
      mountPath: /mnt/tokenizer
  volumes:
  - name: tokenizer
    persistentVolumeClaim:
      claimName: llama-31-8b-instruct-w4a16-tokenizer
EOF
```

Once the pod is ready, copy the tokenizer and config to the PVC using the temporary pod, and then delete the pod.

```shell
# Wait for the copy pod to be ready before copying into it
oc wait --for=condition=Ready pod/tokenizer-copy-pod --timeout=120s
oc cp ./local-models/llama/tokenizer.json tokenizer-copy-pod:/mnt/tokenizer/
oc cp ./local-models/llama/config.json tokenizer-copy-pod:/mnt/tokenizer/
oc delete pod tokenizer-copy-pod
```

3. Benchmark results PVC

It's also recommended to store the benchmarking results in a PVC.

```shell
oc create -f - <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: benchmark-results
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
EOF
```

Deploy Red Hat AI Inference Server (vLLM)

The Red Hat AI Inference Server enables model inference using the vLLM runtime and supports air-gapped environments out of the box. For this setup, you will deploy Red Hat AI Inference Server as a Deployment resource with the model weights persistent volume attached.

This deployment uses the image registry.redhat.io/rhaiis/vllm-cuda-rhel9:3.2.1-1756225581 and is configured for the meta-llama-3.1-8B-instruct-quantized.w4a16 model.

Example deployment manifest

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: llama
  labels:
    app: llama
spec:
  replicas: 1
  selector:
    matchLabels:
      app: llama
  template:
    metadata:
      labels:
        app: llama
    spec:
      containers:
      - name: llama
        image: 'registry.redhat.io/rhaiis/vllm-cuda-rhel9:3.2.1-1756225581'
        imagePullPolicy: IfNotPresent
        command:
        - python
        - '-m'
        - vllm.entrypoints.openai.api_server
        args:
        - '--port=8000'
        - '--model=/mnt/models'
        - '--served-model-name=meta-llama-3.1-8B-instruct-quantized.w4a16'
        - '--tensor-parallel-size=1'
        - '--max-model-len=8096'
        resources:
          limits:
            nvidia.com/gpu: '1'
          requests:
            nvidia.com/gpu: '1'
        volumeMounts:
        - name: cache-volume
          mountPath: /mnt/models
        - name: shm
          mountPath: /dev/shm
      tolerations:
      - key: nvidia.com/gpu
        operator: Exists
      volumes:
      - name: cache-volume
        persistentVolumeClaim:
          claimName: llama-31-8b-instruct-w4a16
      - name: shm
        emptyDir:
          medium: Memory
          sizeLimit: 2Gi
      restartPolicy: Always
```

Notes:

- The model weights PVC (llama-31-8b-instruct-w4a16) is mounted at /mnt/models.

- --tensor-parallel-size is set to 1 for single-GPU workloads. Adjust it, together with the GPU request, for multi-GPU serving.

- --max-model-len=8096 caps the prompt plus output tokens of each request. Longer benchmark configurations need a higher value (Llama 3.1 supports contexts of up to 128K tokens), provided the GPU has enough memory for the larger KV cache.

- The nvidia.com/gpu resource request places the pod on a node with an available NVIDIA GPU, and the toleration allows it to be scheduled onto GPU nodes that carry an nvidia.com/gpu taint.

Deploying

Save the manifest as rhaiis-deployment.yaml and run:

```shell
oc apply -f rhaiis-deployment.yaml
```

Verify the pod is running and exposing port 8000:

```shell
oc get pods
oc logs <pod-name> -f
```

Expose the Red Hat AI Inference Server deployment on port 8000

After deploying Red Hat AI Inference Server (vLLM), expose the pod using a Kubernetes Service to enable communication, either internally (e.g., for benchmarking tools like GuideLLM) or externally (through an ingress or route, if desired).

In this guide, we expose Red Hat AI Inference Server on port 8000 using a Kubernetes Service, which makes it discoverable to other pods within the OpenShift cluster.

Create the service

Apply the following YAML to expose the deployment via a ClusterIP service named llama-31-8b-instruct-w4a16-svc.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: llama-31-8b-instruct-w4a16-svc
  labels:
    app: llama
spec:
  selector:
    app: llama
  ports:
  - protocol: TCP
    port: 8000
    targetPort: 8000
  type: ClusterIP
```

Save the above manifest as rhaiis-service.yaml and apply it:

```shell
oc apply -f rhaiis-service.yaml
```

This service:

- Routes traffic to pods matching the label app: llama (the label set in the Deployment's pod template; with any other selector the Service has no endpoints)

- Exposes TCP port 8000 (the same as the container port used by the vLLM server)

- Is only accessible within the cluster (type ClusterIP)

With this deployment, the model is now being served over HTTP from port 8000 inside the cluster and is ready for benchmarking via GuideLLM.
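To check the endpoint from inside the cluster, the sketch below builds the Service's fully qualified DNS name and queries /v1/models, the model-listing route of vLLM's OpenAI-compatible API. It assumes the default cluster.local cluster domain. The namespace argument (my-namespace in the example) is a hypothetical placeholder for wherever you deployed the Service.

```shell
#!/usr/bin/env bash
# Sketch: build the in-cluster URL of the Service and optionally smoke-test it.
# Assumes the default cluster.local domain; within the same namespace the short
# name llama-31-8b-instruct-w4a16-svc also resolves.

# svc_url SERVICE NAMESPACE PORT -> fully qualified in-cluster URL
svc_url() {
  printf 'http://%s.%s.svc.cluster.local:%s\n' "$1" "$2" "$3"
}

# smoke_test URL -> lists served models via the OpenAI-compatible /v1/models route.
# Run from a pod inside the cluster, because the Service is ClusterIP only.
smoke_test() {
  curl -sf "$1/v1/models"
}
```

For example, from a pod in the cluster: smoke_test "$(svc_url llama-31-8b-instruct-w4a16-svc my-namespace 8000)". The response should include meta-llama-3.1-8B-instruct-quantized.w4a16, the name set with --served-model-name.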

Run the benchmark job

To run the GuideLLM benchmarks, we deploy an OpenShift Job, which completes when the benchmark finishes. In practice, you will run GuideLLM several times with different configurations (for example, different sequence lengths) to benchmark different loads.

The args section in the GuideLLM benchmark job defines how the test is run. Here's what each key setting does:

- --target: The URL of the vLLM model server (Red Hat AI Inference Server endpoint) to benchmark.
- --tokenizer-path: Path to the model’s tokenizer files, ensuring accurate prompt tokenization.
- --sequence-length: Length of the input prompt in tokens (e.g., 512).
- --max-requests: Total number of inference requests to send during the test.
- --request-concurrency: Number of parallel requests sent at once to simulate load.
- --output: Location to store the results JSON file (usually backed by a PVC).

These parameters allow you to control test size, load intensity, and output location, providing a flexible framework to benchmark models consistently in disconnected environments.

The following table shows several example configurations demonstrating how different prompt and output sizes, as well as concurrency levels, can be used to mimic real-world workloads.

| Prompt tokens | Output tokens | Max duration (s) | Concurrency levels | Use case example |
|---|---|---|---|---|
| 2,000 | 500 | 360 | 1, 5, 10, 25, 50, 100 | General-purpose LLM tasks, chat-like prompts |
| 8,000 | 500 | 360 | 1, 5, 10, 25, 50, 100 | Extended context (e.g., multi-paragraph summarization) |
| 10,000 | 500 | 360 | 1, 5, 10, 25, 50, 100 | Deep document prompts or structured context input |
| 20,000 | 3,000 | 600 | 1, 5, 10, 25, 50, 100 | Long-form generation, code synthesis, legal document Q&A |
| 20,000 | 5,000 | 600 | 1, 5, 10, 25, 50, 100 | Full document completion or summarization of large reports |
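Each row also has to fit within the server's context window: vLLM needs room for the prompt plus the generated tokens, up to --max-model-len. The sketch below checks the table's rows against the 8096 value used in the Deployment manifest. It deliberately ignores small overheads such as chat-template tokens.

```shell
#!/usr/bin/env bash
# Sketch: check which benchmark configurations fit within the server's --max-model-len.
# 8096 is the value from the Deployment manifest in this article.
MAX_MODEL_LEN=8096

# fits PROMPT OUTPUT -> "ok" or "too long (needs N)" for one configuration
fits() {
  local need=$(( $1 + $2 ))
  if [ "$need" -le "$MAX_MODEL_LEN" ]; then
    echo "ok"
  else
    echo "too long (needs $need)"
  fi
}

# Prompt/output token pairs from the table above
for row in "2000 500" "8000 500" "10000 500" "20000 3000" "20000 5000"; do
  read -r prompt output <<< "$row"
  printf '%5s prompt + %4s output: %s\n' "$prompt" "$output" "$(fits "$prompt" "$output")"
done
```

As written, only the first row fits; the largest row needs a --max-model-len of at least 25,000.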

Here's an example job:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: guidellm-benchmark
spec:
  template:
    spec:
      containers:
      - name: guidellm
        image: quay.io/rh-aiservices-bu/guidellm:f1f8ca8
        args:
        - --target=http://llama-31-8b-instruct-w4a16-svc:8000
        - --tokenizer-path=/mnt/tokenizer
        - --sequence-length=512
        - --max-requests=100
        - --request-concurrency=8
        - --output=/results/output.json
        volumeMounts:
        - name: tokenizer
          mountPath: /mnt/tokenizer
        - name: results
          mountPath: /results
      restartPolicy: Never
      volumes:
      - name: tokenizer
        persistentVolumeClaim:
          claimName: llama-31-8b-instruct-w4a16-tokenizer
      - name: results
        persistentVolumeClaim:
          claimName: benchmark-results
```

Save the manifest as guidellm-job.yaml and apply it:

```shell
oc apply -f guidellm-job.yaml
```

Once the Job is created, a pod starts and runs the GuideLLM benchmark until completion. When the Job completes, an overview of the results is displayed in the pod logs.
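Because each configuration needs its own Job, a small generator saves editing YAML by hand. The sketch below is an illustration, not a GuideLLM feature. It renders the example Job with a given sequence length and concurrency, pointing --target at the llama-31-8b-instruct-w4a16-svc Service, and runs one Job at a time so that parallel runs do not compete for the same server and skew each other's results. It only uses the arguments shown in the example Job; the table's output-token and duration columns are not mapped because the corresponding flags are not shown here. The Job names, output file names and 30-minute wait timeout are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: render one GuideLLM Job per configuration, reusing only the example Job's arguments.

# render_job SEQ_LEN CONCURRENCY -> prints a Job manifest to stdout
render_job() {
  local seq="$1" conc="$2"
  cat <<EOF
apiVersion: batch/v1
kind: Job
metadata:
  name: guidellm-benchmark-s${seq}-c${conc}
spec:
  template:
    spec:
      containers:
      - name: guidellm
        image: quay.io/rh-aiservices-bu/guidellm:f1f8ca8
        args:
        - --target=http://llama-31-8b-instruct-w4a16-svc:8000
        - --tokenizer-path=/mnt/tokenizer
        - --sequence-length=${seq}
        - --max-requests=100
        - --request-concurrency=${conc}
        - --output=/results/output-s${seq}-c${conc}.json
        volumeMounts:
        - name: tokenizer
          mountPath: /mnt/tokenizer
        - name: results
          mountPath: /results
      restartPolicy: Never
      volumes:
      - name: tokenizer
        persistentVolumeClaim:
          claimName: llama-31-8b-instruct-w4a16-tokenizer
      - name: results
        persistentVolumeClaim:
          claimName: benchmark-results
EOF
}

# run_sweep SEQ_LEN -> applies one Job per concurrency level from the table and waits
# for each to complete before starting the next (requires a logged-in oc session).
run_sweep() {
  local seq="$1" c
  for c in 1 5 10 25 50 100; do
    render_job "$seq" "$c" | oc apply -f -
    oc wait --for=condition=complete "job/guidellm-benchmark-s${seq}-c${c}" --timeout=30m
  done
}
```

For example, run_sweep 2000 runs the table's first row across all six concurrency levels and writes each result to its own file on the benchmark-results PVC.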

Viewing the results

The results will be saved as a structured JSON file in the /results mount. Example key metrics:

- tokens_per_second
- request_latency
- time_to_first_token_ms
- inter_token_latency_ms
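These latency metrics are related. Under a common definition (an assumption here; GuideLLM's exact formulas may differ), the mean inter-token latency of a request is the time after the first token divided by the remaining output tokens. The sketch below computes it for a hypothetical request.

```shell
#!/usr/bin/env bash
# Sketch: mean inter-token latency = (request latency - time to first token) / (output tokens - 1).
# This is a common definition, not necessarily GuideLLM's exact formula.

# itl_ms REQUEST_LATENCY_MS TTFT_MS OUTPUT_TOKENS -> mean inter-token latency in ms
itl_ms() {
  awk -v lat="$1" -v ttft="$2" -v n="$3" 'BEGIN {
    if (n < 2) { print "n/a"; exit }   # undefined with fewer than two output tokens
    printf "%.2f\n", (lat - ttft) / (n - 1)
  }'
}

# Hypothetical request: 10.5 s end to end, 500 ms to first token, 501 output tokens.
itl_ms 10500 500 501   # prints 20.00
```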

Post-run result analysis

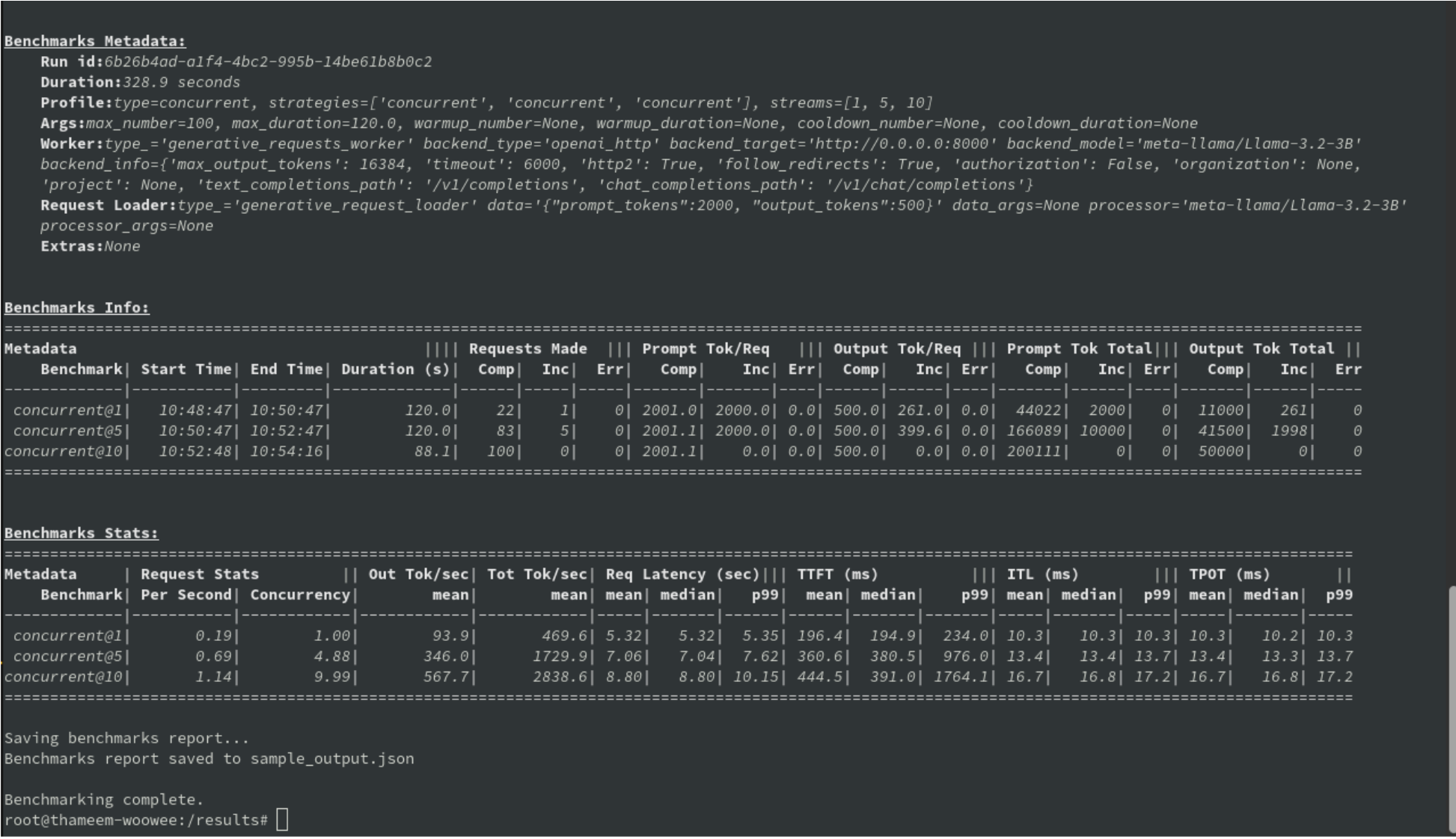

The terminal output is shown in Figure 1.

The GuideLLM output JSON structure is as follows:

- benchmarks: A list of all the individual concurrencies and test types that have been run. Each benchmark entry contains:
  - args: Full test information
  - duration
  - end_time
  - extras
  - metrics: Refer to the values for successful requests only.
    - inter_token_latency_ms
    - output_token_count
    - output_tokens_per_second
    - prompt_token_count
    - request_concurrency
    - request_latency
    - requests_per_second
    - time_per_output_token_ms
    - time_to_first_token_ms
    - tokens_per_second
  - request_totals
  - run_stats: Includes run-level stats
  - requests: Contains the individual requests, responses, and metrics for each request.

Conclusion

Running LLM serving and benchmarking in a fully disconnected cluster doesn’t have to be hard. With vLLM and GuideLLM, the workflow is simple and OpenShift-native:

- Serve fast with vLLM, no internet required. Point vLLM at a PVC with your model weights, deploy the standard container image, and expose port 8000. Swapping models or scaling GPUs is just a manifest tweak away.

- Benchmark immediately with GuideLLM. GuideLLM ships its own corpus and uses the model’s native tokenizer, so you get accurate TPS/latency metrics without pulling external datasets or tools.

- Mirror once, reuse everywhere. Using oc-mirror, the few required images are mirrored into your internal registry. After that, every deploy and benchmark runs repeatably with no outbound calls.

- All Kubernetes primitives. PVCs, Jobs, and Services keep operations familiar, scriptable, and auditable for regulated environments.

vLLM makes serving LLMs in a disconnected environment straightforward, and GuideLLM makes offline benchmarking easy. Together they provide a clean, reproducible path from model deployment to performance insights—entirely within an air-gapped OpenShift environment.