Distributed inference is changing how large language models (LLMs) are deployed, making it possible to run them efficiently across diverse and scalable infrastructure. This article explains how distributed inference evolved, the open source technologies that enable it, and how the llm-d project is shaping the next generation of intelligent, cluster-wide model serving.

From distributed computing to distributed inference

Before diving into distributed inference, you first need to understand distributed computing, the foundation on which these systems are built.

In 2025, when most developers talk about distributed computing, they are referring to Kubernetes. For Red Hat, that means Red Hat OpenShift (see Figure 1), an enterprise-grade Kubernetes platform designed for hybrid cloud environments.

Historically, web services were hosted on single servers. If the server went down, the service went down with it. Kubernetes changed that model by enabling workloads to run across multiple machines, allowing for load balancing, failover, and efficient resource use. Instead of one application instance tied to a single point of failure, multiple instances can run across a cluster.

This approach extends beyond compute. Storage, networking, and other layers can all be distributed. OpenShift builds on top of these layers to offer a complete, production-ready hybrid cloud foundation.

OpenShift and the open hybrid cloud

OpenShift provides a secure, modular platform for distributed workloads. On top of Kubernetes orchestration, it adds enterprise-grade features like authentication, role-based access control (RBAC), and a security-focused software supply chain that verifies and signs containerized applications and models running within the cluster. These capabilities help ensure that only trusted workloads execute in production environments.

Above that foundation sits OpenShift AI, which focuses on enabling model training, serving, and management. For generative AI workloads, it integrates vLLM, an open source engine optimized for high-performance inference. To reach true scalability, the community needed a way to run inference across multiple nodes and heterogeneous compute environments. That's where llm-d enters the picture.

Introducing llm-d

llm-d is an open source project dedicated to distributed LLM inference. It was created from the realization that traditional inference frameworks treat models as isolated black boxes bound to a single node or GPU set. The vLLM community, in collaboration with organizations, recognized that scaling inference would require a new approach. The goal was to integrate with existing tools while adding new mechanisms for distributed scheduling and routing.

The result is llm-d, a modular system designed to orchestrate and optimize LLM inference across clusters.

The challenge of LLM inference

When deployed in Kubernetes or OpenShift, LLMs often run as monolithic pods. Inside each pod, multiple layers of the model execute together on one or a few GPUs. While this setup works for smaller deployments, it limits flexibility and observability. Engineers cannot easily assign specific model components to different compute nodes or analyze performance within individual layers.

Another issue is how we measure performance. Traditional application metrics such as CPU utilization, memory consumption, or HTTP request counts do not fully capture LLM behavior. As inference workloads scale, these conventional approaches become inadequate.

Routing limitations in today's inference systems

Most current inference systems rely on generic routing strategies such as round robin or sticky sessions, both of which were designed for web traffic, not LLMs. These strategies do not account for prompt structure, token count, queue depth, or GPU load. They also fail to reuse cached computations efficiently.

This stateless approach means that requests are often routed randomly or evenly, ignoring opportunities to reuse key-value (KV) cache entries that a replica has already computed or to prioritize latency-sensitive queries. The result is wasted compute and longer response times.

How llm-d solves these problems

llm-d is organized around three core areas that define how distributed inference is applied in production. The first is disaggregated inference, which separates the prefill and decode stages to make model execution more efficient. The second is smarter scheduling with KV cache and prefix cache-aware routing, which improves performance by reusing computations and routing requests intelligently across nodes. The third is support for Mixture of Experts (MoE) models such as DeepSeek-R1 and GPT-OSS, whose specialized experts can be distributed across multiple nodes to improve scalability and resource utilization.

These three areas form what are known as the well-lit paths of llm-d. Each path represents a practical and progressive way to implement distributed inference within a Kubernetes-based system.

Well-lit path 1: Disaggregated inference

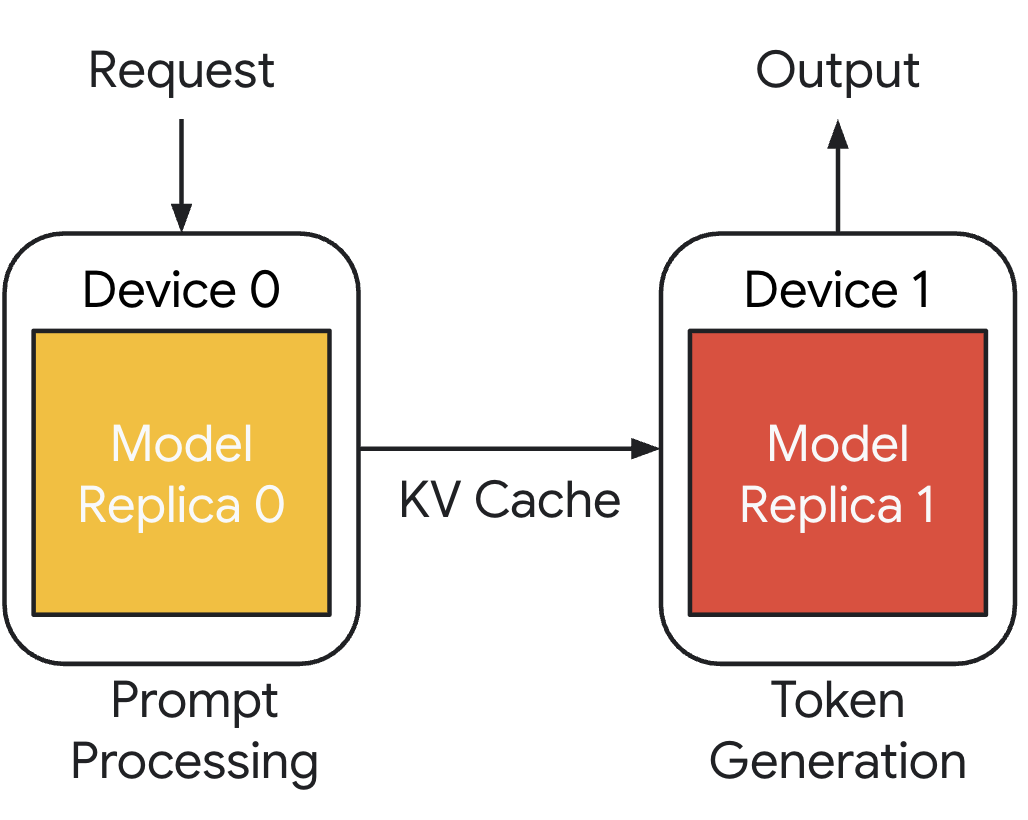

The first well-lit path focuses on how to separate model execution into its key components. One of the most practical aspects of this approach is the ability to work efficiently even when GPU resources are limited. If GPU availability is a challenge, llm-d allows the prefill phase to run on CPUs while the decode phase runs on GPUs. The decode phase is typically more memory intensive and benefits from GPU acceleration, while prefill can often be handled effectively by CPU resources. This flexibility allows organizations to serve larger models on existing hardware that would otherwise be limited by GPU capacity.

Figure 2 shows what disaggregated prefill looks like from end to end. A request first enters the prefill process, which processes the prompt tokens and creates the KV cache. That cache is then shared with the decode process, which generates the output tokens that appear to the user. This workflow can run within a single pod or across multiple pods, depending on available resources.
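To make the handoff concrete, here is a minimal sketch of the prefill and decode split in Python. It is a toy model, not llm-d or vLLM code: the `KVCache` class, the `prefill`, `decode`, and `serve` functions, and the integer "attention" arithmetic are all assumptions made for illustration. The point is only that prefill runs once over the prompt and produces a cache, and that decode needs nothing but that cache to continue.

```python
from dataclasses import dataclass, field


@dataclass
class KVCache:
    """Toy stand-in for a per-request KV cache: one (key, value) entry per token."""
    entries: list[tuple[int, int]] = field(default_factory=list)

    def append(self, token: int) -> None:
        # A real model stores attention key/value tensors for every layer;
        # here each token simply contributes a pair of integers.
        self.entries.append((token, token * 2))


def prefill(prompt_tokens: list[int]) -> KVCache:
    """Prefill worker: process the whole prompt once and build the KV cache."""
    cache = KVCache()
    for token in prompt_tokens:
        cache.append(token)
    return cache


def decode(cache: KVCache, max_new_tokens: int, vocab_size: int = 100) -> list[int]:
    """Decode worker: generate tokens one at a time, reusing and extending the cache."""
    output = []
    for _ in range(max_new_tokens):
        # Toy "attention": the next token depends on every cached entry.
        next_token = sum(key + value for key, value in cache.entries) % vocab_size
        output.append(next_token)
        cache.append(next_token)
    return output


def serve(prompt_tokens: list[int], max_new_tokens: int) -> list[int]:
    # In a disaggregated deployment these two calls could run in different
    # pods, with the cache transferred between them.
    cache = prefill(prompt_tokens)
    return decode(cache, max_new_tokens)
```

Because `decode` takes the cache as its only model state, the two functions can be scheduled, scaled, and placed on hardware independently, which is the property the disaggregated design relies on.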

This disaggregated design improves performance and provides a foundation for further optimization through scheduling and routing, which leads directly into the next well-lit path.

Well-lit path 2: Smarter scheduling and prefix cache-aware routing

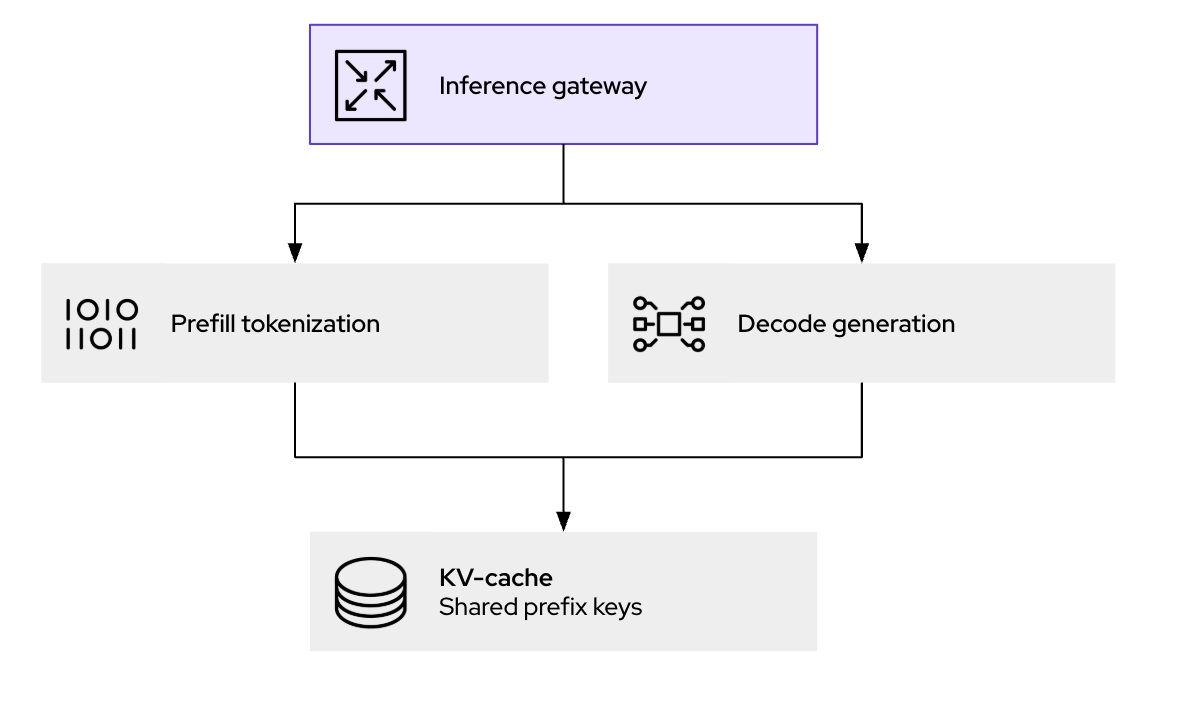

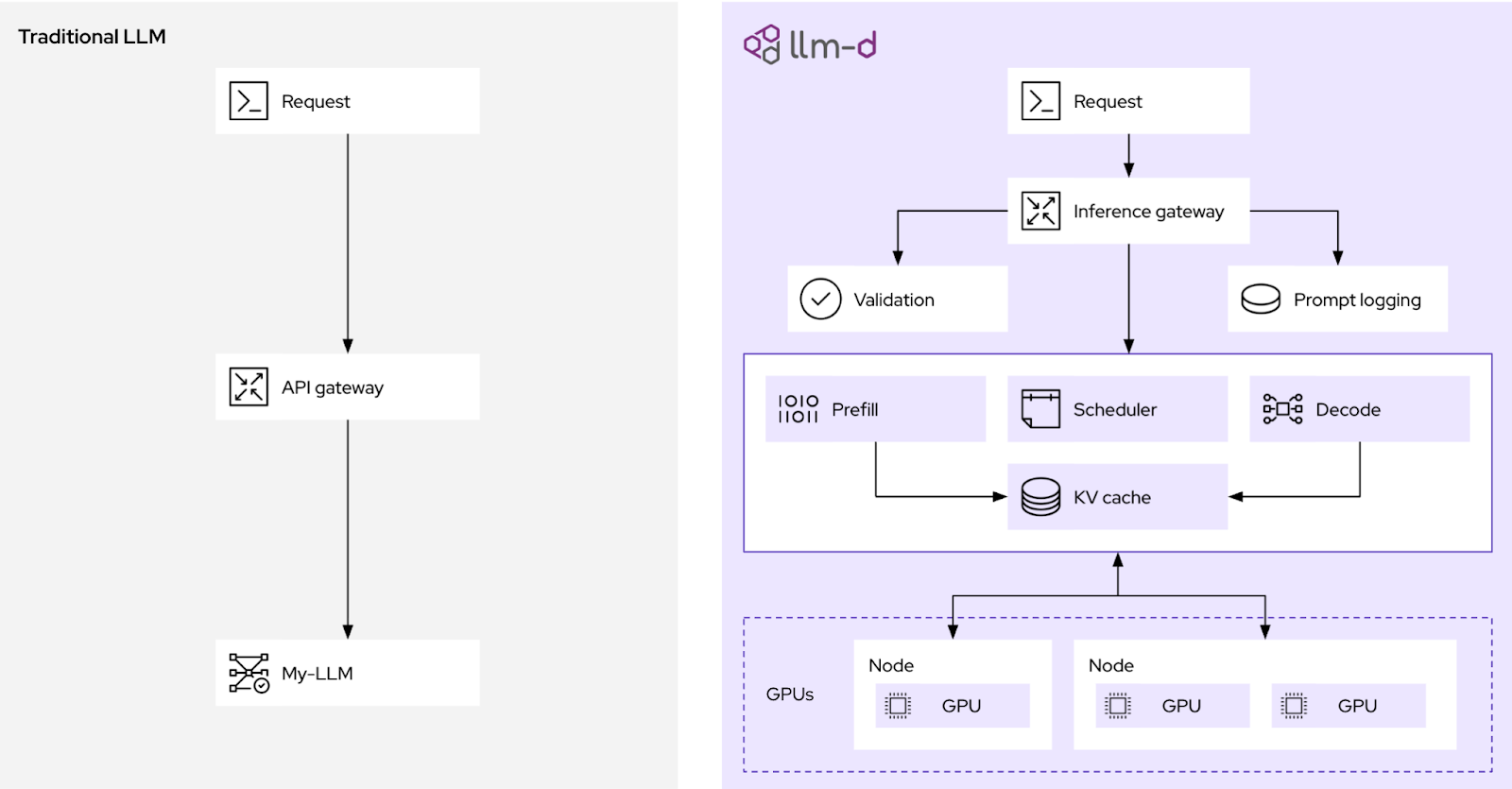

The second path focuses on intelligent routing using an inference gateway (Figure 3). Traditional API gateways make routing decisions based on general system metrics such as CPU or memory use. The inference gateway takes a different approach by becoming prompt-aware, which means it understands the structure and content of incoming prompts and can route them based on cache context and prior requests.

For example, consider a user who requests a summary of a quarterly financial report. If that same document or a similar prompt has already been processed, the inference gateway detects the match and routes the request to the instance holding the relevant cache. This reduces redundant computation, improves time to first token, and lowers overall GPU usage.
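The routing decision in this example can be sketched as follows. This is an illustrative approximation, not the inference gateway's actual implementation: the `Replica` class, the `route` function, the `BLOCK_SIZE` value, and the tie-breaking rule are all hypothetical. The sketch hashes the prompt in fixed-size blocks, where each hash covers everything before it, and sends the request to the replica holding the longest matching prefix.

```python
import hashlib

BLOCK_SIZE = 4  # tokens per cache block (illustrative value)


def block_hashes(tokens: list[int]) -> list[str]:
    """Hash each full block together with all blocks before it (a prefix chain)."""
    hashes = []
    running = hashlib.sha256()
    full_length = len(tokens) - len(tokens) % BLOCK_SIZE
    for start in range(0, full_length, BLOCK_SIZE):
        running.update(repr(tokens[start:start + BLOCK_SIZE]).encode())
        hashes.append(running.hexdigest())
    return hashes


class Replica:
    """Hypothetical model server tracked by the router."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.cached_blocks: set[str] = set()
        self.queue_depth = 0

    def prefix_hit_blocks(self, hashes: list[str]) -> int:
        """Count how many leading blocks of the prompt are already cached."""
        hits = 0
        for block_hash in hashes:
            if block_hash not in self.cached_blocks:
                break
            hits += 1
        return hits


def route(replicas: list[Replica], tokens: list[int]) -> Replica:
    """Pick the replica with the longest cached prefix; break ties by shortest queue."""
    hashes = block_hashes(tokens)
    best = max(replicas, key=lambda r: (r.prefix_hit_blocks(hashes), -r.queue_depth))
    best.cached_blocks.update(hashes)  # the chosen replica now holds these blocks
    best.queue_depth += 1
    return best
```

In this sketch, two requests that start with the same report tokens land on the same replica, while an unrelated prompt falls through to whichever replica has the shortest queue. A production scheduler would also need to account for cache eviction and requests completing, which are omitted here.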

Beyond performance, this gateway layer also supports capabilities such as auditing, logging, and guardrails that can be applied consistently across deployments.

Figure 4 compares traditional load balancing on the left with llm-d's inference gateway on the right. In the traditional setup, requests are distributed using round robin or sticky session routing with no awareness of cache state or prompt context. The inference gateway validates each prompt, checks for cache hits, and routes to the optimal node. The result is higher cache reuse, lower latency, and more efficient scheduling across the cluster.
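A small simulation can illustrate why the two strategies differ in cache reuse. The following sketch is a simplification under stated assumptions: each request is reduced to a single prefix label, replica caches never evict, and the function names (`simulate`, `make_round_robin`, `cache_aware`) are invented for this example rather than taken from llm-d.

```python
from itertools import cycle


def simulate(requests, num_replicas, pick):
    """Count how many requests land on a replica that already saw their prefix."""
    caches = [set() for _ in range(num_replicas)]
    hits = 0
    for prefix in requests:
        index = pick(prefix, caches)
        if prefix in caches[index]:
            hits += 1
        caches[index].add(prefix)
    return hits


def make_round_robin(num_replicas):
    """Build a picker that cycles through replicas and ignores cache contents."""
    order = cycle(range(num_replicas))
    return lambda prefix, caches: next(order)


def cache_aware(prefix, caches):
    """Prefer a replica that already holds the prefix."""
    for index, cache in enumerate(caches):
        if prefix in cache:
            return index
    # No replica has the prefix yet: pick the one holding the fewest prefixes.
    return min(range(len(caches)), key=lambda i: len(caches[i]))


requests = ["report", "contract", "report", "manual", "report", "contract"]
round_robin_hits = simulate(requests, 3, make_round_robin(3))
cache_aware_hits = simulate(requests, 3, cache_aware)
```

With this request stream, round robin keeps sending repeated prefixes to replicas that have not seen them, while the cache-aware picker returns each repeat to the replica that already processed it. Real traffic and finite caches will change the numbers, but the mechanism is the same.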

While this example shows one method of intelligent routing, llm-d is designed to support additional smart scheduling strategies as new techniques evolve.

Well-lit path 3: Mixture of Experts (MoE)

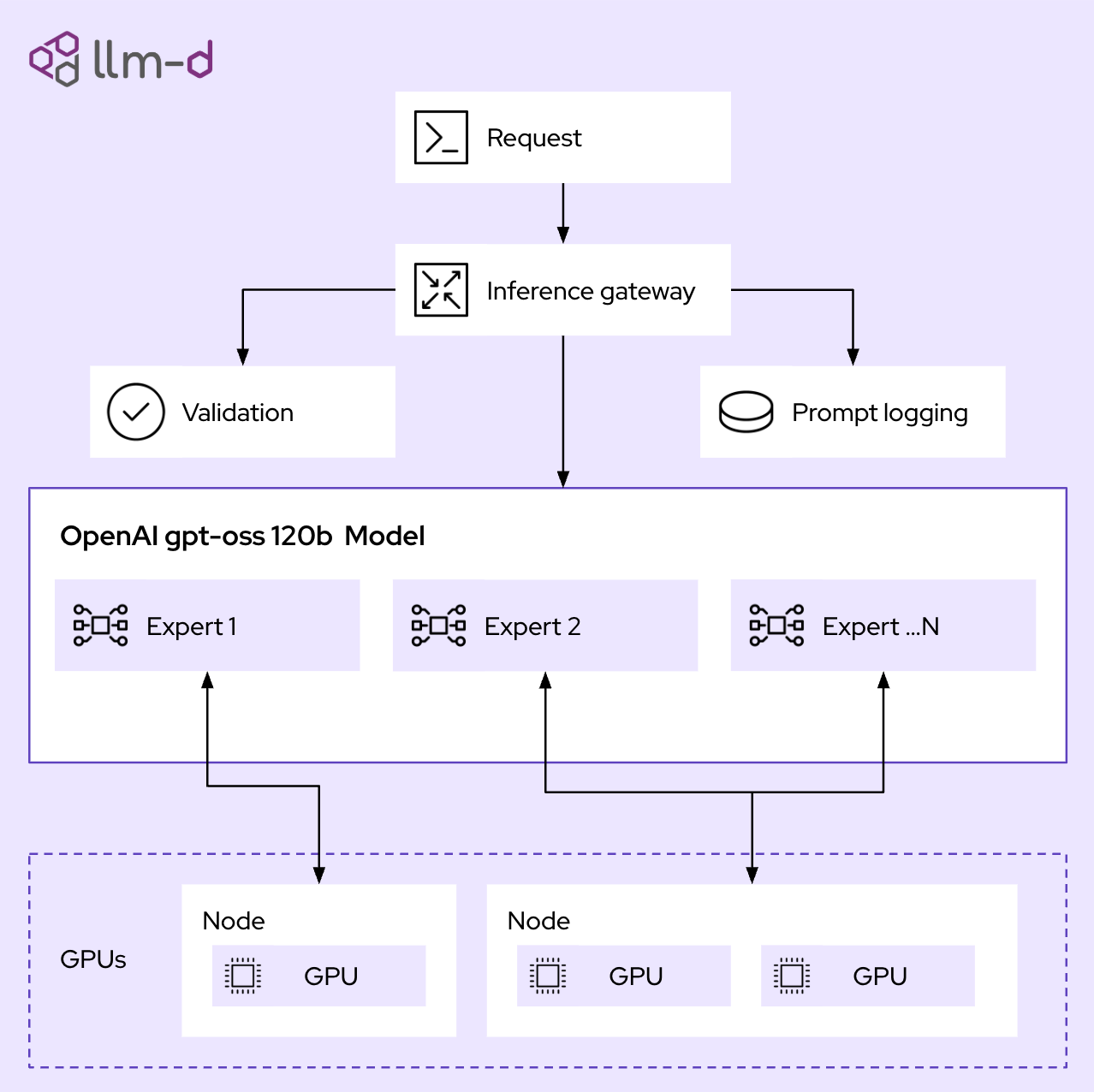

The third well-lit path introduces the concept of expert parallelism, which is essential for running very large models efficiently across distributed environments. A helpful way to understand this is through a university analogy. If you have a history question, you go to the history department rather than physics or admissions. A Mixture of Experts model works in a similar way by dividing specialized knowledge within the model into separate experts, each responsible for handling specific types of input.

This approach is implemented through two forms of parallelism: expert parallelism (EP) and data parallelism (DP). Expert parallelism, shown in Figure 5, breaks the model into specialized components and distributes them across multiple nodes or servers. Data parallelism typically complements it by replicating the remaining, non-expert parts of the model so that each replica can process a different batch of requests. Together, these allow organizations to avoid relying on a single large GPU machine. Instead, llm-d makes it possible to use multiple smaller nodes, often with mixed hardware, to scale horizontally across the entire datacenter.
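The dispatch step behind expert parallelism can be sketched in a few lines of Python. This is a toy illustration under assumptions: the `EXPERT_PLACEMENT` map, the node names, the top-2 selection, and the gate scores are all made up, and a real MoE layer would operate on tensors and exchange activations between devices. The sketch only shows how a gating function picks experts per token and how that choice determines which node does the work.

```python
import math

# Hypothetical placement: expert id -> node that hosts it.
EXPERT_PLACEMENT = {0: "node-a", 1: "node-a", 2: "node-b", 3: "node-b"}


def softmax(scores):
    """Convert raw gate scores into probabilities (numerically stable form)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_experts(gate_scores, k=2):
    """Return (expert_id, weight) for the k highest-scoring experts.

    Weights are renormalized so they sum to 1 across the chosen experts.
    """
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def dispatch(token_gate_scores, k=2):
    """Group (token_index, expert_id, weight) work items by hosting node."""
    per_node = {}
    for token_index, scores in enumerate(token_gate_scores):
        for expert_id, weight in top_k_experts(scores, k):
            node = EXPERT_PLACEMENT[expert_id]
            per_node.setdefault(node, []).append((token_index, expert_id, weight))
    return per_node
```

Calling `dispatch` with one list of gate scores per token returns a per-node work list. Each token activates only `k` experts, so only the nodes hosting those experts do work for that token, which is what lets the experts live on several smaller machines.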

This design enables models such as DeepSeek-R1 and GPT-OSS to run efficiently on standard enterprise infrastructure. Figure 6 shows how requests flow through the inference gateway to different experts located across various nodes and compute types, making large-scale distributed inference achievable and cost effective for production environments.

Building an enterprise-ready gen AI inference platform

When combined, these components (disaggregated inference, prompt-aware routing, and expert parallelism) form a complete enterprise distributed inference platform. llm-d extends vLLM's core serving capabilities across clusters, using GPUs, CPUs, and TPUs from multiple vendors.

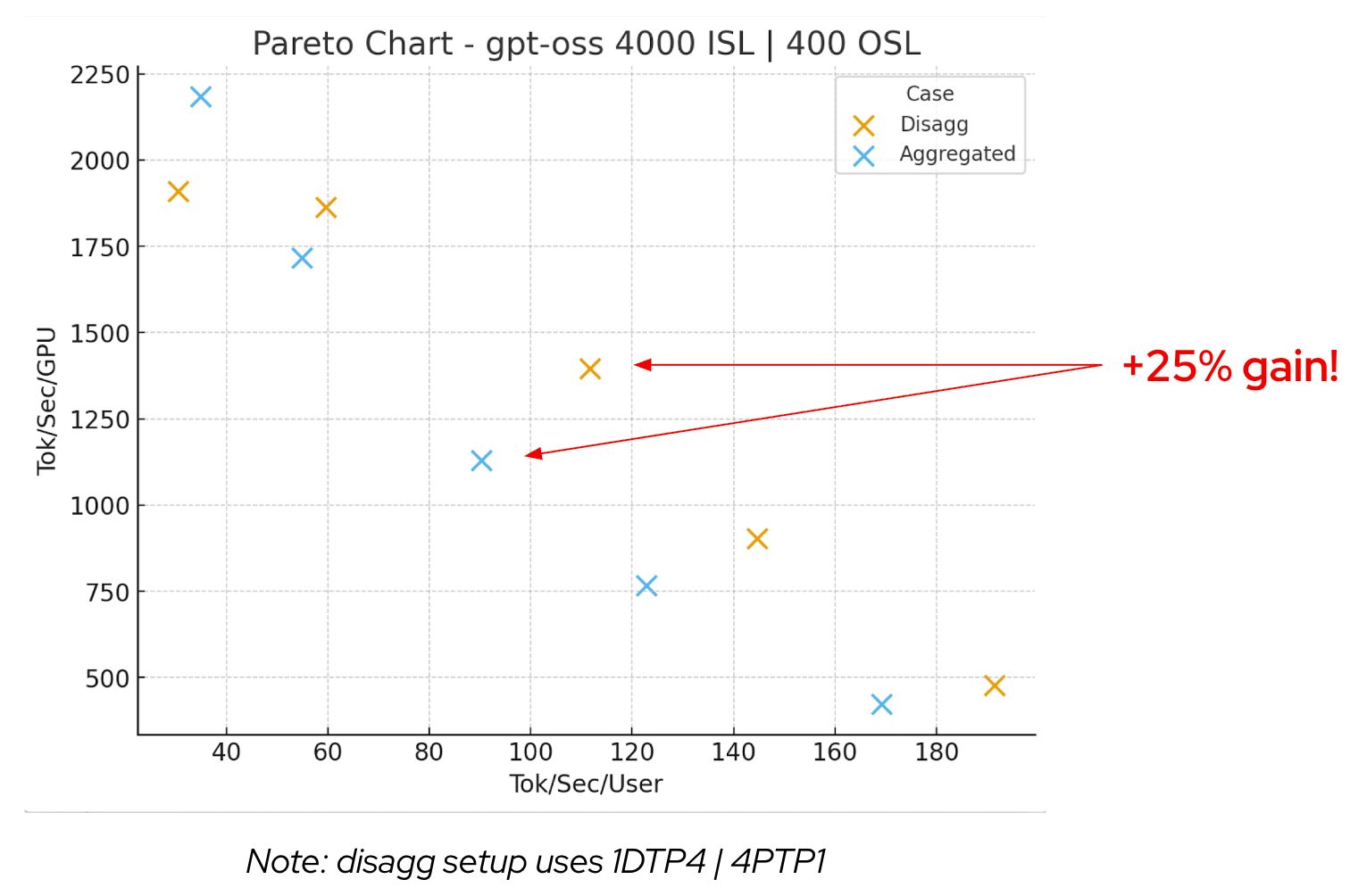

Even with default settings and no additional configuration, disaggregated inference often provides an immediate 25% performance boost (Figure 7). This comes from allowing Kubernetes to schedule the prefill and decode phases separately rather than treating the model as a single unit. By splitting these components, llm-d enables better resource allocation across the cluster, improving throughput and reducing latency. The system can handle more concurrent requests using the same hardware, making distributed inference practical even for organizations with limited GPU availability.

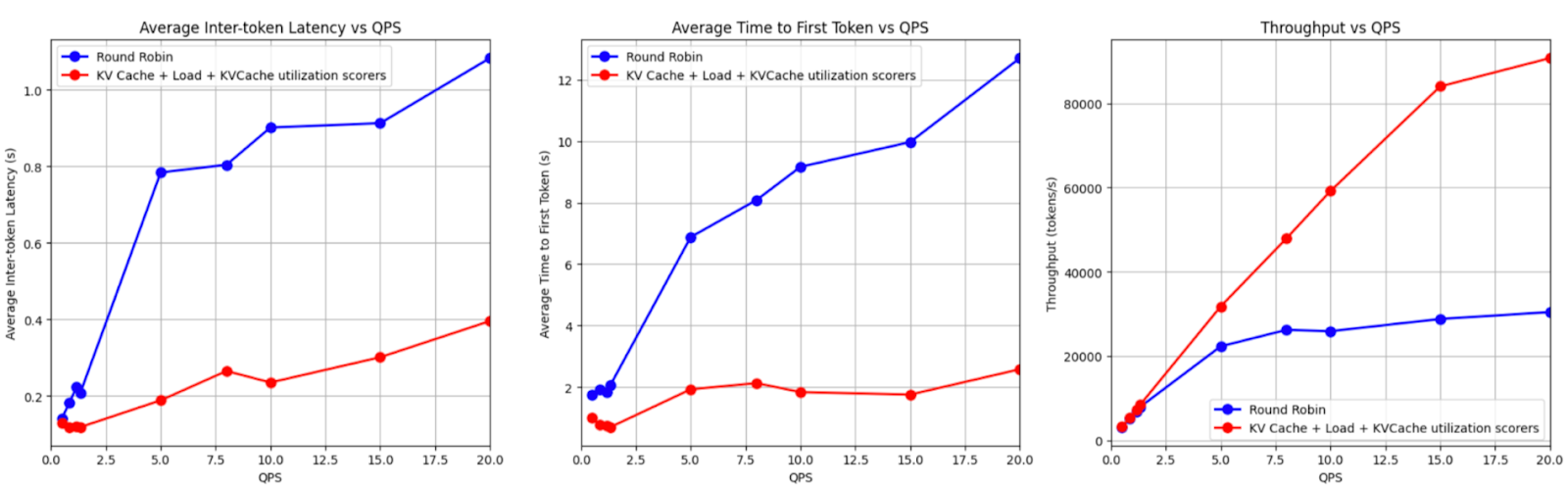

When intelligent scheduling and cache-aware routing are added, performance improves by orders of magnitude (Figure 8). These gains come from reusing cached computations, avoiding redundant prefill operations, and routing prompts to the most efficient nodes based on cache state and workload context. Together, these optimizations dramatically shorten time to first token and deliver faster, more efficient inference across the entire cluster.

Unboxing the LLM: Observability and metrics

By breaking inference into observable parts, llm-d introduces a new level of transparency. Teams can track key metrics such as time to first token, inter-token latency, and cache hit rate, all of which correlate directly to user experience and GPU efficiency. These insights help identify performance bottlenecks, minimize resource waste, and meet service-level agreements (SLAs) and service-level objectives (SLOs) across production environments.
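As a rough illustration of how these three metrics are defined, the following sketch derives them from per-request timestamps and token counts. The `RequestTrace` record and its field names are hypothetical and do not correspond to any metric names exported by llm-d or vLLM. The cache hit rate here is computed as the fraction of prompt tokens served from an existing cache, which is one reasonable definition among several.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestTrace:
    """Hypothetical per-request record; field names are illustrative."""
    arrival: float               # when the request reached the gateway (seconds)
    token_times: list[float]     # emission time of each output token (seconds)
    prompt_tokens: int           # total tokens in the prompt
    cached_prompt_tokens: int    # prompt tokens served from an existing KV cache


def time_to_first_token(trace: RequestTrace) -> float:
    """Delay between request arrival and the first generated token."""
    return trace.token_times[0] - trace.arrival


def inter_token_latency(trace: RequestTrace) -> float:
    """Mean gap between consecutive output tokens (0.0 for a single token)."""
    times = trace.token_times
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return mean(gaps) if gaps else 0.0


def cache_hit_rate(traces: list[RequestTrace]) -> float:
    """Fraction of all prompt tokens that were served from cache."""
    total = sum(t.prompt_tokens for t in traces)
    cached = sum(t.cached_prompt_tokens for t in traces)
    return cached / total if total else 0.0
```

Time to first token mostly reflects queueing and prefill cost, while inter-token latency reflects decode speed, so tracking them separately shows which phase a bottleneck belongs to.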

The cross-platform nature of llm-d

llm-d is designed to be portable and vendor-neutral. It supports diverse accelerator hardware including NVIDIA and AMD GPUs and Google TPUs, and runs across any Kubernetes-based infrastructure. This makes it easy for enterprises to operationalize distributed inference in heterogeneous environments while maintaining flexibility and control.

Conclusion

The llm-d project is fully open source and available on GitHub, complete with Helm charts for easy deployment on OpenShift clusters. Developers can experiment with disaggregated inference, prompt-aware routing, and expert parallelism today. Within OpenShift AI, these capabilities are being integrated directly into the platform, enabling users to leverage llm-d as a core part of their model-serving stack.

Distributed inference marks a new era for AI infrastructure, bringing the efficiency, observability, and scalability of Kubernetes into the world of large language models. Get involved with the llm-d community to help shape this future and bring enterprise AI fully into the Kubernetes era.