Note: Not for production use on OpenShift AI

In production, vLLM is meant for GPU-accelerated inference delivered through Red Hat OpenShift AI. It is not intended for CPU-based inference and has not been optimized for CPU performance. This article covers running vLLM on OpenShift for experimental purposes only.

vLLM has rapidly emerged as the de facto inference engine for serving large language models, celebrated for its high throughput, low latency, and efficient use of memory through PagedAttention. While much of the spotlight has focused on GPU-based deployments, the absence of GPUs shouldn't stop you from experimenting with vLLM or understanding its capabilities.

vLLM and LLMs: A match made in heaven

In this article, I’ll walk you through how to run vLLM entirely on CPUs in a bare OpenShift cluster using nothing but standard Kubernetes APIs and open source tooling. Because I am a performance engineer by trade, we’ll also dive into some fun performance-focused experiments that help explain the current state of the art in LLM inference benchmarking.

This was one of my first experiments running LLMs, and I came into it with no prior background in model inference. So if you're just getting started, you're in good company. The steps I’ll share are straightforward, reproducible, and beginner-friendly, making this a great jumping-off point for anyone curious about LLMs without access to high-end hardware.

Note that this article focuses on exploratory use cases for running vLLM on CPUs within OpenShift. For production-grade deployments of LLMs on OpenShift, Red Hat recommends using Red Hat OpenShift AI, which provides a fully supported, GPU-accelerated platform tailored for deploying, monitoring, and managing AI training and inference workloads.

OpenShift setup

For this experiment, I deployed Red Hat OpenShift 4.18, which is the latest GA version at the time of writing. The cluster was configured with a three-node control plane and five worker nodes, all running on bare metal in an internal Red Hat lab. I used the Assisted Installer to set things up, and the process was pretty straightforward. None of the nodes had GPUs; they were all Intel-based systems with ample compute and memory resources.

Building vLLM for CPU

Update

While this blog post describes the process of building your own CPU images, official pre-built images are now available via vLLM PR #32286.

vLLM is a production-grade inference engine, primarily optimized for GPUs and other hardware accelerators like TPUs. However, it does support basic inference on CPUs as well. That said, at the time of writing, no official pre-built container images existed for CPU-only use cases.

To deploy vLLM on my OpenShift cluster, I needed to build and publish a custom image. Fortunately, the vLLM GitHub repository provides various Dockerfiles that make this process straightforward. I used Podman to build the container image locally and pushed it to Quay, Red Hat’s container registry.

Here’s a step-by-step breakdown of the process I followed:

git clone https://github.com/vllm-project/vllm

cd vllm

podman build -t quay.io/smalleni/vllm:latest --arch=x86_64 -f docker/Dockerfile.cpu .

podman push quay.io/smalleni/vllm:latest

Now that the vLLM container image has been pushed to Quay, we can deploy it on OpenShift by running it within a pod.

Picking a model to run

The pace of innovation in large language models (LLMs) has been remarkable, with new models emerging almost weekly and performance benchmarks being steadily surpassed. One family of models that has gained significant traction in the industry is Meta’s Llama. For my experiment, I selected Llama-3.2-1B-Instruct, a lightweight model with just 1 billion parameters.

This particular variant is instruction-tuned, meaning it has been fine-tuned to better follow natural language instructions, such as answering questions, summarizing text, or generating code based on user prompts. It’s a great option for quick testing and low-resource experimentation. For comparison, OpenAI’s GPT-4 is rumored to use a mixture-of-experts architecture with upwards of 1.8 trillion parameters in total, although the exact number has never been disclosed.

The model is hosted on Hugging Face, a platform and open source community best known for hosting and sharing machine learning models and datasets. After you sign up, review the license, and accept its terms, you can download and use the model with an access token.

Deploying vLLM on OpenShift

With the CPU-optimized vLLM container image ready, I deployed it on OpenShift using standard resources such as Deployment and Service. Because vLLM downloads model files at runtime, I needed persistent storage to cache these assets and avoid repeated downloads across pod restarts.

To enable this, I configured local persistent storage using the Local Storage Operator, which provisions volumes from node-local disks. The vLLM container, built earlier and pushed to Quay, is launched via a Deployment that mounts a volume backed by a PersistentVolumeClaim. This volume is mapped to the Hugging Face cache directory inside the container, allowing it to retain downloaded models across restarts, significantly improving efficiency and reducing startup time.

The vLLM pod requires the privileged Security Context Constraint (SCC) to run. Because the Deployment controller launches the pod with the default service account in the namespace, I granted that service account the privileged SCC using:

oc adm policy add-scc-to-user privileged -z default -n <namespace>

The container is started with the following command:

vllm serve meta-llama/Llama-3.2-1B-Instruct --port 8001

To authenticate with Hugging Face and download the model, I created a secret containing my access token and injected it into the container via the HUGGING_FACE_HUB_TOKEN environment variable.
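The Deployment described above can be sketched roughly as follows. This is a minimal illustration, not the manifest from the author's repository: it builds the object as a Python dictionary and prints it as JSON, which `oc apply -f` accepts. The Deployment name `vllm`, the PersistentVolumeClaim `vllm-cache`, the Secret `hf-token` with key `token`, and the mount path `/root/.cache/huggingface` (the default Hugging Face cache location when the container runs as root) are all assumptions.

```python
import json


def vllm_deployment(image: str, model: str, port: int = 8001) -> dict:
    """Build a Deployment manifest for a CPU-only vLLM server.

    Hypothetical names: PVC "vllm-cache", Secret "hf-token" (key "token"),
    and cache path /root/.cache/huggingface (assumes the container runs as
    root and uses the default Hugging Face cache location).
    """
    labels = {"app": "vllm"}
    container = {
        "name": "vllm",
        "image": image,
        # Same command as in the text: vllm serve <model> --port <port>
        "command": ["vllm", "serve", model, "--port", str(port)],
        "ports": [{"containerPort": port}],
        # Inject the Hugging Face access token from a Secret.
        "env": [{
            "name": "HUGGING_FACE_HUB_TOKEN",
            "valueFrom": {"secretKeyRef": {"name": "hf-token", "key": "token"}},
        }],
        # Persist downloaded model files across pod restarts.
        "volumeMounts": [{"name": "hf-cache",
                          "mountPath": "/root/.cache/huggingface"}],
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "vllm", "labels": labels},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [container],
                    "volumes": [{
                        "name": "hf-cache",
                        "persistentVolumeClaim": {"claimName": "vllm-cache"},
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = vllm_deployment("quay.io/smalleni/vllm:latest",
                               "meta-llama/Llama-3.2-1B-Instruct")
    print(json.dumps(manifest, indent=2))
```

Assuming the script is saved under the hypothetical name `vllm_deployment.py`, you could run `python vllm_deployment.py > vllm-deployment.json` and then `oc apply -n <namespace> -f vllm-deployment.json`.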

Finally, I exposed the Deployment using a Service and Route, making the model endpoint accessible from outside the OpenShift cluster. All the manifests needed to reproduce this setup are available in this repository.
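A Service and Route along those lines might look like the sketch below, again built in Python and emitted as JSON. The name `vllm`, the label selector `app: vllm`, and the port name `http` are hypothetical; they must match the labels and port actually used by your Deployment's pods. Without an explicit `spec.host`, OpenShift generates a hostname for the Route under the cluster's apps domain.

```python
import json


def vllm_service_and_route(name: str = "vllm", port: int = 8001) -> list:
    """Return a Service and an OpenShift Route exposing the vLLM pods.

    The name and the selector {"app": name} are hypothetical and must match
    the labels on the Deployment's pod template.
    """
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},
            "ports": [{"name": "http", "port": port, "targetPort": port}],
        },
    }
    route = {
        "apiVersion": "route.openshift.io/v1",
        "kind": "Route",
        "metadata": {"name": name},
        "spec": {
            # Send external traffic to the Service above, on its "http" port.
            "to": {"kind": "Service", "name": name},
            "port": {"targetPort": "http"},
        },
    }
    return [service, route]


if __name__ == "__main__":
    # A v1 List lets `oc apply -f` create both objects from a single file.
    print(json.dumps({"apiVersion": "v1", "kind": "List",
                      "items": vllm_service_and_route()}, indent=2))
```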

Talking to the model

Since vLLM is an OpenAI-compatible model server, testing the deployed model on OpenShift is straightforward. Using a simple curl command directed at the OpenShift route, I was able to interact with the model and verify its functionality. Here's an example of a conversation I had with the model running in the cluster.

[root@d40-h18-000-r660 ~]# curl -X POST http://sai-vllm-vllm.apps.vlan604.rdu2.scalelab.redhat.com/v1/chat/completions -H "Content-Type: application/json" -d '{
  "model": "meta-llama/Llama-3.2-1B-Instruct",
  "messages": [
    {
      "role": "user",
      "content": "Hello! What is the capital of Massachusetts?"
    }
  ]
}'

{"id":"chatcmpl-68e5c8d9bff84ac6be0f9f0214a9a452","object":"chat.completion","created":1747156472,"model":"meta-llama/Llama-3.2-1B-Instruct","choices":[{"index":0,"message":{"role":"assistant","reasoning_content":null,"content":"The capital of Massachusetts is Boston.","tool_calls":[]},"logprobs":null,"finish_reason":"stop","stop_reason":null}],"usage":{"prompt_tokens":44,"total_tokens":52,"completion_tokens":8,"prompt_tokens_details":null},"prompt_logprobs":null}
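Because the endpoint is OpenAI-compatible, the same request can also be sent from Python. The sketch below uses only the standard library (`urllib.request` and `json`); the helper names are hypothetical, and `base_url` stands for your own Route's URL. The response parsing assumes the `chat.completion` shape shown above.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST to an OpenAI-compatible /v1/chat/completions endpoint."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_text: str) -> tuple:
    """Return (assistant text, usage dict) from a chat.completion response."""
    payload = json.loads(response_text)
    return payload["choices"][0]["message"]["content"], payload["usage"]


def chat(base_url: str, model: str, prompt: str, timeout: float = 120.0) -> tuple:
    """Send one prompt and return (reply, usage); needs a reachable server."""
    req = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return extract_reply(resp.read().decode("utf-8"))
```

Applied to the response above, `extract_reply` returns the text "The capital of Massachusetts is Boston." together with a usage dictionary reporting 8 completion tokens.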

Benchmarking inference servers for fun and profit

Now that I had successfully deployed and interacted with a model hosted on vLLM, I was curious to explore how one benchmarks the performance of a model server, specifically vLLM in this case. Given that my deployment was CPU-only and completely untuned, I wasn’t expecting any record-breaking results. Instead, my goal was to better understand the process of performance benchmarking in the generative AI space.

Fortunately, several open source tools exist for this purpose. One of the more popular options is GuideLLM, developed by the same team behind vLLM, making it a natural choice for this experiment.

It is Python-based and can be easily installed with:

pip install guidellm

GuideLLM offers a rich set of command-line options, letting you set prompt and output lengths in tokens for precise control over benchmarking scenarios. A particularly useful feature starts with synchronous requests and gradually scales up to maximum concurrency, which shows how the inference server performs under a series of stepwise increasing loads. GuideLLM also works with any OpenAI-compatible model server, not just vLLM, which makes it broadly useful.
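To make the stepwise idea concrete, here is a simplified sketch of how a sweep could choose its load levels: take the request rate sustained with one request at a time (synchronous) and the rate at maximum concurrency, then fill in evenly spaced constant rates between them. This illustrates the concept under that assumption only; it is not GuideLLM's scheduling code, and the function name and even spacing are hypothetical.

```python
def sweep_rates(sync_rps: float, max_rps: float, steps: int) -> list:
    """Evenly spaced request rates from the synchronous rate up to the max.

    Hypothetical helper illustrating a stepwise sweep. Each returned rate
    would be held for a fixed duration while latency and throughput are
    recorded.
    """
    if steps < 2:
        raise ValueError("need at least two steps (synchronous and max)")
    if not 0 < sync_rps <= max_rps:
        raise ValueError("expected 0 < sync_rps <= max_rps")
    step = (max_rps - sync_rps) / (steps - 1)
    return [sync_rps + i * step for i in range(steps)]
```

With hypothetical inputs (not measurements), `sweep_rates(0.5, 2.5, 5)` returns `[0.5, 1.0, 1.5, 2.0, 2.5]`.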

I kicked off the benchmark in my setup with the following command:

guidellm benchmark --target http://sai-vllm-vllm.apps.vlan604.rdu2.scalelab.redhat.com/v1 --model meta-llama/Llama-3.2-1B-Instruct --data "prompt_tokens=512,output_tokens=128" --rate-type sweep --max-seconds 240

This command targets the model served by my vLLM pod, which is part of a Deployment in OpenShift and exposed via a Route, using prompts of 512 tokens and generating outputs of 128 tokens. Before running it, export your Hugging Face access token as HF_TOKEN, because GuideLLM needs the tokenizer associated with the target model to generate synthetic data for the benchmark run.

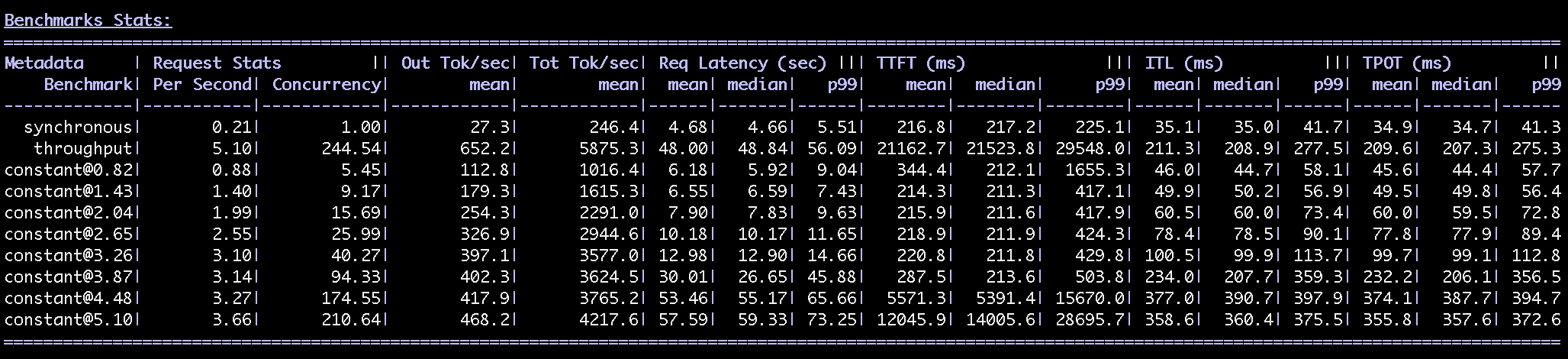

Once the benchmark completes, GuideLLM presents the key performance indicators (KPIs) and summary statistics for each of them in an easy-to-read tabular format (Figure 1).

Here is a brief summary of some of the most critical KPIs used to evaluate LLM and model server performance:

- Requests per second (RPS): The number of complete inference requests the server processes per second; reflects overall system throughput.

- Time to first token (TTFT): The time from request arrival to the first emitted token; a key latency metric that shapes perceived responsiveness.

- Inter-token latency (ITL): The average gap between consecutive output tokens (excluding the first token); affects streaming fluency and user experience.

- Total tokens per second (TPS): The total number of tokens (input plus output) processed per second; a useful measure of end-to-end performance.

- Output tokens per second: The rate at which output tokens are generated; reflects decoding efficiency during the response phase.

- Time per output token: The average time taken to generate each output token, including the first token as well as all subsequent tokens.
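To make these definitions concrete, here is a small sketch that derives them from per-token arrival timestamps. It follows the definitions in the list above (in particular, time per output token includes the first token). The `RequestTiming` type and helper names are hypothetical, and GuideLLM's own implementation may differ in details such as how it aggregates across requests.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestTiming:
    """Timestamps (seconds) recorded for one streamed request (hypothetical type)."""
    start: float         # when the request started
    token_times: list    # arrival time of each output token, in order
    prompt_tokens: int   # number of input tokens in the prompt


def per_request_metrics(r: RequestTiming) -> dict:
    n = len(r.token_times)
    if n == 0:
        raise ValueError("request produced no output tokens")
    first, last = r.token_times[0], r.token_times[-1]
    latency = last - r.start  # end-to-end time until the final token
    return {
        # TTFT: request start -> first token.
        "ttft": first - r.start,
        # ITL: mean gap between consecutive tokens, excluding the first token.
        # The gaps telescope, so their mean is (last - first) / (n - 1).
        "itl": (last - first) / (n - 1) if n > 1 else float("nan"),
        # Time per output token, as defined above: includes the first token.
        "tpot": latency / n,
        "output_tps": n / latency,
    }


def aggregate_metrics(requests: list, window_s: float) -> dict:
    """Server-level throughput over a measurement window of window_s seconds."""
    out_tokens = sum(len(r.token_times) for r in requests)
    in_tokens = sum(r.prompt_tokens for r in requests)
    return {
        "rps": len(requests) / window_s,
        "output_tps": out_tokens / window_s,
        # Total TPS counts input plus output tokens.
        "total_tps": (in_tokens + out_tokens) / window_s,
        "mean_ttft": mean(per_request_metrics(r)["ttft"] for r in requests),
    }
```

For a hypothetical request that starts at 0.0 s and receives tokens at 0.5, 0.75, 1.0, and 1.25 s, this gives a TTFT of 0.5 s, an ITL of 0.25 s, a time per output token of 0.3125 s, and 3.2 output tokens per second.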

Performance experiments

As I mentioned at the outset, this exercise was never about pushing the setup to its performance limits, especially since I wasn’t working with GPUs. That said, the performance engineer in me couldn’t resist experimenting with a few system-level tuning knobs to observe their impact.

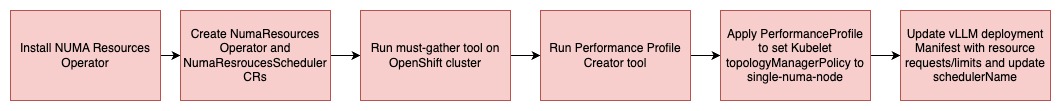

In particular, I was curious whether constraining the vLLM pod to a single NUMA node would yield better performance by avoiding cross-node memory access, which typically introduces additional latency. To test this, I enabled the NUMA-aware secondary scheduler on my OpenShift cluster and used the Performance Profile Creator tool to generate and apply a PerformanceProfile. The tool takes the output of an OpenShift must-gather and uses that information to build a profile that sets the kubelet's topologyManagerPolicy to single-numa-node on the worker node running the vLLM pod. The profile also isolated CPU cores on each NUMA node for the vLLM workload, while reserving a separate set of cores for systemd and OpenShift infrastructure pods to minimize interference.
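For reference, the general shape of such a PerformanceProfile is sketched below as a Python dictionary. The profile name, CPU ranges, and node role are hypothetical placeholders; on real hardware the Performance Profile Creator derives the CPU split from must-gather data, so treat this as an illustration of the fields involved (`spec.cpu.isolated`, `spec.cpu.reserved`, `spec.numa.topologyPolicy`) rather than a ready-to-apply profile. The helper also checks that the isolated and reserved CPU sets do not overlap.

```python
def parse_cpuset(spec: str) -> set:
    """Parse a Linux cpuset string such as "0-3,8,10-11" into a set of CPU ids."""
    cpus = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            lo, hi = (int(x) for x in part.split("-", 1))
            cpus.update(range(lo, hi + 1))
        else:
            cpus.add(int(part))
    return cpus


def performance_profile(name: str, isolated: str, reserved: str,
                        node_role: str = "worker") -> dict:
    """Sketch of a PerformanceProfile with single-NUMA-node alignment.

    All arguments are hypothetical placeholders; real CPU ranges should come
    from the Performance Profile Creator run against must-gather data.
    """
    overlap = parse_cpuset(isolated) & parse_cpuset(reserved)
    if overlap:
        raise ValueError(f"isolated and reserved CPUs overlap: {sorted(overlap)}")
    return {
        "apiVersion": "performance.openshift.io/v2",
        "kind": "PerformanceProfile",
        "metadata": {"name": name},
        "spec": {
            "cpu": {
                "isolated": isolated,  # cores for workloads such as vLLM
                "reserved": reserved,  # cores for systemd and infrastructure pods
            },
            # Sets the kubelet Topology Manager policy to single-numa-node.
            "numa": {"topologyPolicy": "single-numa-node"},
            # Selects target nodes by role label (placeholder role).
            "nodeSelector": {f"node-role.kubernetes.io/{node_role}": ""},
        },
    }
```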

Finally, I updated the vLLM deployment manifest to use the secondary scheduler and set the container's resource requests equal to its limits, so that the pod runs in the Guaranteed quality-of-service (QoS) class.

This process is illustrated in Figure 2. As this is an introductory-level article, I’ve omitted the detailed steps and manifests here, but they’re available in the GitHub repository linked earlier in the article.
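The deployment-side changes (selecting the secondary scheduler and requesting Guaranteed QoS) can be sketched as a small transformation of a pod spec. This is an illustration, not the manifest from the repository. The scheduler name `topo-aware-scheduler` used in the test values is an assumption; use whatever name your NUMA-aware scheduler was deployed with. The QoS check is deliberately simplified: it compares quantity strings literally and ignores init containers.

```python
import copy


def pin_for_numa(pod_spec: dict, scheduler_name: str, cpus: int, memory: str) -> dict:
    """Return a copy of pod_spec that uses the given scheduler and Guaranteed QoS.

    Whole-number CPU counts matter: with the static CPU manager, only
    Guaranteed pods requesting integer CPUs get exclusive cores.
    """
    spec = copy.deepcopy(pod_spec)
    spec["schedulerName"] = scheduler_name
    resources = {"cpu": str(cpus), "memory": memory}
    for container in spec["containers"]:
        # Equal requests and limits for CPU and memory on every container.
        container["resources"] = {"requests": dict(resources),
                                  "limits": dict(resources)}
    return spec


def is_guaranteed(pod_spec: dict) -> bool:
    """Simplified Guaranteed-QoS check (ignores init containers and compares
    quantity strings literally, so "1" and "1000m" count as different)."""
    containers = pod_spec.get("containers", [])
    if not containers:
        return False
    for container in containers:
        resources = container.get("resources", {})
        requests = resources.get("requests", {})
        limits = resources.get("limits", {})
        for key in ("cpu", "memory"):
            if key not in limits:
                return False
            # Kubernetes defaults a missing request to the limit.
            if requests.get(key, limits[key]) != limits[key]:
                return False
    return True
```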

For most latency-sensitive applications, I would have expected better performance when restricting the pod to a single NUMA node, compared to letting it span across NUMA boundaries. Surprisingly, the results defied expectations: performance actually degraded when I pinned the vLLM pod to a single NUMA node. While I won't quote exact numbers here, because this wasn’t a rigorously controlled benchmarking setup, the trend was still notable.

On further reflection, the performance drop made sense: applications with high memory bandwidth demands can suffer when confined to a single NUMA node, because they can draw only on that node's local memory controllers. In the case of vLLM, the bandwidth needed to stream model weights from memory into the CPU caches likely exceeded what a single NUMA node could deliver, leading to slower performance than when the workload was spread across multiple NUMA nodes. This is precisely why GPU architectures often rely on high-bandwidth memory (HBM) to meet such demands.
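A back-of-the-envelope model, added here for illustration rather than taken from the original analysis, shows why bandwidth dominates: during decoding, each step must stream essentially all model weights from memory, so memory bandwidth caps the number of decode steps per second. The bandwidth figures in the example are hypothetical, not measurements from this cluster.

```python
def decode_steps_per_s_ceiling(bandwidth_gb_s: float, n_params: float,
                               bytes_per_param: float) -> float:
    """Upper bound on decode steps per second if every step reads all weights
    from DRAM once. Ignores cache reuse, KV-cache traffic, and compute limits;
    in a batched decode, each step yields one token per sequence."""
    bytes_per_step = n_params * bytes_per_param
    return bandwidth_gb_s * 1e9 / bytes_per_step
```

With a hypothetical 100 GB/s and a 1-billion-parameter model stored at 2 bytes per parameter (16-bit weights), the ceiling is 50 steps per second; doubling the available bandwidth, for example by drawing on two NUMA nodes, doubles the ceiling in this simplified model.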

To validate my hypothesis, I needed to dig deeper into the OpenShift worker node. While there are many tools and techniques available for such analysis, I opted to focus on instructions per cycle (IPC). This is where the good old Linux perf tool comes in. By running:

perf stat -a sleep 30

on the OpenShift worker node hosting the vLLM pod, in both configurations (NUMA-restricted versus interleaved), I was able to validate my hypothesis. When the workload was interleaved across both NUMA nodes, the IPC was around 1.20. When it was restricted to a single NUMA node, the IPC dropped significantly, to 0.52.
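To repeat the IPC measurement in a scriptable way, perf can emit CSV with `-x,`, and IPC is simply the `instructions` counter divided by the `cycles` counter. The parser below assumes perf's CSV field order (counter value, unit, event name, ...) when no per-CPU or interval options are used. The helper names are hypothetical, the sample values in the test are made up, and the 1.0 threshold mirrors the rule of thumb discussed in this section rather than a hard limit.

```python
def parse_perf_csv(text: str) -> dict:
    """Map event name -> counter value from `perf stat -x,` output.

    Assumes fields start with (value, unit, event, ...); comment lines such as
    "# started on ..." and unparsable values like "<not counted>" are skipped.
    """
    counts = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split(",")
        if len(fields) < 3:
            continue
        value, event = fields[0], fields[2]
        try:
            # Drop modifiers such as ":u" so "cycles:u" maps to "cycles".
            counts[event.split(":")[0]] = float(value)
        except ValueError:
            continue
    return counts


def ipc(counts: dict) -> float:
    """Instructions per cycle from parsed counters."""
    return counts["instructions"] / counts["cycles"]


def looks_memory_bound(ipc_value: float, threshold: float = 1.0) -> bool:
    # Heuristic: on a superscalar core, IPC below ~1 suggests memory stalls.
    return ipc_value < threshold
```

A possible invocation is `perf stat -x, -e instructions,cycles -a -o perf.csv sleep 30`, after which `ipc(parse_perf_csv(open("perf.csv").read()))` yields the system-wide IPC for that window.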

An IPC below 1 in a superscalar processor like the one I had typically indicates a memory-bound workload, where the CPU is frequently stalled waiting for data to arrive from memory.

Wrapping up

This article discussed running vLLM on CPUs on a plain OpenShift cluster to prototype, validate, and iterate on LLM workflows for non-production use cases, without needing access to GPUs.

Last updated: February 18, 2026