The latest release of rhoas, the command-line interface (CLI) for Red Hat OpenShift application services, adds a powerful and flexible feature called service contexts that makes it easier than ever to connect clients to your instances of OpenShift application services. This article illustrates this new feature and shows how it can accelerate your development workflows for stream-based applications.

Service contexts facilitate client connections

Red Hat OpenShift application services, such as Red Hat OpenShift Streams for Apache Kafka and Red Hat OpenShift Service Registry, are managed cloud services that provide a streamlined developer experience for building, deploying, and scaling real-time applications in hybrid-cloud environments.

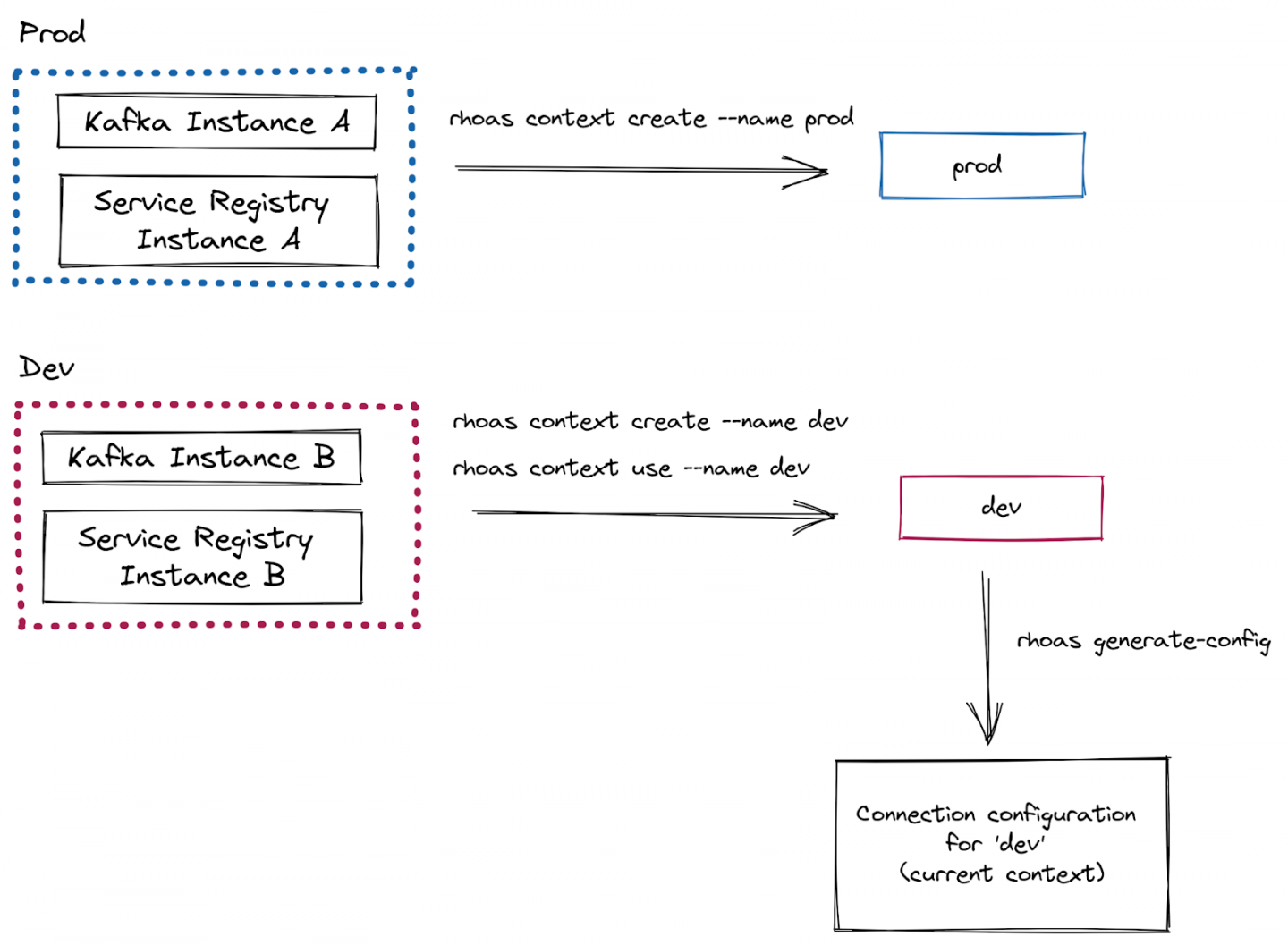

The CLI for OpenShift application services is a rich command-line interface for managing application services. With the new service contexts feature, you can use the CLI to define sets of service instances for specific use cases, projects, or environments, as indicated in Figure 1. After you define a context, a single command can generate the connection configuration information required by client applications.

Thus, service contexts let you switch easily between defined sets of service instances and quickly and reliably generate connection configurations for those instances. This is a significant improvement over time-consuming, error-prone workflows in which you create individual configuration files for each standalone service instance and for applications written in different languages.

This article shows where service contexts excel and why we are excited to introduce this feature.

First, we will walk through a practical example that uses service contexts to connect a local client application to some instances in OpenShift application services. Then we'll look at how to use contexts with OpenShift-based applications and how to share contexts with other team members.

Connect a Quarkus application to OpenShift application services

This example uses service contexts to connect an example Quarkus application to some Kafka and Service Registry instances in OpenShift application services. The Quarkus application produces a stream of quotes (actually randomly generated strings of characters) and displays them on a web page.

First, create a brand new service context:

$ rhoas context create --name quotes-dev

The context you just created becomes the current context. You can create multiple contexts, put different services in each one, and offer contexts to different developers to give them access to sets of services.

Next, create some Kafka and Service Registry instances in the current context:

$ rhoas kafka create --name example-kafka-instance --wait

$ rhoas service-registry create --name example-registry-instance

The new Kafka and Service Registry instances are automatically added to the current context. You could also add existing Kafka and Service Registry instances to the current context using the context set-kafka and context set-registry CLI commands.
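If you recreate the same development environment often, the commands from the last two steps could be collected into a small Bash function. The following is a minimal sketch that uses only the rhoas commands and flags shown above; the function name provision_quotes_dev is hypothetical, and the function simply stops at the first command that fails.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a context and the example service instances.
# Uses only the rhoas commands and flags shown in this article.
provision_quotes_dev() {
  local context_name="${1:-quotes-dev}"

  # The new context becomes the current context.
  rhoas context create --name "$context_name" || return 1

  # New instances are added to the current context automatically.
  # --wait blocks until the Kafka instance is ready.
  rhoas kafka create --name example-kafka-instance --wait || return 1
  rhoas service-registry create --name example-registry-instance
}
```

After sourcing the function, running `provision_quotes_dev quotes-dev` reproduces the steps above in one go.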

Now, clone a sample Quarkus application to run locally:

$ git clone https://github.com/redhat-developer/app-services-guides.git

$ cd app-services-guides/code-examples/quarkus-service-registry-quickstart/

Create a service account for the Quarkus application to authenticate with the Kafka and Service Registry instances in the context. Save the credentials in an environment variables file in the directory for the producer component:

$ rhoas service-account create --type env --output-file ./producer/.env

The sample Quarkus application has two components: a producer and a consumer. The producer sends a stream of quote messages to a Kafka topic. The consumer component consumes these messages and displays their strings on a web page.

The Quarkus application requires a Kafka topic called quotes in your Kafka instance. Create the topic as follows:

$ rhoas kafka topic create --name quotes

You already created a context for your Kafka and Service Registry instances, so you're ready to generate the configuration information required to connect the Quarkus application to these instances through a generate-config command:

$ rhoas generate-config --type env --output-file ./producer/rhoas.env

rhoas commands run against the service instances in the current context, so you didn't need to specify any instances explicitly in the previous command. This broad reach is an important characteristic of service contexts: it gives you the flexibility to quickly switch between whole sets of service instances and run CLI commands against them.

Append the contents of the connection configuration file to the service account environment variables file:

$ cat ./producer/rhoas.env >> ./producer/.env
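Because `>>` always appends, running this command a second time (for example, after regenerating the configuration) leaves duplicate keys in .env. If you expect to regenerate the configuration, a guarded merge like the following Bash sketch could replace the cat command. The function name merge_env is hypothetical; it keeps existing KEY=VALUE lines whose keys are not in the newly generated file, lets the new file's values win for the rest, and drops comment and blank lines from the old .env.

```shell
#!/usr/bin/env bash
# Hypothetical helper: merge a generated KEY=VALUE file into an existing .env
# so that rerunning it does not duplicate keys. Values from SRC win.
merge_env() {
  local src="$1" dest="$2" tmp line key
  tmp="$(mktemp)" || return 1
  touch "$dest"

  # Keep lines from DEST unless they are comments/blank or redefined in SRC.
  while IFS= read -r line || [ -n "$line" ]; do
    case "$line" in
      '' | '#'*) continue ;;
    esac
    key="${line%%=*}"
    if grep -q -- "^${key}=" "$src"; then
      continue
    fi
    printf '%s\n' "$line" >> "$tmp"
  done < "$dest"

  # Then add everything from the newly generated file.
  cat "$src" >> "$tmp"
  mv "$tmp" "$dest"
}
```

Usage: `merge_env ./producer/rhoas.env ./producer/.env`.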

The Quarkus application requires the connection configuration information for your context to be available to both the producer and the consumer. You previously generated the configuration in the producer directory, so you can simply copy the same .env file to the consumer directory:

$ cp ./producer/.env ./consumer/.env

For the context that you defined, the contents of the .env file should look like the following:

## Generated by rhoas cli
RHOAS_CLIENT_ID=srvc-acct-45038cc5-0eb1-496f-a678-24ca7ed0a7bd
RHOAS_CLIENT_SECRET=001a40b1-9a63-4c70-beda-3447b64a7783
RHOAS_OAUTH_TOKEN_URL=https://sso.redhat.com/auth/realms/redhat-external/protocol/openid-connect/token

## Generated by rhoas cli
## Kafka Configuration
KAFKA_HOST=test-insta-ca---q---mrrjobj---a.bf2.kafka.rhcloud.com:443
## Service Registry Configuration
SERVICE_REGISTRY_URL=https://bu98.serviceregistry.rhcloud.com/t/cc8a243a-feed-4a4c-9394-5a35ce83cca5
SERVICE_REGISTRY_CORE_PATH=/apis/registry/v2
SERVICE_REGISTRY_COMPAT_PATH=/apis/ccompat/v6
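Rather than copying values such as the client ID out of this file by hand, you could read them with a couple of small Bash functions. This is a sketch that assumes the unquoted KEY=VALUE layout shown above; the names env_value and missing_env_keys are hypothetical, and the list of required keys is simply taken from the listing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of KEY from a KEY=VALUE file.
# Everything after the first '=' is the value; the first match wins.
env_value() {
  local file="$1" key="$2"
  grep -m 1 -- "^${key}=" "$file" | cut -d '=' -f 2-
}

# Hypothetical helper: print each required key that is missing or empty,
# and return non-zero if any key is missing.
missing_env_keys() {
  local file="$1" key status=0
  for key in RHOAS_CLIENT_ID RHOAS_CLIENT_SECRET RHOAS_OAUTH_TOKEN_URL \
             KAFKA_HOST SERVICE_REGISTRY_URL; do
    if [ -z "$(env_value "$file" "$key")" ]; then
      echo "$key"
      status=1
    fi
  done
  return "$status"
}
```

For example, the service account ID for the access grants can be taken straight from the file: `rhoas kafka acl grant-access --producer --consumer --service-account "$(env_value ./producer/.env RHOAS_CLIENT_ID)" --topic quotes --group all`.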

The service-account command created a service account, whose ID is stored in the RHOAS_CLIENT_ID variable, so that client applications can authenticate with the Kafka and Service Registry instances in your context. Before the service account can work with these instances, you need to grant it access to them:

$ rhoas kafka acl grant-access --producer --consumer --service-account srvc-acct-45038cc5-0eb1-496f-a678-24ca7ed0a7bd --topic quotes --group all

$ rhoas service-registry role add --role manager --service-account srvc-acct-45038cc5-0eb1-496f-a678-24ca7ed0a7bd

You previously made the .env file available to both the producer and consumer components of the Quarkus application. Now use Apache Maven to run the producer component:

$ cd producer

$ mvn quarkus:dev

The producer component starts sending quote messages to the dedicated quotes topic in the Kafka instance.

The Quarkus application also created a schema artifact with an ID of quotes-value in the Service Registry instance. The producer uses the schema artifact to validate that each message representing a quote conforms to a defined structure. To view the contents of the schema artifact created by the Quarkus application, run the following command:

$ rhoas service-registry artifact get --artifact-id quotes-value

The output is:

{
  "type": "record",
  "name": "Quote",
  "namespace": "org.acme.kafka.quarkus",
  "fields": [
    {
      "name": "id",
      "type": {
        "type": "string",
        "avro.java.string": "String"
      }
    },
    {
      "name": "price",
      "type": "int"
    }
  ]
}

Next, with the producer still running, open a second terminal in the producer directory and use Apache Maven to run the consumer component:

$ cd ../consumer

$ mvn quarkus:dev

The consumer component consumes the stream of quotes and displays them on your local web page at http://localhost:8080/quotes.html. The consumer component also uses the quotes-value schema artifact to validate that messages conform to the structure defined in the schema.
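Quarkus dev mode needs a moment to compile and start. If you script this step, a small polling loop built on curl can wait until the page responds. The following Bash sketch is illustrative only: the function name wait_for_url, the default of 30 attempts, and the two-second interval are arbitrary assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll URL until it returns a successful HTTP response.
wait_for_url() {
  local url="$1" attempts="${2:-30}" i
  for ((i = 1; i <= attempts; i++)); do
    # -f: treat HTTP errors as failures, -s: no progress output,
    # -o /dev/null: discard the page body.
    if curl -fs -o /dev/null "$url"; then
      return 0
    fi
    sleep 2
  done
  echo "no response from $url after $attempts attempts" >&2
  return 1
}
```

Usage: `wait_for_url http://localhost:8080/quotes.html && echo "consumer is up"`.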

Figure 2 shows an example of the output displayed on the web page.

This example has shown that, after you defined a context, connecting your client application to OpenShift application services was as easy as generating a single file for connection information and copying this file to the application.

In the final sections of this blog post, we'll look briefly at two other use cases: using service contexts to connect applications in Red Hat OpenShift, and sharing service contexts with other team members.

Using service contexts to connect OpenShift-based applications

In the previous example, you generated a connection configuration as a set of environment variables, which is convenient for a client application running locally. But what if you're running a container-based application on OpenShift? Well, service contexts make that easy, too. In this situation, you can directly generate the connection configuration information as an OpenShift ConfigMap file:

$ rhoas generate-config --type configmap --output-file ./rhoas-services.yaml

After you generate a ConfigMap, you can store it in several ways, for example:

- In a Helm chart
- In a Git repository, such as one hosted on GitHub

You can apply the ConfigMap to an OpenShift project using the following command:

$ oc apply -f ./rhoas-services.yaml

When you've applied the ConfigMap to your OpenShift project, you can refer to the ConfigMap from various resources, including OpenShift application templates, Source-to-Image (S2I) builds, Helm charts, and service binding configurations.
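One generic way to consume the ConfigMap, not specific to rhoas, is to expose its keys as environment variables of an existing Deployment. The following Bash sketch assumes that rhoas-services.yaml contains a single ConfigMap and that your application runs as a Deployment; the function name inject_rhoas_config and the Deployment name used below are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the generated ConfigMap and expose its keys
# as environment variables of an existing Deployment.
# Assumes FILE contains exactly one object (the ConfigMap).
inject_rhoas_config() {
  local deployment="$1" file="${2:-./rhoas-services.yaml}" resource
  # -o name prints the applied object as <kind>/<name>, e.g. configmap/<name>.
  resource="$(oc apply -f "$file" -o name)" || return 1
  # Updating the Deployment's environment triggers a new rollout.
  oc set env "deployment/${deployment}" --from="$resource"
}
```

Usage: `inject_rhoas_config quotes-app`, where quotes-app stands in for the name of your own Deployment.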

Sharing service contexts

To share a context with other team members, you just need to share the context's configuration file. For example, you can push the file to a shared GitHub repository as described in this section.

Don't confuse the context's configuration file with the .env file of connection information that you generated earlier in the article. The context file contains no connection details or credentials; it only lists the service instances that are in the context. The file contains JSON and is stored locally on your computer. To get the path to the context file, run the following command:

$ rhoas context status

When you have the path to the file, you can copy it to a location such as a local Git repository. An example for Linux follows:

$ cp <path-to-context-file> ./profiles.json

To share the service context with other developers, commit and push the file to a shared working area such as the team's Git repository. It's safe to push context files even to public repositories because the files contain only identifiers for the service instances.
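The copy, commit, and push steps can be wrapped in one Bash function. This is a sketch under stated assumptions: you pass in the context file path that rhoas context status reports (the sketch does not parse that command's output), the current directory is a Git clone of the shared repository with an upstream configured, and the function name share_context and the commit message are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a rhoas context file into the current Git
# repository, then commit and push it so teammates can use it.
share_context() {
  local context_file="$1" dest="${2:-./profiles.json}"
  if [ ! -f "$context_file" ]; then
    echo "context file not found: $context_file" >&2
    return 1
  fi
  cp "$context_file" "$dest" || return 1
  git add "$dest" &&
    git commit -m "Share rhoas service context" &&
    git push
}
```

Usage: `share_context <path-to-context-file>`.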

Now, suppose another team member wants to use the shared context. When that team member has the context file (they fetched it from the shared repository, for example), they must define an environment variable called RHOAS_CONTEXT that points to the context file. An example for Linux follows:

$ export RHOAS_CONTEXT=./profiles.json

Service contexts: Quick, safe, and scalable

This article has shown how the new service contexts feature of the CLI greatly simplifies the job of connecting client applications to sets of service instances in Red Hat OpenShift application services. This powerful and flexible feature automates manual, error-prone configuration tasks and lets your team focus on what it does best: developing great stream-based applications.

To learn how to get started with the OpenShift application services CLI and start benefiting from the service contexts feature, see our detailed documentation:

Last updated: June 4, 2024