At Red Hat, we are often asked what consoles and graphical user interfaces (GUIs) can be used with our Kafka products, Red Hat AMQ Streams and Red Hat OpenShift Streams for Apache Kafka. Because our products are fully based on the upstream Apache Kafka project, most available consoles and GUIs designed to work with Kafka also work with our Kafka products. This article illustrates the ease of integration through a look at AKHQ, an open source GUI for Apache Kafka.

Using Apache Kafka

Apache Kafka has quickly become the leading event-streaming platform, enabling organizations to unlock and use their data in new and innovative ways. With Apache Kafka, companies can bring more value to their products by processing real-time events more quickly and accurately.

At a high level, Apache Kafka's architecture is quite simple. It's based on a few concepts such as brokers, topics, partitions, producers, and consumers. However—as with any system—when you deploy, operate, manage, and monitor a production Kafka cluster, things can quickly become complex. To use and manage Kafka clusters in both development and production environments, there are numerous tools on the market, both commercial and open source. These tools range from scripts, GUIs, and powerful command-line interfaces (CLIs) to full monitoring, management, and governance platforms. Each type of tool offers value in specific parts of the software development cycle.

This article shows how to connect AKHQ to a Kafka instance in Red Hat OpenShift Streams for Apache Kafka, a managed cloud service. Using our no-cost, 48-hour trial of OpenShift Streams, you can follow along with the steps. By the end of the article, you will be able to use AKHQ to manage your Kafka instance.

Prerequisites

To follow the instructions in this article, you'll need the following:

- A Kafka instance in Red Hat OpenShift Streams for Apache Kafka (either a trial instance or full instance). A 48-hour trial instance is available at no cost. Go to the Red Hat console, log in with your Red Hat account (or create one), and create a trial instance. You can learn how to create your first Kafka instance in this quick start guide.

- The rhoas CLI. This is a powerful command-line interface for managing various Red Hat OpenShift Application Services resources, such as Kafka instances, Service Registry instances, service accounts, and more. Download the CLI at this GitHub repository.

- A container runtime such as Podman or Docker to run the AKHQ container image used in this article.

The sections that follow show how to generate connection information for a Kafka instance in OpenShift Streams and then use this information to connect AKHQ to the Kafka instance.

Creating a service account

For AKHQ to connect to your Kafka instance, it needs credentials to authenticate with OpenShift Streams for Apache Kafka. In the OpenShift Application Services world, this means that you must create a service account. You can do this using the web console, the CLI, or a REST API call. The following steps show how to do so using the web console:

- Navigate to https://console.redhat.com/application-services/service-accounts and click Create service account.

- Enter a name for the service account. In this case, let's call it akhq-sa.

- Click Create.

- Copy the generated Client ID and Client secret values to a secure location. You'll specify these credentials when configuring a connection to the Kafka instance.

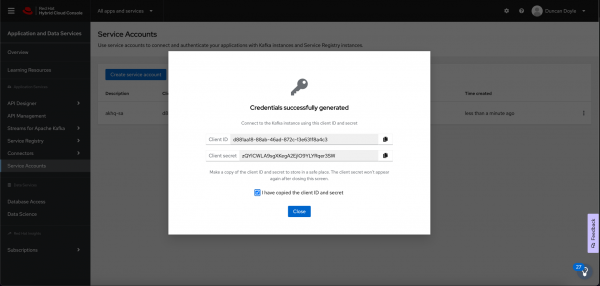

After you save the generated credentials to a secure location, select the confirmation check box and click Close, as shown in Figure 1.

Figure 1: A dialog box shows that security credentials were generated.

Configuring Kafka ACLs

Before AKHQ can use the service account to connect to your Kafka instance in OpenShift Streams, you need to configure authorization of the account using Kafka Access Control List (ACL) permissions. At a minimum, you need to give the service account permissions to create and delete topics and produce and consume messages.

To configure ACL permissions for the service account, this article uses the rhoas CLI. You can also use the web console or the REST API to configure the permissions.

NOTE: In this article, we show how to use AKHQ to manage topics and consumer groups. Therefore, we set only the ACLs required to manage those resources. If you want to manage other Kafka resources—for example, the Kafka broker configuration—you might need to configure additional ACL permissions for your service account. However, always be sure to set only the permissions needed for your use case. Having minimal permissions limits the attack surface of your Kafka cluster, improving security.

The following steps show how to set the required ACL permissions for your service account.

Log in to the

rhoasCLI:rhoas loginA login flow opens in your web browser.

- Log in with the Red Hat account that you used to create your OpenShift Streams instance.

Specify the Kafka instance in OpenShift Streams that you would like to use:

rhoas kafka useThe

rhoas kafka usecommand sets the OpenShift Streams instance in the context of your CLI, meaning that subsequent CLI operations (for example, setting ACLs), are performed against this Kafka instance.Set the required Kafka ACL permissions, as shown in the following example:

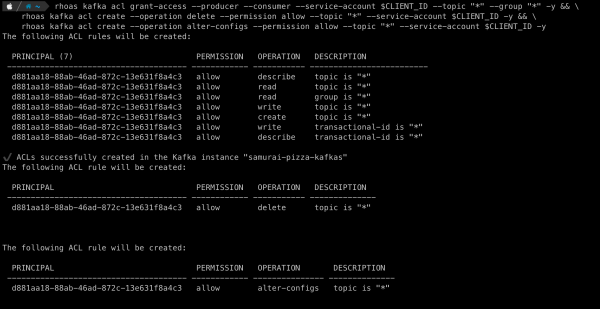

export CLIENT_ID=<your-service-account-client-id> rhoas kafka acl grant-access --producer --consumer --service-account $CLIENT_ID --topic "*" --group "*" -y && \ rhoas kafka acl create --operation delete --permission allow --topic "*" --service-account $CLIENT_ID -y && \ rhoas kafka acl create --operation alter-configs --permission allow --topic "*" --service-account $CLIENT_ID -yThe example invokes the

grant-accesssubcommand to set ACL permissions to produce and consume messages in any topic in the Kafka instance. To fully manage topics from AKHQ, subsequent commands allow thedeleteandalter-configsoperations on any topic. Output from the commands is shown in Figure 2, indicating that the desired permissions and ACLs were created.

Figure 2: Output from the ACL commands show the permissions and ACLs generated. Figure 2: Output from the ACL commands shows the permissions and ACLs generated.

NOTE: This example uses the * wildcard character to set permissions for all topics in the Kafka cluster. You can limit access by setting the permissions for a specific topic name or for a set of topics using a prefix.
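As a sketch of the narrower alternative mentioned in the note, the following shell function grants produce and consume access only to topics that share a prefix. The function name grant_prefix_access, the DRY_RUN switch, and the example prefix orders- are hypothetical. The --topic-prefix flag is an assumption about the rhoas CLI, so confirm it with rhoas kafka acl grant-access --help before relying on it.

```shell
# Hypothetical helper: grant produce/consume access to topics sharing a prefix.
# Assumption: the rhoas CLI accepts --topic-prefix (verify with --help).
# Arguments: $1 = service account client ID, $2 = topic prefix.
grant_prefix_access() {
  if [ -z "${1:-}" ] || [ -z "${2:-}" ]; then
    echo "usage: grant_prefix_access <client-id> <topic-prefix>" >&2
    return 1
  fi
  # With DRY_RUN=1 the command is printed instead of executed,
  # which makes it easy to review before touching the Kafka instance.
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "rhoas kafka acl grant-access --producer --consumer --service-account $1 --topic-prefix $2 --group * -y"
    return 0
  fi
  rhoas kafka acl grant-access --producer --consumer \
    --service-account "$1" \
    --topic-prefix "$2" --group "*" -y
}
```

For example, running DRY_RUN=1 grant_prefix_access "$CLIENT_ID" orders- prints the command that would be run without changing any ACLs.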

Connecting AKHQ to OpenShift Streams for Apache Kafka

With your ACL permissions in place, you can now configure AKHQ to connect to your Kafka instance in OpenShift Streams. There are multiple ways to run AKHQ. The AKHQ installation documentation describes the various options in detail.

This article runs AKHQ in a container. This means that you need a container runtime such as Podman or Docker. We show how to run the container using Docker Compose, but you can also use Podman Compose with the same compose file.

The AKHQ configuration in this example is a very basic configuration that connects to an OpenShift Streams Kafka instance using Simple Authentication and Security Layer (SASL) OAuthBearer authentication. The configuration uses the client ID and client secret values of your service account and an OAuth token endpoint URL, which is required for authentication with Red Hat's single sign-on (SSO) technology. The example configuration looks like this:

    akhq:
      # list of kafka cluster available for akhq
      connections:
        openshift-streams-kafka:
          properties:
            bootstrap.servers: "${BOOTSTRAP_SERVER}"
            security.protocol: SASL_SSL
            sasl.mechanism: OAUTHBEARER
            sasl.jaas.config: >
              org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required
              oauth.client.id="${CLIENT_ID}"
              oauth.client.secret="${CLIENT_SECRET}"
              oauth.token.endpoint.uri="${OAUTH_TOKEN_ENDPOINT_URI}" ;
            sasl.login.callback.handler.class: io.strimzi.kafka.oauth.client.JaasClientOauthLoginCallbackHandler

You'll notice that the configuration also uses the OauthLoginCallbackHandler class from the Strimzi project. This callback handler class is packaged by default with AKHQ, enabling you to use OAuthBearer authentication against OpenShift Streams.
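To make the placeholder substitution concrete, here is a small shell sketch that prints the sasl.jaas.config value as it would look once the environment variables are filled in. The function name render_jaas_config is hypothetical and is not part of AKHQ or the example repository. It only mirrors the substitution, printing the value on a single line because the YAML folded scalar (>) joins the lines with spaces.

```shell
# Hypothetical helper: print the JAAS configuration string that results from
# substituting the environment variables referenced in the AKHQ configuration.
# Note: the output contains the client secret, so avoid running it in a
# shared terminal or writing it to a log.
render_jaas_config() {
  if [ -z "${CLIENT_ID:-}" ] || [ -z "${CLIENT_SECRET:-}" ] || [ -z "${OAUTH_TOKEN_ENDPOINT_URI:-}" ]; then
    echo "CLIENT_ID, CLIENT_SECRET, and OAUTH_TOKEN_ENDPOINT_URI must all be set" >&2
    return 1
  fi
  printf '%s oauth.client.id="%s" oauth.client.secret="%s" oauth.token.endpoint.uri="%s" ;\n' \
    "org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required" \
    "$CLIENT_ID" "$CLIENT_SECRET" "$OAUTH_TOKEN_ENDPOINT_URI"
}
```

If the function reports an error instead of printing a line, at least one of the three variables is unset or empty in the current shell.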

The configuration shown is included in the docker-compose.yml file that we'll use to run our containerized AKHQ instance. You can find the docker-compose.yml file in this GitHub repository.

The following steps show how to use Docker Compose to connect AKHQ to your Kafka instance in OpenShift Streams:

- Clone the GitHub repository that has the example docker-compose.yml file:

    git clone https://github.com/DuncanDoyle/rhosak-akhq-blog.git
    cd rhosak-akhq-blog

- Set environment variables that define connection information for your Kafka instance.

    export CLIENT_ID=<your-service-account-client-id>
    export CLIENT_SECRET=<your-service-account-client-secret>
    export BOOTSTRAP_SERVER=<your-kafka-bootstrap-server-url-and-port>
    export OAUTH_TOKEN_ENDPOINT_URI=https://sso.redhat.com/auth/realms/redhat-external/protocol/openid-connect/token

These environment variables specify the client ID and client secret values of your service account, the OAuth token endpoint URL used for authentication with Red Hat's SSO service, and the bootstrap server URL and port of your Kafka instance.

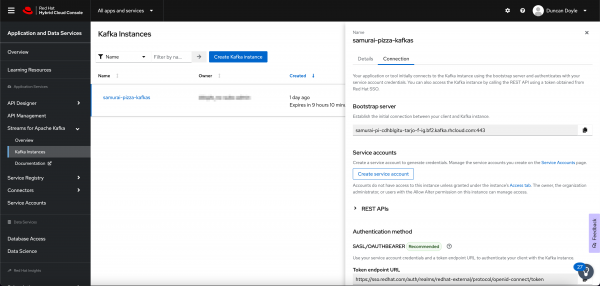

You can get the bootstrap server information for your Kafka instance in the OpenShift Streams web console or by using the rhoas kafka describe CLI command. You can also get the OAuth token endpoint URL in the web console. However, because this URL is a static value in OpenShift Streams, you can simply set it to https://sso.redhat.com/auth/realms/redhat-external/protocol/openid-connect/token. Figure 3 shows how to get the bootstrap server and token URL in the web console.

Figure 3: Choose your Kafka instance to find the bootstrap server and token URL.

- Start AKHQ using docker-compose:

    docker-compose up

The AKHQ management console becomes available at http://localhost:8080.

If you've configured everything correctly, when you hover your mouse over the datastore icon, you should see an openshift-streams-kafka connection, as shown in Figure 4.
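If the connection does not appear, two things worth ruling out are a missing environment variable and rejected service account credentials. The following shell sketch covers both. The function names check_akhq_env and request_token are hypothetical, and request_token assumes that the token endpoint accepts the OAuth 2.0 client-credentials grant with a form-encoded client ID and client secret.

```shell
# Hypothetical troubleshooting helpers; the function names are illustrative.

# Report any of the four connection variables that is unset or empty.
check_akhq_env() {
  missing=""
  [ -n "${CLIENT_ID:-}" ] || missing="$missing CLIENT_ID"
  [ -n "${CLIENT_SECRET:-}" ] || missing="$missing CLIENT_SECRET"
  [ -n "${BOOTSTRAP_SERVER:-}" ] || missing="$missing BOOTSTRAP_SERVER"
  [ -n "${OAUTH_TOKEN_ENDPOINT_URI:-}" ] || missing="$missing OAUTH_TOKEN_ENDPOINT_URI"
  if [ -n "$missing" ]; then
    echo "missing:$missing" >&2
    return 1
  fi
  # Loose check that the bootstrap server includes a port (host:port).
  case "$BOOTSTRAP_SERVER" in
    *:[0-9]*) ;;
    *) echo "BOOTSTRAP_SERVER should look like host:port" >&2; return 1 ;;
  esac
  echo "environment looks complete"
}

# Request a token with the service account credentials.
# Assumption: the endpoint accepts the client-credentials grant as form data.
# --fail makes curl return a non-zero status on an HTTP error response.
request_token() {
  curl --silent --show-error --fail \
    --data-urlencode "grant_type=client_credentials" \
    --data-urlencode "client_id=$CLIENT_ID" \
    --data-urlencode "client_secret=$CLIENT_SECRET" \
    "$OAUTH_TOKEN_ENDPOINT_URI"
}
```

Running check_akhq_env && request_token in the shell where you exported the variables should return a JSON document. If it contains an access_token field, the credentials were accepted, and the problem is more likely in the ACLs or the bootstrap server value.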

Managing OpenShift Streams

With the AKHQ management console connected, you can now use the console to interact with your Kafka instance in OpenShift Streams.

The following steps show how to create a topic, produce some data, and inspect the data that you've produced to the topic. You can use the OpenShift Streams web console and rhoas CLI to do these things, but remember that the point of this example is to show how you can use the AKHQ console!

- Create a topic. In the lower-right corner of the AKHQ console, click Create a topic.

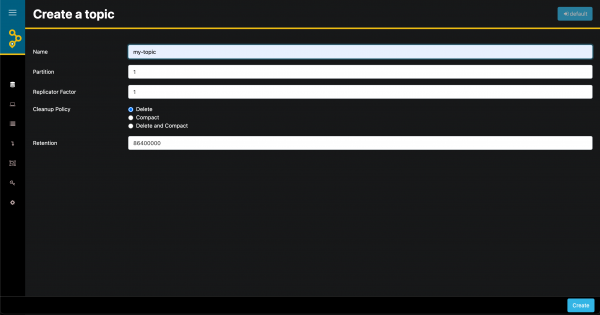

On the topic creation page, name the topic my-topic, keep the default values for all the other options, and click Create, as shown in Figure 5.

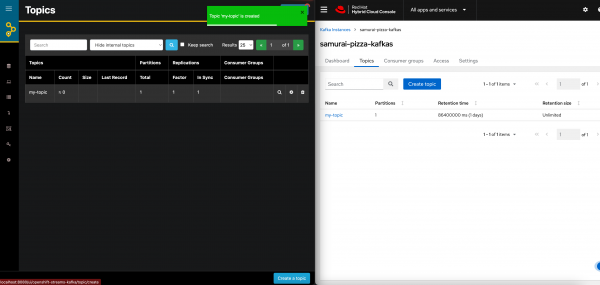

Figure 5: The "Create a topic" page lets you assign a name and other attributes.

If you have set ACL permissions on the service account correctly, you see the topic that you just created. You can also see the same topic in the OpenShift Streams web console. Figure 6 shows the same topic as it appears in both consoles.

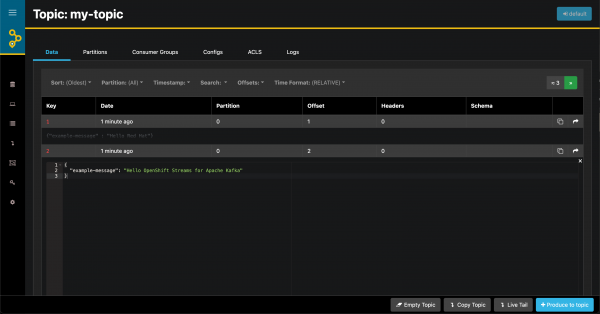

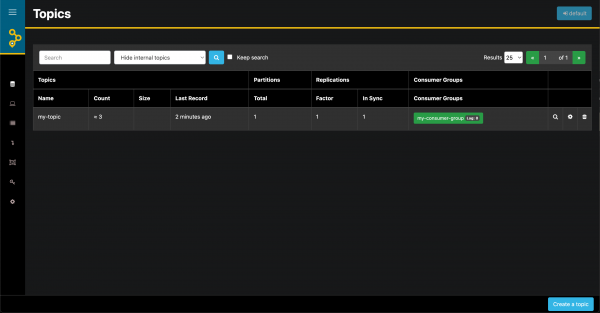

Figure 6: Topic in the AKHQ console and the OpenShift Streams console.

To perform tasks such as inspecting and producing messages, viewing consumer groups, inspecting consumer lag, and so on, click your new topic in the AKHQ console. Figure 7 shows a message in JSON format that has come into the topic, and Figure 8 shows statistics about the topic.

Figure 7: A message appears in JSON format in the topic.

Figure 8: Statistics about your Kafka topic.

Red Hat simplifies access to open source tools

This article has shown how to manage and monitor a Kafka instance in Red Hat OpenShift Streams for Apache Kafka using AKHQ. For more information about AKHQ, see the AKHQ documentation.

On a larger scale, the article illustrates the ability to use popular tools from the open source Kafka ecosystem with Red Hat's managed cloud services. This openness gives you the flexibility you need when building enterprise-scale systems based on open source technologies. The use of open standards and non-proprietary APIs and protocols in Red Hat service offerings enables seamless integration with various technologies.

If you haven't yet done so, please visit the Red Hat Hybrid Cloud Console for more information about OpenShift Streams for Apache Kafka, as well as our other service offerings. OpenShift Streams for Apache Kafka provides a 48-hour trial version of our product at no cost. We encourage you to give it a spin.

Last updated: August 27, 2025