It's been almost a year since Red Hat Developer published Build an API using Quarkus from the ground up. That article tried to provide a single full reference implementation of an OpenAPI-compliant REST API using Quarkus. Since then, there's been a major version release of Quarkus, with new features that make building and maintaining a Quarkus-based REST API much easier. In this article, you will revisit the Customer API from the previous article and see how it can be improved thanks to advances in Quarkus.

In creating this article, we made an effort to remain aware of a subtle consideration in any development effort: you always need to keep an eye on your imports. Whenever you add imports, you should consciously attempt to limit your exposure to third-party libraries, focusing on staying in the abstraction layers such as the MicroProfile abstractions. Remember, every library you import is your responsibility to care for and update.

You can find the source code for this article on GitHub. You should familiarize yourself with the original version of the Customer API by reading the earlier article before you begin this one.

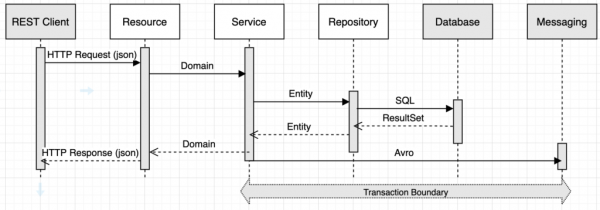

Architecture layers: Resource, service, and repository

The architecture layers of the Customer API haven't changed, as you can see in Figure 1. In a rapid development effort, some developers might think these layers are cumbersome and unnecessary for a prototype or minimum viable product. Using the YAGNI ("You aren't gonna need it") approach has its benefits. In my experience working in enterprise environments, however, the YAGNI approach can come back to haunt a development team. There's a difference between development using best practices and premature optimization, but where one ends and the other begins can be highly subjective. Be mindful of what the business expects a prototype to mean in terms of being production-ready.

Project initialization

Begin where you started last time: at the command line.

```shell
mvn io.quarkus.platform:quarkus-maven-plugin:2.6.1.Final:create \
    -DprojectGroupId=com.redhat.customer \
    -DprojectArtifactId=customer-api \
    -DclassName="com.redhat.customer.CustomerResource" \
    -Dpath="/customers" \
    -Dextensions="config-yaml,resteasy-reactive-jackson"
cd customer-api
```

Right off the bat, you'll notice we've jumped from 1.13.3 to 2.6.1. That's a pretty big jump, and with it comes a bevy of new features and functionality.

Quarkus added Dev Services to the framework. Dev Services use Testcontainers behind the scenes to spin up the infrastructure needed to run integration tests locally against production-like services. Java 11 is now the minimum supported version, alongside GraalVM 21, Vert.x 4, and Eclipse MicroProfile 4. There's also a new continuous testing feature, which runs your tests in the background while the application is running in Dev Mode. Continuous testing is a huge gain in development productivity, giving you fast feedback on changes that might break the tests.

Lombok and MapStruct

Lombok is a Java library that helps keep the amount of boilerplate code in an application to a minimum; however, its use is not without controversy in the developer community. There are plenty of articles out there detailing the good and bad sides of using code generation annotations. You will be using it for this application, but as with any library you import, remember that it's up to you and your team to understand its implications.

MapStruct is a code generator that greatly simplifies the implementation of mappings between JavaBean types based on a convention-over-configuration approach. It seems to be less controversial than Lombok; however, it's still doing code generation, so the same caution should be used.

When you're using the two in combination, it's important to have both the Lombok annotation processor and the MapStruct annotation processor configured in the compiler plugin to ensure that both are executed. Here are the excerpts of the pom.xml changes you'll need to make.

```xml
<properties>
    ...
    <lombok.version>1.18.22</lombok.version>
    <mapstruct.version>1.4.2.Final</mapstruct.version>
    ...
</properties>

<dependencies>
    ...
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
        <version>${lombok.version}</version>
    </dependency>
    <dependency>
        <groupId>org.mapstruct</groupId>
        <artifactId>mapstruct</artifactId>
        <version>${mapstruct.version}</version>
    </dependency>
    ...
</dependencies>

<build>
    <plugins>
        ...
        <plugin>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>${compiler-plugin.version}</version>
            <configuration>
                <compilerArgs>
                    <arg>-parameters</arg>
                </compilerArgs>
                <!-- Both processors must be listed so javac runs Lombok and MapStruct -->
                <annotationProcessorPaths>
                    <path>
                        <groupId>org.projectlombok</groupId>
                        <artifactId>lombok</artifactId>
                        <version>${lombok.version}</version>
                    </path>
                    <path>
                        <groupId>org.mapstruct</groupId>
                        <artifactId>mapstruct-processor</artifactId>
                        <version>${mapstruct.version}</version>
                    </path>
                </annotationProcessorPaths>
            </configuration>
        </plugin>
        ...
    </plugins>
</build>
```

Exceptions

Next, you'll add a quick exception implementation for use in the project. By using a simple ServiceException, you can simplify the exception model and add some message formatting for ease of use in the code.

```java
package com.redhat.exception;

public class ServiceException extends RuntimeException {

    public ServiceException(String message) {
        super(message);
    }

    public ServiceException(String format, Object... objects) {
        super(String.format(format, objects));
    }
}
```
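To see how the two constructors behave, here is a small standalone sketch. It nests a copy of ServiceException inside a demo class (an assumption made only so the example compiles on its own) and adds a hypothetical requireCustomer helper that fails the same way CustomerService.update does when no row matches.

```java
// Standalone sketch: a nested copy of the article's ServiceException plus a
// hypothetical helper (requireCustomer) used only to demonstrate the formatting.
public class ServiceExceptionDemo {

    static class ServiceException extends RuntimeException {
        ServiceException(String message) {
            super(message); // passed through as-is, no formatting
        }

        ServiceException(String format, Object... objects) {
            super(String.format(format, objects)); // printf-style formatting
        }
    }

    // Hypothetical helper: always throws, like update() when the ID is unknown.
    static String requireCustomer(Integer customerId) {
        throw new ServiceException("No Customer found for customerId[%s]", customerId);
    }

    public static void main(String[] args) {
        try {
            requireCustomer(42);
        } catch (ServiceException e) {
            System.out.println(e.getMessage()); // No Customer found for customerId[42]
        }
    }
}
```

Because Java picks the non-varargs overload first, a one-argument call such as new ServiceException("Customer does not have a customerId") never goes through String.format, so a literal % in a plain message is safe.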

Repository layer

Since we are starting from the ground up, it's time to talk databases. Database interactions will be managed by the Quarkus Panache extension, which uses Hibernate ORM under the covers but adds a ton of functionality on top to make developers more productive. The demo uses a PostgreSQL database and manages the schema with Flyway, which lets database schemas be versioned and kept in source control. You'll add the Hibernate Validator extension as well, because validation annotations are used across all your plain old Java objects (POJOs).

Add these extensions to the project's pom.xml file.

```xml
<dependency>
    <groupId>io.quarkus</groupId>
    <artifactId>quarkus-hibernate-validator</artifactId>
</dependency>
<dependency>
    <groupId>io.quarkus</groupId>
    <artifactId>quarkus-hibernate-orm-panache</artifactId>
</dependency>
<dependency>
    <groupId>io.quarkus</groupId>
    <artifactId>quarkus-jdbc-postgresql</artifactId>
</dependency>
<dependency>
    <groupId>io.quarkus</groupId>
    <artifactId>quarkus-flyway</artifactId>
</dependency>
```

Flyway

Next, you'll put together the customer table. Flyway expects the migration files to be located in a folder on your classpath named db/migration. Here's how you'd create the first version of the customer table:

src/main/resources/db/migration/V1__customer_table_create.sql

```sql
CREATE TABLE customer
(
    customer_id SERIAL PRIMARY KEY,
    first_name  TEXT NOT NULL,
    middle_name TEXT,
    last_name   TEXT NOT NULL,
    suffix      TEXT,
    email       TEXT,
    phone       TEXT
);

ALTER SEQUENCE customer_customer_id_seq RESTART 1000000;
```

This table differs from its equivalent in the previous article in a couple of subtle ways. First, the VARCHAR(100) columns have been replaced with TEXT columns. A TEXT column is a variable-length string column with no length limit. According to the PostgreSQL documentation, there is no performance difference between the two types, apart from a few extra CPU cycles spent checking the length when storing into a length-constrained column. Why keep an arbitrary limit on column sizes when removing it costs nothing?

The new table also includes an ALTER statement to adjust the starting point for the generated SEQUENCE created from the SERIAL type. By starting at 1 million, you can actually use key values smaller than the starting point to insert baseline test data, which might come in handy in your testing scenarios. Of course, doing this "wastes" a million IDs, but the SERIAL type is an integer capable of holding 2,147,483,647 unique values. This gives you a huge runway.
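The runway arithmetic is easy to check. This short sketch assumes, as the paragraph does, that SERIAL is PostgreSQL's 4-byte integer sequence topping out at 2,147,483,647 and that the migration restarts it at 1,000,000. The class and method names are illustrative only.

```java
// Sketch of the ID "runway" arithmetic for a SERIAL column restarted at 1,000,000.
public class SerialRunway {

    // SERIAL is backed by a 4-byte integer; its sequence can reach 2,147,483,647.
    static final long SERIAL_MAX = Integer.MAX_VALUE;

    // Value used by ALTER SEQUENCE customer_customer_id_seq RESTART 1000000.
    static final long SEQUENCE_START = 1_000_000L;

    /** IDs the sequence can still generate, counting the start value itself. */
    static long generatedIdsAvailable() {
        return SERIAL_MAX - SEQUENCE_START + 1;
    }

    /** Positive IDs below the start value (1 .. 999,999) left free for hand-inserted test data. */
    static long reservedForTestData() {
        return SEQUENCE_START - 1;
    }

    public static void main(String[] args) {
        System.out.println(generatedIdsAvailable()); // 2146483648
        System.out.println(reservedForTestData());   // 999999
    }
}
```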

JPA with Panache

Using the Java Persistence API (JPA) begins with building an entity object. When using Panache, you have a choice between two patterns: Active Record and Repository. I prefer the Repository pattern because I tend to favor the single-responsibility principle, and the Active Record pattern blends the querying actions with the data.

```java
package com.redhat.customer;

import lombok.Data;

import javax.persistence.*;
import javax.validation.constraints.Email;
import javax.validation.constraints.NotEmpty;

@Entity(name = "Customer")
@Table(name = "customer")
@Data
public class CustomerEntity {

    @Id
    @GeneratedValue(strategy = GenerationType.IDENTITY)
    @Column(name = "customer_id")
    private Integer customerId;

    @Column(name = "first_name")
    @NotEmpty
    private String firstName;

    @Column(name = "middle_name")
    private String middleName;

    @Column(name = "last_name")
    @NotEmpty
    private String lastName;

    @Column(name = "suffix")
    private String suffix;

    @Column(name = "email")
    @Email
    private String email;

    @Column(name = "phone")
    private String phone;
}
```

The CustomerEntity hasn't changed from the previous incarnation of the Customer API. The only difference is the use of the @Data annotation from Lombok to handle the generation of the getters/setters/hashCode/equals/toString. The practices detailed in the previous article still hold true:

- I like to name all my JPA entity classes with the suffix Entity. They serve one purpose: to map back to the database tables. I always provide a layer of indirection between Domain objects and Entity objects because, when it's missing, I've lost more time than I've spent creating and managing the data-copying processes.
- Because of the way JPA derives entity names from class names, you have to explicitly add the @Entity annotation with the name you want so that your HQL queries don't have to reference CustomerEntity. Setting the name attribute of @Entity lets you use the name Customer rather than the class name.
- I like to explicitly name both the table and the columns with the @Table and @Column annotations. Why? I've lost more time when a code refactor inadvertently broke the assumed naming contracts than it costs to write a few extra annotations.

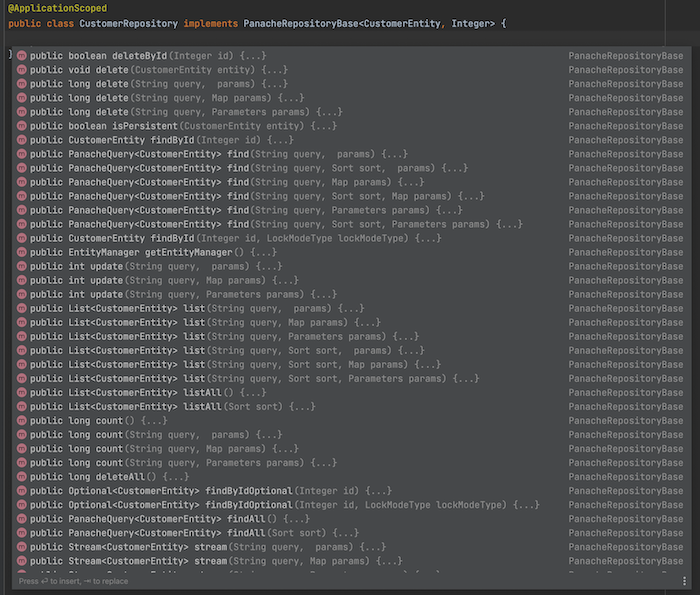

The next step is to create the Repository class:

```java
package com.redhat.customer;

import io.quarkus.hibernate.orm.panache.PanacheRepositoryBase;

import javax.enterprise.context.ApplicationScoped;

@ApplicationScoped
public class CustomerRepository implements PanacheRepositoryBase<CustomerEntity, Integer> {
}
```

The Panache library adds value by providing the most widely used set of query methods out of the box, as you can see in Figure 2.

Service layer: Domain object, MapStruct mapper, and Service

In this example, the domain object is fairly simple: it's basically a copy of the entity object with the persistence annotations removed. As mentioned earlier, this might seem like overkill, because most basic tutorials simply pass the entity object up through the layers. However, if the way the data is stored in the database and the way the domain is modeled ever diverge, this additional layer pays off. Creating the layering takes a bit of work now, but it keeps the architecture clean and restricts dependencies to their respective layers, so deferring that work isn't worth it.

```java
package com.redhat.customer;

import lombok.Data;

import javax.validation.constraints.Email;
import javax.validation.constraints.NotEmpty;

@Data
public class Customer {

    private Integer customerId;

    @NotEmpty
    private String firstName;

    private String middleName;

    @NotEmpty
    private String lastName;

    private String suffix;

    @Email
    private String email;

    private String phone;
}
```

In the service layer, you will need to convert entity objects to domain objects. That's where MapStruct comes in: to do the mapping for you.

```java
package com.redhat.customer;

import org.mapstruct.InheritInverseConfiguration;
import org.mapstruct.Mapper;
import org.mapstruct.MappingTarget;

import java.util.List;

@Mapper(componentModel = "cdi")
public interface CustomerMapper {

    List<Customer> toDomainList(List<CustomerEntity> entities);

    Customer toDomain(CustomerEntity entity);

    @InheritInverseConfiguration(name = "toDomain")
    CustomerEntity toEntity(Customer domain);

    void updateEntityFromDomain(Customer domain, @MappingTarget CustomerEntity entity);

    void updateDomainFromEntity(CustomerEntity entity, @MappingTarget Customer domain);
}
```
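If you're curious what MapStruct saves you from writing, the following hand-written approximation shows the shape of the generated methods. It's a sketch, not MapStruct's literal output: both classes are trimmed to three fields and use plain fields instead of Lombok accessors. The main method mirrors the save flow in the service below, where the ID assigned on persist is copied back onto the caller's domain object.

```java
import java.util.ArrayList;
import java.util.List;

// Hand-written approximation of the code MapStruct generates for CustomerMapper.
// Assumptions: three fields only, plain fields instead of Lombok getters/setters.
public class CustomerMapperSketch {

    static class CustomerEntity {
        Integer customerId;
        String firstName;
        String lastName;
    }

    static class Customer {
        Integer customerId;
        String firstName;
        String lastName;
    }

    static Customer toDomain(CustomerEntity entity) {
        if (entity == null) {
            return null; // null in, null out
        }
        Customer domain = new Customer();
        updateDomainFromEntity(entity, domain);
        return domain;
    }

    static CustomerEntity toEntity(Customer domain) {
        if (domain == null) {
            return null;
        }
        CustomerEntity entity = new CustomerEntity();
        updateEntityFromDomain(domain, entity);
        return entity;
    }

    static List<Customer> toDomainList(List<CustomerEntity> entities) {
        if (entities == null) {
            return null;
        }
        List<Customer> result = new ArrayList<>(entities.size());
        for (CustomerEntity entity : entities) {
            result.add(toDomain(entity));
        }
        return result;
    }

    // @MappingTarget methods copy onto an existing instance instead of creating one.
    static void updateEntityFromDomain(Customer domain, CustomerEntity entity) {
        if (domain == null) {
            return;
        }
        entity.customerId = domain.customerId;
        entity.firstName = domain.firstName;
        entity.lastName = domain.lastName;
    }

    static void updateDomainFromEntity(CustomerEntity entity, Customer domain) {
        if (entity == null) {
            return;
        }
        domain.customerId = entity.customerId;
        domain.firstName = entity.firstName;
        domain.lastName = entity.lastName;
    }

    public static void main(String[] args) {
        Customer customer = new Customer();
        customer.firstName = "Ada";
        customer.lastName = "Lovelace";
        CustomerEntity entity = toEntity(customer);
        entity.customerId = 1_000_000; // stands in for the IDENTITY value assigned on persist
        updateDomainFromEntity(entity, customer);
        System.out.println(customer.customerId); // 1000000
    }
}
```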

Now that you have the domain class and the needed MapStruct mapper to convert entity-to-domain and vice versa, you can add the Service class for the basic CRUD (Create, Read, Update, Delete) functionality.

```java
package com.redhat.customer;

import com.redhat.exception.ServiceException;
import lombok.AllArgsConstructor;
import lombok.NonNull;
import lombok.extern.slf4j.Slf4j;

import javax.enterprise.context.ApplicationScoped;
import javax.transaction.Transactional;
import javax.validation.Valid;
import java.util.List;
import java.util.Objects;
import java.util.Optional;

@ApplicationScoped
@AllArgsConstructor
@Slf4j
public class CustomerService {

    private final CustomerRepository customerRepository;

    private final CustomerMapper customerMapper;

    public List<Customer> findAll() {
        return this.customerMapper.toDomainList(customerRepository.findAll().list());
    }

    public Optional<Customer> findById(@NonNull Integer customerId) {
        return customerRepository.findByIdOptional(customerId)
                .map(customerMapper::toDomain);
    }

    @Transactional
    public void save(@Valid Customer customer) {
        log.debug("Saving Customer: {}", customer);
        CustomerEntity entity = customerMapper.toEntity(customer);
        customerRepository.persist(entity);
        customerMapper.updateDomainFromEntity(entity, customer);
    }

    @Transactional
    public void update(@Valid Customer customer) {
        log.debug("Updating Customer: {}", customer);
        if (Objects.isNull(customer.getCustomerId())) {
            throw new ServiceException("Customer does not have a customerId");
        }
        CustomerEntity entity = customerRepository.findByIdOptional(customer.getCustomerId())
                .orElseThrow(() -> new ServiceException("No Customer found for customerId[%s]", customer.getCustomerId()));
        customerMapper.updateEntityFromDomain(customer, entity);
        customerRepository.persist(entity);
        customerMapper.updateDomainFromEntity(entity, customer);
    }
}
```

Take note of the Lombok annotations @AllArgsConstructor and @Slf4j. @AllArgsConstructor generates a constructor that takes every field as a parameter; because it is the class's only constructor, Quarkus uses it to inject the dependencies automatically. The @Slf4j annotation generates a static log field backed by the SLF4J library.
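For readers new to Lombok, this is roughly what the two annotations expand to. The sketch stubs CustomerRepository and CustomerMapper as empty classes so it compiles on its own, and it shows the @Slf4j field only as a comment because that line needs SLF4J on the classpath. Treat it as an approximation of delombok output, not a verbatim copy; the accessor methods exist only so the wiring can be checked.

```java
// Approximate plain-Java equivalent of CustomerService's Lombok annotations.
// Assumption: the two dependencies are empty stubs so the example is self-contained.
public class CustomerServiceDelomboked {

    static class CustomerRepository { }

    static class CustomerMapper { }

    static class CustomerService {
        // @Slf4j adds roughly:
        // private static final org.slf4j.Logger log =
        //         org.slf4j.LoggerFactory.getLogger(CustomerService.class);

        private final CustomerRepository customerRepository;
        private final CustomerMapper customerMapper;

        // @AllArgsConstructor: one parameter per field, in declaration order.
        // As the bean's only constructor, Quarkus injects through it without @Inject.
        CustomerService(CustomerRepository customerRepository, CustomerMapper customerMapper) {
            this.customerRepository = customerRepository;
            this.customerMapper = customerMapper;
        }

        CustomerRepository repository() {
            return customerRepository;
        }

        CustomerMapper mapper() {
            return customerMapper;
        }
    }
}
```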

Resource layer

You are building a REST API, so you need to add the OpenAPI spec to the project. The quarkus-smallrye-openapi extension brings in the MicroProfile OpenAPI annotations and processor; this helps with the generation of both the OpenAPI schema and the Swagger UI, which provides a great set of functionality for ad-hoc testing of the API endpoints.

```xml
<dependency>
    <groupId>io.quarkus</groupId>
    <artifactId>quarkus-smallrye-openapi</artifactId>
</dependency>
```

Now that the OpenAPI extension is available, you can implement the CustomerResource class.

```java
package com.redhat.customer;

import com.redhat.exception.ServiceException;
import lombok.AllArgsConstructor;
import lombok.extern.slf4j.Slf4j;
import org.eclipse.microprofile.openapi.annotations.enums.SchemaType;
import org.eclipse.microprofile.openapi.annotations.media.Content;
import org.eclipse.microprofile.openapi.annotations.media.Schema;
import org.eclipse.microprofile.openapi.annotations.parameters.Parameter;
import org.eclipse.microprofile.openapi.annotations.responses.APIResponse;
import org.eclipse.microprofile.openapi.annotations.tags.Tag;

import javax.validation.Valid;
import javax.validation.constraints.NotNull;
import javax.ws.rs.*;
import javax.ws.rs.core.Context;
import javax.ws.rs.core.MediaType;
import javax.ws.rs.core.Response;
import javax.ws.rs.core.UriInfo;
import java.net.URI;
import java.util.Objects;

@Path("/customers")
@Produces(MediaType.APPLICATION_JSON)
@Consumes(MediaType.APPLICATION_JSON)
@Tag(name = "customer", description = "Customer Operations")
@AllArgsConstructor
@Slf4j
public class CustomerResource {

    private final CustomerService customerService;

    @GET
    @APIResponse(
            responseCode = "200",
            description = "Get All Customers",
            content = @Content(
                    mediaType = MediaType.APPLICATION_JSON,
                    schema = @Schema(type = SchemaType.ARRAY, implementation = Customer.class)
            )
    )
    public Response get() {
        return Response.ok(customerService.findAll()).build();
    }

    @GET
    @Path("/{customerId}")
    @APIResponse(
            responseCode = "200",
            description = "Get Customer by customerId",
            content = @Content(
                    mediaType = MediaType.APPLICATION_JSON,
                    schema = @Schema(type = SchemaType.OBJECT, implementation = Customer.class)
            )
    )
    @APIResponse(
            responseCode = "404",
            description = "Customer does not exist for customerId",
            content = @Content(mediaType = MediaType.APPLICATION_JSON)
    )
    public Response getById(@Parameter(name = "customerId", required = true) @PathParam("customerId") Integer customerId) {
        return customerService.findById(customerId)
                .map(customer -> Response.ok(customer).build())
                .orElse(Response.status(Response.Status.NOT_FOUND).build());
    }

    @POST
    @APIResponse(
            responseCode = "201",
            description = "Customer Created",
            content = @Content(
                    mediaType = MediaType.APPLICATION_JSON,
                    schema = @Schema(type = SchemaType.OBJECT, implementation = Customer.class)
            )
    )
    @APIResponse(
            responseCode = "400",
            description = "Invalid Customer",
            content = @Content(mediaType = MediaType.APPLICATION_JSON)
    )
    @APIResponse(
            responseCode = "400",
            description = "Customer already exists for customerId",
            content = @Content(mediaType = MediaType.APPLICATION_JSON)
    )
    public Response post(@NotNull @Valid Customer customer, @Context UriInfo uriInfo) {
        customerService.save(customer);
        URI uri = uriInfo.getAbsolutePathBuilder().path(Integer.toString(customer.getCustomerId())).build();
        return Response.created(uri).entity(customer).build();
    }

    @PUT
    @Path("/{customerId}")
    @APIResponse(
            responseCode = "204",
            description = "Customer updated",
            content = @Content(
                    mediaType = MediaType.APPLICATION_JSON,
                    schema = @Schema(type = SchemaType.OBJECT, implementation = Customer.class)
            )
    )
    @APIResponse(
            responseCode = "400",
            description = "Invalid Customer",
            content = @Content(mediaType = MediaType.APPLICATION_JSON)
    )
    @APIResponse(
            responseCode = "400",
            description = "Customer object does not have customerId",
            content = @Content(mediaType = MediaType.APPLICATION_JSON)
    )
    @APIResponse(
            responseCode = "400",
            description = "Path variable customerId does not match Customer.customerId",
            content = @Content(mediaType = MediaType.APPLICATION_JSON)
    )
    @APIResponse(
            responseCode = "404",
            description = "No Customer found for customerId provided",
            content = @Content(mediaType = MediaType.APPLICATION_JSON)
    )
    public Response put(@Parameter(name = "customerId", required = true) @PathParam("customerId") Integer customerId, @NotNull @Valid Customer customer) {
        if (!Objects.equals(customerId, customer.getCustomerId())) {
            throw new ServiceException("Path variable customerId does not match Customer.customerId");
        }
        customerService.update(customer);
        return Response.status(Response.Status.NO_CONTENT).build();
    }
}
```
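The two response decisions in getById and post can be tried out without a running server. The following framework-free sketch assumes an in-memory map in place of CustomerService and a plain int in place of the JAX-RS Response. It mirrors getById (a present Optional becomes 200, an empty one 404) and the Location header that post builds from the request path plus the generated customerId. The class, the map, and the base URI are hypothetical.

```java
import java.net.URI;
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Framework-free sketch of CustomerResource's response decisions.
// Hypothetical stand-ins: STORE for CustomerService, int for the Response status.
public class ResourceLogicSketch {

    static final Map<Integer, String> STORE = new HashMap<>();

    // Like CustomerService.findById: empty Optional when the ID is unknown.
    static Optional<String> findById(Integer customerId) {
        return Optional.ofNullable(STORE.get(customerId));
    }

    // Mirrors getById: present -> 200 OK, empty -> 404 Not Found.
    static int getByIdStatus(Integer customerId) {
        return findById(customerId).map(customer -> 200).orElse(404);
    }

    // Mirrors post: Location = absolute request path + "/" + generated customerId.
    static URI locationFor(URI requestUri, int customerId) {
        return URI.create(requestUri + "/" + customerId);
    }

    public static void main(String[] args) {
        STORE.put(1_000_000, "Ada Lovelace");
        System.out.println(getByIdStatus(1_000_000));   // 200
        System.out.println(getByIdStatus(987_654_321)); // 404
        System.out.println(locationFor(URI.create("http://localhost:8080/customers"), 1_000_000));
    }
}
```

The sketch concatenates instead of calling URI.resolve on purpose: resolving "1000000" against a base without a trailing slash replaces the last segment and yields http://localhost:8080/1000000. In the real resource, UriBuilder.path() inserts the separating slash for you.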

Configuration

This implementation uses profile-aware files rather than including all configuration in a single file. The single-file strategy requires the use of profile keys (%dev, %test, and so on). If there is a lot of configuration, it can make the file long and difficult to manage. Quarkus now allows for profile-specific configuration files that use the filename pattern application-{profile}.yml.

For example, the default configuration can live in the application.yml file, while the configuration specific to the dev profile goes in a file named application-dev.yml. The profile files follow the same rules for overriding and extending configuration, but splitting them out keeps each file shorter and easier to manage.
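The override rule can be pictured as a map merge. This is a simplified sketch that assumes flattened property keys and a plain last-wins merge; the real Quarkus configuration system orders sources by ordinal and handles more cases. The quarkus.flyway.locations entry in the default map is hypothetical, since this article's application.yml doesn't set it; it's there only to show a profile value winning.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Simplified sketch: profile-specific keys override defaults, other defaults are inherited.
public class ProfileLayering {

    static Map<String, String> effectiveConfig(Map<String, String> defaults, Map<String, String> profile) {
        Map<String, String> merged = new LinkedHashMap<>(defaults);
        merged.putAll(profile); // values from application-{profile}.yml win
        return merged;
    }

    public static void main(String[] args) {
        Map<String, String> defaults = new LinkedHashMap<>();
        defaults.put("quarkus.banner.enabled", "false");
        defaults.put("quarkus.flyway.locations", "db/migration"); // hypothetical default

        Map<String, String> dev = new LinkedHashMap<>();
        dev.put("quarkus.flyway.locations", "db/migration,db/testdata");
        dev.put("quarkus.hibernate-orm.log.sql", "true");

        System.out.println(effectiveConfig(defaults, dev));
    }
}
```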

Here are the four files for the configuration.

application.yml:

```yaml
quarkus:
  banner:
    enabled: false
  hibernate-orm:
    database:
      generation: none

mp:
  openapi:
    extensions:
      smallrye:
        info:
          title: Customer API
          version: 0.0.1
          description: API for retrieving customers
          contact:
            email: techsupport@redhat.com
            name: Customer API Support
            url: https://github.com/quarkus-ground-up/customer-api
          license:
            name: Apache 2.0
            url: http://www.apache.org/licenses/LICENSE-2.0.html
```

application-dev.yml:

```yaml
quarkus:
  log:
    level: INFO
    category:
      "com.redhat":
        level: DEBUG
  hibernate-orm:
    log:
      sql: true
  flyway:
    migrate-at-start: true
    locations: db/migration,db/testdata
```

application-test.yml:

```yaml
quarkus:
  log:
    level: INFO
    category:
      "com.redhat":
        level: DEBUG
  hibernate-orm:
    log:
      sql: true
  flyway:
    migrate-at-start: true
    locations: db/migration,db/testdata
```

application-prod.yml:

```yaml
quarkus:
  log:
    level: INFO
  flyway:
    migrate-at-start: true
    locations: db/migration
```

The most important variations are in prod, where the logging is configured differently, and the db/testdata folder is excluded from the Flyway migrations to make sure test data is not created.

Testing

For testing, you will use the JUnit 5, REST Assured, and AssertJ libraries. Start by adding them to pom.xml.

```xml
<dependency>
    <groupId>io.quarkus</groupId>
    <artifactId>quarkus-junit5</artifactId>
    <scope>test</scope>
</dependency>
<dependency>
    <groupId>io.rest-assured</groupId>
    <artifactId>rest-assured</artifactId>
    <scope>test</scope>
</dependency>
<dependency>
    <groupId>org.assertj</groupId>
    <artifactId>assertj-core</artifactId>
    <scope>test</scope>
</dependency>
```

The CustomerResourceTest class focuses on testing the entire flow for the application.

```java
package com.redhat.customer;

import io.quarkus.test.common.http.TestHTTPEndpoint;
import io.quarkus.test.junit.QuarkusTest;
import io.restassured.http.ContentType;
import org.apache.commons.lang3.RandomStringUtils;
import org.junit.jupiter.api.Test;

import static io.restassured.RestAssured.given;
import static org.assertj.core.api.Assertions.assertThat;

@QuarkusTest
@TestHTTPEndpoint(CustomerResource.class)
public class CustomerResourceTest {

    @Test
    public void getAll() {
        given()
                .when()
                .get()
                .then()
                .statusCode(200);
    }

    @Test
    public void getById() {
        Customer customer = createCustomer();
        Customer saved = given()
                .contentType(ContentType.JSON)
                .body(customer)
                .post()
                .then()
                .statusCode(201)
                .extract().as(Customer.class);
        Customer got = given()
                .when()
                .get("/{customerId}", saved.getCustomerId())
                .then()
                .statusCode(200)
                .extract().as(Customer.class);
        assertThat(saved).isEqualTo(got);
    }

    @Test
    public void getByIdNotFound() {
        given()
                .when()
                .get("/{customerId}", 987654321)
                .then()
                .statusCode(404);
    }

    @Test
    public void post() {
        Customer customer = createCustomer();
        Customer saved = given()
                .contentType(ContentType.JSON)
                .body(customer)
                .post()
                .then()
                .statusCode(201)
                .extract().as(Customer.class);
        assertThat(saved.getCustomerId()).isNotNull();
    }

    @Test
    public void postFailNoFirstName() {
        Customer customer = createCustomer();
        customer.setFirstName(null);
        given()
                .contentType(ContentType.JSON)
                .body(customer)
                .post()
                .then()
                .statusCode(400);
    }

    @Test
    public void put() {
        Customer customer = createCustomer();
        Customer saved = given()
                .contentType(ContentType.JSON)
                .body(customer)
                .post()
                .then()
                .statusCode(201)
                .extract().as(Customer.class);
        saved.setFirstName("Updated");
        given()
                .contentType(ContentType.JSON)
                .body(saved)
                .put("/{customerId}", saved.getCustomerId())
                .then()
                .statusCode(204);
    }

    @Test
    public void putFailNoLastName() {
        Customer customer = createCustomer();
        Customer saved = given()
                .contentType(ContentType.JSON)
                .body(customer)
                .post()
                .then()
                .statusCode(201)
                .extract().as(Customer.class);
        saved.setLastName(null);
        given()
                .contentType(ContentType.JSON)
                .body(saved)
                .put("/{customerId}", saved.getCustomerId())
                .then()
                .statusCode(400);
    }

    private Customer createCustomer() {
        Customer customer = new Customer();
        customer.setFirstName(RandomStringUtils.randomAlphabetic(10));
        customer.setMiddleName(RandomStringUtils.randomAlphabetic(10));
        customer.setLastName(RandomStringUtils.randomAlphabetic(10));
        customer.setEmail(RandomStringUtils.randomAlphabetic(10) + "@rhenergy.dev");
        customer.setPhone(RandomStringUtils.randomNumeric(10));
        return customer;
    }
}
```

One change since the previous article is the use of the @TestHTTPEndpoint(CustomerResource.class) annotation, which reduces the hard-coding of specific endpoint URLs in the tests. Instead of passing the path in each HTTP call (e.g., .get("/customers")), you can simply call .get(), and the annotation tells the call which resource's path to use.

Quarkus Dev Services

Now that all the code is in place, you're ready to fire it up. From the command line, start the application in Quarkus Dev Mode.

```shell
./mvnw clean quarkus:dev
```

The first thing that should jump out at you is that you haven't explicitly set anything up regarding the database. But if you look in the logs, you can see that a database has been set up for you.

```text
[io.qua.dat.dep.dev.DevServicesDatasourceProcessor] (build-40) Dev Services for the default datasource (postgresql) started.
```

Quarkus Dev Services did that behind the scenes. Database Dev Services are enabled automatically when a reactive or JDBC datasource extension is present in the application, as long as the database URL has not been configured. Because the database runs in a container, you need a working Docker environment for this to work. The Dev Services ecosystem supports not only databases but a slew of integration products, including AMQP, Apache Kafka, MongoDB, Infinispan, and more, with new ones being added frequently.

Continuous testing

In addition to Dev Services, Quarkus also has a new continuous testing capability. When you start the application in Dev Mode, you can also run your tests in the background, alongside the running application. Figure 3 shows the command-line options.

Press the r key in the console. The tests should execute in the background, giving you real-time test feedback while running the application in Dev Mode. While the testing process is running, you can actively make code changes, and after every save, the process will detect the changes and the tests will rerun automatically. Talk about real-time feedback! Continuous testing makes it possible to quickly detect breaking tests, and the running server provides the ability to perform ad-hoc tests in parallel.

Observability

Quarkus has a number of easy-to-implement features that give you visibility into your application as it's running.

Health

One of the greatest things about Quarkus is the ability to get very powerful base functionality out of the box simply by adding an extension. A great example is the quarkus-smallrye-health extension. Adding this extension to the demo app will illustrate what it can do.

For bonus points, add the extension to the pom.xml file while the application is running in quarkus:dev mode. Not only will it import the JAR, but it will detect the change and automatically restart the server. To restart the test process, hit the r key again and you are back to where you started.

```xml
<dependency>
    <groupId>io.quarkus</groupId>
    <artifactId>quarkus-smallrye-health</artifactId>
</dependency>
```

Importing the smallrye-health extension directly exposes these REST endpoints:

- /q/health/live: The application is up and running.
- /q/health/ready: The application is ready to serve requests.
- /q/health/started: The application is started.
- /q/health: Accumulates all health check procedures in the application.

When you check the /q/health endpoint, you will also see something you may not have expected: the transitive addition of the quarkus-agroal extension automatically registers a readiness health check that will validate the datasources.

```json
{
    "status": "UP",
    "checks": [
        {
            "name": "Database connections health check",
            "status": "UP"
        }
    ]
}
```

From here, you are now free to build and configure your own custom health checks using the framework.
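The aggregated /q/health response follows a simple rule: the overall status is UP only if every registered check is UP (MicroProfile Health also answers with HTTP 503 when the overall status is DOWN). The framework-free sketch below models that rule. Check, Status, and the second check's name are hypothetical stand-ins, not the MicroProfile Health API.

```java
import java.util.List;

// Framework-free sketch of how individual checks roll up into the overall status.
public class HealthAggregation {

    enum Status { UP, DOWN }

    static final class Check {
        final String name;
        final Status status;

        Check(String name, Status status) {
            this.name = name;
            this.status = status;
        }
    }

    static Status overall(List<Check> checks) {
        // allMatch on an empty list is true, so no registered checks means UP
        return checks.stream().allMatch(c -> c.status == Status.UP) ? Status.UP : Status.DOWN;
    }

    public static void main(String[] args) {
        List<Check> checks = List.of(
                new Check("Database connections health check", Status.UP),
                new Check("Hypothetical downstream API check", Status.DOWN));
        System.out.println(overall(checks)); // DOWN
    }
}
```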

Metrics

Metrics are a must-have for any application. It's better to include them early to ensure they're available when you really need them. Luckily, there is a Quarkus extension that makes this easy.

```xml
<dependency>
    <groupId>io.quarkus</groupId>
    <artifactId>quarkus-micrometer-registry-prometheus</artifactId>
</dependency>
```

Dropping in this extension gives you a Prometheus-compatible scraping endpoint with process, JVM, and HTTP statistics out of the box. Go to the /q/metrics endpoint to check it out. If you want to see the basic HTTP metrics, open the Swagger UI at /q/swagger-ui and hit a couple of the endpoints to see the resulting metrics.

```text
...
http_server_requests_seconds_count{method="GET",outcome="SUCCESS",status="200",uri="/customers",} 4.0
http_server_requests_seconds_sum{method="GET",outcome="SUCCESS",status="200",uri="/customers",} 0.196336987
http_server_requests_seconds_max{method="GET",outcome="SUCCESS",status="200",uri="/customers",} 0.173861414
...
```
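The _count and _sum series together give you the mean request duration: divide the sum by the count. This worked example plugs in the two values from the sample output above; the class and method names are illustrative.

```java
import java.util.Locale;

// Worked example: mean latency = _sum / _count, using the sample values above.
public class MeanLatency {

    static double meanSeconds(double sum, double count) {
        if (count == 0) {
            throw new IllegalArgumentException("no requests recorded");
        }
        return sum / count;
    }

    public static void main(String[] args) {
        double mean = meanSeconds(0.196336987, 4.0);
        System.out.println(String.format(Locale.ROOT, "%.1f ms", mean * 1000)); // 49.1 ms
    }
}
```

In Prometheus itself you would usually compute the same ratio over a window, for example rate(http_server_requests_seconds_sum[5m]) / rate(http_server_requests_seconds_count[5m]), so that the mean reflects recent traffic rather than everything since startup.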

Enabling histograms

The standard metrics are pretty powerful, but a quick configuration change can supercharge them. Most API service-level objectives are expressed in "nines," such as the 99th or 99.9th percentile of request latency. To publish percentiles for HTTP server requests, add the following MeterFilter producer to the project.

```java
package com.redhat.configuration;

import io.micrometer.core.instrument.Meter;
import io.micrometer.core.instrument.config.MeterFilter;
import io.micrometer.core.instrument.distribution.DistributionStatisticConfig;

import javax.enterprise.context.ApplicationScoped;
import javax.enterprise.inject.Produces;

public class MicrometerConfiguration {

    @Produces
    @ApplicationScoped
    public MeterFilter enableHistogram() {
        return new MeterFilter() {
            @Override
            public DistributionStatisticConfig configure(Meter.Id id, DistributionStatisticConfig config) {
                if (id.getName().startsWith("http.server.requests")) {
                    return DistributionStatisticConfig.builder()
                            .percentiles(0.5, 0.95, 0.99, 0.999)
                            .build()
                            .merge(config);
                }
                return config;
            }
        };
    }
}
```

After the histogram is available, the metrics become much richer and are easy to turn into alerts.

```text
http_server_requests_seconds{method="GET",outcome="SUCCESS",status="200",uri="/customers",quantile="0.5",} 0.00458752
http_server_requests_seconds{method="GET",outcome="SUCCESS",status="200",uri="/customers",quantile="0.95",} 0.011927552
http_server_requests_seconds{method="GET",outcome="SUCCESS",status="200",uri="/customers",quantile="0.99",} 0.04390912
http_server_requests_seconds{method="GET",outcome="SUCCESS",status="200",uri="/customers",quantile="0.999",} 0.04390912
http_server_requests_seconds_count{method="GET",outcome="SUCCESS",status="200",uri="/customers",} 51.0
http_server_requests_seconds_sum{method="GET",outcome="SUCCESS",status="200",uri="/customers",} 0.308183441
```
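To build intuition for what a quantile line means, the sketch below computes nearest-rank percentiles over a fixed sample. It's an illustration only: Micrometer computes its percentiles client-side over a rolling time window with some approximation, so its numbers won't match this exact calculation. It does show one effect visible in the output: with only 51 requests, any percentile above 50/51 (about 98%) lands on the largest sample, so p99 and p99.9 can come out identical.

```java
import java.util.Arrays;

// Nearest-rank percentile over a fixed sample (illustrative, not Micrometer's algorithm).
public class NearestRankPercentile {

    /** Smallest sample such that at least fraction p of all samples are <= it. */
    static double percentile(double[] samples, double p) {
        if (samples.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        if (p <= 0 || p > 1) {
            throw new IllegalArgumentException("p must be in (0, 1]");
        }
        double[] sorted = samples.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p * sorted.length); // 1-based rank
        return sorted[rank - 1];
    }

    public static void main(String[] args) {
        double[] latenciesMs = new double[51]; // 51 hypothetical requests: 1 ms .. 51 ms
        for (int i = 0; i < latenciesMs.length; i++) {
            latenciesMs[i] = i + 1;
        }
        for (double p : new double[] {0.5, 0.95, 0.99, 0.999}) {
            System.out.println(p + " -> " + percentile(latenciesMs, p));
        }
    }
}
```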

If you want to ignore HTTP metrics related to anything in the dev tools /q endpoints, you can add the following to the configuration file.

```yaml
quarkus:
  micrometer:
    binder:
      http-server:
        ignore-patterns:
          - '/q.*'
```
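Because the ignore pattern is a regular expression, it's worth checking what it actually matches. This sketch assumes the pattern must match the entire request path (Matcher.matches()). Under that assumption, '/q.*' also catches a hypothetical /quotes resource, so a tighter '/q/.*' may be safer if your API has paths starting with /q.

```java
import java.util.List;
import java.util.regex.Pattern;

// Sketch: which request paths the '/q.*' ignore pattern would exclude,
// assuming a full-path match.
public class IgnorePatternCheck {

    static final Pattern IGNORE = Pattern.compile("/q.*");

    static boolean ignored(String path) {
        return IGNORE.matcher(path).matches();
    }

    public static void main(String[] args) {
        for (String path : List.of("/q/metrics", "/q/health/ready", "/customers", "/quotes")) {
            System.out.println(path + " ignored=" + ignored(path));
        }
    }
}
```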

Summary

The amount of change that has come to the Quarkus ecosystem in the past year is really amazing. While the framework still aims to be lightweight and fast, its tooling and overall usability seem to be on an exponential curve of increasing developer productivity. If you are looking for a Java framework as part of an effort around application modernization, or are beginning your journey into Kubernetes-native application development, then Quarkus should definitely be on your shortlist.

Once you're ready to dive in, you can download the complete source code for this article.

Where to learn more

Find additional resources:

- Explore Quarkus quick starts in the Developer Sandbox for Red Hat OpenShift, which offers a free and ready-made environment for experimenting with containerized applications.

- Try free 15-minute interactive learning scenarios.

- Download the e-book Quarkus for Spring Developers, which provides a detailed look at Quarkus fundamentals and offers side-by-side examples of familiar Spring concepts, constructs, and conventions.