Welcome back to our series about building a smart, cloud-native financial advisor powered by AI. In How to build AI-ready applications with Quarkus, we explored how to infuse the application with AI-powered chat and retrieval-augmented generation. In this installment, we'll evolve the WealthWise application into a serverless architecture using Red Hat OpenShift Serverless, enabling more responsive scaling, lower operational costs, and flexible deployment options across hybrid and multi-cloud environments.

Why move to serverless?

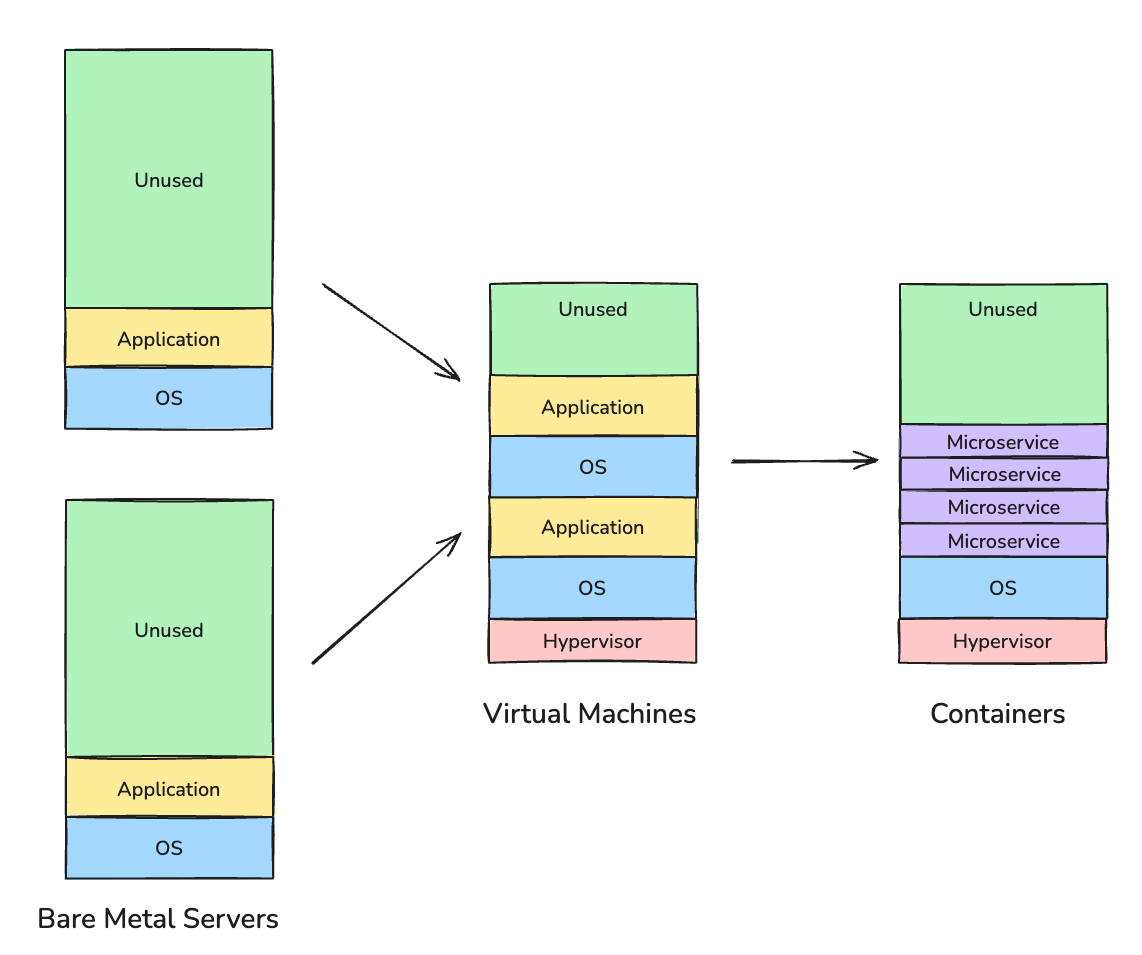

Over time, software deployment models have evolved to make better use of available computing resources. Moving applications from bare-metal servers to virtual machines allowed multiple monolithic systems to run on a single piece of hardware. Container technology then made it possible to decompose applications into smaller microservices, which could again be deployed to make the best use of available computing resources, as shown in Figure 1.

The next logical step is to split a microservice into individual functions so you can scale the use of compute resources at the individual function level.

These efficiency gains matter for software running on your own infrastructure, but they become vital in the modern cloud-native world, where infrastructure is pay-as-you-go and reducing resource consumption translates directly into lower costs.

This has led to the popularity of serverless computing, where functional units of computing can be deployed and scaled without worrying about the actual server infrastructure hosting them.

Unlocking resource efficiency with OpenShift Serverless

For an application like WealthWise, using resources efficiently is a challenge. Usage tends to be "bursty", peaking at specific times such as paydays or tax return time. To handle those peaks, WealthWise ends up paying for a lot of computing power that sits underutilized during periods of low demand. WealthWise also needs to be able to move the application easily between cloud providers or on-premises environments as business needs change.

OpenShift Serverless extends the benefits of serverless computing by allowing for the seamless deployment of serverless functions to anywhere that OpenShift can run: in a data center, in public cloud, or at the edge. Current public cloud serverless implementations are platform-specific, meaning that porting a serverless function to another cloud usually involves additional coding and configuration work. OpenShift Serverless uses standard Kubernetes constructs such as CustomResourceDefinitions and container images, allowing the use of all of the mature Kubernetes tooling provided by OpenShift to manage serverless functions.

Understanding OpenShift Serverless

Red Hat OpenShift Serverless builds on the Knative project to bring Kubernetes-native serverless capabilities to any OpenShift cluster—on-premises, in public clouds, or at the edge. Here's how it works:

- Knative Serving provides autoscaling, route management, and container lifecycle for HTTP-based workloads.

- Knative Eventing enables event-driven communication between components using CloudEvents.

- Developers write and deploy functions or microservices using standard containers—no need to learn a new platform-specific model.

Benefits for WealthWise include:

- Auto-scaling: The financial services only run when needed; this is ideal for bursty traffic (e.g., end-of-financial-year or salary day).

- Event-driven execution: Process market alerts or user requests as they arrive, reducing idle resource costs.

- Simplified deployment: Use GitOps, command-line interface (CLI), or Kubernetes manifests to deploy functions without overhauling DevOps practices.

What you'll need

Before we jump into evolving WealthWise into a fully serverless architecture, let's make sure you're set up with everything you need to follow along. There are 5 prerequisites:

- kn CLI: The Knative CLI simplifies creating and managing serverless services on Kubernetes and OpenShift. Download it from the Knative website.

- Access to OpenShift: You can either register for the Red Hat Developer Sandbox or install Red Hat OpenShift Local.

- Container engine (Podman or Docker): Required for building and pushing container images locally.

- Quay.io account: You'll need a container registry to push your application image; sign up at quay.io.

- OpenAI API key: Head to the OpenAI site and generate your personal API key, if you didn't already do so in part 1 of this series.

With the prerequisites in place, complete the following steps to get the application ready:

Clone the WealthWise project:

```bash
git clone https://github.com/derekwaters/wealth-wise.git
cd wealth-wise
```

Build and push the container images. To prepare each WealthWise service for deployment, we'll use the kn func build command. This builds the container image for your function using Source-to-Image (S2I), a native OpenShift build strategy.

```bash
kn func build --path advisor-history --builder s2i --image quay.io/<your-quay-account>/ww-advisor-history:latest --push
kn func build --path financial-advisor --builder s2i --image quay.io/<your-quay-account>/ww-financial-advisor:latest --push
kn func build --path investment-advisor --builder s2i --image quay.io/<your-quay-account>/ww-investment-advisor:latest --push
kn func build --path ww-frontend --builder s2i --image quay.io/<your-quay-account>/ww-frontend:latest --push
kn func build --path add-history --builder s2i --image quay.io/<your-quay-account>/ww-add-history:latest --push
```

Knative and Quarkus take care of everything, from packaging the application to generating an OpenShift Serverless-compatible container image and pushing it to Quay, so no Dockerfile is needed. It's all handled automatically using the options shown above.

Create the OpenShift Project and secrets. Our WealthWise application needs a few environment variables to run: the OpenAI API key and the PostgreSQL database credentials, as well as some application-specific Java configuration.

It's good practice to store sensitive values like these as Kubernetes secrets so they're securely injected at runtime and not hardcoded into your image or config:

```bash
# Create a dedicated project for your serverless workload
oc new-project wealthwise

# Create the OpenAI API key secret
oc create secret generic openai-token \
  --from-literal=OPENAI_API_KEY=<your-openai-key> -n wealthwise

# Create the PostgreSQL credentials secret
oc create secret generic db-credentials \
  --from-literal=QUARKUS_DATASOURCE_JDBC_URL=jdbc:postgresql://wealthwise.wealthwise.svc.cluster.local:5432/wealthwise \
  --from-literal=QUARKUS_DATASOURCE_USERNAME=devuser \
  --from-literal=QUARKUS_DATASOURCE_PASSWORD=devpass \
  -n wealthwise

# Create the app config secret for the financial advisor service
oc create secret generic financial-advisor-app-properties \
  --from-file=financial-advisor/src/main/resources/application.properties -n wealthwise

# Create the app config secret for the investment advisor service
oc create secret generic investment-advisor-app-properties \
  --from-file=investment-advisor/src/main/resources/application.properties -n wealthwise
```

Deploy the PostgreSQL database. In our previous tutorial, Quarkus Dev Services automatically launched a local PostgreSQL container for you. This time, because we're deploying on OpenShift, we need to launch the database ourselves.

OpenShift provides a ready-made PostgreSQL template that you can deploy through the Developer Console or CLI. When configuring it, make sure the database credentials match those stored in your db-credentials secret, that the Database Service Name is ‘wealthwise’, and that the PostgreSQL Database Name is ‘wealthwise’. Also ensure that the PostgreSQL version is 12 or higher, as this is required by the default JDBC binding library used in the WealthWise Quarkus applications.

Deploy Kafka components. Later in the serverless deployment of WealthWise, we'll use Knative Eventing to pass event data between functions. This requires an Apache Kafka broker to transmit messages. To install Kafka on OpenShift:

- Log in to your OpenShift web console.

- In the Administrator view, expand the Operators menu and select the OperatorHub option.

- Search for the streams for Apache Kafka operator and select it.

- Click Install and accept all the default options.

Add a new project for your Kafka cluster by running the following command:

```bash
oc new-project kafka
```

- Once the Operator has finished installing, change the OpenShift project in the console to the kafka project.

- Select the Installed Operators menu option.

- Select the streams for Apache Kafka operator.

- Click the Create Instance link under the Kafka API.

- Change the name of the cluster to kafka-cluster and keep the remaining defaults.

- Click Create.

- Wait until the status of the Kafka cluster is Ready.

- Switch to the ‘wealthwise’ project.

- Click the Details tab of the streams for Apache Kafka operator.

- Click the Create Instance link under the Kafka Topic API.

- Change the name of the topic to wealthwise-history and leave all other values as default.

- Click Create to create the Kafka topic.
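Several settings in this tutorial point at in-cluster addresses. Examples include the JDBC URL in the db-credentials secret and the Kafka bootstrap server used later. Both follow the standard Kubernetes Service DNS pattern `<service>.<namespace>.svc.cluster.local`. Strimzi (the upstream project behind streams for Apache Kafka) names a cluster's bootstrap Service `<cluster-name>-kafka-bootstrap`. The minimal Java sketch below only assembles those strings, so you can see where each part comes from. It assumes the default cluster.local domain, and the helper names are hypothetical.

```java
// Sketch: assemble the in-cluster addresses used by WealthWise.
// Assumes the default "cluster.local" cluster domain; helper names are hypothetical.
final class ClusterAddresses {

    private static final String CLUSTER_DOMAIN = "svc.cluster.local";

    // Standard Kubernetes Service DNS name: <service>.<namespace>.svc.cluster.local:<port>
    static String serviceAddress(String service, String namespace, int port) {
        return service + "." + namespace + "." + CLUSTER_DOMAIN + ":" + port;
    }

    // Strimzi exposes a bootstrap Service named "<cluster-name>-kafka-bootstrap".
    static String kafkaBootstrap(String clusterName, String namespace, int port) {
        return serviceAddress(clusterName + "-kafka-bootstrap", namespace, port);
    }

    // JDBC URL for a PostgreSQL Service reachable from inside the cluster.
    static String postgresJdbcUrl(String service, String namespace, int port, String database) {
        return "jdbc:postgresql://" + serviceAddress(service, namespace, port) + "/" + database;
    }

    public static void main(String[] args) {
        System.out.println(kafkaBootstrap("kafka-cluster", "kafka", 9092));
        System.out.println(postgresJdbcUrl("wealthwise", "wealthwise", 5432, "wealthwise"));
    }
}
```

Running main prints the bootstrap address and JDBC URL in the same form as the values used in the db-credentials secret and the kn service create commands. If you rename the Kafka cluster, database service, or project, these are the parts that need to change.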

WealthWise's evolution to serverless

Now that we've made the decision to move WealthWise to an OpenShift Serverless model, how can we go about getting there?

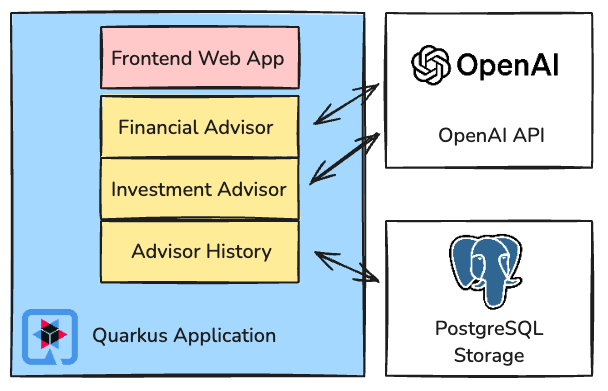

First, let's look at the existing application architecture, illustrated in Figure 2.

It consists of a traditional Java application built with Quarkus, running as a persistent, long-lived service that handles requests from a front-end web application.

To take advantage of the benefits of serverless architecture, we can take the following steps:

- Refactor the WealthWise application into Knative functions.

- Deploy OpenShift Serverless in the OpenShift environment.

- Deploy the WealthWise components as Knative Services.

- Scale the serverless functions dynamically on demand.

- Leverage Knative Eventing and Kafka integration.

Refactor the WealthWise application into Knative functions

Knative Serving (part of OpenShift Serverless) simplifies the creation of serverless functions, including scaling them up and down based on request demand and routing service traffic. Knative Serving is built from a set of Kubernetes custom resources (Service, Route, Configuration, and Revision), which you can see depicted in the Knative documentation.

The first step to a serverless world is for us to refactor the single WealthWise Quarkus application into a series of discrete functions. In this case, it makes sense to have a function for providing investment advice, one for the financial advice request, one for history, and one to serve up the front-end web components.

To demonstrate the use of events in OpenShift Serverless, we also have a function to add new history messages from events. To scaffold a new Knative function project, you can use the Knative command line:

```bash
kn func create --language quarkus --template http advisor-history
```

There are templates for a range of languages and event modes, which you can see by running:

```bash
kn func create --help
```

The refactoring has already been completed in the WealthWise Git repository: the code from the single Quarkus application we used in the previous article has been split into 5 individual functions.

Deploy OpenShift Serverless in the OpenShift environment

Before deploying our serverless functions, we need to enable the OpenShift Serverless components in OpenShift. To do this, follow these steps:

- Log in to your OpenShift web console.

- In the Administrator view, expand the Operators menu and select the OperatorHub option.

- Search for the Red Hat OpenShift Serverless operator and select it.

- Click the Install button and then accept all the default options.

- Once the Operator has finished installing, change the OpenShift project to the knative-serving project.

- Select the Installed Operators menu option.

- Select the OpenShift Serverless operator.

- Click the Create Instance link under the Knative Serving API.

- Accept all the defaults and click Create.

- Change the OpenShift project to the knative-eventing project.

- Select the Installed Operators menu option.

- Select the OpenShift Serverless operator.

- Click the Create Instance link under the Knative Eventing API.

- Accept all the defaults and click Create.

Your OpenShift cluster is now ready to deploy serverless functions.

Of course, the Operator and component installation could be configured with Kubernetes resources and deployed using GitOps rather than manually through the OpenShift console.

Deploy the WealthWise components as Knative Services

Now the Knative CLI can be used to deploy the WealthWise functions into the OpenShift Serverless environment. First, you should ensure you are logged in to your OpenShift environment with the oc CLI tool, and that the wealthwise project is active. Then you can use the following commands to deploy the 5 serverless functions.

Deploy the advisor-history service:

```bash
kn service create advisor-history \
  --image quay.io/<your-quay-account>/ww-advisor-history:latest \
  --env QUARKUS_HTTP_CORS='true' \
  --env QUARKUS_HTTP_CORS_ORIGINS='*' \
  --env QUARKUS_HIBERNATE_ORM_DATABASE_GENERATION_CREATE_SCHEMAS='true' \
  --env QUARKUS_HIBERNATE_ORM_DATABASE_GENERATION='create' \
  --env-from secret:db-credentials
```

Deploy the financial-advisor service:

```bash
kn service create financial-advisor \
  --image quay.io/<your-quay-account>/ww-financial-advisor:latest \
  --env QUARKUS_HTTP_CORS='true' \
  --env QUARKUS_HTTP_CORS_ORIGINS='*' \
  --env KAFKA_BOOTSTRAP_SERVERS='kafka-cluster-kafka-bootstrap.kafka.svc.cluster.local:9092' \
  --env-from secret:openai-token \
  --mount /deployments/config=secret:financial-advisor-app-properties
```

Deploy the investment-advisor service:

```bash
kn service create investment-advisor \
  --image quay.io/<your-quay-account>/ww-investment-advisor:latest \
  --env QUARKUS_HTTP_CORS='true' \
  --env QUARKUS_HTTP_CORS_ORIGINS='*' \
  --env KAFKA_BOOTSTRAP_SERVERS='kafka-cluster-kafka-bootstrap.kafka.svc.cluster.local:9092' \
  --env-from secret:openai-token \
  --mount /deployments/config=secret:investment-advisor-app-properties
```

Deploy the frontend service:

```bash
kn service create frontend \
  --image quay.io/<your-quay-account>/ww-frontend:latest
```

Deploy the add-history service:

```bash
kn service create add-history \
  --image quay.io/<your-quay-account>/ww-add-history:latest \
  --env QUARKUS_HIBERNATE_ORM_DATABASE_GENERATION_CREATE_SCHEMAS='true' \
  --env QUARKUS_HIBERNATE_ORM_DATABASE_GENERATION='create' \
  --env-from secret:db-credentials
```

Warning

For these examples, we use Quarkus development settings for CORS, which should not be used in a production environment. Refer to the Quarkus CORS documentation for more details.

In a GitOps config-as-code model, you can also specify these services as Kubernetes resources. For example, you can instantiate the financial-advisor service with the following definition:

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: financial-advisor
  namespace: wealthwise
spec:
  template:
    spec:
      containers:
        - env:
            # NOTE: This CORS configuration is not safe and should only be used in Dev
            - name: QUARKUS_HTTP_CORS
              value: 'true'
            - name: QUARKUS_HTTP_CORS_ORIGINS
              value: '*'
          envFrom:
            - secretRef:
                name: openai-token
          image: 'quay.io/<your-quay-account>/ww-financial-advisor:latest'
```

The service definition handles routing inbound traffic to the Kubernetes pods running our application, and includes the definition of the container image used for our serverless function.

The full set of resource definitions for the 5 services can be found in the Git repo under the k8s-resources/services folder.

Scaling the serverless functions dynamically on demand

Looking at the service definition above, note that no annotations are applied in the resource spec. By default, OpenShift Serverless scales these deployments down to zero running pods when they are idle and scales them up as load increases. Limits can be applied either through the Kubernetes resource definition or in the call to kn service create (using the --scale-min and --scale-max options).

For example, to set a minimum scale of 1 pod and a maximum of 3 pods, you can add the following annotations to the revision template:

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: financial-advisor
  namespace: wealthwise
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/minScale: "1"
        autoscaling.knative.dev/maxScale: "3"
    spec:
      containers:
        - env:
            # NOTE: This CORS configuration is not safe and should only be used in Dev
            - name: QUARKUS_HTTP_CORS
              value: 'true'
            - name: QUARKUS_HTTP_CORS_ORIGINS
              value: '*'
          envFrom:
            - secretRef:
                name: openai-token
          image: 'quay.io/<your-quay-account>/ww-financial-advisor:latest'
```

There are many other configurable options to manage the scaling of your workloads, as outlined in the documentation.

Because the pods for this service are scheduled like any other Kubernetes workload, they can use all the compute management features that OpenShift provides, such as resource requests and limits and node placement rules.

In the service resource definition, you do not have to define any computing infrastructure or configure any processes for scaling the application under load. You only need to specify the (optional) minimum and maximum allowable scale. The minimum scale can even be set to zero, so that no pods run until a request arrives. At that point, a pod is created automatically to handle the request, at the cost of a short cold-start delay while it starts. This further reduces compute requirements to an "as needed" basis.
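The way those bounds interact with load can be illustrated with a simplified model of Knative's default Pod Autoscaler (KPA). KPA scales on concurrency. It divides the observed number of in-flight requests by a per-pod target and rounds up. It then clamps the result between the min and max scale values. The sketch below assumes the upstream defaults of a soft target of 100 concurrent requests per pod at 70% target utilization. Your cluster's autoscaler configuration may differ. The model also deliberately ignores the stable and panic windows, the scale-to-zero grace period, and scale-rate limits, so treat it as an illustration rather than the real algorithm.

```java
// Simplified sketch of Knative's concurrency-based scaling decision (KPA).
// Assumptions: soft concurrency target of 100 per pod and 70% utilization
// (upstream defaults). Stable/panic windows, the scale-to-zero grace period,
// and scale-rate limits are deliberately ignored.
final class KnativeScaleSketch {

    /**
     * @param observedConcurrency average in-flight requests across the service
     * @param targetPerPod        soft concurrency target per pod
     * @param utilization         fraction of the target to aim for (0.0 - 1.0)
     * @param minScale            autoscaling.knative.dev/minScale (0 allows scale to zero)
     * @param maxScale            autoscaling.knative.dev/maxScale (0 means no upper bound)
     * @return number of pods the autoscaler would aim for
     */
    static int desiredPods(double observedConcurrency, double targetPerPod,
                           double utilization, int minScale, int maxScale) {
        double effectiveTarget = targetPerPod * utilization;
        int desired = (int) Math.ceil(observedConcurrency / effectiveTarget);
        desired = Math.max(desired, minScale);   // never go below minScale
        if (maxScale > 0) {
            desired = Math.min(desired, maxScale); // cap at maxScale when set
        }
        return desired;
    }

    public static void main(String[] args) {
        // No traffic and no minScale: the service scales to zero.
        System.out.println(desiredPods(0, 100, 0.7, 0, 0));
        // 150 concurrent requests, minScale 1 / maxScale 3: ceil(150 / 70) = 3.
        System.out.println(desiredPods(150, 100, 0.7, 1, 3));
        // A burst of 500 requests would need 8 pods but is capped at maxScale 3.
        System.out.println(desiredPods(500, 100, 0.7, 1, 3));
    }
}
```

Running main prints 0, 3 and 3. The last case shows the trade-off behind maxScale: it bounds cost during a burst, but requests beyond the capped capacity queue instead of getting new pods.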

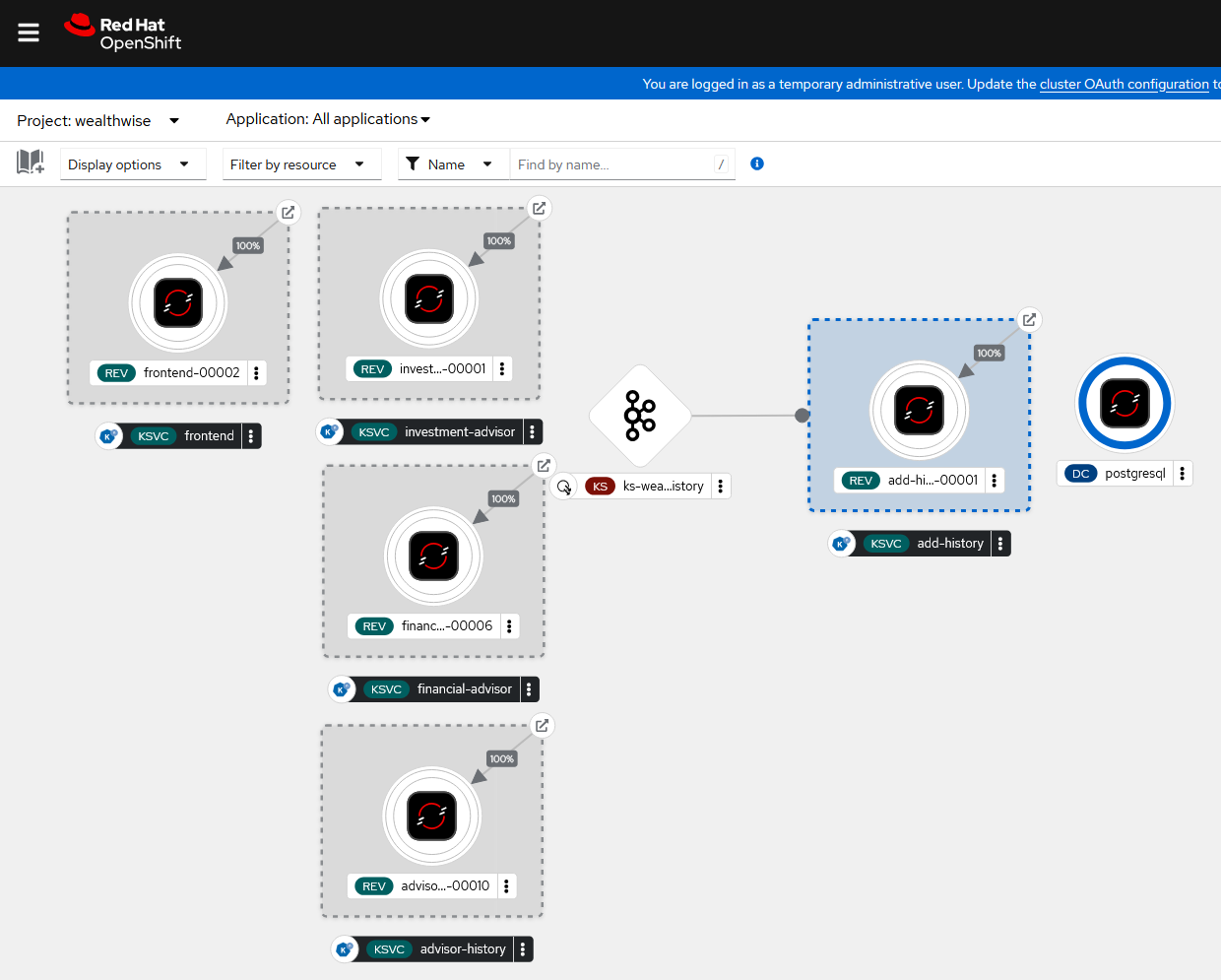

If you look at the OpenShift topology view in the Developer perspective, you will see the 5 serverless functions, all with deployments scaled down to zero (white border). Load the front-end application (you can use the arrow at the top right of the frontend Knative Service to open it in a new browser tab), and you will see the frontend deployment scale up a pod (blue border) to handle the request. If you then use the application to ask for financial advice, you'll see the financial-advisor deployment scale up as well. Leave the system idle for a while, and the deployments scale back to zero running pods.

You can also find the URL of the front end from the command line by running:

```bash
kn service describe frontend
```

Leveraging Knative Eventing and Kafka integration

OpenShift Serverless also incorporates an eventing layer to integrate real-time event feeds as inputs and outputs of serverless functions.

This functionality uses Knative Eventing support for Apache Kafka. To enable our application to use integrated eventing, we need to install the Knative Kafka Eventing components and link our Kafka topic to the add-history serverless function. Follow these steps:

- Select the knative-eventing project.

- Select the Installed Operators menu option.

- Select the OpenShift Serverless operator.

- Click the Create Instance link under the Knative Kafka API.

- Leave the name as knative-kafka.

- Expand the channel section and turn on the Enabled option.

- In the bootstrapServers field, enter the name of the Kafka bootstrap server(s). If you followed the default steps above, this should be: kafka-cluster-kafka-bootstrap.kafka.svc.cluster.local:9092

- Expand the source section and turn on the Enabled option.

- Expand the broker section and turn on the Enabled option.

- Expand the defaultConfig section under broker. In the bootstrapServers field, enter the name of the Kafka bootstrap server(s). If you followed the default steps above, this should be: kafka-cluster-kafka-bootstrap.kafka.svc.cluster.local:9092

- Expand the sink section and turn on the Enabled option.

- Click Create.

Again, you can perform all of these configurations by deploying Kubernetes resources directly to your OpenShift environment. These resource definitions can be seen in the Git repo in the k8s-resources/kafka folder.

In this application, the financial-advisor and investment-advisor serverless functions generate CloudEvents that are sent to a defined Kafka topic called wealthwise-history. The Knative Eventing KafkaSource is then used to automatically forward events from the Kafka topic to the event sink, which in this case is the add-history serverless function.

To create this KafkaSource, run the following command:

```bash
oc apply -f k8s-resources/kafka/kafka-source.yaml
```

This resource file contains a KafkaSource definition. The kn CLI can list additional event sources but can’t create them directly, hence the use of a Kubernetes resource file.

This KafkaSource links our Kafka topic (wealthwise-history) with the "sink" of events, the add-history Knative service.
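To make the event flow more concrete, here is a minimal sketch of what a history event could look like in CloudEvents structured mode. In that mode the whole event, attributes plus data, travels as a single JSON document. The attributes specversion, id, source, and type are required by the CloudEvents 1.0 specification. The source and type values used here are hypothetical placeholders, not taken from the WealthWise code. A real function would normally rely on a CloudEvents SDK or its framework's built-in support rather than building JSON by hand.

```java
import java.util.UUID;

// Sketch: build a structured-mode CloudEvent (CloudEvents 1.0 JSON format)
// for a history record. The "source" and "type" values are hypothetical;
// the real WealthWise functions may use different ones.
final class HistoryEventSketch {

    // Escape a string for use as a JSON string value (quotes, backslashes, control chars).
    static String jsonString(String value) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : value.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
                }
            }
        }
        return sb.append('"').toString();
    }

    // specversion, id, source and type are the required CloudEvents attributes;
    // dataJson must already be valid JSON because datacontenttype is application/json.
    static String structuredEvent(String id, String source, String type, String dataJson) {
        return "{"
                + "\"specversion\":\"1.0\","
                + "\"id\":" + jsonString(id) + ","
                + "\"source\":" + jsonString(source) + ","
                + "\"type\":" + jsonString(type) + ","
                + "\"datacontenttype\":\"application/json\","
                + "\"data\":" + dataJson
                + "}";
    }

    public static void main(String[] args) {
        String data = "{\"question\":" + jsonString("Should I \"rebalance\" my portfolio?") + "}";
        System.out.println(structuredEvent(UUID.randomUUID().toString(),
                "/financial-advisor", "com.example.wealthwise.history", data));
    }
}
```

A consumer such as add-history only needs the type and data fields to store a history entry. The id attribute gives it a way to recognize duplicate deliveries if the same event is forwarded twice.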

WealthWise serverless architecture

The final serverless architecture for WealthWise, incorporating all of these changes, is shown in Figure 3.

Each of the depicted Knative services can scale down to zero running pods when demand is low, reducing the costs of keeping the applications running. As user requests are received, OpenShift Serverless will scale up the application pods to deal with bursty request loads. Additionally, when a user makes a request to the investment or financial advice function, a history event is sent to the add-history function using Knative Kafka Eventing.

Tips for OpenShift Serverless

By default, Knative function images are built without the application.properties file from the source code. If you change your application's configuration and it works when running Quarkus locally but not in the built function, this might be why.

Many Quarkus configuration settings have environment variable equivalents that you can use, like the CORS settings above. Otherwise, you can add the application.properties file to OpenShift as a Secret (or ConfigMap) and mount it as a volume in the Knative Service, as was done for the investment-advisor and financial-advisor services.
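The environment variable names used in this tutorial follow a mechanical rule from MicroProfile Config, which Quarkus implements. Take the property name, replace every character that isn't a letter, digit, or underscore with an underscore, and convert the result to upper case. The hypothetical helper below illustrates that rule. It can be handy for working out which variable to set on a Knative Service for a given application.properties entry.

```java
import java.util.Locale;

// Sketch of the MicroProfile Config / Quarkus mapping from a property name
// in application.properties to an environment variable name:
// replace every character that is not a letter, digit or underscore with '_',
// then upper-case the result. The class name is hypothetical.
final class ConfigEnvNames {

    static String toEnvVarName(String propertyName) {
        StringBuilder sb = new StringBuilder(propertyName.length());
        for (char c : propertyName.toCharArray()) {
            boolean keep = (c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z')
                    || (c >= '0' && c <= '9') || c == '_';
            sb.append(keep ? c : '_');
        }
        return sb.toString().toUpperCase(Locale.ROOT);
    }

    public static void main(String[] args) {
        String[] properties = {
            "quarkus.http.cors.origins",
            "quarkus.datasource.jdbc.url",
            "quarkus.hibernate-orm.database.generation.create-schemas"
        };
        for (String p : properties) {
            System.out.println(p + " -> " + toEnvVarName(p));
        }
    }
}
```

Running main shows that these three properties map to QUARKUS_HTTP_CORS_ORIGINS, QUARKUS_DATASOURCE_JDBC_URL and QUARKUS_HIBERNATE_ORM_DATABASE_GENERATION_CREATE_SCHEMAS, the same names passed to kn service create and stored in the db-credentials secret.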

Many of the command-line parameters for the kn func build command can be added to each function's func.yaml definition file to make building function images simpler. Check the func.yaml reference for more details.

When using Knative Eventing, it's easy to confuse message formats. Be sure you know whether you're sending CloudEvents or raw JSON through Kafka. Kafka itself just carries bytes (including raw binary data), so make sure producers and consumers agree on what they're dealing with.
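The CloudEvents Kafka protocol binding gives a consumer a way to tell these cases apart. In binary mode, event attributes travel as Kafka headers prefixed with ce_, and the record value holds only the data. In structured mode, the content-type header is application/cloudevents+json and the value holds the whole event. The sketch below applies those two checks and treats anything else as raw bytes. To keep it dependency-free, it assumes a simplified header map standing in for the Kafka client's Headers type, which is a simplification for illustration.

```java
import java.nio.charset.StandardCharsets;
import java.util.Locale;
import java.util.Map;

// Sketch: decide how to read a Kafka record that may carry a CloudEvent,
// following the CloudEvents Kafka protocol binding:
//  - binary mode: attributes in "ce_"-prefixed headers, value = data only
//  - structured mode: "content-type" header is application/cloudevents+json
// Headers are modeled as a simple name -> value map (an assumption for illustration).
final class KafkaRecordFormat {

    enum Mode { BINARY_CLOUDEVENT, STRUCTURED_CLOUDEVENT, RAW }

    static Mode detect(Map<String, byte[]> headers) {
        // Every binary-mode CloudEvent must carry the required specversion attribute.
        if (headers.containsKey("ce_specversion")) {
            return Mode.BINARY_CLOUDEVENT;
        }
        byte[] contentType = headers.get("content-type");
        if (contentType != null) {
            String ct = new String(contentType, StandardCharsets.UTF_8).toLowerCase(Locale.ROOT);
            if (ct.startsWith("application/cloudevents")) {
                return Mode.STRUCTURED_CLOUDEVENT;
            }
        }
        // Plain JSON or arbitrary bytes: the consumer must already know the format.
        return Mode.RAW;
    }

    public static void main(String[] args) {
        Map<String, byte[]> binary = Map.of(
                "ce_specversion", "1.0".getBytes(StandardCharsets.UTF_8),
                "ce_type", "com.example.wealthwise.history".getBytes(StandardCharsets.UTF_8),
                "content-type", "application/json".getBytes(StandardCharsets.UTF_8));
        Map<String, byte[]> structured = Map.of(
                "content-type", "application/cloudevents+json; charset=UTF-8".getBytes(StandardCharsets.UTF_8));
        Map<String, byte[]> raw = Map.of();

        System.out.println(detect(binary));
        System.out.println(detect(structured));
        System.out.println(detect(raw));
    }
}
```

Running main prints BINARY_CLOUDEVENT, STRUCTURED_CLOUDEVENT and RAW. Checking headers before parsing the value avoids mistaking a binary-mode event's bare data payload for a complete event.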

You can use the Kafka testing containers to set up consumer and producer pods to test your eventing interfaces. For example:

```bash
kubectl -n wealthwise run kafka-consumer-history -ti \
  --image=quay.io/strimzi/kafka:0.26.1-kafka-3.0.0 \
  --rm=true --restart=Never -- \
  bin/kafka-console-consumer.sh \
  --bootstrap-server kafka-cluster-kafka-bootstrap.kafka.svc.cluster.local:9092 \
  --topic wealthwise-history
```

This starts a container that listens for, and prints, any messages sent to the wealthwise-history topic.

When testing, set the minScale annotation to 1 so the pod never shuts down. This makes it easier to access logs or open a terminal to examine the state of your function container.

Because the kn func build command rebuilds the entire function container image, it can take a long time even for a very small code change. Test as much as you can locally with quarkus dev before you build the function image for OpenShift Serverless.

Conclusion: Real-world benefits for WealthWise

Transitioning WealthWise to a serverless model using OpenShift Serverless delivers immediate and strategic gains:

- Reduced infrastructure costs: No more paying for idle pods—each component scales to zero when not needed.

- Better scalability: Components like the AI chatbot can scale out during peak loads and scale down when quiet.

- Improved response times under load: Additional pods start automatically during peaks, and Quarkus' fast startup helps keep cold-start delays short when a function scales up from zero.

- Enhanced resilience: Stateless functions and autoscaling, combined with Kubernetes pod management, mean fewer single points of failure and more robust uptime.

Combined with GitOps and containerized packaging, OpenShift Serverless enables WealthWise to scale intelligently and adapt quickly to changing business demands—without locking into a specific public cloud provider or infrastructure setup.

If you haven't yet, check out the first article in this series: How to build AI-ready applications with Quarkus