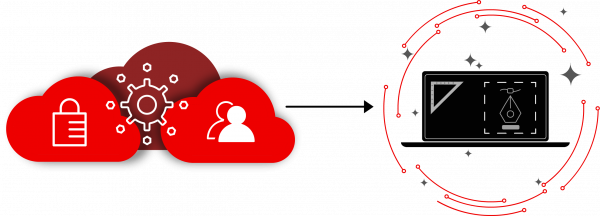

Microservices

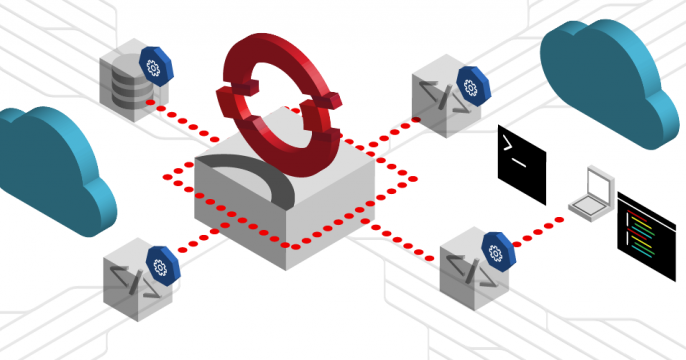

Microservices break down your application architecture into smaller, independent components that communicate through APIs. This approach lets separate teams work on different parts of the architecture simultaneously, which can speed up development. Because each service can be deployed, updated, and scaled on its own, and a failure in one service need not take down the others, it’s a scalable, flexible, and resilient way to build modern applications.
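To make "independent components that communicate through APIs" concrete, here is a minimal sketch using only the Python standard library. Everything in it is hypothetical and chosen for illustration: an "inventory" service that owns its stock data and exposes it at a `/stock/<item>` HTTP endpoint, and "order" logic (`can_order`) that never reads that data directly but asks the inventory service over HTTP. Both run in one process on separate threads purely for the demo; in a real deployment each service would typically be its own process or container.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical "inventory" service data: only this service touches it directly.
STOCK = {"widget": 5, "gadget": 0}


class InventoryHandler(BaseHTTPRequestHandler):
    """Serves GET /stock/<item> as JSON; the service's only public interface."""

    def do_GET(self):
        parts = self.path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "stock" and parts[1] in STOCK:
            body = json.dumps({"item": parts[1], "quantity": STOCK[parts[1]]}).encode()
            self.send_response(200)
        else:
            body = json.dumps({"error": "not found"}).encode()
            self.send_response(404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def start_service(handler_cls):
    # Port 0 lets the OS pick a free port. A background thread stands in for
    # what would normally be a separately deployed process.
    server = ThreadingHTTPServer(("127.0.0.1", 0), handler_cls)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def can_order(inventory_url, item, qty):
    # Hypothetical "order" service logic: it depends only on the inventory
    # service's HTTP API, not on its internal data structures.
    try:
        with urllib.request.urlopen(f"{inventory_url}/stock/{item}", timeout=2) as resp:
            data = json.load(resp)
    except urllib.error.HTTPError:
        return False  # e.g. 404 for an unknown item
    return data["quantity"] >= qty


if __name__ == "__main__":
    inventory = start_service(InventoryHandler)
    url = f"http://127.0.0.1:{inventory.server_address[1]}"
    print(can_order(url, "widget", 3))   # True
    print(can_order(url, "gadget", 1))   # False
    print(can_order(url, "unknown", 1))  # False
    inventory.shutdown()
    inventory.server_close()
```

The design point is the boundary: the inventory service could change how it stores stock, or be rewritten in another language, without any change to `can_order`, as long as the `/stock/<item>` contract stays the same. That contract is what allows different teams to own each side.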