Node.js performs well inside a container, but the shift to containerized deployments and environments brings extra complexity. One challenge is observing what is going on within your application and its resources, and noticing when resource use falls outside expected norms.

Prometheus is a tool that developers can use to increase observability. It is an installable service that gathers instrumentation metrics from your applications and stores them as time-series data. Prometheus is advanced and battle-tested, and a great option for Node.js applications running inside of a container.

Default and custom instrumentation

For your application to feed metrics to Prometheus, it must expose a metrics endpoint. For a Node.js application, a convenient way to expose the metrics endpoint is to use the prom-client module, available from the npm registry. The prom-client module exposes all of the default metrics recommended by Prometheus.

The defaults include metrics such as process_cpu_seconds_total and process_heap_bytes. In addition to exposing default metrics, prom-client allows developers to define their own metrics, as we'll do in this article.
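To make the idea of a metrics endpoint more concrete, here is a minimal sketch of the plain-text format that Prometheus scrapes. The renderCounter helper and the greetings_served_total metric are hypothetical and exist only for illustration; in the application below, prom-client produces this text for you.

```javascript
'use strict';

// Hypothetical helper (not part of prom-client): renders one counter
// in the Prometheus text exposition format.
function renderCounter(name, help, samples) {
  const lines = [`# HELP ${name} ${help}`, `# TYPE ${name} counter`];
  for (const { labels, value } of samples) {
    const pairs = Object.entries(labels).map(([key, val]) => `${key}="${val}"`);
    const labelText = pairs.length > 0 ? `{${pairs.join(',')}}` : '';
    lines.push(`${name}${labelText} ${value}`);
  }
  return lines.join('\n') + '\n';
}

// Illustrative metric name and values, not taken from the application below.
const output = renderCounter('greetings_served_total', 'Total greetings served', [
  { labels: { code: '200' }, value: 8 },
  { labels: { code: '500' }, value: 2 },
]);

console.log(output);
```

Each metric gets a HELP line, a TYPE line, and one sample line per label combination. This sketch skips the escaping that real label values need (backslashes, double quotes, and newlines), which is one more reason to rely on a client library.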

A simple Express.js app

Let’s start by creating a simple Express.js application. In this application, a service endpoint at /api/greeting accepts GET requests and returns a greeting as JSON. The following commands will get your project started:

$ mkdir my-app && cd my-app
$ npm init -y
$ npm i express body-parser prom-client

This sequence of commands should create a package.json file and install all of the application dependencies. Next, open the package.json file in a text editor and add the following to the scripts section:

"start": "node app.js"

Application source code

The following code is a fairly simple Express.js application. Create a new file in your text editor called app.js and paste the following into it:

'use strict';

const express = require('express');
const bodyParser = require('body-parser');

// Use the prom-client module to expose our metrics to Prometheus
const client = require('prom-client');

// Enable prom-client to expose default application metrics
const collectDefaultMetrics = client.collectDefaultMetrics;

// Define a custom prefix string for the default metrics
collectDefaultMetrics({ prefix: 'my_app:' });

// Custom histogram that records request durations,
// labeled by HTTP method, request path, and status code
const histogram = new client.Histogram({
  name: 'http_request_duration_seconds',
  help: 'Duration of HTTP requests in seconds histogram',
  labelNames: ['method', 'handler', 'code'],
  buckets: [0.1, 5, 15, 50, 100, 500],
});

const app = express();
const port = process.argv[2] || 8080;
let failureCounter = 0;

app.use(bodyParser.json());
app.use(bodyParser.urlencoded({ extended: true }));

app.get('/api/greeting', async (req, res) => {
  // Start timing; calling end() records the elapsed seconds
  const end = histogram.startTimer();

  // Record the duration once the response has been sent.
  // The listener is registered before any response is written.
  res.on('finish', () =>
    end({
      method: req.method,
      handler: new URL(req.url, `http://${req.hostname}`).pathname,
      code: res.statusCode,
    })
  );

  const name = req.query?.name || 'World';

  try {
    const result = await somethingThatCouldFail(`Hello, ${name}`);
    res.send({ message: result });
  } catch (err) {
    res.status(500).send({ error: err.toString() });
  }
});

// Expose our metrics at the default URL for Prometheus
app.get('/metrics', async (req, res) => {
  res.set('Content-Type', client.register.contentType);
  res.send(await client.register.metrics());
});

app.listen(port, () => console.log(`Express app listening on port ${port}!`));

// Simulates an unreliable dependency: rejects whenever the current
// millisecond timestamp is divisible by 5
function somethingThatCouldFail(echo) {
  if (Date.now() % 5 === 0) {
    return Promise.reject(`Random failure ${++failureCounter}`);
  } else {
    return Promise.resolve(echo);
  }
}
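The histogram in app.js sorts each request duration into buckets. As a rough illustration of what that means, the following sketch counts a few made-up durations into the same bucket boundaries using only plain JavaScript. The observeAll function is a hypothetical stand-in, not prom-client's implementation.

```javascript
'use strict';

// Same upper bounds (in seconds) as the histogram in app.js.
const buckets = [0.1, 5, 15, 50, 100, 500];

// Hypothetical stand-in for a histogram: cumulative bucket counts plus sum and count.
function observeAll(durations) {
  const counts = new Map(buckets.map((le) => [String(le), 0]));
  counts.set('+Inf', 0);
  let sum = 0;
  for (const seconds of durations) {
    sum += seconds;
    // Buckets are cumulative: an observation counts toward every bucket
    // whose upper bound is greater than or equal to it.
    for (const le of buckets) {
      if (seconds <= le) counts.set(String(le), counts.get(String(le)) + 1);
    }
    counts.set('+Inf', counts.get('+Inf') + 1);
  }
  return { counts, sum, count: durations.length };
}

// Made-up request durations in seconds.
const result = observeAll([0.02, 0.05, 0.3, 7]);
for (const [le, n] of result.counts) {
  console.log(`http_request_duration_seconds_bucket{le="${le}"} ${n}`);
}
console.log(`http_request_duration_seconds_sum ${result.sum}`);
console.log(`http_request_duration_seconds_count ${result.count}`);
```

The _sum and _count series are the ones used later to compute an average request duration. Note that with these boundaries, every request faster than 100 milliseconds lands in the first bucket, so you may want finer-grained buckets for a fast service.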

Deploy the application

You can use the following command to deploy the application to Red Hat OpenShift:

$ npx nodeshift --expose

This command creates all the OpenShift objects that your application needs in order to be deployed. After the deployment succeeds, you will be able to visit your application.

Verify the application

This application exposes two endpoints: /api/greeting to get the greeting message and /metrics to get the Prometheus metrics. First, request the greeting URL to see the JSON greeting it produces:

$ curl http://my-app-nodeshift.apps.ci-ln-5sqydqb-f76d1.origin-ci-int-gce.dev.openshift.com/api/greeting

If everything goes well, you'll get a successful response like this one:

{"message":"Hello, World"}

Now, get your Prometheus application metrics using:

$ curl ${your-openshift-application-url}/metrics

You should be able to view output like what's shown in Figure 1.

Configuring Prometheus

As of version 4.6, OpenShift comes with a built-in Prometheus instance. To use this instance, you will need to configure the monitoring stack and enable metrics for user-defined projects on your cluster, from an administrator account.

Create a cluster monitoring config map

To configure the core Red Hat OpenShift Container Platform monitoring components, you must create the cluster-monitoring-config ConfigMap object in the openshift-monitoring project. Create a YAML file called cluster-monitoring-config.yaml and paste in the following:

apiVersion: v1
kind: ConfigMap
metadata:
  name: cluster-monitoring-config
  namespace: openshift-monitoring
data:
  config.yaml: |
    enableUserWorkload: true

Then, apply the file to your OpenShift cluster:

$ oc apply -f cluster-monitoring-config.yaml

You also need to grant user permissions to configure monitoring for user-defined projects. Run the following command, replacing user and namespace with the appropriate values:

$ oc policy add-role-to-user monitoring-edit user -n namespace

Create a service monitor

The last thing to do is deploy a service monitor for your application. Deploying the service monitor allows Prometheus to scrape your application's /metrics endpoint regularly to get the latest metrics. Create a file called service-monitor.yaml and paste in the following:

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    k8s-app: nodeshift-monitor
  name: nodeshift-monitor
  namespace: nodeshift
spec:
  endpoints:
    - interval: 30s
      port: http
      scheme: http
  selector:
    matchLabels:
      project: my-app
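The selector in this file decides which services Prometheus scrapes: a service is picked up only if it carries every label listed under matchLabels. The sketch below mimics that rule in plain JavaScript. The matchesLabels function and both label sets are hypothetical; you can check the labels on your real service with oc get service --show-labels.

```javascript
'use strict';

// Returns true when every key/value pair in matchLabels is present on the object.
function matchesLabels(matchLabels, objectLabels) {
  return Object.entries(matchLabels).every(
    ([key, value]) => objectLabels[key] === value
  );
}

// Selector from service-monitor.yaml.
const selector = { project: 'my-app' };

// Hypothetical label sets, used only to illustrate the matching rule.
const appService = { app: 'my-app', project: 'my-app' };
const otherService = { app: 'other-app', project: 'other-app' };

console.log(matchesLabels(selector, appService));
console.log(matchesLabels(selector, otherService));
```

If no metrics show up after you apply the service monitor, a mismatch between this selector and the labels on your service is a likely place to look.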

Then, deploy this file to OpenShift:

$ oc apply -f service-monitor.yaml

The whole OpenShift monitoring stack should now be configured properly.

The Prometheus dashboard

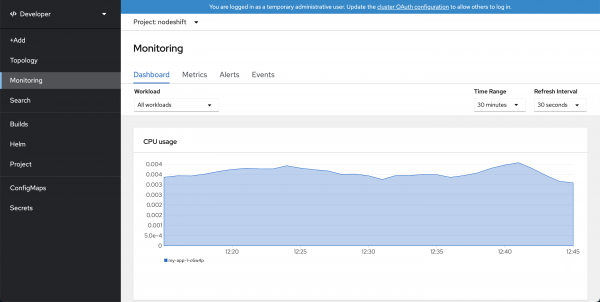

With OpenShift 4.6, the Prometheus dashboard is integrated with OpenShift. To access the dashboard, go to your project and choose the Monitoring item on the left, as shown in Figure 2.

To view the Prometheus metrics (using PromQL), go to the second tab called Metrics. You can query and graph any of the metrics your application provides. For example, Figure 3 graphs the size of the heap.

Testing the application

Next, let's use the Apache Bench tool to add to the load on our application. We'll hit our API endpoint 10,000 times with 100 concurrent requests at a time:

$ ab -n 10000 -c 100 http://my-app-nodeshift.apps.ci-ln-5sqydqb-f76d1.origin-ci-int-gce.dev.openshift.com/api/greeting

After generating this load, we can go back to the main Prometheus dashboard screen and construct a simple query to see how the service performed. We'll use our custom http_request_duration_seconds metric to measure the average request duration during the last five minutes. Type this query into the textbox:

rate(http_request_duration_seconds_sum[5m])/rate(http_request_duration_seconds_count[5m])

Then, run the query to see the nicely drawn graph shown in Figure 4.

We get two lines of output because we have two types of responses: the successful one (200) and the server error (500). We can also see that as the load increases, so does the time required to complete HTTP requests.
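To see why dividing the two rates yields an average duration, here is a small worked example in plain JavaScript. The two scrapes and their numbers are invented, and the rate function is a simplification: Prometheus's rate() also handles counter resets and extrapolates toward the edges of the window.

```javascript
'use strict';

// Two hypothetical scrapes of the histogram's _sum and _count series,
// taken five minutes (300 seconds) apart. The numbers are invented.
const earlier = { sum: 12.0, count: 400 };
const later = { sum: 42.0, count: 1000 };
const windowSeconds = 300;

// Simplified per-second rate: increase divided by window length.
const rate = (before, after) => (after - before) / windowSeconds;

// Seconds of request time accumulated per second.
const sumRate = rate(earlier.sum, later.sum);
// Requests completed per second.
const countRate = rate(earlier.count, later.count);

// The window length cancels out, leaving
// (increase in total duration) / (increase in request count).
const averageSeconds = sumRate / countRate;

console.log(averageSeconds.toFixed(3));
```

In this made-up window, 600 requests took 30 seconds in total, so the average works out to 0.05 seconds per request. Prometheus performs the same division separately for each label combination, which is why the graph shows one line per status code.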

Conclusion

This article has been a quick introduction to monitoring Node.js applications with Prometheus. You’ll want to do much more for a production application, including setting up alerts and adding custom metrics that cover the RED method (rate, errors, and duration). But I’ll leave those options for another article. Hopefully, this was enough to get you started and ready to learn more.

To learn more about what Red Hat is up to on the Node.js front, check out our new Node.js landing page.

Last updated: September 27, 2024