At the recently concluded Microsoft Ignite 2018 conference in Orlando, I had the honor of presenting to a crowd of Java developers and Azure professionals eager to learn how to put their Java skills to work building next-gen apps on Azure. Of course, that meant showcasing the technology coming out of the popular MicroProfile community, in which Red Hat plays a big part (and makes a fully supported, productized MicroProfile implementation through Thorntail, part of Red Hat OpenShift Application Runtimes).

We did a demo too, which is the main topic of this blog post, showing how easy it is to link your Java MicroProfile apps to Azure services through the Open Service Broker for Azure (the open source, Open Service Broker-compatible API server that provisions managed services in the Microsoft Azure public cloud) and OpenShift's Service Catalog.

Here's how to reproduce the demo.

The Demo

I was joined on stage by Cesar Saavedra from Red Hat (Technical Marketing for MicroProfile and Red Hat) and Brian Benz (Dev Advocate for Microsoft), and we introduced the MicroProfile origins, goals, community makeup, roadmap, and a few other items.

Then it was time for the demo. You can watch the video of the session.

https://www.youtube.com/watch?v=-nAjEsBjkLA

The demo application should look familiar to you:

This game is the classic Minesweeper, first introduced to the Windows world in 1992 with Windows 3.1, and much appreciated by the various graying heads in the audience (shout out to Nick Arocho for his awesome JavaScript implementation of the UI!).

To this game, I added a simple scoreboard backed by a database, and it was our job in the demo to hook this application up to Azure's Cosmos DB service using the MicroProfile Config API, as well as integrate with OpenShift's health probes using the simple MicroProfile Health APIs.

The section below describes how to reproduce the demo.

Re-creating the demo

If you just want the source code, here it is, along with a solution branch that adds the necessary changes to link the app to Cosmos DB and OpenShift. But if you want to play along, follow these steps:

Step 1: Get an Azure account

Our first job is to get an Azure account and some credits, all of which are free. Easy, right? Since we're using OpenShift, this could easily be deployed to the cloud of your choice, but Azure is super easy to use, and OpenShift is available in the Azure Marketplace for production-ready multi-node OpenShift deployments. There's also a nice Reference Architecture for all the architects out there.

Step 2: Deploy OpenShift

Since this is a demo, we can take shortcuts, right? In this case, we don't need the full power of a fully armed and operational and productized multi-node OpenShift deployment, so I used a nice "All in One" Azure deployment that Cesar created. To deploy this, simply click here.

You'll need to fill out some information as part of the install. You'll use these values later, so don't forget them:

- Resource Group: Create a new Resource Group to house all of the components (VMs, NICs, storage, etc). Resource Groups are the way Azure groups related resources together. If the named group does not exist, it'll be created for you.

- Location: Pick one close to you to deploy.

- Admin Username / Admin Password: These will be the username and password you'll use to log in to the OpenShift Web Console.

- Ssh Key Data: You'll need to generate an SSH keypair if you want to use ssh to access the resulting virtual machines. Paste in the contents of the public key file once you've created it.

- Vm size: Specify a VM size. A default value is provided. If another size or type of VM is required, ensure that the Location contains that instance type.

Agree to the terms and conditions, and click the Purchase button. Then get a cup of coffee; it'll take around 15 minutes to complete and you'll start burning through your credits. (For the default machine type, you'll eat US$20 through US$30 per week!) Click the Deployment Progress notification to watch the progress.

Once it is done, click on the Outputs tab to reveal the URL to your new OpenShift console. Bookmark it, because you'll need it later. Also, don't forget the username/password you provided; you'll need those later too.

If you don't get any outputs, you can still find the public DNS hostname of your new OpenShift deployment: click the Virtual Machines link at the far left of the Azure Portal, click the VM name (the same name as the Resource Group you specified), and look for the DNS Name. Then open a new browser tab and navigate to https://[THE_DNS_NAME]:8443.

Step 2a: Give yourself cluster-admin access

Although a user in OpenShift was created using the credentials you supplied, this user does not have the cluster-admin rights necessary for installing the service broker components. To give ourselves this ability, we need to use ssh to access the machine and run a command (you did save the SSH public and private key created earlier, right?).

First, log in to the VM running OpenShift:

ssh -i [PRIVATE_KEY_PATH] [ADMIN_USERNAME]@[VM HOSTNAME]

Where:

- [PRIVATE_KEY_PATH] is the path to the file containing the private key that corresponds to the public key you used when setting up OpenShift on Azure.

- [ADMIN_USERNAME] is the name of the OpenShift user you specified.

- [VM HOSTNAME] is the DNS hostname of the VM running on Azure.

Once logged in via ssh, run this command:

sudo oc adm policy add-cluster-role-to-user cluster-admin [ADMIN_USERNAME]

[ADMIN_USERNAME] is the same as what you used in the ssh command. This will give you the needed rights to install the service broker in the next steps. You can exit the ssh session now.

Step 3: Deploy the Azure Service Broker

Out of the box, this all-in-one deployment of OpenShift includes support for the OpenShift Service Catalog (our implementation of the Open Service Broker API), so all that is left to do is install the Open Service Broker for Azure to expose Azure services in the OpenShift Service Catalog.

This can most easily be installed using Helm (here are installation instructions), and with Helm you can also choose which version of the broker to install. Microsoft has temporarily taken experimental services out of the GA version of the broker and is slowly adding them back in, so you'll need to specify a version from earlier this year that still includes experimental services such as Cosmos DB's MongoDB API, which the demo uses.

Let's first log in as our admin user to our newly deployed OpenShift deployment using the oc command (if you don't have this command, install the client tools from here):

oc login [URL] -u [ADMIN_USERNAME] -p [PASSWORD]

Here, you need to specify the URL (including port number 8443) to your new OpenShift instance, as well as the username/password you used earlier when setting it up on Azure.

Once logged in, let's deploy the broker with Helm (note that this uses Helm 2.x and its Tiller-full implementation).

oc create -f https://raw.githubusercontent.com/Azure/helm-charts/master/docs/prerequisities/helm-rbac-config.yaml

helm init --service-account tiller

helm repo add azure https://kubernetescharts.blob.core.windows.net/azure

With Helm set up and the Azure Helm Charts added, it's time to install the Open Service Broker for Azure, but you'll need four special values that will associate the broker with your personal Azure account through what's called a service principal. Service principals are entities that have an identity and permissions to create and edit resources on an application's behalf. You'll need to first create a service principal following the instructions here, and while creating it and assigning it permissions to your new Resource Group, collect the following values:

- AZURE_SUBSCRIPTION_ID: This is associated with your Azure account and can be found on the Azure Portal (after logging in) by clicking on Resource Groups and then on the name of the resource group created when you deployed OpenShift using the All-In-One deployment. Example: 6ac2eb01-3342-4727-9dfa-48f54bba9726

- AZURE_TENANT_ID: When creating the service principal, you'll see a reference to a Tenant ID, also called a Directory ID. It will also look something like a subscription ID, but they are different! It is associated with the Active Directory instance you have in your account.

- AZURE_CLIENT_ID: The ID of the client (application) you create when creating a service principal, sometimes called an application ID, also similar in structure to the above IDs but different!

- AZURE_CLIENT_SECRET: The secret value for the client (application) you create when creating a service principal. This will be a long-ish base64-encoded string.

Wow, that was fun. With these values, we can now issue the magic helm command to do the tasks and install the Open Service Broker for Azure:

helm install azure/open-service-broker-azure --name osba --namespace osba \
  --version 0.11.0 \
  --set azure.subscriptionId=[AZURE_SUBSCRIPTION_ID] \
  --set azure.tenantId=[AZURE_TENANT_ID] \
  --set azure.clientId=[AZURE_CLIENT_ID] \
  --set azure.clientSecret=[AZURE_CLIENT_SECRET] \
  --set modules.minStability=EXPERIMENTAL

Unfortunately, this uses an ancient version of Redis, so let's use a more recent version and, to simplify things, let's remove the need for persistent volumes (and never use this in production!):

oc set volume -n osba deployment/osba-redis --remove --name=redis-data

oc patch -n osba deployment/osba-redis -p '{"spec": {"template": {"spec": {"containers":[{"name": "osba-redis", "image": "bitnami/redis:4.0.9"}]}}}}'

The helm install command installs the Open Service Broker for Azure in the osba Kubernetes namespace and enables the experimental features (like Cosmos DB). It may take some time to pull the images for the broker and for Redis (the default database it uses), and the broker pod might enter a crash loop while it tries to access Redis, but eventually, it should come up. If you screw it up and get errors, you can start over by deleting the osba namespace and trying again (using helm del --purge osba; oc delete project osba and waiting a while until it's really gone and does not appear in oc get projects output).

Run this command to verify everything is working:

oc get pods -n osba

You should see the following (look for the Running status for both):

NAME                                              READY   STATUS    RESTARTS   AGE
osba-open-service-broker-azure-846688c998-p86bv   1/1     Running   4          1h
osba-redis-5c7f85fcdf-s9xqk                       1/1     Running   0          1h

Now that it's installed, browse to the OpenShift Web Console (the URL can be discovered by running oc status). Log in using the same credentials as before, and you should see a number of services and their icons for Azure services (it might take a minute or two for OpenShift to poll the broker and discover all it has to offer):

Type azure into the search box at the top to see a list. Woo! Easy, peasy.

Step 4: Deploy Cosmos DB

Before we deploy the app, let's deploy the database we'll use. (In the demo, I started without a database and did some live coding to deploy the database and change the app to use it. For this blog post, I'll assume you just want to run the final code.)

To deploy Cosmos DB, we'll use the OpenShift Web Console. On the main screen, double-click on the Azure Cosmos DB (MongoDB API) icon. This will walk you through a couple of screens. Click Next on the first screen. On the second screen, elect to deploy Cosmos DB to a new project, and name the project microsweeper. Below that, you can keep all the default settings, except for the following:

- Set defaultConsistencyLevel to Session.

- Type 0.0.0.0/0 in the first allowedIPRanges box, and click the Add button. Then click the X button next to the second allowedIPRanges box (don't ask why).

- Enter a valid Azure region identifier in the location box, for example, eastus.

- In the resourceGroup box, enter the name of the Resource Group you previously created in step 2.

- Click Next.

On the final screen, choose the Create a secret in microsweeper to be used later option. This will later be referenced from the app. Finally, click the Create button and then OpenShift will do its thing, which will take about 5–10 minutes.

Click Continue to the project overview to see the status of the Azure service. During this time, if you visit the Azure Portal in a separate tab, you'll see several resources being created (most notably a Cosmos DB instance). Once it's all done, the Provisioned Services section of the OpenShift Console's Project Overview screen will show that Cosmos DB is ready for use, including a binding that we'll use later.

Step 5: Add MicroProfile health checks and configuration

The app is using two of the many MicroProfile APIs: HealthCheck and Config.

MicroProfile HealthCheck

To the RestApplication class, we've added a simple @Health annotation and a new method:

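import javax.ws.rs.ApplicationPath;
import javax.ws.rs.core.Application;
import org.eclipse.microprofile.health.Health;
import org.eclipse.microprofile.health.HealthCheck;
import org.eclipse.microprofile.health.HealthCheckResponse;

@Health
@ApplicationPath("/api")
public class RestApplication extends Application implements HealthCheck {

    // Reports UP whenever the application is able to answer the probe.
    @Override
    public HealthCheckResponse call() {
        return HealthCheckResponse.named("successful-check").up().build();
    }
}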

Simple, right? You can add as many of these as you want, and the health check can do whatever it needs to do and be as complex as you want (but not too complex!).
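To make the "as many as you want" point concrete, here's a minimal plain-Java sketch of how several checks can roll up into one overall answer: the result is UP only if every individual check is UP. HealthRollup and its methods are hypothetical names made up for illustration; this is not the MicroProfile Health API or how Thorntail implements it, just the general idea.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.BooleanSupplier;

// Hypothetical stand-in for a health endpoint; not the MicroProfile Health API.
class HealthRollup {

    // Named checks, kept in registration order.
    private final Map<String, BooleanSupplier> checks = new LinkedHashMap<>();

    HealthRollup register(String name, BooleanSupplier check) {
        checks.put(name, check);
        return this;
    }

    // Runs every check; a check that throws counts as DOWN (an assumption of this sketch).
    Map<String, String> run() {
        Map<String, String> results = new LinkedHashMap<>();
        for (Map.Entry<String, BooleanSupplier> e : checks.entrySet()) {
            boolean up;
            try {
                up = e.getValue().getAsBoolean();
            } catch (RuntimeException ex) {
                up = false;
            }
            results.put(e.getKey(), up ? "UP" : "DOWN");
        }
        return results;
    }

    // The overall outcome is UP only when no individual check reported DOWN.
    static String overall(Map<String, String> results) {
        return results.containsValue("DOWN") ? "DOWN" : "UP";
    }

    public static void main(String[] args) {
        Map<String, String> results = new HealthRollup()
                .register("successful-check", () -> true)
                .register("database-check", () -> false) // simulated failure
                .run();
        System.out.println(results + " -> " + overall(results));
    }
}
```

One failing check is enough to flip the overall result, which is why it pays to keep each check cheap and reliable.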

MicroProfile Config

More interesting, I think, is the addition of the Cosmos DB configuration. Since we exposed Cosmos DB through environment variables, we're able to automatically inject their values using MicroProfile in the ScoreboardServiceCosmos class:

@Inject
@ConfigProperty(name = "SCORESDB_uri")
private String uri;

...

mongoClient = new MongoClient(new MongoClientURI(uri));

The @Inject @ConfigProperty MicroProfile annotations direct Thorntail to look for and dynamically inject values for the uri field, based on the specified name. The MicroProfile Config API specifies a well-defined precedence table to find these, so there are many ways to expose the values to your applications. We will use an environment variable in this demo, but you could also use properties files, ConfigMaps, etc.
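To give a feel for that precedence table, here's a rough sketch in plain Java of ordinal-based lookup. By default the spec ranks system properties (ordinal 400) above environment variables (300) above META-INF/microprofile-config.properties (100), and the highest-ordinal source that defines a key wins. ConfigLookup is a hypothetical class written for this illustration, not the MicroProfile Config API, and the localhost URI is a made-up fallback value.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical sketch of ordinal-based lookup; not the MicroProfile Config API.
class ConfigLookup {

    // One source of key/value pairs with a priority (higher ordinal wins).
    static final class Source {
        final String name;
        final int ordinal;
        final Map<String, String> values;

        Source(String name, int ordinal, Map<String, String> values) {
            this.name = name;
            this.ordinal = ordinal;
            this.values = values;
        }
    }

    private final List<Source> sources = new ArrayList<>();

    ConfigLookup add(String name, int ordinal, Map<String, String> values) {
        sources.add(new Source(name, ordinal, values));
        // Keep the highest-ordinal source first.
        sources.sort(Comparator.comparingInt((Source s) -> s.ordinal).reversed());
        return this;
    }

    // Returns the value from the highest-ordinal source that defines the key.
    Optional<String> get(String key) {
        for (Source s : sources) {
            String value = s.values.get(key);
            if (value != null) {
                return Optional.of(value);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        ConfigLookup config = new ConfigLookup()
                // Made-up fallback value standing in for a properties file entry.
                .add("properties file", 100, Map.of("SCORESDB_uri", "mongodb://localhost:27017"))
                .add("environment", 300, System.getenv())
                // Left empty in this sketch.
                .add("system properties", 400, Map.of());
        System.out.println(config.get("SCORESDB_uri").orElse("(not set)"));
    }
}
```

With this ordering, an environment variable set by OpenShift overrides whatever default is baked into the app's properties file, which is exactly the behavior the demo relies on.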

Step 6: Deploy the App

The sample app uses Thorntail, Red Hat's fully supported MicroProfile implementation. This is a Java framework, so you'll first need to install Red Hat's OpenJDK to OpenShift so it can be used to build and run the app:

oc create -n openshift -f https://raw.githubusercontent.com/jboss-openshift/application-templates/a1ea009fac7adf0ca34f8ab7dbe5aa0468fe5246/openjdk/openjdk18-image-stream.json

This uses a previous version of the image stream that references Red Hat Container Catalog.

Next, let's deploy the app to our newly created project:

oc project microsweeper

oc new-app 'redhat-openjdk18-openshift:1.3~https://github.com/jamesfalkner/microsweeper-demo#solution' \
  -e GC_MAX_METASPACE_SIZE=500 \
  -e ENVIRONMENT=DEVELOPMENT

oc expose svc/microsweeper-demo

This will create a new S2I-based build for the app, build it with Maven and OpenJDK, and deploy the app. Initially, the app will be using an internal database (H2). It may take a few minutes to deploy. When it's done, you should see the following output:

% oc get pods -n microsweeper
NAME                        READY   STATUS      RESTARTS   AGE
microsweeper-demo-1-build   0/1     Completed   0          1h
microsweeper-demo-3-x8bdg   1/1     Running     0          1h

You can see the completed build pod that built the app and the running app pod.

Once deployed, you can click on the Route URL in the OpenShift Web Console next to the microsweeper-demo service and play the game. You can also get the URL with this:

echo http://$(oc get route microsweeper-demo -o jsonpath='{.spec.host}{"\n"}' -n microsweeper)

Note that it is not using MicroProfile or Cosmos DB yet! In the game, enter your name (or use the default), and play the game a few times, ensuring that the scoreboard is updated when you win or lose. To reset the scoreboard, click the X in the upper right. To start the game again, click the smiley or sad face. Good times, right? Let's hook it up to Cosmos DB!

Step 7: Bind Cosmos DB to the app

In a previous step, you deployed the Cosmos DB service to your project, so it is now said to be "provisioned" and bound to the project. You could at this point hard-code the app logic to use the provisioned service's URI, username, password, etc., but that's a terrible long-term approach. It's better to expose the service's credentials dynamically using OpenShift and then change the app to use the values through that dynamic mechanism.

There are two easy ways to expose the service's configuration: through Kubernetes Secrets (where the credentials are exposed through an ordinary file on the filesystem securely transmitted and mounted via a volume in the pod) or through environment variables. I used environment variables because it's easy, but Thorntail/MicroProfile can use either.
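If you went the Secrets route instead, a secret mounted as a volume shows up as a directory containing one file per key, with the value as the file's contents. Here's a small sketch of reading such a directory in plain Java. MountedSecret is a hypothetical helper, and the temp directory and example URI stand in for a real mount; none of this is from the demo app.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Stream;

// Hypothetical helper: reads a mounted secret directory (one file per key).
class MountedSecret {

    // Maps each regular file's name to its contents; subdirectories are skipped.
    static Map<String, String> read(Path dir) {
        Map<String, String> values = new TreeMap<>();
        try (Stream<Path> files = Files.list(dir)) {
            files.filter(Files::isRegularFile)
                 .forEach(f -> values.put(f.getFileName().toString(), readFile(f)));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return values;
    }

    // Reads a file as UTF-8 and trims surrounding whitespace such as a trailing newline.
    private static String readFile(Path file) {
        try {
            return new String(Files.readAllBytes(file), StandardCharsets.UTF_8).trim();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        // Stand-in for a real mount point so the sketch runs anywhere.
        Path dir = Files.createTempDirectory("scoresdb-secret");
        Files.write(dir.resolve("uri"),
                "mongodb://example.invalid:10255".getBytes(StandardCharsets.UTF_8));
        // Print only the key names, never the values.
        System.out.println(read(dir).keySet());
    }
}
```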

First, click on View Secret to view the contents of the secret we need in order to bind to Cosmos DB, and then click on Add to Application. This lets you choose which application's DeploymentConfig receives the environment variables. Select the microsweeper-demo application in the drop-down, and then select the Environment variables option and specify a prefix of SCORESDB_. (Don't forget the underscore!) This will alter the environment of the application once it is re-deployed to add the new environment variables, each of which will start with SCORESDB_ (for example, the URI to the Cosmos DB will be the value of the SCORESDB_uri environment variable).
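To see what the prefix buys you, here's a tiny plain-Java sketch that gathers every environment variable starting with SCORESDB_ and strips the prefix, leaving the secret's original key names. BoundEnv is a hypothetical helper written for illustration; the demo app itself reads the values through MicroProfile Config rather than like this.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper: collects the binding's environment variables by prefix.
class BoundEnv {

    // Returns entries whose name starts with the prefix, with the prefix removed.
    static Map<String, String> withPrefix(Map<String, String> env, String prefix) {
        Map<String, String> result = new TreeMap<>();
        for (Map.Entry<String, String> e : env.entrySet()) {
            if (e.getKey().startsWith(prefix)) {
                result.put(e.getKey().substring(prefix.length()), e.getValue());
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Print only the key names so credentials never land in the logs.
        System.out.println(withPrefix(System.getenv(), "SCORESDB_").keySet());
    }
}
```

Running something like this inside the pod is a quick way to confirm the binding took effect before flipping the app over to Cosmos DB.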

Click Save.

Now we're ready to switch to Cosmos DB. To do this switch, simply change the value of the ENVIRONMENT environment variable to switch from the H2 database to Cosmos DB within the app:

oc set env dc/microsweeper-demo ENVIRONMENT=PRODUCTION --overwrite

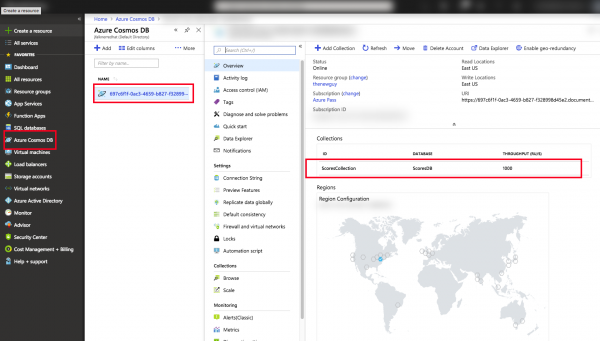

At this point, the app will be re-deployed and start using Cosmos DB! Play the game a few more times, and then head over to the Azure Portal to verify data is being correctly persisted. Navigate to Azure Cosmos DB in the portal, and then click on the single long string that represents the ID of the database. You should see a single collection called ScoresCollection:

Click on ScoresCollection and then on Data Explorer. This tool lets you see the data records (documents) in the database. Using the small "..." menu next to the name of the collection, click on New Query:

Type the simplest of queries into the Query box: {}. Then click Execute Query to see the results. Play the game a few more times, and re-issue the query to confirm data is being persisted properly. Well done!

Next Steps

In this demo, we used two of the MicroProfile APIs that are instrumental in developing Java microservices (HealthCheck and Config) to link the MicroProfile/Thorntail application to Azure services through the Open Service Broker API.

There are many other MicroProfile APIs you can use, and I encourage you to check out the full specifications and the recent release (MicroProfile 2.1). MicroProfile is awesome and is a great way to build Java microservices using truly open, community-driven innovation.

Also see these posts on modern application development, microservices, containers, and Java.

Happy coding!

Last updated: January 12, 2024