Red Hat Developer Blog

Here's our most recent blog content. Explore our featured monthly resource as well as our most recently published items. Don't miss the chance to learn more about our contributors.

This article provides a complete CI/CD workflow utilizing OpenShift Dev...

This blog post presents the performance analysis of Identity Management (IdM)...

Discover the new LibSSH connection plug-in for Ansible, replacing the...

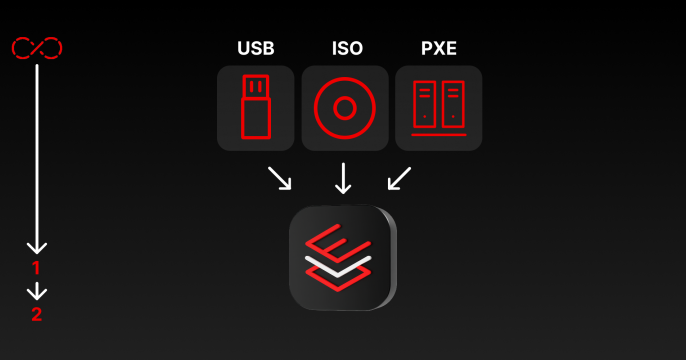

Learn how to create a robust, automated CI/CD pipeline for image mode using...

Learn how to build a zero trust environment using Red Hat Connectivity Link...

This guide demonstrates the configuration process and provides best practices...

Learn how to design agentic workflows, and how the Red Hat AI portfolio...

This post introduces two interactive, sports-themed hands-on labs designed to...

Automate Ansible error resolution with AI. Learn how to ingest logs, group...