With the growth in the use of containers, the need to bundle your application into a container has never been stronger. Many Red Hat customers will be familiar with Source-to-Image (S2I) as an easy way to build container images from application source code. While S2I is a convenient way to build images on Red Hat OpenShift, Red Hat works to support our customers when using a variety of approaches, and we’ve recently seen an increasing interest in building applications using Cloud Native Computing Foundation (CNCF) Buildpacks.

This is the second in a 5-part series of articles on building your applications with CNCF Buildpacks and Red Hat Universal Base Image (UBI). The series will include:

- Building applications with Paketo Buildpacks and Red Hat UBI container images

- Running applications with Paketo Buildpacks and Red Hat UBI container images in OpenShift (this post)

- The journey to enable UBI with the Paketo Buildpacks

- Building applications with UBI and Paketo Buildpacks in CI/CD

- Building a container image for a Quarkus project using Buildpacks

Node.js and Java cloud-native applications for deployment to OpenShift

We are going to deploy either a Node.js or Java application, whichever you choose, in an OpenShift Cluster. You can get an OpenShift cluster with only a few clicks and a free Red Hat account through the Developer Sandbox for Red Hat OpenShift.

To create the cluster:

- Visit the home page of Red Hat OpenShift Developer Sandbox.

- Click the Start your sandbox for free button.

- Follow the instructions. If you don’t have a Red Hat account, it will guide you through the steps to create one.

- After completing the registration, you will be redirected to the initial page. Click the Launch button on the Red Hat OpenShift section.

- Log in with the Red Hat account you previously created and your sandbox will start within seconds.

If you want to gain familiarity with the OpenShift web console, you can browse through the available documentation.

Setting up image streams and configuring Docker Daemon

In order to be able to deploy our application in OpenShift, we need to push the application container image we have built locally with the ubi builder to a location that is accessible from the OpenShift environment.

The available options for pushing the application container image in an OpenShift environment include:

- Red Hat OpenShift Container Platform’s integrated registry (internal registry).

- An external registry, for example, quay.io or Docker Hub.

- Image streams.

In this article we will use image streams, as they are easy to use and do not require setting up a new account in an external registry.

To set up image streams in OpenShift, follow these steps:

- Switch to the Administrator perspective.

- On the left sidebar select Builds → ImageStreams → Create ImageStream.

- In the text area for creating the image stream, under the metadata section, set a name for the stream. For this tutorial we will use the name containerized-app-image.

- Confirm you have set the correct namespace where your application will be deployed (leaving it to the default should be fine).

- Ensure that the final YAML file looks like the one below:

      apiVersion: image.openshift.io/v1
      kind: ImageStream
      metadata:
        name: containerized-app-image
        namespace: <your-namespace>

- Click the Create button, at the bottom of the page, to create the image stream. You will be redirected immediately to the Details tab of the image stream you created.

- Copy the Public Image Repository URL. This is where we will push the application container image later.

- Use the following podman login command to log in to the image stream registry. This will allow us to push the application container image during the application’s build process:

      podman login -u <username> -p <token> <public-image-repository-url>

  - <username>: Shown in the upper right corner of the OpenShift web console.

  - <token>: Click the username in the upper right corner of the OpenShift web console → Copy login command → Display Token.

  - <public-image-repository-url>: Copy this from the Details tab of the image stream.

If the command prints “Login Succeeded”, you have successfully created an image stream and logged in, so Podman can push the application image built with the UBI builder to the image stream.
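The console steps above can also be approximated from a terminal. The following is a minimal sketch, assuming the oc CLI is installed and already logged in to the cluster. The function names are hypothetical helpers, not part of oc or Podman, and the lookup assumes the cluster exposes its registry so that the status.publicDockerImageRepository field of the image stream is populated.

```shell
#!/usr/bin/env bash
# Sketch only: these helper names are illustrative, not part of any CLI.

# Print an ImageStream manifest equivalent to the YAML shown in the article.
# $1 = image stream name, $2 = namespace
image_stream_manifest() {
  cat <<EOF
apiVersion: image.openshift.io/v1
kind: ImageStream
metadata:
  name: $1
  namespace: $2
EOF
}

# Create the image stream from the generated manifest.
# Assumes `oc` is already logged in to the cluster.
create_image_stream() {
  image_stream_manifest "$1" "$2" | oc apply -f -
}

# Look up the public image repository URL of an image stream.
# Assumes the field is populated (the registry route must be exposed).
public_repository_url() {
  oc get imagestream "$1" -n "$2" \
    -o jsonpath='{.status.publicDockerImageRepository}'
}

# Log Podman in, reading the token from stdin instead of passing it with -p.
# $1 = public image repository URL
registry_login() {
  oc whoami -t | podman login -u "$(oc whoami)" --password-stdin "$1"
}
```

Reading the token from stdin keeps it out of the shell history; the -p form shown earlier works as well.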

Building applications with the Paketo UBI builder

The process of building our application is exactly the same as the process in the previous blog post. The only difference is that we add an extra option (--publish) for pushing the application container image to an image stream or a registry.

To briefly recap the steps from the previous article:

- Ensure you have installed and configured Podman (including setting DOCKER_HOST if you are on Linux) and the pack CLI. To set DOCKER_HOST you can use:

      export DOCKER_HOST="unix://$(podman info -f "{{.Host.RemoteSocket.Path}}")"

- Enable experimental features of pack with the following command:

      pack config experimental true

- Clone the repository:

      git clone https://github.com/nodeshift-blog-examples/buildpack-openshift-example.git

- Navigate to the application root directory. You can decide if you want to build and deploy either the Node.js or Java application:

  - For the Node.js application, change to its root directory:

        cd node-example

  - For the Java application, change to its root directory:

        cd java-example

- Build and publish the application container image:

      pack build <public-image-repository-url> \
        --docker-host=inherit \
        --builder paketocommunity/builder-ubi-base \
        --publish

In the above command, you will notice that the image name (which is shown as <public-image-repository-url>) matches the name of the image stream. This is necessary so that the pack CLI knows where to push the application container image.

Once the command completes, you will have built your application with the UBI builder and pushed the container image to OpenShift, and you are ready to deploy the application.
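If you rebuild often, the build command can be wrapped in a small shell function. This is only a sketch: build_and_publish is a hypothetical helper name, and it adds pack's --path option so the function can be called from outside the application directory. The other flags are the ones used in this article.

```shell
#!/usr/bin/env bash
# Sketch only: build_and_publish is an illustrative helper, not a pack feature.

# $1 = image name (the public image repository URL of the image stream)
# $2 = application directory, e.g. node-example or java-example (default: .)
build_and_publish() {
  local image="$1"
  local app_dir="${2:-.}"

  if [ -z "$image" ]; then
    echo "usage: build_and_publish <public-image-repository-url> [app-dir]" >&2
    return 1
  fi

  # Same flags as the article's command, plus --path for the source directory.
  pack build "$image" \
    --path "$app_dir" \
    --docker-host=inherit \
    --builder paketocommunity/builder-ubi-base \
    --publish
}
```

For example, calling build_and_publish with the public image repository URL and node-example builds and pushes the Node.js application.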

Deploying the application(s) in OpenShift: CLI walkthrough

Note

This section requires the oc CLI; you can find installation instructions in Getting started with the OpenShift CLI. If you prefer a process that does not require the oc CLI, skip this section and follow the instructions in the section Deploying the application(s) in OpenShift: UI walkthrough.

An easy way to run an application in Kubernetes is by using a deployment. A Kubernetes deployment lets us specify the container we’d like to run along with how to run it. The deployment can specify attributes like the number of replicas of the container to run and the ports to expose.

We will use a deployment to specify the container we built in the earlier section, and to keep things simple, ask that a single copy of the container be run.

The following file specifies a deployment that will get the container running, as well as a service and a route that are used to expose the application:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: buildpack-deployment
      annotations:
        image.openshift.io/triggers: '[{"from":{"kind":"ImageStreamTag","name":"containerized-app-image:latest"},"fieldPath":"spec.template.spec.containers[?(@.name==\"buildpacks-app\")].image","pause":""}]'
      labels:
        app: buildpacks-app
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: buildpacks-app
      template:
        metadata:
          labels:
            app: buildpacks-app
        spec:
          containers:
          - name: buildpacks-app
            image: <image-repository>
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 8080
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: buildpack-service
    spec:
      selector:
        app: buildpacks-app
      ports:
      - name: http
        protocol: TCP
        port: 8080
        targetPort: 8080
    ---
    kind: Route
    apiVersion: route.openshift.io/v1
    metadata:
      name: buildpack-route
      labels:
        app: buildpacks-app
    spec:
      to:
        kind: Service
        name: buildpack-service
      tls: {}
      port:
        targetPort: http
      alternateBackends: []

Copy the YAML above and save it into the file deployment.yaml. You will need to update the image: <image-repository> line to match the image you pushed to the image stream earlier during pack build. To find the <image-repository> URL, visit the OpenShift web console → switch to the Administrator perspective → select Builds (on the left sidebar) → ImageStreams → click the image stream → Details tab → Image repository.

You can use the image stream for either the Node.js or Java application depending on which one you’d like to get running.

If you wanted to use an external repository (like quay.io or Docker Hub) instead of an image stream you could point image: to the external repository where you had pushed the image.
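Rather than editing the file by hand, the placeholder can be substituted on the fly. The following is a minimal sketch; render_manifest is a hypothetical helper name, and it assumes the image reference contains no | or & characters, which would confuse sed.

```shell
#!/usr/bin/env bash
# Sketch only: render_manifest is an illustrative helper name.

# Print a manifest with the <image-repository> placeholder replaced.
# $1 = manifest file (e.g. deployment.yaml), $2 = image repository URL
render_manifest() {
  local manifest="$1"
  local image="$2"

  if [ ! -f "$manifest" ] || [ -z "$image" ]; then
    echo "usage: render_manifest <manifest-file> <image-repository>" >&2
    return 1
  fi

  # Use | as the sed delimiter because image references contain slashes.
  sed "s|<image-repository>|$image|g" "$manifest"
}
```

The rendered output can be redirected to a new file, or piped to oc apply -f - to apply it without saving an edited copy.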

Once you have created the file, apply the service and deployment with:

    oc apply -f deployment.yaml

You can now skip to the section on taking a look at the deployed application or go through the next section to see how we would use the UI to deploy the application instead of applying the deployment.yaml file.
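After applying the manifest, you may want to confirm from the terminal that the rollout finished and find the host name of the route. This is a sketch under the assumption that the resource names from deployment.yaml (buildpack-deployment and buildpack-route) were left unchanged; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: helper names are illustrative; resource names come from
# the deployment.yaml in this article.

# Wait until the deployment has finished rolling out.
# $1 = optional timeout (default 120s)
wait_for_rollout() {
  oc rollout status deployment/buildpack-deployment --timeout="${1:-120s}"
}

# Print the external host name assigned to the route.
route_host() {
  oc get route buildpack-route -o jsonpath='{.spec.host}'
}
```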

Deploying the application(s) in OpenShift: UI walkthrough

If you don't want to go through the oc CLI deployment, you can do the same process with the OpenShift Web Console (UI).

Once you have pushed your application to the image stream, follow the steps below to deploy your application:

- Switch to the Developer perspective and click +Add on the left sidebar.

- Click on the Container images tile.

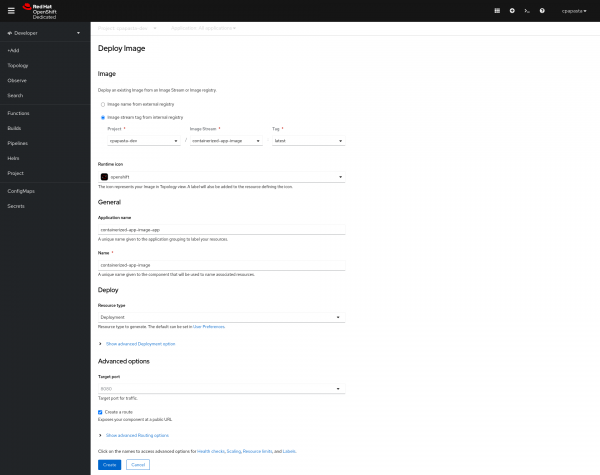

- In the section Deploy an existing Image from an Image Stream or Image registry, select the Image stream tag from internal registry radio button option. In the Image Stream drop-down menu, select the containerized-app-image option with the tag latest to always point to the latest image.

- On the Deploy section, select Deployment as the resource type.

- Leave the rest of the configuration to the defaults. Your configuration should look like what is shown in Figure 1.

Click the Create button at the bottom of the page and your application should be up and running.

View the deployed application in OpenShift

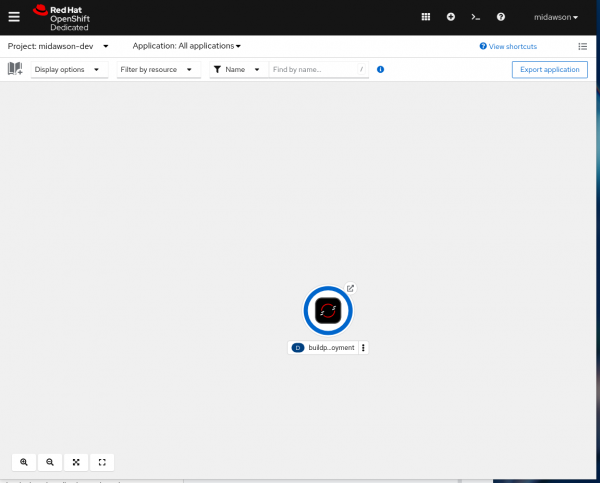

Having gone through the process of deploying the application, you can then go to the developer perspective in OpenShift to see the deployed application and get the external link to the application, as shown in Figure 2.

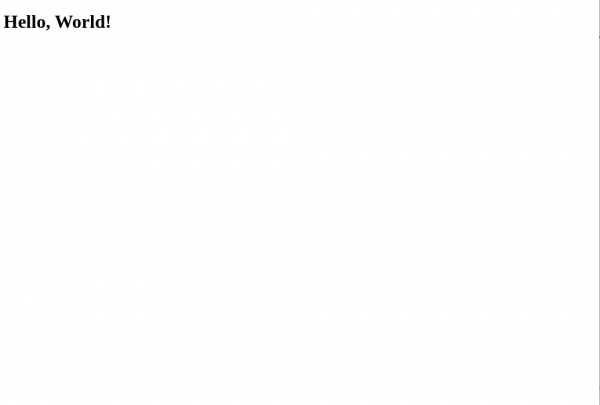

Select the box with the arrow at the top right of the icon for the application. That will open the main page for the application, as shown in Figure 3.

In addition, each time we rebuild and push the application, it redeploys with the new image. Image streams enable this by detecting when a new image has been pushed to the image stream. You could test this by changing the application's text from Hello World! to Hello World2!, rebuilding it, and pushing it to the image stream.
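One way to observe the redeploy from a terminal is to poll the application URL until the new text appears. The following is a minimal sketch assuming curl is installed; wait_for_text is a hypothetical helper, and the URL you pass in is whatever external link OpenShift shows for your application.

```shell
#!/usr/bin/env bash
# Sketch only: wait_for_text is an illustrative helper name.

# Poll a URL until the response body contains the expected text.
# $1 = URL, $2 = expected text
# $3 = max attempts (default 30), $4 = delay in seconds (default 5)
wait_for_text() {
  local url="$1" expected="$2" attempts="${3:-30}" delay="${4:-5}"
  local i=1

  while [ "$i" -le "$attempts" ]; do
    # -f fails on HTTP errors, -sS stays quiet except for real errors.
    if curl -fsS "$url" 2>/dev/null | grep -qF -- "$expected"; then
      echo "found after $i attempt(s)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done

  echo "not found after $attempts attempts" >&2
  return 1
}
```

Calling wait_for_text with the application URL and the text Hello World2! right after pushing the rebuilt image returns once the new version is serving traffic.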

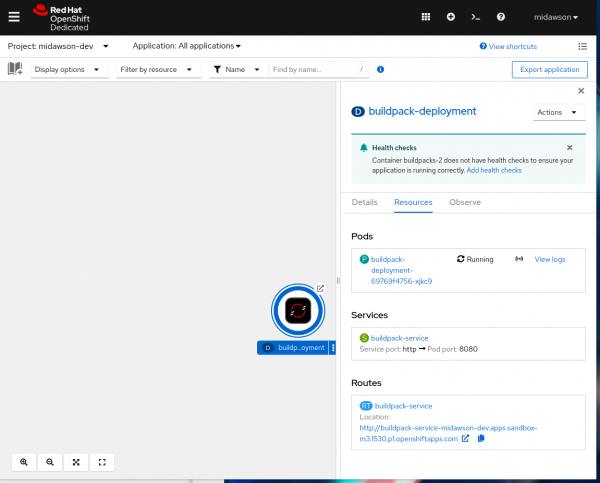

Adding health checks

If you click the icon for the application, you'll see that we’ve not yet configured OpenShift to use the liveness and readiness endpoints that we included in the application as shown in Figure 4.

We can use the Add health checks link to do that.

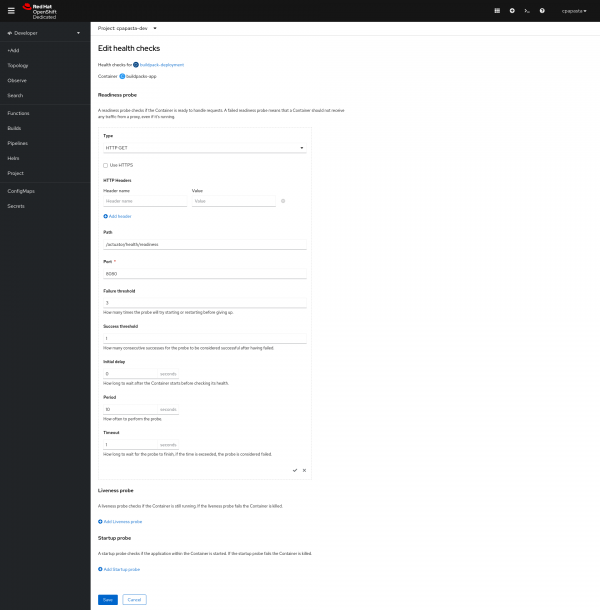

On the page for adding health checks, select the Add Readiness probe option and fill in /actuator/health/readiness for the path. Then select the check at the bottom left to add the readiness endpoint, as shown in Figure 5.

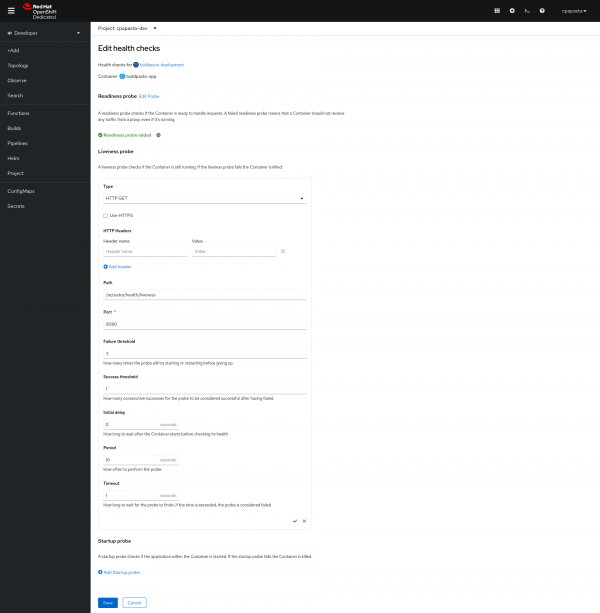

Then select the Add Liveness probe option and use /actuator/health/liveness for the path.

Then select the check at the bottom left to add the liveness endpoint, as shown in Figure 6.

Finally, select the Add option to complete adding the two endpoints.

OpenShift will now check the liveness endpoint at the specified interval and restart the container if there is no response. After a restart, OpenShift first checks the readiness endpoint and only sends traffic to the container once it reports ready. You can see this in action by changing the path for the liveness endpoint to one that does not exist; the application gets restarted once the liveness endpoint fails to respond.
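The same probes can also be configured from the terminal with oc set probe. This is a sketch rather than the exact equivalent of the console form: add_health_checks is a hypothetical helper, port 8080 is taken from the deployment manifest earlier in this article, and the workload name depends on how you deployed (for the CLI walkthrough it would be deployment/buildpack-deployment).

```shell
#!/usr/bin/env bash
# Sketch only: add_health_checks is an illustrative helper name.

# Add readiness and liveness HTTP probes to a workload.
# $1 = workload, e.g. deployment/buildpack-deployment
add_health_checks() {
  local workload="$1"

  if [ -z "$workload" ]; then
    echo "usage: add_health_checks <kind/name>" >&2
    return 1
  fi

  # An empty host in the URL means "probe the container itself" on port 8080.
  oc set probe "$workload" --readiness \
    --get-url=http://:8080/actuator/health/readiness &&
  oc set probe "$workload" --liveness \
    --get-url=http://:8080/actuator/health/liveness
}
```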

Wrapping up

In this article we took you through building an application with the UBI Paketo Buildpacks, pushing the container image for the application to OpenShift, and then running the application in a container. Once you’ve built the application, you can easily deploy it to a Kubernetes environment and run and manage it just like any other application.