In today's data-driven world, organizations are always looking for new ways to extract insights from their new and existing data to grow their business. In response to this demand, Red Hat OpenShift AI enables data scientists and developers to collaborate efficiently and create solid, scalable, and reproducible data science projects.

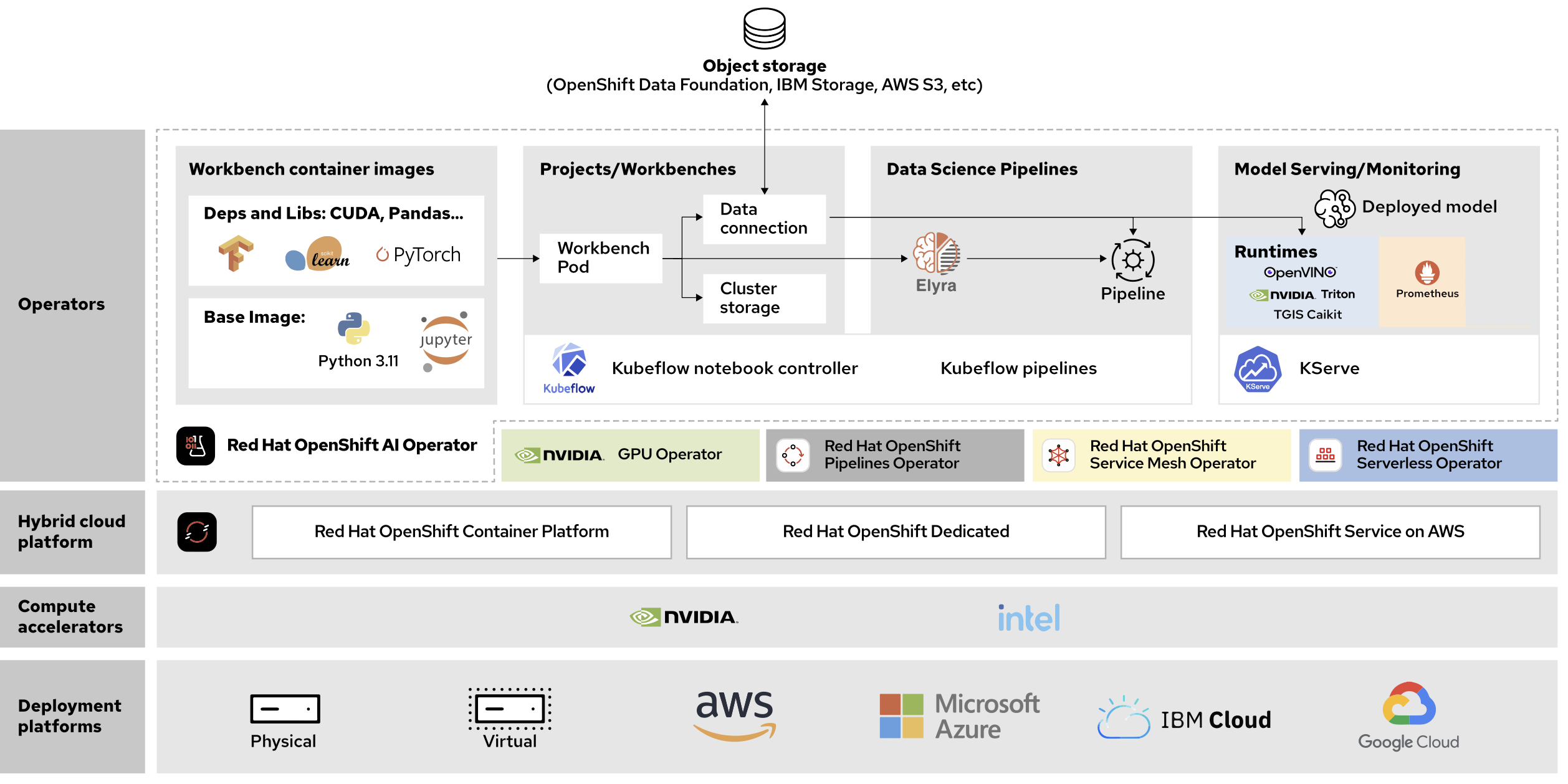

Architecture of Red Hat OpenShift AI

Red Hat OpenShift AI helps you build an enterprise-grade AI and MLOps platform for creating and delivering generative AI (GenAI) and predictive models by providing supported AI tooling on top of OpenShift. It is based on Red Hat OpenShift, a container-based application platform that scales efficiently to meet the workload demands of AI operations and models. You can run your AI workloads across the hybrid cloud, including edge and disconnected environments.

Additionally, Red Hat actively partners with leading AI vendors, such as Anaconda, Intel, IBM, and NVIDIA, to make their tools available to data scientists as OpenShift AI components. You can optionally activate these components to extend the capabilities of OpenShift AI. Depending on the component you want to activate, you might need to install an operator, use the Red Hat Ecosystem, or add a workbench image.

Figure 1 depicts the general architecture of an OpenShift AI deployment, including its most important concepts and components.

Workbenches

In OpenShift AI, a workbench is a containerized environment for data scientists. A workbench image is the container image that OpenShift AI uses to create a workbench. OpenShift AI includes several ready-to-use images for common data science stacks, such as PyTorch and TensorFlow. Workbenches run as OpenShift pods and are designed for machine learning and data science: they include the Jupyter notebook execution environment and data science libraries. If the default images do not meet your requirements, you can also import your own custom workbench image into Red Hat OpenShift AI.

Cluster storage

OpenShift AI uses cluster storage, which is a Persistent Volume Claim (PVC) mounted in a specific directory of the workbench container. Because the data lives on the persistent volume rather than in the container itself, users retain their work after logging out or restarting the workbench.
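To make the idea of cluster storage more concrete, here is a minimal sketch of a generic Kubernetes PersistentVolumeClaim manifest built as a Python dict. The OpenShift AI dashboard normally creates the PVC for you when you add cluster storage, so this is illustrative only: the claim name, the namespace, and the 20Gi size are hypothetical values, and the manifest OpenShift AI actually generates may carry additional labels and annotations.

```python
import json


def build_pvc_manifest(name: str, size: str = "20Gi",
                       namespace: str = "my-data-science-project") -> dict:
    """Return a minimal PersistentVolumeClaim manifest as a dict.

    The name, namespace, and size are hypothetical example values.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # ReadWriteOnce: the volume can be mounted read-write by a single node.
            "accessModes": ["ReadWriteOnce"],
            # Requested capacity for the claim.
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Print the manifest as JSON, which `oc apply -f <file>` also accepts.
    print(json.dumps(build_pvc_manifest("my-workbench-storage"), indent=2))
```

Building the manifest as plain data keeps the example free of cluster dependencies; in practice you would let the dashboard create the claim or apply a YAML file with `oc apply -f`.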

Data connections

In OpenShift AI, a data connection holds the configuration parameters needed to connect workbenches to S3-compatible storage services. OpenShift AI injects this configuration into the workbench as environment variables.
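As a sketch of how code running in a workbench might read these injected settings, the function below collects them into a small settings object. The variable names (`AWS_S3_ENDPOINT`, `AWS_S3_BUCKET`, `AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`, `AWS_DEFAULT_REGION`) are an assumption based on the names commonly used by OpenShift AI data connections; check the environment of your own workbench before relying on them. `S3Settings` and `s3_settings_from_env` are hypothetical helpers, not part of any OpenShift AI API.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

# Assumed variable names; verify them in your own workbench.
REQUIRED_VARS = (
    "AWS_S3_ENDPOINT",
    "AWS_S3_BUCKET",
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
)


@dataclass(frozen=True)
class S3Settings:
    """Hypothetical container for S3 connection settings."""
    endpoint: str
    bucket: str
    access_key: str
    secret_key: str
    region: Optional[str] = None


def s3_settings_from_env(env: Mapping[str, str] = os.environ) -> S3Settings:
    """Collect data connection settings from environment variables.

    Raises KeyError listing every required variable that is missing.
    """
    missing = [name for name in REQUIRED_VARS if name not in env]
    if missing:
        raise KeyError(f"missing data connection variables: {', '.join(missing)}")
    return S3Settings(
        endpoint=env["AWS_S3_ENDPOINT"],
        bucket=env["AWS_S3_BUCKET"],
        access_key=env["AWS_ACCESS_KEY_ID"],
        secret_key=env["AWS_SECRET_ACCESS_KEY"],
        # The region is optional for many S3-compatible services.
        region=env.get("AWS_DEFAULT_REGION"),
    )
```

Accepting any mapping rather than reading `os.environ` directly makes the helper easy to test. The resulting values could then be passed to an S3 client, for example `boto3.client("s3", endpoint_url=settings.endpoint, aws_access_key_id=settings.access_key, aws_secret_access_key=settings.secret_key)`.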

Data science pipelines

In OpenShift AI, a data science pipeline is a workflow that runs scripts or Jupyter notebooks automatically. With pipelines, you can automate the execution of successive steps and store their results. A typical pipeline might include these steps: gathering data, cleaning the data, training a model, evaluating the model, and finally saving the model to S3 storage. You can create pipelines visually with the Elyra pipeline editor.
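The step sequence described above can be illustrated in plain Python. This is a minimal sketch of how each step's output feeds the next; it is not the Kubeflow Pipelines SDK or Elyra, and in OpenShift AI each step would typically run as its own containerized task rather than as a function call. The sample data, the one-variable least-squares model, and the local JSON file standing in for S3 storage are all hypothetical.

```python
import json
from pathlib import Path
from typing import Dict, List, Optional, Tuple

RawRow = Tuple[Optional[float], Optional[float]]
Row = Tuple[float, float]


def gather_data() -> List[RawRow]:
    # Hypothetical sample data; a real step might read from S3 or a database.
    return [(1.0, 2.0), (2.0, 4.1), (None, 5.0), (3.0, 5.9), (4.0, None), (5.0, 10.0)]


def clean_data(rows: List[RawRow]) -> List[Row]:
    # Drop rows that contain missing values.
    return [(x, y) for x, y in rows if x is not None and y is not None]


def train_model(rows: List[Row]) -> Dict[str, float]:
    # Ordinary least-squares fit of y = slope * x + intercept.
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    var = sum((x - mean_x) ** 2 for x, _ in rows)
    slope = cov / var
    return {"slope": slope, "intercept": mean_y - slope * mean_x}


def evaluate_model(model: Dict[str, float], rows: List[Row]) -> float:
    # Mean absolute error; a real pipeline would evaluate on held-out data.
    errors = [abs(model["slope"] * x + model["intercept"] - y) for x, y in rows]
    return sum(errors) / len(errors)


def save_model(model: Dict[str, float], path: Path) -> Path:
    # Local stand-in for uploading the model artifact to S3 storage.
    path.write_text(json.dumps(model))
    return path


def run_pipeline(output: Path) -> float:
    """Run the steps in order, passing each step's output to the next."""
    rows = clean_data(gather_data())
    model = train_model(rows)
    mae = evaluate_model(model, rows)
    save_model(model, output)
    return mae
```

Keeping each step as a separate function with explicit inputs and outputs mirrors how pipeline steps exchange artifacts, which is what makes individual steps easy to rerun or replace.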

Model serving

A model server uses a data connection to download the model file from S3-compatible storage. After the download, the model server exposes the model through REST or gRPC APIs. OpenShift AI uses KServe as its model serving platform and supports model runtimes such as OpenVINO Model Server, Triton, Text Generation Inference Server (TGIS), and Caikit.
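As an illustration of calling such a REST endpoint, the sketch below builds a request in the KServe v2 inference protocol (also known as the Open Inference Protocol) format using only the standard library. It assumes the deployed runtime exposes the v2 REST API, which depends on the runtime and its configuration. The base URL, model name, input name, and FP32 datatype are hypothetical and must match your deployed model; if token authentication is enabled, you would also need to add an `Authorization` header.

```python
import json
import urllib.request
from typing import List, Sequence


def build_infer_request(base_url: str, model_name: str, input_name: str,
                        rows: Sequence[Sequence[float]]) -> urllib.request.Request:
    """Build a POST request in the KServe v2 REST inference format."""
    if not rows:
        raise ValueError("at least one row is required")
    width = len(rows[0])
    if any(len(r) != width for r in rows):
        raise ValueError("all rows must have the same length")
    payload = {
        "inputs": [{
            "name": input_name,          # must match the model's input name
            "shape": [len(rows), width],
            "datatype": "FP32",          # assumed datatype for this example
            # Tensor data sent flattened in row-major order.
            "data": [float(v) for r in rows for v in r],
        }]
    }
    url = f"{base_url.rstrip('/')}/v2/models/{model_name}/infer"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(req: urllib.request.Request, timeout: float = 10.0) -> List[float]:
    # Send the request and return the data of the first output tensor.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["outputs"][0]["data"]
```

Separating request construction from sending keeps the payload logic testable without a running model server; `predict(build_infer_request(...))` would perform the actual call against your deployed endpoint.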

Model monitoring

With the monitoring and logging features provided by OpenShift AI, you can track the performance of your data science workloads and troubleshoot potential problems.

See Red Hat OpenShift AI in action: try the solution pattern

Solution patterns from Red Hat help you understand how to build real-world use cases with reproducible demos, deployment scripts, and guides.

In the Machine Learning and Data Science Pipelines using OpenShift AI Solution Pattern, we demonstrate how to build, train, and deploy a machine learning model using Red Hat OpenShift AI. The model is then consumed through its REST endpoint by a Python application deployed on the OpenShift platform.

Conclusion

For data science teams looking to improve collaboration, streamline workflows, and deploy models into production with less friction, Red Hat OpenShift AI is a game-changer. It gives data scientists the tools and infrastructure they need to focus on what they do best, extracting important insights from data, while also providing containerization, Kubernetes integration, and capabilities such as data science pipelines, model serving, and monitoring.

Go ahead and try out the Machine Learning and Data Science Pipelines using OpenShift AI Solution Pattern.