What if we told you there's a GitHub repository that makes building, running, and developing AI-powered applications on your laptop as easy as making toast? No cloud-based AI platform required, no specialized hardware accelerators needed, and all with the most up-to-date open source models. If you like what’s cooking, dig in to explore AI Lab Recipes.

What is AI Lab Recipes?

The ai-lab-recipes repository is the product of a collaboration between data scientists and application developers, bringing together best practices from both worlds. The result is a set of containerized AI-powered applications and tools that are fun to build, easy to run, and convenient to fork and make your own.

AI Lab Recipes started as the home for the sample applications, or recipes, of the Podman Desktop AI Lab extension. There’s an excellent post that describes the AI Lab extension; in this article, we'll explore AI Lab Recipes further, using the code generation application as an example throughout. To follow along, clone the ai-lab-recipes repository locally. If you don’t have Podman installed yet, head to podman.io.

Recipes

There are several recipes to help developers quickly prototype new AI and large language model (LLM)-based applications. Each recipe includes the same main ingredients: models, model servers, and AI interfaces. These are combined in various ways, packaged as container images, and run as pods with Podman. The recipes are grouped into categories (food groups) by AI function. The current recipe groups are audio, computer vision, multimodal, and natural language processing.

Let’s take a closer look at the code generation application in the natural language processing group. Keep in mind that every example application follows this same pattern. The tree below shows the file structure that gives each recipe its name and flavor.

```
$ tree recipes/natural_language_processing/codegen/
recipes/natural_language_processing/codegen/
├── Makefile
├── README.md
├── ai-lab.yaml
├── app
│   ├── Containerfile
│   ├── codegen-app.py
│   └── requirements.txt
├── bootc
│   ├── Containerfile
│   ├── Containerfile.nocache
│   └── README.md
├── build -> bootc/build
└── quadlet
    ├── README.md
    ├── codegen.image -> ../../../common/quadlet/app.image
    ├── codegen.kube
    └── codegen.yaml
```

A Makefile provides targets that automate building and running the application. Refer to the Makefile documentation for a complete explanation. For example, make build uses podman build to build the AI interface image from the Containerfile in app/. To give the image a custom tag, set APP_IMAGE:

```
$ cd recipes/natural_language_processing/codegen
$ make APP_IMAGE=quay.io/your-repo/codegen:tag build
```

Each recipe includes a definition file, ai-lab.yaml, that the Podman Desktop AI Lab extension uses to build and run the application. The app/ folder contains the code and files required to build the AI interface. The bootc/ folder contains the files needed to embed the codegen application as a service within a bootable container image. Bootable container images and the bootc/ folder deserve their own post; to learn more, see Dan Walsh's Image Mode for Red Hat Enterprise Linux Quick Start: AI Inference.
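Because every recipe carries an ai-lab.yaml definition file at its root, a few lines of Python can list the recipes in a local clone. This is a minimal sketch that assumes the layout seen above, recipes/<group>/<recipe>/ai-lab.yaml; the find_recipes helper is hypothetical and not part of the repository.

```python
from pathlib import Path


def find_recipes(repo_root):
    """Return sorted (group, recipe) pairs for every recipe in a clone of ai-lab-recipes.

    Assumes each recipe lives at recipes/<group>/<recipe>/ and carries an
    ai-lab.yaml definition file, as recipes/natural_language_processing/codegen does.
    """
    recipes_dir = Path(repo_root) / "recipes"
    found = []
    # The pattern matches exactly two directory levels below recipes/.
    for definition in sorted(recipes_dir.glob("*/*/ai-lab.yaml")):
        recipe_dir = definition.parent
        found.append((recipe_dir.parent.name, recipe_dir.name))
    return found
```

Run from the directory that contains your clone, find_recipes("ai-lab-recipes") should include ("natural_language_processing", "codegen") among its results.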

Finally, the quadlet/ folder in each recipe provides templates for generating a systemd service that manages a Podman pod running the AI application. The service is most useful when paired with bootable container images, but as you’ll see below, the generated pod YAML can also be used to run the application as a pod locally. Now that the basic format of a recipe has been laid out, it’s time to gather the main ingredients: the model, the model server, and the AI interface.

Model

The first ingredient needed for any AI-powered application is an AI model. The models recommended in ai-lab-recipes are hosted on Hugging Face and released under the Apache 2.0 or MIT license. The models/ folder provides automation for downloading models, as well as a Containerfile for building images that contain the bare minimum needed to serve a model.

For the code generation example, we recommend mistral-7b-code-16k-qlora. All the models recommended in ai-lab-recipes are quantized GGUF files using Q4_K_M quantization. They range from 3 to 5 GB in size (mistral-7b-code is 4.37 GB) and require 6-8 GB of RAM. In short, the data scientists have spoken: the model recommended for each recipe suits its use case and should also run well on your laptop. That said, ai-lab-recipes makes switching out a model easy and encourages developers to experiment with different models to find the one that works best for them.
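The file sizes above follow from simple arithmetic: a quantized model file is roughly its parameter count times the average number of bits stored per weight, divided by 8. The sketch below assumes about 7.24 billion parameters for a Mistral 7B model and an average of about 4.85 bits per weight for Q4_K_M. Both are approximations, not figures taken from ai-lab-recipes.

```python
def estimate_gguf_size_gb(n_params, bits_per_weight):
    """Rough size of a quantized model file in decimal gigabytes (1 GB = 10**9 bytes).

    Ignores the GGUF header and metadata, which are small next to the weights.
    """
    return n_params * bits_per_weight / 8 / 1e9


# Assumed figures: ~7.24e9 parameters for Mistral 7B, ~4.85 bits/weight for Q4_K_M.
quantized = estimate_gguf_size_gb(7.24e9, 4.85)
full_16bit = estimate_gguf_size_gb(7.24e9, 16)
print(f"Q4_K_M: {quantized:.2f} GB, 16-bit: {full_16bit:.2f} GB")
```

The estimate lands close to the 4.37 GB quoted for mistral-7b-code. The same model stored at 16 bits per weight would need roughly 14.5 GB, which is why the quantized files are the ones that fit comfortably on a laptop. Memory use at runtime is higher than the file size because the model server also allocates a context (KV) cache.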

Model server

A model isn’t all that useful in an application unless it’s being served. A model server is a program that serves machine-learning models, such as LLMs, and makes their functions available via an API. This makes it easy for developers to incorporate AI into their applications. The model_servers folder in ai-lab-recipes provides descriptions and code for building several of these model servers.

Many of the recipes use the llamacpp_python model server. This server can be used for various generative AI applications and with many different models. That said, each sample application can be paired with a variety of model servers, and model servers can be built for different hardware accelerators and GPU toolkits, such as CUDA, ROCm, and Vulkan. The llamacpp_python model server images are based on the llama-cpp-python project, which provides Python bindings for llama.cpp. The result is a Python-based, OpenAI API-compatible model server that can run LLMs of various sizes locally on Linux, Windows, or macOS.
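Because the server speaks the OpenAI API, any HTTP client can call it. The following standard-library sketch assumes a llamacpp_python server published on localhost:8001 (the port used in the codegen pod definition) and the OpenAI-style /v1/chat/completions route. The system prompt, temperature, and helper names are illustrative choices, not taken from the recipes.

```python
import json
import urllib.request


def build_chat_request(base_url, prompt, max_tokens=256):
    """Build a POST request for an OpenAI-compatible chat completions endpoint."""
    payload = {
        "messages": [
            {"role": "system", "content": "You are a helpful coding assistant."},
            {"role": "user", "content": prompt},
        ],
        "max_tokens": max_tokens,
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url, prompt):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(base_url, prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the model server running, ask("http://localhost:8001", "Write a Python function that reverses a string.") should return the model's reply as a string. Keeping request construction separate from sending makes the payload easy to inspect or test without a server.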

The llamacpp_python model server requires models to be converted from their original format, typically a set of *.bin or *.safetensors files, into a single GGUF-formatted file. Many models are already available in GGUF format on Hugging Face. If you can’t find an existing GGUF version of the model you want to use, you can convert it yourself with the model converter utility available in this repo.
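Before pointing the model server at a file, you can check that it really is GGUF. According to the GGUF specification, a file starts with the 4-byte magic GGUF, followed by a little-endian uint32 version and, from version 2 on, two uint64 counts (tensors, then metadata key/value pairs). This sketch reads only those 24 header bytes and does not validate the rest of the file. The read_gguf_header name is hypothetical.

```python
import struct

# GGUF v2+ header: magic b"GGUF", uint32 version, uint64 tensor count,
# uint64 metadata key/value count, all little-endian.
_GGUF_HEADER = struct.Struct("<4sIQQ")


def read_gguf_header(path):
    """Return (version, tensor_count, metadata_kv_count) for a GGUF file.

    Raises ValueError if the file does not start with a GGUF v2+ header.
    """
    with open(path, "rb") as f:
        raw = f.read(_GGUF_HEADER.size)
    if len(raw) < _GGUF_HEADER.size:
        raise ValueError(f"{path}: too short to be a GGUF file")
    magic, version, n_tensors, n_kv = _GGUF_HEADER.unpack(raw)
    if magic != b"GGUF":
        raise ValueError(f"{path}: not a GGUF file (starts with {magic!r})")
    if version < 2:
        # GGUF v1 stored the two counts as uint32, so this layout does not apply.
        raise ValueError(f"{path}: GGUF version {version} is not supported here")
    return version, n_tensors, n_kv
```

A file still in *.safetensors or *.bin form fails the magic check, which signals that it needs converting first.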

AI interface

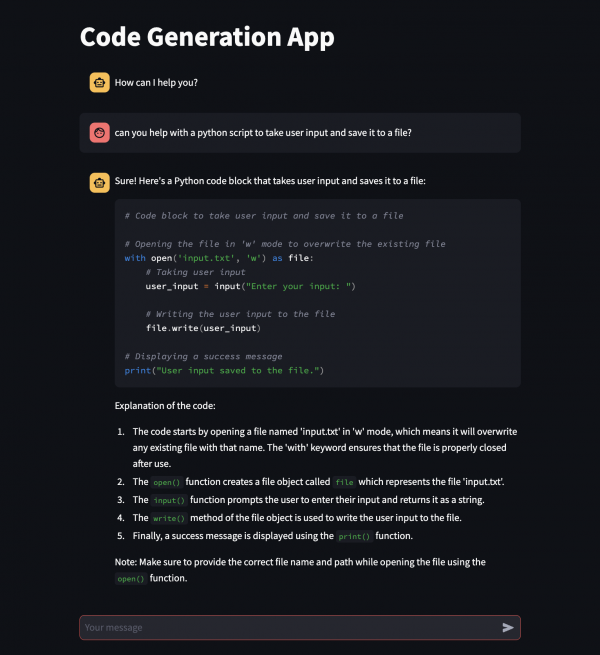

The final ingredient, the icing on the cake as they say, is the AI interface: a spiffy UI along with the client code needed to interact with the model through the API endpoint provided by the model server. Each recipe keeps a Containerfile and the necessary code in its app/ folder, as the code generation example does. Most of the recipes use Streamlit for their UI. Streamlit is an open source framework that is incredibly easy to use and really fun to interact with; we think you’ll agree. Just like everything else, though, the Streamlit-based container in any recipe is easy to swap out for your preferred front-end tool. We went with the boxed cake here, but nothing is stopping you from whipping up something from scratch!
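Chat UIs like these usually show the answer while it is being generated. When a request sets "stream": true, an OpenAI-compatible server replies with server-sent events: lines of the form data: {...} whose choices[].delta.content fields carry text fragments, ending with data: [DONE]. The sketch below turns such lines into text independently of any UI framework. It illustrates the protocol and is not necessarily how codegen-app.py handles streaming, since the recipes may rely on a client library. The helper name is hypothetical.

```python
import json


def iter_stream_text(lines):
    """Yield text fragments from OpenAI-style streaming (server-sent event) lines.

    Accepts str or bytes lines, e.g. an HTTP response object iterated line by line.
    """
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        # Blank separator lines and non-data fields (comments, event names) are skipped.
        if not line.startswith("data:"):
            continue
        data = line[len("data:"):].strip()
        # The stream ends with a literal [DONE] marker instead of JSON.
        if data == "[DONE]":
            return
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            # The first chunk often carries only the role, so content may be missing.
            text = choice.get("delta", {}).get("content")
            if text:
                yield text
```

In a Streamlit app, each yielded fragment could be appended to a placeholder. With urllib, the object returned by urlopen can be passed in directly, because iterating over it yields raw byte lines.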

Quadlets

Now that we’ve got our ingredients in place, let’s cook up our first AI application. Aside from the Podman Desktop AI Lab extension, the quickest way to start an application from ai-lab-recipes is to generate a pod definition YAML from the templates in each recipe's quadlet/ folder. What's a quadlet? From this post we know “Quadlet is a tool for running Podman containers under systemd in an optimal way by allowing containers to run in a declarative way.” In this example, we won’t actually run our application under systemd. Instead, we'll use the quadlet Makefile target that every sample application includes to generate a pod definition, and then launch the pod with podman kube play. If you’ve never tried podman kube play before, you’re about to experience the convenience of running multiple containers together as a pod.

Are you ready? All that is required to launch a local AI-powered code-generation assistant is the following:

```
$ cd recipes/natural_language_processing/codegen
$ make quadlet
$ podman kube play build/codegen.yaml
```

It will take a few minutes to download the images. While we’re waiting, let’s explain what just happened. The make quadlet command generated the following files:

```
$ ls -al build
lrwxr-xr-x 1 somalley staff 11B Apr 22 17:21 build -> bootc/build
$ ls -al bootc/build/
total 24
drwxr-xr-x 5 somalley staff 160B Apr 22 17:21 .
drwxr-xr-x 6 somalley staff 192B Apr 22 17:21 ..
-rw-r--r-- 1 somalley staff 209B Apr 22 17:21 codegen.image
-rw-r--r-- 1 somalley staff 330B Apr 22 17:21 codegen.kube
-rw-r--r-- 1 somalley staff 970B Apr 22 17:21 codegen.yaml
```

With Podman’s Quadlet feature, unit files like these placed in the /usr/share/containers/systemd/system directory are turned into systemd services; codegen.kube, for example, would produce a service named codegen. That is beyond the scope of this post, but for more information, check out Image mode for Red Hat Enterprise Linux quick start: AI inference.
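To see what a Quadlet unit looks like, the sketch below renders a minimal .kube file that points Quadlet at codegen.yaml. It is not the repository's codegen.kube, which may set more options. The Description text and WantedBy=default.target are illustrative assumptions, and render_kube_unit is a hypothetical helper. configparser is configured to keep key case (systemd keys are case-sensitive), to skip %-interpolation (systemd uses % for its own specifiers), and to write Key=Value without spaces.

```python
import configparser
import io


def render_kube_unit(name, yaml_file, description=None):
    """Render a minimal Quadlet .kube unit that runs the pod defined in yaml_file."""
    unit = configparser.ConfigParser(interpolation=None)  # leave systemd % specifiers alone
    unit.optionxform = str  # keep key case; systemd keys are case-sensitive
    unit["Unit"] = {"Description": description or f"{name} AI application pod"}
    unit["Kube"] = {"Yaml": yaml_file}  # path may be relative to the .kube file
    unit["Install"] = {"WantedBy": "default.target"}
    out = io.StringIO()
    unit.write(out, space_around_delimiters=False)
    return out.getvalue()


print(render_kube_unit("codegen", "codegen.yaml"))
```

The printed unit has [Unit], [Kube] (with Yaml=codegen.yaml), and [Install] sections. Saved as codegen.kube in a Quadlet directory, a unit like this would become codegen.service.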

For now, codegen.yaml is the only file necessary. It is a pod definition that specifies a model init container, a model server container, and an AI interface container. The init container copies the model file into a shared emptyDir volume, which the model server mounts at /model. Take a look!

```
$ cat bootc/build/codegen.yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: codegen
  name: codegen
spec:
  initContainers:
  - name: model-file
    image: quay.io/ai-lab/mistral-7b-code-16k-qlora:latest
    command: ['/usr/bin/install', "/model/model.file", "/shared/"]
    volumeMounts:
    - name: model-file
      mountPath: /shared
  containers:
  - env:
    - name: MODEL_ENDPOINT
      value: http://0.0.0.0:8001
    image: quay.io/ai-lab/codegen:latest
    name: codegen-inference
    ports:
    - containerPort: 8501
      hostPort: 8501
    securityContext:
      runAsNonRoot: true
  - env:
    - name: HOST
      value: 0.0.0.0
    - name: PORT
      value: 8001
    - name: MODEL_PATH
      value: /model/model.file
    image: quay.io/ai-lab/llamacpp_python:latest
    name: codegen-model-service
    ports:
    - containerPort: 8001
      hostPort: 8001
    securityContext:
      runAsNonRoot: true
    volumeMounts:
    - name: model-file
      mountPath: /model
  volumes:
  - name: model-file
    emptyDir: {}
```

The images referenced under quay.io/ai-lab in the pod definition above are public, so you can run this application as is. The images for all models, model servers, and AI interfaces are built for both x86_64 (amd64) and arm64 architectures.
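The pod wires its parts together in a few places: the init container and the model server mount the same emptyDir volume, MODEL_PATH points into that mount, and MODEL_ENDPOINT reaches the server on port 8001 because containers in a pod share one network namespace. The sketch below checks those rules on a trimmed copy of codegen.yaml written as a Python dict. The check_pod_spec helper is hypothetical, and loading the real YAML file would need a parser such as PyYAML, which is not used here.

```python
from urllib.parse import urlsplit

# Trimmed copy of build/codegen.yaml as a Python dict (only the fields checked below).
POD_SPEC = {
    "initContainers": [
        {"name": "model-file",
         "volumeMounts": [{"name": "model-file", "mountPath": "/shared"}]},
    ],
    "containers": [
        {"name": "codegen-inference",
         "env": [{"name": "MODEL_ENDPOINT", "value": "http://0.0.0.0:8001"}],
         "ports": [{"containerPort": 8501, "hostPort": 8501}]},
        {"name": "codegen-model-service",
         "env": [{"name": "PORT", "value": "8001"},
                 {"name": "MODEL_PATH", "value": "/model/model.file"}],
         "ports": [{"containerPort": 8001, "hostPort": 8001}],
         "volumeMounts": [{"name": "model-file", "mountPath": "/model"}]},
    ],
    "volumes": [{"name": "model-file", "emptyDir": {}}],
}


def check_pod_spec(spec):
    """Return a list of wiring problems in a pod spec; an empty list means none found."""
    problems = []
    containers = spec.get("containers", [])
    declared = {v["name"] for v in spec.get("volumes", [])}

    # 1. Every volume mount must refer to a declared volume.
    for c in spec.get("initContainers", []) + containers:
        for m in c.get("volumeMounts", []):
            if m["name"] not in declared:
                problems.append(f"{c['name']}: undeclared volume {m['name']!r}")

    # 2. Containers share one network namespace, so container ports must be unique.
    owners = {}
    for c in containers:
        for p in c.get("ports", []):
            port = p["containerPort"]
            if port in owners:
                problems.append(f"{c['name']}: port {port} already used by {owners[port]}")
            owners[port] = c["name"]

    for c in containers:
        env = {e["name"]: str(e.get("value", "")) for e in c.get("env", [])}
        # 3. MODEL_ENDPOINT must point at a port some container in the pod exposes.
        if "MODEL_ENDPOINT" in env:
            port = urlsplit(env["MODEL_ENDPOINT"]).port
            if port not in owners:
                problems.append(f"{c['name']}: nothing in the pod listens on port {port}")
        # 4. MODEL_PATH must sit on one of this container's mounted volumes.
        if "MODEL_PATH" in env:
            roots = [m["mountPath"].rstrip("/") + "/" for m in c.get("volumeMounts", [])]
            if not any(env["MODEL_PATH"].startswith(r) for r in roots):
                problems.append(f"{c['name']}: MODEL_PATH is not on a mounted volume")
    return problems


print(check_pod_spec(POD_SPEC) or "pod wiring looks consistent")
```

For the spec above, this prints pod wiring looks consistent. Change the MODEL_ENDPOINT port or drop the volumes list and the corresponding problem is reported instead.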

To manage the codegen pod, use the following:

```
podman pod list
podman pod start codegen
podman pod stop codegen
podman pod rm codegen
```

To inspect the containers that make up the codegen pod:

```
podman ps
podman logs <container-id>
```

To interact with the codegen application (finally!), visit http://localhost:8501. You should see something like Figure 1, where you can ask your new code assistant for help throughout your day, maybe even to create new AI-powered applications. If you do, be sure to contribute them back to ai-lab-recipes. Unless, of course, you prefer to keep your concoctions secret sauce.
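On the first run, the page at http://localhost:8501 may not respond until the images are pulled and the model is loaded. The sketch below polls an HTTP endpoint until it answers. It assumes the llamacpp_python server responds to GET /v1/models on port 8001, as OpenAI-compatible servers typically do. The helper names and the 10-minute default timeout are illustrative.

```python
import http.client
import time
import urllib.request


def http_ok(url, timeout=5.0):
    """Return True if GET url answers with a 2xx status, False if it is not reachable yet."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (OSError, http.client.HTTPException):
        # URLError, HTTPError, refused connections, and timeouts are all OSError subclasses.
        return False


def wait_until(check, timeout=600.0, interval=5.0, clock=time.monotonic, sleep=time.sleep):
    """Call check() every interval seconds until it returns True or timeout expires."""
    deadline = clock() + timeout
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)
```

For example, wait_until(lambda: http_ok("http://localhost:8001/v1/models")) followed by wait_until(lambda: http_ok("http://localhost:8501")) returns True once both the model server and the UI are up. The clock and sleep parameters exist only so the loop can be tested without real waiting.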