Red Hat Advanced Cluster Management for Kubernetes governance provides an extensible framework for enterprises to introduce their own security and configuration policies that can be applied to managed Red Hat OpenShift or Kubernetes clusters. For more information on Red Hat Advanced Cluster Management policies, I recommend that you read the Comply to standards using policy based governance and Implement Policy-based Governance Using Configuration Management blogs.

As part of the Red Hat Advanced Cluster Management and Red Hat OpenShift Platform Plus subscriptions, there is a supported Gatekeeper Operator that was recently updated to Gatekeeper 3.11.1. In this article, let's explore the Gatekeeper Operator and its integration with Red Hat Advanced Cluster Management, which helps you achieve multicluster admission control and auditing.

Gatekeeper overview

Gatekeeper is a Kubernetes admission controller that leverages the Open Policy Agent (OPA) engine. It enforces policies through validating and mutating Kubernetes admission webhooks. It can also audit the existing state of the cluster and report on objects that violate a policy. In this article, you'll learn how to use Gatekeeper to audit existing objects and block new objects that don't comply with your policies.

A new type of validation rule is defined using a ConstraintTemplate object, which contains the OPA Rego code and the accepted parameters. Based on that ConstraintTemplate, Gatekeeper automatically generates and applies a custom resource definition (CRD) so that you can create constraints as Kubernetes objects.

The following example from the Gatekeeper documentation shows a ConstraintTemplate definition. The embedded Rego validates that the labels listed in the labels parameter are defined on the object under review:

    apiVersion: templates.gatekeeper.sh/v1
    kind: ConstraintTemplate
    metadata:
      name: k8srequiredlabels
    spec:
      crd:
        spec:
          names:
            kind: K8sRequiredLabels
          validation:
            # Schema for the `parameters` field
            openAPIV3Schema:
              type: object
              properties:
                labels:
                  type: array
                  items:
                    type: string
      targets:
        - target: admission.k8s.gatekeeper.sh
          rego: |
            package k8srequiredlabels
            violation[{"msg": msg, "details": {"missing_labels": missing}}] {
              provided := {label | input.review.object.metadata.labels[label]}
              required := {label | label := input.parameters.labels[_]}
              missing := required - provided
              count(missing) > 0
              msg := sprintf("you must provide labels: %v", [missing])
            }

After defining the ConstraintTemplate, you can define constraints based on the template using the CRD generated and applied by Gatekeeper. The example constraint below requires that all namespaces have the team label set to any value. Notice that the enforcementAction is set to deny. This setting prevents namespaces from being created without the team label. The enforcementAction could also be set to warn, which does not deny the request but warns users when they create or update a namespace that does not have the team label set. The last option is dryrun, which does not impact admission control. All three options allow Gatekeeper to audit existing namespaces against the constraint. See the following example of these settings:

    apiVersion: constraints.gatekeeper.sh/v1beta1
    kind: K8sRequiredLabels
    metadata:
      name: ns-must-have-team
    spec:
      enforcementAction: deny
      match:
        kinds:
          - apiGroups:
              - ""
            kinds:
              - Namespace
      parameters:
        labels:
          - team

After applying the constraint, if you request to create a namespace with kubectl create namespace test-ns, then it is denied with the following message:

    Error from server (Forbidden): admission webhook "validation.gatekeeper.sh" denied the request: [ns-must-have-team] you must provide labels: {"team"}

To view the audit violations for existing namespaces, check the constraint's status with the following command:

    kubectl get k8srequiredlabels ns-must-have-team -o yaml

Note that although there are 81 total violations, Gatekeeper records only a subset of them so that the K8sRequiredLabels object does not get too large. To raise this limit, set the spec.audit.constraintViolationLimit field on the Gatekeeper object used to configure the Gatekeeper Operator. The output should look similar to the following:

    apiVersion: constraints.gatekeeper.sh/v1beta1
    kind: K8sRequiredLabels
    metadata:
      name: ns-must-have-team
    spec:
      enforcementAction: deny
      match:
        kinds:
          - apiGroups:
              - ""
            kinds:
              - Namespace
      parameters:
        labels:
          - team
    status:
      auditTimestamp: "2023-12-05T19:08:45Z"
      totalViolations: 81
      violations:
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: default
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: default-broker
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: e2e-rbac-test-1
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: e2e-rbac-test-2
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: hive
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: hypershift
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: kube-node-lease
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: kube-public
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: kube-system
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: local-cluster
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: multicluster-engine
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: open-cluster-management
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: open-cluster-management-agent
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: open-cluster-management-agent-addon
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: open-cluster-management-global-set
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: open-cluster-management-hub
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: openshift
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: openshift-apiserver
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: openshift-apiserver-operator
          version: v1
        - enforcementAction: deny
          group: ""
          kind: Namespace
          message: 'you must provide labels: {"team"}'
          name: openshift-authentication
          version: v1

As shown above, Gatekeeper is highly customizable and can be used to prevent policy violations while also detecting existing violations. Doing this across multiple clusters is challenging, and the Red Hat Advanced Cluster Management integration with Gatekeeper helps by making violations for all clusters visible in one location.
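The violation limit mentioned earlier is configured on the Gatekeeper object rather than on the constraint. As a minimal sketch, assuming the Gatekeeper object uses the apiVersion and name shown in the installation policy later in this article, the override might look like the following. The value 100 is purely illustrative and not a recommendation.

```yaml
apiVersion: operator.gatekeeper.sh/v1alpha1
kind: Gatekeeper
metadata:
  name: gatekeeper
spec:
  audit:
    # Illustrative value only: the maximum number of violations
    # recorded in each constraint's status.
    constraintViolationLimit: 100
```

Because every recorded violation is stored in the constraint's status, a higher limit makes the constraint object larger, so it is probably best to raise the value only as far as you need.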

Installing Gatekeeper using Red Hat Advanced Cluster Management

The simplest way to install the latest Gatekeeper Operator for all clusters is to use the "Install Red Hat Gatekeeper Operator policy" policy template in the Red Hat Advanced Cluster Management console. This both installs the Operator when enforced and reports on the installation status.

To install the Gatekeeper Operator, complete the following steps:

- Go to the Governance navigation item > Policies tab > and click the Create policy button.

- For the Name field, enter

install-gatekeeper. - For the Namespace field, select open-cluster-management-global-set or a namespace configured for Red Hat Advanced Cluster Management policies.

- Click Next > Remediation > select Enforce for the policy to enforce the installation of the Gatekeeper Operator when the policy is deployed.

- Click the Add policy template drop-down.

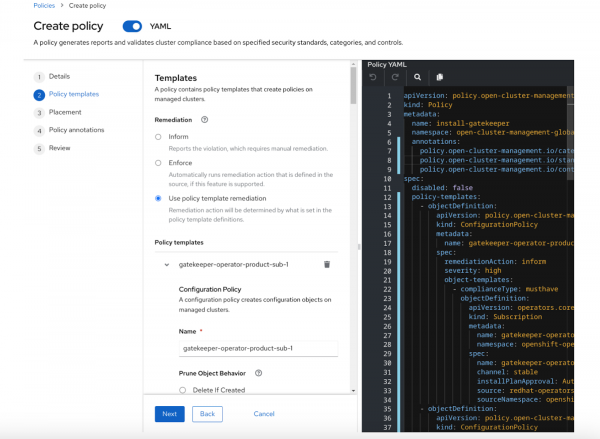

- Select Install Red Hat Gatekeeper Operator policy. You then see a wizard like the example shown in Figure 1.

- To generate a placement that applies the policy to all registered clusters, click Next or the Placement wizard step > New placement > Review wizard step.

- Go to the YAML sidebar and view the YAML. Verify that the YAML looks like the following:

    apiVersion: policy.open-cluster-management.io/v1
    kind: Policy
    metadata:
      name: install-gatekeeper
      namespace: open-cluster-management-global-set
      annotations:
        policy.open-cluster-management.io/categories: CM Configuration Management
        policy.open-cluster-management.io/standards: NIST SP 800-53
        policy.open-cluster-management.io/controls: CM-2 Baseline Configuration
    spec:
      disabled: false
      remediationAction: enforce
      policy-templates:
        - objectDefinition:
            apiVersion: policy.open-cluster-management.io/v1
            kind: ConfigurationPolicy
            metadata:
              name: gatekeeper-operator-product-sub
            spec:
              remediationAction: inform
              severity: high
              object-templates:
                - complianceType: musthave
                  objectDefinition:
                    apiVersion: operators.coreos.com/v1alpha1
                    kind: Subscription
                    metadata:
                      name: gatekeeper-operator-product
                      namespace: openshift-operators
                    spec:
                      name: gatekeeper-operator-product
                      channel: stable
                      installPlanApproval: Automatic
                      source: redhat-operators
                      sourceNamespace: openshift-marketplace
        - objectDefinition:
            apiVersion: policy.open-cluster-management.io/v1
            kind: ConfigurationPolicy
            metadata:
              name: gatekeeper-operator-status
            spec:
              remediationAction: inform
              severity: high
              object-templates:
                - complianceType: musthave
                  objectDefinition:
                    apiVersion: operators.coreos.com/v1alpha1
                    kind: ClusterServiceVersion
                    metadata:
                      namespace: openshift-gatekeeper-system
                    spec:
                      displayName: Gatekeeper Operator
                    status:
                      phase: Succeeded
        - objectDefinition:
            apiVersion: policy.open-cluster-management.io/v1
            kind: ConfigurationPolicy
            metadata:
              name: gatekeeper
            spec:
              remediationAction: inform
              severity: high
              object-templates:
                - complianceType: musthave
                  objectDefinition:
                    apiVersion: operator.gatekeeper.sh/v1alpha1
                    kind: Gatekeeper
                    metadata:
                      name: gatekeeper
                    spec:
                      audit:
                        logLevel: INFO
                        replicas: 1
                      validatingWebhook: Enabled
                      mutatingWebhook: Disabled
                      webhook:
                        emitAdmissionEvents: Enabled
                        logLevel: INFO
                        replicas: 2
        - objectDefinition:
            apiVersion: policy.open-cluster-management.io/v1
            kind: ConfigurationPolicy
            metadata:
              name: gatekeeper-status
            spec:
              remediationAction: inform
              severity: high
              object-templates:
                - complianceType: musthave
                  objectDefinition:
                    apiVersion: v1
                    kind: Pod
                    metadata:
                      namespace: openshift-gatekeeper-system
                      labels:
                        control-plane: audit-controller
                    status:
                      phase: Running
                - complianceType: musthave
                  objectDefinition:
                    apiVersion: v1
                    kind: Pod
                    metadata:
                      namespace: openshift-gatekeeper-system
                      labels:
                        control-plane: controller-manager
                    status:
                      phase: Running
    ---
    apiVersion: cluster.open-cluster-management.io/v1beta1
    kind: Placement
    metadata:
      name: install-gatekeeper-placement
      namespace: open-cluster-management-global-set
    spec: {}
    ---
    apiVersion: policy.open-cluster-management.io/v1
    kind: PlacementBinding
    metadata:
      name: install-gatekeeper-placement
      namespace: open-cluster-management-global-set
    placementRef:
      name: install-gatekeeper-placement
      apiGroup: cluster.open-cluster-management.io
      kind: Placement
    subjects:
      - name: install-gatekeeper
        apiGroup: policy.open-cluster-management.io
        kind: Policy

- Click Submit.

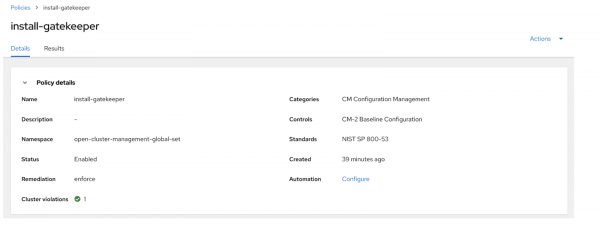

After the policy has been deployed to all your clusters and the Gatekeeper Operator has successfully been installed, you can see the policy marked as compliant, as shown in Figure 2.

Using the Red Hat Advanced Cluster Management Gatekeeper integration

The Red Hat Advanced Cluster Management 2.8+ Gatekeeper integration enables multicluster distribution of Gatekeeper ConstraintTemplate objects and constraints, and aggregates Gatekeeper audit violations data back to the hub for an entire Kubernetes fleet. To use this integration, you must use a Red Hat Advanced Cluster Management policy to deploy the Gatekeeper constraint, and optionally the ConstraintTemplate, to the managed clusters.

Using the example from the Gatekeeper Overview section, create a Red Hat Advanced Cluster Management policy as shown in the following example. Notice the policy-templates array includes both the ConstraintTemplate and the ns-must-have-team constraint. The Red Hat Advanced Cluster Management policy has the remediationAction of enforce, which translates to enforcementAction: deny being set on the constraint. If the Red Hat Advanced Cluster Management policy had the remediationAction of inform, it would have translated to enforcementAction: warn being set on the constraint. If the Red Hat Advanced Cluster Management policy had no remediationAction set, then the enforcementAction set on the constraint would be honored.

    apiVersion: policy.open-cluster-management.io/v1
    kind: Policy
    metadata:
      name: ns-teams-label
      namespace: open-cluster-management-global-set
    spec:
      disabled: false
      remediationAction: enforce
      policy-templates:
        - objectDefinition:
            apiVersion: templates.gatekeeper.sh/v1
            kind: ConstraintTemplate
            metadata:
              name: k8srequiredlabels
            spec:
              crd:
                spec:
                  names:
                    kind: K8sRequiredLabels
                  validation:
                    openAPIV3Schema:
                      type: object
                      properties:
                        labels:
                          type: array
                          items:
                            type: string
              targets:
                - target: admission.k8s.gatekeeper.sh
                  rego: |
                    package k8srequiredlabels
                    violation[{"msg": msg, "details": {"missing_labels": missing}}] {
                      provided := {label | input.review.object.metadata.labels[label]}
                      required := {label | label := input.parameters.labels[_]}
                      missing := required - provided
                      count(missing) > 0
                      msg := sprintf("you must provide labels: %v", [missing])
                    }
        - objectDefinition:
            apiVersion: constraints.gatekeeper.sh/v1beta1
            kind: K8sRequiredLabels
            metadata:
              name: ns-must-have-team
            spec:
              match:
                kinds:
                  - apiGroups:
                      - ""
                    kinds:
                      - Namespace
              parameters:
                labels:
                  - team
    ---
    apiVersion: cluster.open-cluster-management.io/v1beta1
    kind: Placement
    metadata:
      name: ns-teams-label-placement
      namespace: open-cluster-management-global-set
    spec: {}
    ---
    apiVersion: policy.open-cluster-management.io/v1
    kind: PlacementBinding
    metadata:
      name: ns-teams-label-placement
      namespace: open-cluster-management-global-set
    placementRef:
      name: ns-teams-label-placement
      apiGroup: cluster.open-cluster-management.io
      kind: Placement
    subjects:
      - name: ns-teams-label
        apiGroup: policy.open-cluster-management.io
        kind: Policy

After the policy is applied and Gatekeeper has performed the audit on the managed clusters, you can view the audit results from the Red Hat Advanced Cluster Management console. To view the audit results, complete the following steps:

- Go to the Governance navigation item.

- Click on the Policies tab > the

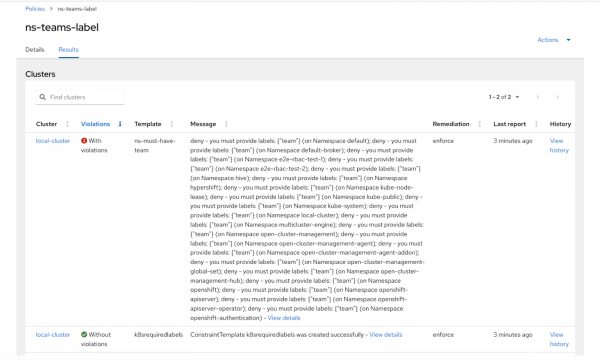

ns-teams-labelpolicy > the Results tab. - View the compliance results. It should look similar to the example shown in Figure 3.

- Notice that the

ns-must-have-teamtemplate is noncompliant and its message contains all the audit failures detected by Gatekeeper. - Click View details for the

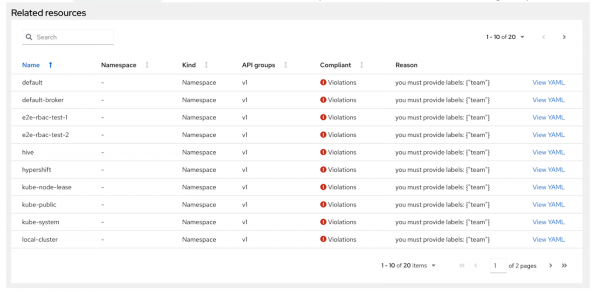

ns-must-have-teampolicy template. - Notice that the Related resources section table contains all the namespaces that failed, as shown in Figure 4.

- Notice that by default, the namespace contains a maximum of 20 results. To increase this limit, see the Gatekeeper Overview section.

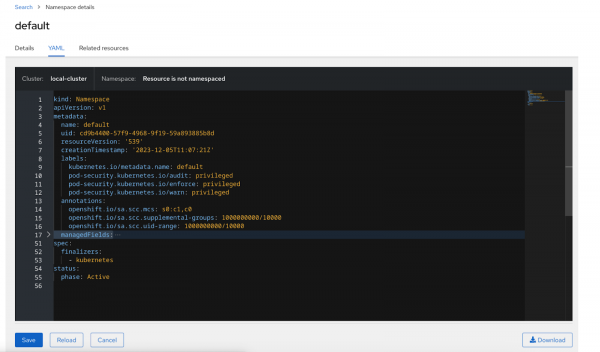

- From the table, click any of the View YAML links to utilize Red Hat Advanced Cluster Management Search to view the YAML of namespaces that failed the audit on the managed cluster. For example, if you click on View YAML for the default namespace, then you would see the YAML shown in Figure 5.

Note

If the Red Hat Advanced Cluster Management policy were to be deleted, the ConstraintTemplate and constraint would be removed from the managed clusters.
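As described earlier in this section, when the Red Hat Advanced Cluster Management policy has no remediationAction, the enforcementAction on the constraint itself is honored. The following is a minimal sketch of an audit-only variant that relies on that behavior. It assumes the k8srequiredlabels ConstraintTemplate is already present on the managed clusters (for example, deployed by another policy), and the policy name ns-teams-label-dryrun is hypothetical. A Placement and PlacementBinding like the ones shown above would still be needed.

```yaml
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: ns-teams-label-dryrun # hypothetical name for illustration
  namespace: open-cluster-management-global-set
spec:
  disabled: false
  # No remediationAction is set here, so the enforcementAction
  # on the constraint below is used as-is.
  policy-templates:
    - objectDefinition:
        apiVersion: constraints.gatekeeper.sh/v1beta1
        kind: K8sRequiredLabels
        metadata:
          name: ns-must-have-team
        spec:
          # dryrun does not affect admission control; violations
          # are still reported through the Gatekeeper audit.
          enforcementAction: dryrun
          match:
            kinds:
              - apiGroups:
                  - ""
                kinds:
                  - Namespace
          parameters:
            labels:
              - team
```

A variant like this can be useful for measuring how many existing namespaces would fail before switching the constraint to warn or deny.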

Conclusion

Gatekeeper is a powerful, flexible, and performant Kubernetes policy engine and admission controller. Red Hat provides a supported Operator with the Red Hat Advanced Cluster Management and Red Hat OpenShift Platform Plus subscriptions. By leveraging Red Hat Advanced Cluster Management Gatekeeper integration, you can have a simple Gatekeeper installation, constraint management, and audit results aggregation of an entire Kubernetes fleet from a single console.

Last updated: June 14, 2024