Many modern application designs are event-driven, aiming to deliver events quickly. This article describes how to orchestrate event-driven microservices using standards like CNCF CloudEvents and Kubernetes APIs for Knative to simplify EDA-style application development.

Event-driven architecture (EDA) allows the implementation of loosely coupled applications and services. In this model, event producers do not know which event consumers are listening, and the event itself does not know the consequences of its occurrence. EDA is a good option for distributed application architectures.

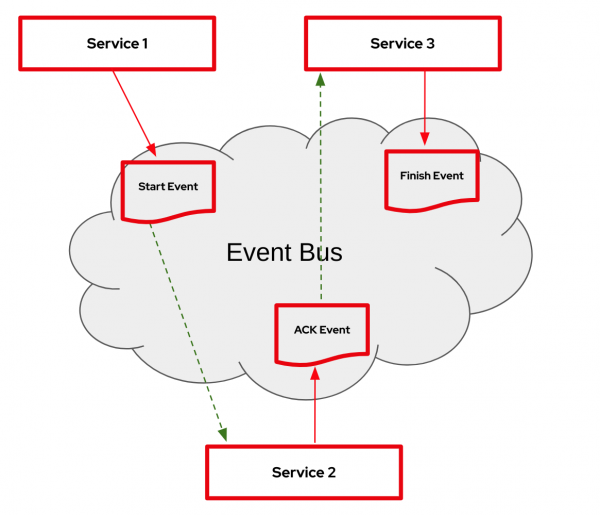

Figure 1 illustrates an example of an event-driven application consisting of three services, producing and consuming different events with an event bus responsible for the orchestration and routing of the events. Note that “Service 3” produces an event indicating the business process finished, but there is no consumer for the event.

Thanks to the flexible architecture of event-driven systems, it is simple to add a consumer for the “Finish Event” later.

Knative Eventing

How can you build a system on Kubernetes that orchestrates events and routes them to consumers? Luckily, there is Knative Eventing, which offers a collection of APIs that enable cloud-native developers to use an event-driven architecture within their applications and services. You can use these APIs to create components that route events from event producers to the event consumers that receive them, known as sinks.

Knative Eventing uses standard HTTP requests to send and receive events between event producers and sinks. These events conform to CloudEvents, a CNCF specification that enables creating, parsing, sending, and receiving events in any programming language. The binding between the HTTP protocol and CloudEvents is standardized in the CloudEvents HTTP protocol binding specification. Although this article focuses on HTTP, the CloudEvents specification also describes bindings for other protocols, such as AMQP or WebSockets.

Event mesh with Knative Broker

One of the key APIs in Knative Eventing is the Knative Broker API, which defines an event mesh that aids event orchestration and routing. Figure 2 shows a complete process that starts with a purchase from an online web shop and ends when the order is delivered to the customer's door. The process is implemented by several microservice applications that consume and produce events. There is no direct communication or invocation between the services. Instead, the applications are loosely coupled and communicate only via events.

The orchestration of the event exchange is handled by an event mesh. In our case, this is the Knative Broker for Apache Kafka.

    apiVersion: eventing.knative.dev/v1
    kind: Broker
    metadata:
      annotations:
        eventing.knative.dev/broker.class: Kafka
      name: order-broker
    spec:
      config:
        apiVersion: v1
        kind: ConfigMap
        name: order-broker-config
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: order-broker-config
    data:
      bootstrap.servers: <url>
      auth.secret.ref.name: <optional-secret-name>
      default.topic.partitions: "10"
      default.topic.replication.factor: "3"

The broker is annotated to pick the Kafka-backed implementation and points to a ConfigMap that holds the configuration of the Apache Kafka topic used internally by the broker. It is generally also recommended to configure delivery guarantees and retries, but we skipped this to keep the article simple. For more details on best practices for Knative Broker configurations, read the article, Our advice for configuring Knative Broker for Apache Kafka.

After the Broker definition has been applied with oc apply, you can check for the broker and its status as follows:

    oc get brokers.eventing.knative.dev -n orders

    NAME           URL                                                                                  AGE   READY
    order-broker   http://kafka-broker-ingress.knative-eventing.svc.cluster.local/orders/order-broker   46m   True

Every Knative Broker object exposes an HTTP endpoint that acts as the ingress for CloudEvents. The URL can be found in the status of each broker object. The following is an example of an HTTP POST request that could be sent from the “Online Shop” service to the event mesh, that is, the Knative Kafka Broker.

    curl -v -X POST \
      -H "content-type: application/json" \
      -H "ce-specversion: 1.0" \
      -H "ce-source: /online/shop" \
      -H "ce-type: order.requested" \
      -H "ce-id: 1f1380d4-8ff2-4ab0-b2ba-54811226c21b" \
      -d '{"customerId": "20207-19", "orderId": "f8bc3445-b844"}' \
      http://kafka-broker-ingress.knative-eventing.svc.cluster.local/orders/order-broker
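A service written in Java could issue the same request with the JDK's built-in HTTP client. The following is a minimal sketch, not the article's actual “Online Shop” code: the class and method names are hypothetical, the customer ID is sent as a JSON string, and the request is only built and printed so that it runs without a cluster.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.UUID;

/** Sketch: builds a binary-mode CloudEvent HTTP request like the cURL call above. */
final class OrderRequestedRequest {

    // Hypothetical helper. The ce-* header names are the ones used in the cURL example.
    static HttpRequest build(URI brokerUrl, String eventId, String jsonPayload) {
        return HttpRequest.newBuilder(brokerUrl)
                .header("content-type", "application/json")
                .header("ce-specversion", "1.0")
                .header("ce-source", "/online/shop")
                .header("ce-type", "order.requested")
                .header("ce-id", eventId)
                .POST(HttpRequest.BodyPublishers.ofString(jsonPayload))
                .build();
    }

    public static void main(String[] args) {
        URI broker = URI.create(
                "http://kafka-broker-ingress.knative-eventing.svc.cluster.local/orders/order-broker");
        String payload = "{\"customerId\": \"20207-19\", \"orderId\": \"f8bc3445-b844\"}";
        HttpRequest request = build(broker, UUID.randomUUID().toString(), payload);

        // Print the request instead of sending it, so the sketch runs anywhere.
        System.out.println(request.method() + " " + request.uri());
        request.headers().map()
                .forEach((name, values) -> System.out.println(name + ": " + values));
    }
}
```

To actually send the event from inside the cluster, the request could be passed to `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.discarding())`.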

But how is the event getting delivered to the “Payment Service”, since there is no direct coupling between the two?

Event orchestration and routing

While the Broker API implements an event mesh, it goes hand in hand with the Trigger API, which the broker uses to route events to their destinations based on a given set of rules or criteria.

    apiVersion: eventing.knative.dev/v1
    kind: Trigger
    metadata:
      name: trigger-order-requested
    spec:
      broker: order-broker
      filter:
        attributes:
          type: order.requested
          source: /online/shop
      subscriber:
        ref:
          apiVersion: v1
          kind: Service
          name: payment

This example is a trigger for the “order-broker” that contains two filter rules, each matching a different CloudEvent metadata attribute:

- Type

- Source

Both rules are treated as an AND, and the event is only routed to the referenced payment service by the Knative Broker if both rules match.
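The AND semantics can be illustrated with a few lines of Java. This is a simplified sketch of exact-match attribute filtering, not the broker's actual implementation; the class name and the `/mobile/app` source are assumptions made for the example.

```java
import java.util.Map;

/** Sketch: exact-match attribute filtering where all rules must match (logical AND). */
final class TriggerFilterSketch {

    // True only if every attribute named in the filter has exactly the same value on the event.
    static boolean matches(Map<String, String> filterAttributes,
                           Map<String, String> eventAttributes) {
        return filterAttributes.entrySet().stream()
                .allMatch(rule -> rule.getValue().equals(eventAttributes.get(rule.getKey())));
    }

    public static void main(String[] args) {
        Map<String, String> filter = Map.of(
                "type", "order.requested",
                "source", "/online/shop");

        Map<String, String> fromShop = Map.of(
                "type", "order.requested",
                "source", "/online/shop",
                "id", "1f1380d4-8ff2-4ab0-b2ba-54811226c21b");

        // Same type, but a different (hypothetical) source.
        Map<String, String> fromElsewhere = Map.of(
                "type", "order.requested",
                "source", "/mobile/app");

        System.out.println(matches(filter, fromShop));      // true: both rules match
        System.out.println(matches(filter, fromElsewhere)); // false: the source rule fails
    }
}
```

Attributes that the filter does not mention, such as `id` here, do not influence the result.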

The Knative Broker routes the matching CloudEvent to the referenced Kubernetes Service (payment) using HTTP. This allows a flexible architecture for implementing the processing services, since all that is needed is a web server program, regardless of the language it is written in. Besides the Kubernetes Service API, we can also reference a Knative Serving Service, which supports serverless principles.

If the referenced service replies with a CloudEvent in its HTTP response, this event is returned to the Knative Broker and is available for further processing. A different trigger with a matching rule can then route those events to other service applications.
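The reply flow can be pictured as a small in-memory simulation. The sketch below is a deliberately reduced model and not how the Knative Broker is implemented: events are represented only by their type, each trigger filters on a single type, and all class and method names are hypothetical. It also assumes, for illustration, that the order service does not reply.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.Optional;
import java.util.function.Function;

/** Sketch: replies from subscribers re-enter the broker and are routed by other triggers. */
final class ReplyRoutingSketch {

    /** A trigger reduced to a name, one type filter, and a subscriber that may reply. */
    static final class Trigger {
        final String name;
        final String type;
        final Function<String, Optional<String>> subscriber;

        Trigger(String name, String type, Function<String, Optional<String>> subscriber) {
            this.name = name;
            this.type = type;
            this.subscriber = subscriber;
        }
    }

    /** Delivers an event type to matching triggers and feeds each reply back into the queue. */
    static List<String> dispatch(String firstType, List<Trigger> triggers) {
        List<String> deliveries = new ArrayList<>();
        Deque<String> pending = new ArrayDeque<>();
        pending.add(firstType);
        // No loop protection: a subscriber replying with its own input type would never finish.
        while (!pending.isEmpty()) {
            String type = pending.poll();
            for (Trigger trigger : triggers) {
                if (trigger.type.equals(type)) {
                    deliveries.add(type + " -> " + trigger.name);
                    trigger.subscriber.apply(type).ifPresent(pending::add);
                }
            }
        }
        return deliveries;
    }

    public static void main(String[] args) {
        List<Trigger> triggers = List.of(
                // The payment service replies with a new event type.
                new Trigger("payment", "order.requested", type -> Optional.of("payment.received")),
                // Assumption for this sketch: the order service does not reply.
                new Trigger("order", "payment.received", type -> Optional.empty()));

        dispatch("order.requested", triggers).forEach(System.out::println);
    }
}
```

Running the sketch lists two deliveries: the original event to the payment subscriber, then the reply to the order subscriber.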

CloudEvent processing with Knative Functions

One simple way to create microservices that process standard CloudEvents is to leverage the Knative Functions project. It contains templates for a number of languages and platforms, such as:

- Go

- Node.js (JavaScript)

- Quarkus (Java)

- Spring Boot (Java)

- Python

- Rust

The following snippet is the implementation of the “Payment Service” application, which is based on the Quarkus Funqy template. Funqy is part of Quarkus’s serverless strategy and provides a portable Java API for writing serverless functions that can be deployed to heterogeneous serverless runtimes, including AWS Lambda, Azure Functions, Google Cloud Functions, and Knative. With Funqy, developers can bind their methods to CloudEvents using the @Funq annotation. Funqy ensures that the @Funq-annotated Java method is invoked with the HTTP request from the Knative Broker, which contains the “OrderRequested” CloudEvent.

Note: The OrderRequested object is part of the CloudEvent payload in a serialized JSON format, as indicated by the previous cURL example.

    @Funq
    public CloudEvent<PaymentReceived> orderRequest(final CloudEvent<OrderRequested> order) {
        LOG.debug("Incoming CloudEvent with ID: " + order.id());
        try {
            final PaymentReceived payment = paymentProvider.processPayment(order.data());
            return CloudEventBuilder.create()
                .id(UUID.randomUUID().toString())
                .type("payment.received")
                .source("/payment")
                .build(payment);
        } catch (InvalidPaymentException ipe) {
            // recover from here
            return CloudEventBuilder.create().build(...);
        }
    }

The CloudEvent payload is deserialized to the “OrderRequested” type and accessed through the data() method of the CloudEvent API, then processed by a payment provider service. Once the payment is approved, the Knative Function code returns a different CloudEvent with the type payment.received, indicating that the payment has been received.

In the diagram in Figure 2, the “Order Service” is subscribed to the “payment.received” event. Whenever such an event is available in the event mesh, the Knative Broker dispatches it to the subscribed “Order Service”, and the process of the shopping cart application continues.

Note: In case of a failure, the InvalidPaymentException is caught and a different CloudEvent with an error type is returned to the broker, indicating that a failure has occurred. For more information on how to configure the Knative Broker for delivery guarantees and retries, please refer to the previously mentioned article.

Using application-specific events

When working with event-driven microservices, it is highly recommended that every service or function respond to an incoming request with an outgoing event in its HTTP response. The CloudEvents should be domain-specific and provide context about their state in the CloudEvent metadata attributes. It is very important not to return the same CloudEvent type that goes into a function: a trigger that filters on that type can match the reply as well and route it back to the same function, causing a loop on the Knative Broker. For both successful event processing and domain-specific failures, a service should always return a CloudEvent to the Knative Broker.
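One way to guard against such a loop inside a function is to check the reply type against the incoming type before returning. The following is only a sketch of that idea: the class and method names and the `payment.failed` error type are hypothetical and not taken from the article's payment service.

```java
/** Sketch: choose a domain-specific reply type and refuse to echo the incoming type. */
final class ReplyTypeGuard {

    // "payment.failed" is a hypothetical error type used for illustration only.
    static String replyTypeFor(String incomingType, boolean succeeded) {
        String replyType = succeeded ? "payment.received" : "payment.failed";
        if (replyType.equals(incomingType)) {
            // Returning the same type could be routed straight back to this function.
            throw new IllegalStateException(
                    "Reply type must differ from incoming type: " + incomingType);
        }
        return replyType;
    }

    public static void main(String[] args) {
        System.out.println(replyTypeFor("order.requested", true));  // payment.received
        System.out.println(replyTypeFor("order.requested", false)); // payment.failed
    }
}
```

Both outcomes produce an event, so the broker always receives a reply, and neither reply type equals the type the function consumes.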

To handle failures that occur while the Knative Broker tries to deliver CloudEvents (such as network errors or HTTP 4xx/5xx responses), we recommend configuring a dead letter sink for improved delivery guarantees.

Knative Eventing simplifies event-driven microservices

This article described how the Knative Eventing Broker and Trigger APIs help to orchestrate event-driven microservices. The architecture is based on standardized Kubernetes APIs for Knative and CNCF CloudEvents. We also discussed how EDA-style applications are loosely coupled and how to implement a simple routing approach for events using Knative Eventing. Leveraging industry standards is a good investment for any application architecture. If you have questions, please comment below. We welcome your feedback.

Last updated: January 22, 2024