This is the first article in a series about building and deploying software-as-a-service (SaaS) applications. The series focuses on software and deployment architectures, and its topics can serve as the basis for a SaaS architecture checklist. These topics include:

- Approaches to providing multi-tenancy in SaaS applications

- How to convert a web application to Software-as-a-Service

- Multi-cloud storage strategies for SaaS applications

- Security controls and considerations for SaaS

- Implement multitenant SaaS on Kubernetes

- How to program a multitenant SaaS platform in Kubernetes

- How to autoscale your SaaS application infrastructure

As we publish articles in this series, we will update this article and provide the links to them. Bookmark this article for easy access to the complete set.

Throughout the series, we will highlight technologies that can help you build and deploy SaaS applications. In particular, we will discuss best practices and the advantages that container orchestration platforms such as Kubernetes and Red Hat OpenShift offer for running SaaS applications.

This article provides background information on SaaS. If you are unfamiliar with Kubernetes, you will also get a brief introduction to that platform to prepare you for the rest of this series.

What is SaaS?

From the end users' perspective, the defining characteristic of a SaaS application is that it's on-demand software typically delivered through the web, so the users only need a browser or mobile device to access it. The provider of the SaaS application manages all the software and infrastructure needed to deliver the application service to the consumers of the application.

SaaS applications are economically attractive because the development, infrastructure, and support costs are shared by multiple customers of the service. Consumers can start using the application without capital expenditures or waiting for the software to be installed. Subscription-based pricing lowers the risk for consumers, which results in shortened sales cycles for SaaS providers. The recurring revenue stream from subscriptions can be invested into the application to grow market share.

Agility is a significant advantage that SaaS providers have over traditional software vendors. SaaS consumers have nearly immediate access to new releases, allowing SaaS providers to iterate quickly. The ability to deliver new features to market quickly can be a competitive edge.

Because SaaS applications are shared, their consumers are referred to as tenants. A tenant can be either a single user or a collection of users who belong to the same organization. Each tenant sees the application as if it were a private resource: even though tenants might share hardware or software instances, other tenants' use of the system is usually completely hidden from view. This arrangement is referred to as multitenancy.
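
To make the idea of a private view over shared resources concrete, here is a minimal sketch of one way a shared data store could hide each tenant's records from the others. It is an illustration only, not an approach prescribed by this series: the `SharedStore` and `TenantView` classes and the tenant names are hypothetical.

```python
class SharedStore:
    """A single shared in-memory store (hypothetical). Every record is
    tagged with the ID of the tenant that owns it."""

    def __init__(self):
        self._rows = []  # list of (tenant_id, item) tuples

    def for_tenant(self, tenant_id):
        """Return a view restricted to one tenant's records."""
        return TenantView(self, tenant_id)


class TenantView:
    """What one tenant sees: only the rows tagged with its own ID."""

    def __init__(self, store, tenant_id):
        self._store = store
        self._tenant_id = tenant_id

    def add(self, item):
        self._store._rows.append((self._tenant_id, item))

    def items(self):
        # Filter on every read so other tenants' rows never leak out.
        return [item for owner, item in self._store._rows
                if owner == self._tenant_id]


store = SharedStore()
acme = store.for_tenant("acme")      # hypothetical tenant
globex = store.for_tenant("globex")  # hypothetical tenant

acme.add("invoice-1")
globex.add("invoice-9")
acme.add("invoice-2")

print(acme.items())
print(globex.items())
```

Both tenants write to the same underlying list, yet each view returns only that tenant's own items. Real SaaS applications enforce this boundary at several layers, which later articles in the series discuss.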

Red Hat OpenShift provides many capabilities to make SaaS deployments easier. It is a platform-as-a-service (PaaS) that builds on Kubernetes' capabilities to orchestrate multiple workloads and provide rapid scaling—capabilities that have made Kubernetes the de facto standard for cloud-native applications. Automated build and deployment pipelines, Kubernetes Operators, and the ability to manage infrastructure as code are just some of the features that make OpenShift ideal for building and deploying SaaS solutions.

What Kubernetes concepts are important for SaaS?

For the discussion of SaaS architecture in this series, it is helpful to understand a few relevant terms and concepts related to Kubernetes and Red Hat OpenShift. In this series, any discussion of Kubernetes will also apply to Red Hat OpenShift unless explicitly noted, since Red Hat OpenShift is an enterprise Kubernetes platform.

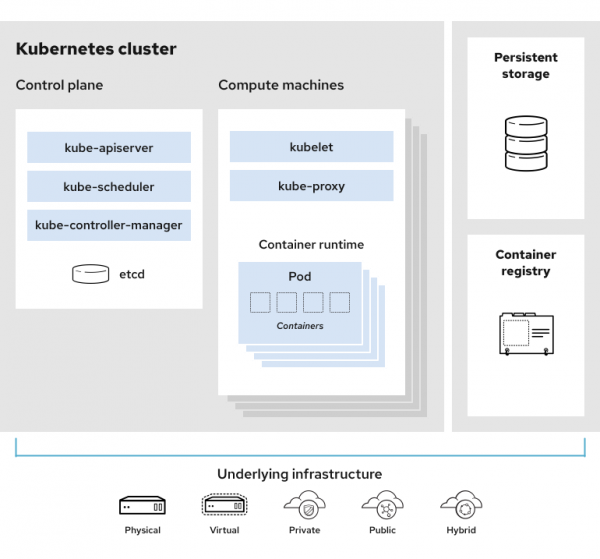

A Kubernetes cluster is a group of hosts working together to run Linux containers in an orchestrated fashion. The Kubernetes concepts relevant to SaaS architecture include:

- Control plane: The collection of processes that manage the Kubernetes cluster and allocate work to the hosts in that cluster. For a production environment, the control plane typically runs on three dedicated nodes to provide high availability.

- Worker nodes: These are the hosts that run the application workloads in the cluster. The control plane assigns work to these nodes in the form of pods. The number of worker node hosts in the cluster is determined by the anticipated or observed load of the applications that run on the cluster.

- Pod: A group of one or more containers running on a single node. The containers running in the pod can be the application itself or application components such as a web server. A node can run many unrelated pods or multiple instances of the same pod as necessary to handle the load.

- Deployments and workload management: Kubernetes provides a number of flexible mechanisms, including deployments and stateful sets, for declaring which workloads should be running and how the cluster's control plane should manage them. For example, a deployment can declare that a specific number of pods running the application's web server are required. It is then up to Kubernetes to decide which nodes to run those pods on and to replace them if a pod or node fails.

- Services: Services within Kubernetes provide a mechanism to access an application component, such as a web service, running in one or more pods while avoiding any coupling to a specific pod or pods. Pods are ephemeral; they can be created and destroyed as necessary for failover and scaling. Because a service hides the network details of the individual pods behind it, Kubernetes can route requests to whichever pods are currently running and balance the load between them.
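
As a rough illustration of how deployments and services are declared, the following sketch builds a Deployment and a Service manifest as Python dictionaries and prints them as JSON. The field names follow the Kubernetes `apps/v1` Deployment and `v1` Service APIs; the application name, label, image, and port numbers are placeholders assumed for this example.

```python
import json

# Hypothetical label that ties the Deployment's pods to the Service.
APP_LABELS = {"app": "saas-web"}


def deployment(name, image, replicas):
    """Declare that `replicas` pods running `image` should exist."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": APP_LABELS},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the pod template.
            "selector": {"matchLabels": APP_LABELS},
            "template": {
                "metadata": {"labels": APP_LABELS},
                "spec": {
                    "containers": [
                        {
                            "name": "web",
                            "image": image,
                            "ports": [{"containerPort": 8080}],
                        }
                    ]
                },
            },
        },
    }


def service(name, port, target_port):
    """Expose every pod carrying APP_LABELS behind one stable name."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": APP_LABELS,
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


manifests = [
    # Placeholder image reference, not a real registry path.
    deployment("saas-web", "registry.example.com/saas-web:1.0", replicas=3),
    service("saas-web", port=80, target_port=8080),
]

# Wrap both objects in a v1 List so they can be handled as one document.
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

The Deployment states only the desired outcome (three pods of the web server), and the Service refers to pods by label rather than by name or address. Kubernetes tooling generally accepts JSON as well as YAML, so output like this could be saved to a file and applied to a cluster, although most teams write such manifests directly in YAML.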

The components of a Kubernetes cluster are shown in Figure 1.

Pods abstract network and storage away from the underlying containers. This allows pods to move to other worker nodes in the cluster as needed. The containers in a pod share a virtual IP address, hostname, and other resources that allow the containers within a pod to communicate with each other.
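
The way a declared pod count is maintained while pods move between worker nodes can be sketched as a simple control loop. This is a toy simulation under stated assumptions, not the actual Kubernetes scheduler or controller logic: the `reconcile` function, the node names, and the "least-loaded node" placement rule are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    node: str


def reconcile(desired, pods, healthy_nodes, next_id):
    """One pass of a simplified control loop (hypothetical).

    Drops pods whose node is no longer healthy, then starts replacement
    pods on the least-loaded healthy node until `desired` pods exist.
    Returns the new pod list and the next unused pod ID.
    """
    if not healthy_nodes:
        raise RuntimeError("no healthy worker nodes available")

    # Pods on failed nodes are considered lost.
    running = [p for p in pods if p.node in healthy_nodes]

    while len(running) < desired:
        load = {node: 0 for node in healthy_nodes}
        for p in running:
            load[p.node] += 1
        # Assumed placement rule: pick the node with the fewest pods,
        # breaking ties by node name.
        target = min(sorted(load), key=load.get)
        running.append(Pod(f"web-{next_id}", target))
        next_id += 1

    return running, next_id


nodes = ["node-a", "node-b", "node-c"]  # hypothetical worker nodes
pods, next_id = reconcile(3, [], nodes, 0)
print([(p.name, p.node) for p in pods])

# Simulate node-b failing: its pod is replaced on a surviving node.
pods, next_id = reconcile(3, pods, ["node-a", "node-c"], next_id)
print([(p.name, p.node) for p in pods])
```

After the simulated node failure, the loop restores the declared count of three pods using only the remaining nodes. The replacement pod gets a new name and a new location, which is why anything that talks to these pods needs an indirection such as a service.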

To allow pods to be easily moved and replicated, network services such as web servers are exposed outside of a pod by defining Kubernetes services. Kubernetes is responsible for routing any service requests to a running pod that can handle them, no matter where it is running in the cluster. In addition to allowing pods to move between worker nodes, using services also allows scaling by providing the ability to route and balance requests to multiple pods providing the same service.
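
The routing behavior described above can be illustrated with a small simulation. It assumes a label-based selector and plain round-robin balancing for simplicity; real clusters implement service routing in the networking layer and do not necessarily distribute requests in strict rotation. The `Service` class, labels, and IP addresses here are hypothetical.

```python
import itertools


class Service:
    """Toy model of a service: a selector plus a request counter."""

    def __init__(self, selector):
        self.selector = selector
        self._counter = itertools.count()

    def endpoints(self, pods):
        """Return the pods whose labels match every selector entry."""
        return [
            p for p in pods
            if all(p["labels"].get(k) == v for k, v in self.selector.items())
        ]

    def route(self, pods):
        """Pick a matching pod for the next request (round-robin)."""
        ready = self.endpoints(pods)
        if not ready:
            raise RuntimeError("no pods match the service selector")
        return ready[next(self._counter) % len(ready)]["ip"]


pods = [
    {"ip": "10.0.0.1", "labels": {"app": "saas-web"}},
    {"ip": "10.0.0.2", "labels": {"app": "saas-web"}},
    {"ip": "10.0.0.9", "labels": {"app": "billing"}},  # unrelated pod
]
svc = Service({"app": "saas-web"})

first = [svc.route(pods) for _ in range(3)]
print(first)

# A pod is replaced and comes back with a different IP address.
pods[1] = {"ip": "10.0.0.3", "labels": {"app": "saas-web"}}
after = [svc.route(pods) for _ in range(2)]
print(after)
```

Callers only ever address the service, so the replacement pod starts receiving traffic without any client needing to learn its new address, and the unrelated pod is never selected.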

For more information on Kubernetes concepts, refer to Red Hat's "What is Kubernetes?" article.

A look ahead

Be sure to follow the rest of the articles in this SaaS architecture series, which cover approaches to multitenancy, security controls, and the options available for creating a defense-in-depth security strategy. The full list of articles will remain at the top of this article.

Red Hat SaaS Foundations is a partner program designed for building enterprise-grade SaaS solutions on the Red Hat OpenShift or Red Hat Enterprise Linux platforms and deploying them across multiple cloud and non-cloud footprints. Email us to learn more about partnering with Red Hat to build your SaaS.

Last updated: September 20, 2023