This is the second article of a two-part series in which I describe the automated testing infrastructure that I am developing for the SystemTap project. The first article, "Automating the testing process for SystemTap, Part 1: Test automation with libvirt and Buildbot," described my solution for managing test machines and for producing SystemTap test results. This follow-up article continues by describing Bunsen, the toolkit I developed for storing and analyzing test results.

Figure 1 summarizes the components of the testing infrastructure and how they interact.

Revisiting the seven steps for successful testing

In Part 1, I listed seven steps for successfully testing a software project:

- Step 1: Provisioning test machines and virtual machines (VMs).

- Step 2: Installing the SystemTap project and running the test suite.

- Step 3: Sending test results to a central location.

- Step 4: Receiving and storing test results in a compact format.

- Step 5: Querying the test results.

- Step 6: Analyzing the test results.

- Step 7: Reporting the analysis in a readable format.

The first three steps relate to testing the project and collecting test results. I discussed these steps in Part 1. The Bunsen toolkit implements the next four steps, which pertain to storing the collected test results and analyzing them. I will describe the implementation for each of these steps and finish by summarizing my key design ideas and outlining potential future improvements.

Step 4: Receiving and storing test results in a compact format

Once test results have been received by the test result server, they must be stored in a compact format for later analysis. For this purpose, I developed a test result storage and analysis toolkit called Bunsen.

Currently, Bunsen can parse, store, and analyze test result log files from projects whose test suites are based on the DejaGnu testing framework. Bunsen has been tested extensively with SystemTap test results, and work is also ongoing to adapt Bunsen for the requirements of the GDB (GNU Debugger) project.

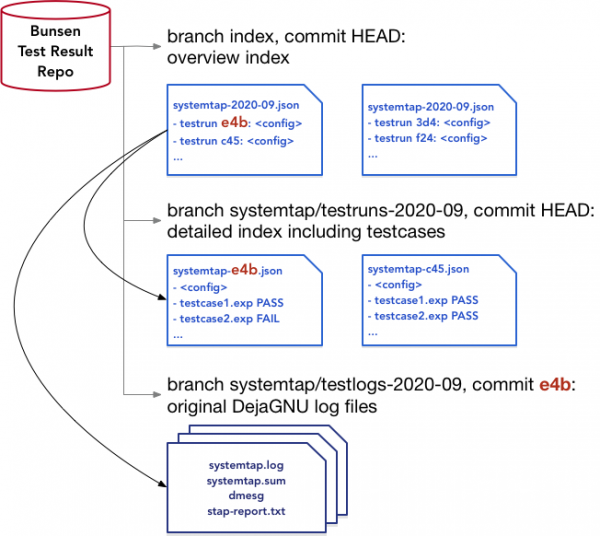

Bunsen’s test result storage and indexing engine is based on the Git version control system. Bunsen stores a set of test results across several branches of a Git repository, according to the following scheme:

- First, Bunsen stores the original test result log files in the Git repository. The files are stored in a Git branch named according to the scheme projectname/testlogs-year-month. For example, log files from SystemTap testing obtained in August 2020 would be stored under systemtap/testlogs-2020-08. Each revision in this branch stores a different set of test result log files. When a new revision is created for a new set of test results, log files for previously added test results are retained in the previous revision of the same branch, but are removed from the new revision. This minimizes the size of the Git working copy of each testlogs branch. Because a set of test result log files may contain on the order of 50MB of plaintext data, a working copy that contained test result log files for many runs of the test suite could become extremely large. Storing one set of test results per revision makes it possible to check out working copies containing only one set of test results.

- Second, Bunsen parses the test result log files and produces a representation of the test results in JSON format. The JSON representation contains the results for each test case, as well as a summary of the test machine configuration. The JSON representation also contains a reference to the commit ID of the testlogs branch revision that stores the original test result log files.

  The following simplified example shows how test results are converted to a JSON representation. Suppose the following results are contained in a systemtap.log file:

      ...
      Running /opt/stap-checkout/testsuite/systemtap.base/cast-scope.exp ...
      doing compile
      Executing on host: g++ /opt/stap-checkout/testsuite/systemtap.base/cast-scope.cxx -g -isystem/opt/stap-chec\
      kout/testsuite -isystem/opt/stap-checkout/stap_install/include -lm -o cast-scope-m32.exe (timeout = 300)
      spawn -ignore SIGHUP g++ /opt/stap-checkout/testsuite/systemtap.base/cast-scope.cxx -g -isystem/opt/stap-che\
      ckout/testsuite -isystem/opt/stap-checkout/stap_install/include -lm -o cast-scope-m32.exe
      pid is 26539 -26539
      output is
      PASS: cast-scope-m32 compile
      executing: stap /opt/stap-checkout/testsuite/systemtap.base/cast-scope.stp -c ./cast-scope-m32.exe
      PASS: cast-scope-m32
      doing compile
      Executing on host: g++ /opt/stap-checkout/testsuite/systemtap.base/cast-scope.cxx -g -isystem/opt/stap-chec\
      kout/testsuite -isystem/opt/stap-checkout/stap_install/include -lm -o cast-scope-m32.exe (timeout = 300)
      spawn -ignore SIGHUP g++ /opt/stap-checkout/testsuite/systemtap.base/cast-scope.cxx -g -isystem/opt/stap-che\
      ckout/testsuite -isystem/opt/stap-checkout/stap_install/include -lm -o cast-scope-m32.exe
      pid is 26909 -26909
      output is
      PASS: cast-scope-m32 compile
      executing: stap /opt/stap-checkout/testsuite/systemtap.base/cast-scope.stp -c ./cast-scope-m32.exe
      PASS: cast-scope-m32
      doing compile
      Executing on host: g++ /opt/stap-checkout/testsuite/systemtap.base/cast-scope.cxx -g -isystem/opt/stap-chec\
      kout/testsuite -isystem/opt/stap-checkout/stap_install/include -O -lm -o cast-scope-m32-O.exe (timeout = 300)
      spawn -ignore SIGHUP g++ /opt/stap-checkout/testsuite/systemtap.base/cast-scope.cxx -g -isystem/opt/stap-che\
      ckout/testsuite -isystem/opt/stap-checkout/stap_install/include -O -lm -o cast-scope-m32-O.exe
      pid is 27279 -27279
      output is
      PASS: cast-scope-m32-O compile
      executing: stap /opt/stap-checkout/testsuite/systemtap.base/cast-scope.stp -c ./cast-scope-m32-O.exe
      PASS: cast-scope-m32-O
      testcase /opt/stap-checkout/testsuite/systemtap.base/cast-scope.exp completed in 20 seconds
      Running /opt/stap-checkout/testsuite/systemtap.base/cast-user.exp ...
      doing compile
      Executing on host: gcc /opt/stap-checkout/testsuite/systemtap.base/cast-user.c -g -lm -o /opt/stap-check\
      out/stap_build/testsuite/cast-user.exe (timeout = 300)
      spawn -ignore SIGHUP gcc /opt/stap-checkout/testsuite/systemtap.base/cast-user.c -g -lm -o /opt/stap-checkou\
      t/stap_build/testsuite/cast-user.exe
      pid is 27649 -27649
      output is
      PASS: cast-user compile
      executing: stap /opt/stap-checkout/testsuite/systemtap.base/cast-user.stp /opt/stap-checkout/stap_build/test\
      suite/cast-user.exe -c /opt/stap-checkout/stap_build/testsuite/cast-user.exe
      FAIL: cast-user
      line 5: expected ""
      Got "WARNING: Potential type mismatch in reassignment: identifier 'cast_family' at /opt/stap-checkout/testsuite/systemta\
      p.base/cast-user.stp:25:5"
          " source: cast_family = @cast(sa, "sockaddr", "<sys/socket.h>")->sa_family"
          " ^"
          "Number of similar warning messages suppressed: 15."
          "Rerun with -v to see them."
      testcase /opt/stap-checkout/testsuite/systemtap.base/cast-user.exp completed in 6 seconds
      Running /opt/stap-checkout/testsuite/systemtap.base/cast.exp ...
      executing: stap /opt/stap-checkout/testsuite/systemtap.base/cast.stp
      FAIL: systemtap.base/cast.stp
      line 6: expected ""
      Got "WARNING: Potential type mismatch in reassignment: identifier 'cast_pid' at /opt/stap-checkout/testsuite/systemtap.b\
      ase/cast.stp:14:5"
          " source: cast_pid = @cast(curr, "task_struct", "kernel<linux/sched.h>")->tgid"
          " ^"
          "Number of similar warning messages suppressed: 4."
          "Rerun with -v to see them."
      testcase /opt/stap-checkout/testsuite/systemtap.base/cast.exp completed in 8 seconds
      ...

  Bunsen produces the following JSON representation of these test results:

      ...
      {"name": "systemtap.base/cast-scope.exp",
       "outcome": "PASS",
       "origin_sum": "systemtap.sum.autotest.2.6.32-754.33.1.el6.i686.2020-12-05T03:17-0500:383",
       "origin_log": "systemtap.log.autotest.2.6.32-754.33.1.el6.i686.2020-12-05T03:17-0500:3091-3116"},
      {"name": "systemtap.base/cast-user.exp",
       "outcome": "FAIL",
       "subtest": "FAIL: cast-user\n",
       "origin_sum": "systemtap.sum.autotest.2.6.32-754.33.1.el6.i686.2020-12-05T03:17-0500:386"},
      {"name": "systemtap.base/cast.exp",
       "outcome": "FAIL",
       "subtest": "FAIL: systemtap.base/cast.stp\n",
       "origin_sum": "systemtap.sum.autotest.2.6.32-754.33.1.el6.i686.2020-12-05T03:17-0500:388"},
      ...

  The JSON representation is stored in a branch named according to the scheme projectname/testruns-year-month, for example systemtap/testruns-2020-08. Because the JSON representation is much more compact than the original test suite log files, the latest revision in the testruns branch can retain all of the test suite runs stored in that branch. This makes it possible to check out a working copy summarizing all of the test results for one month.

- Finally, Bunsen stores a summary of the JSON representation containing the test machine configuration, but not the detailed test results. This representation is appended to the file projectname-year-month.json in the index branch. Therefore, to gain access to a summary of all of the test results in the Bunsen repository, it is sufficient to check out a working copy of this index branch.
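The article does not show Bunsen's parser. As a rough illustration of the second step, the following Python sketch condenses DejaGnu result lines into one JSON record per .exp file, in the spirit of the JSON example above. It assumes only the standard DejaGnu line formats: a "Running ... .exp ..." header followed by lines such as "PASS: name". The function name parse_sum, the rule that the first non-PASS line decides the outcome, and the short "file:line" origin format are illustrative assumptions and do not describe Bunsen's actual behavior.

```python
import json
import re

# DejaGnu outcome keywords that can begin a result line.
OUTCOMES = ("PASS", "FAIL", "XPASS", "XFAIL", "KPASS", "KFAIL",
            "UNRESOLVED", "UNTESTED", "UNSUPPORTED", "ERROR")
RESULT_RE = re.compile(r"^(%s): (.*)$" % "|".join(OUTCOMES))
# "Running <path>/testsuite/<subdir>/<name>.exp ..." starts a new test case.
RUNNING_RE = re.compile(r"^Running .*/testsuite/(\S+\.exp) \.\.\.$")


def parse_sum(lines, sum_name):
    """Hypothetical sketch: condense result lines into one record per .exp file.

    A record stays PASS until the first non-PASS result line is seen; that
    line is kept as "subtest" and its position is kept as "origin_sum".
    """
    records = {}
    current = None
    for lineno, line in enumerate(lines, start=1):
        line = line.rstrip("\n")
        running = RUNNING_RE.match(line)
        if running:
            current = running.group(1)
            continue
        result = RESULT_RE.match(line)
        if result is None or current is None:
            continue  # not a result line, or a result outside any .exp file
        rec = records.setdefault(current, {
            "name": current,
            "outcome": "PASS",
            "origin_sum": f"{sum_name}:{lineno}",
        })
        outcome = result.group(1)
        if outcome != "PASS" and rec["outcome"] == "PASS":
            rec["outcome"] = outcome
            rec["subtest"] = line + "\n"
            rec["origin_sum"] = f"{sum_name}:{lineno}"
    return list(records.values())


sample = [
    "Running /opt/stap-checkout/testsuite/systemtap.base/cast-scope.exp ...",
    "PASS: cast-scope-m32 compile",
    "PASS: cast-scope-m32",
    "Running /opt/stap-checkout/testsuite/systemtap.base/cast-user.exp ...",
    "PASS: cast-user compile",
    "FAIL: cast-user",
]
print(json.dumps(parse_sum(sample, "systemtap.sum"), indent=1))
```

A real parser also has to record the test machine configuration and link each record back to the testlogs commit. Both are omitted here.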

Figure 2 summarizes the layout of a Bunsen test result repository.

The Git repository format provides significant space advantages for storing the test result log files, due to the large degree of similarity across different sets of test results. Across a large number of test suite runs, the same test case results and the same test case output tend to recur with only slight variations. Git’s internal representation can pack multiple test results into a compressed representation in which recurring test case results are de-duplicated.
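Identical content costs almost nothing extra because Git names every stored file by a hash of its contents. The sketch below reproduces Git's blob ID computation for the SHA-1 object format and uses it to store two made-up test runs. The helper name git_blob_id and the sample data are illustrative.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Object ID that Git (SHA-1 object format) assigns to file contents:
    SHA-1 over the header b"blob <size>\\0" followed by the raw bytes."""
    header = b"blob %d\0" % len(content)
    return hashlib.sha1(header + content).hexdigest()


# Two made-up test runs: one file changed, one file identical.
run_a = {"systemtap.sum": b"PASS: cast-user\n", "systemtap.log": b"same output\n"}
run_b = {"systemtap.sum": b"FAIL: cast-user\n", "systemtap.log": b"same output\n"}

store = {}  # object ID -> contents, like Git's object database
for run in (run_a, run_b):
    for content in run.values():
        store.setdefault(git_blob_id(content), content)

print(len(store))  # 3 objects for 4 files: the identical log is stored once
```

Exact duplicates are only part of the story. Git's packfiles also store similar objects as deltas against one another and compress the result with zlib. That is what lets log files that differ only slightly share most of their storage.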

For example, a collection of SystemTap test result logs collected from 1,562 runs of the test suite took up 103GB of disk space when stored uncompressed. A de-duplicated Git repository of the same test results produced by Bunsen took up only 2.7GB. This repository included both the original test result logs and the JSON representation of the test results.
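The following short calculation expresses those figures per run, using decimal units. Because the 2.7GB also includes the JSON representation, the compression ratio for the log files alone is at least as high as the one computed here.

```python
# Figures quoted above (decimal units: 1 GB = 1000 MB).
RUNS = 1562
RAW_GB = 103.0
BUNSEN_GB = 2.7  # includes the JSON representation as well as the logs

ratio = RAW_GB / BUNSEN_GB
raw_mb_per_run = RAW_GB * 1000 / RUNS
stored_mb_per_run = BUNSEN_GB * 1000 / RUNS

print(f"compression ratio: {ratio:.0f}x")            # 38x
print(f"raw logs per run:  {raw_mb_per_run:.0f} MB")  # 66 MB
print(f"stored per run:    {stored_mb_per_run:.1f} MB")  # 1.7 MB
```

The roughly 66MB of raw logs per run is consistent with the earlier estimate of about 50MB per set of log files.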

Step 5: Querying the test results

In addition to the storage and indexing engine, Bunsen includes a collection of analysis scripts that can be used to extract information from a test result repository. Analysis scripts are Python programs that use Bunsen’s storage engine to access the repository.

Some of the analysis scripts included with Bunsen are intended for querying information about the content of specific test runs. The following example commands demonstrate how to locate and examine the results of a recent test run, and how to compare them with the results of prior runs.

The list_runs and list_commits scripts list the test runs stored in the test results repository and display the Git commit ID of the test result log files. The list_runs script simply lists all of the test runs; list_commits takes the location of a checkout of the SystemTap Git repository and lists the test runs that tested each commit in the repository’s main branch:

    $ ./bunsen.py +list_commits source_repo=/opt/stap-checkout sort=recent restrict=3
    commit_id: 1120422c2822be9e00d8d11cab3fb381d2ce0cce
    summary: PR27067 <<< corrected bug# for previous commit
     * 2020-12 5d53f76... pass_count=8767 fail_count=1381 arch=x86_64 osver=rhel-6
     * 2020-12 c88267a... pass_count=8913 fail_count=980 arch=i686 osver=rhel-6
     * 2020-12 299d9b4... pass_count=7034 fail_count=2153 arch=x86_64 osver=ubuntu-18-04
     * 2020-12 53a18f0... pass_count=9187 fail_count=1748 arch=x86_64 osver=rhel-8
     * 2020-12 7b37e97... pass_count=8752 fail_count=1398 arch=x86_64 osver=rhel-6
     * 2020-12 8cd3b77... pass_count=8909 fail_count=1023 arch=i686 osver=rhel-6
     * 2020-12 a450958... pass_count=10714 fail_count=2457 arch=x86_64 osver=rhel-7
     * 2020-12 50dc14a... pass_count=10019 fail_count=3590 arch=x86_64 osver=fedora-31
     * 2020-12 6011ae2... pass_count=9590 fail_count=2111 arch=i686 osver=fedora-30
     * 2020-12 771bf86... pass_count=9976 fail_count=2617 arch=x86_64 osver=fedora-32
     * 2020-12 1335891... pass_count=10013 fail_count=2824 arch=x86_64 osver=fedora-34
    commit_id: 341bf33f14062269c52bcebaa309518d9972ca00
    summary: staprun: handle more and fewer cpus better
     * 2020-12 368ee6f... pass_count=8781 fail_count=1372 arch=x86_64 osver=rhel-6
     * 2020-12 239bbe9... pass_count=8904 fail_count=992 arch=i686 osver=rhel-6
     * 2020-12 b138c0a... pass_count=6912 fail_count=2276 arch=x86_64 osver=ubuntu-18-04
     * 2020-12 bf893d8... pass_count=9199 fail_count=1760 arch=x86_64 osver=rhel-8
     * 2020-12 8be8643... pass_count=10741 fail_count=2450 arch=x86_64 osver=rhel-7
     * 2020-12 94c84ab... pass_count=9996 fail_count=3662 arch=x86_64 osver=fedora-31
     * 2020-12 a06bc5f... pass_count=10139 fail_count=2712 arch=x86_64 osver=fedora-34
     * 2020-12 8d4ad0e... pass_count=10042 fail_count=2518 arch=x86_64 osver=fedora-32
     * 2020-12 b6388de... pass_count=9591 fail_count=2146 arch=i686 osver=fedora-30
    commit_id: a26bf7890196395d73ac193b23e271398731745d
    summary: relay transport: comment on STP_BULK message
     * 2020-12 1b91c6f... pass_count=8779 fail_count=1371 arch=x86_64 osver=rhel-6
     * 2020-12 227ff2b... pass_count=8912 fail_count=983 arch=i686 osver=rhel-6
    ...
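The grouping that list_commits performs can be sketched as follows. This is not Bunsen's code. The dictionary keys (source_commit, bunsen_commit, and so on) are hypothetical. The commit order is assumed to come from the source repository, for example via git -C /opt/stap-checkout log --format=%H. The sample values are taken from the output above.

```python
from collections import defaultdict


def group_by_commit(testruns, commit_order):
    """Group testrun summaries by the source commit they tested.

    testruns: dicts with the hypothetical keys source_commit, bunsen_commit,
    year_month, pass_count, fail_count, arch and osver.
    commit_order: source commit IDs, most recent first.
    """
    by_commit = defaultdict(list)
    for run in testruns:
        by_commit[run["source_commit"]].append(run)
    lines = []
    for commit in commit_order:
        if commit not in by_commit:
            continue  # no test runs recorded for this commit
        lines.append(f"commit_id: {commit}")
        for run in by_commit[commit]:
            lines.append(f" * {run['year_month']} {run['bunsen_commit'][:7]}..."
                         f" pass_count={run['pass_count']}"
                         f" fail_count={run['fail_count']}"
                         f" arch={run['arch']} osver={run['osver']}")
    return lines


runs = [
    {"source_commit": "341bf33f14062269c52bcebaa309518d9972ca00",
     "bunsen_commit": "368ee6f", "year_month": "2020-12",
     "pass_count": 8781, "fail_count": 1372, "arch": "x86_64", "osver": "rhel-6"},
    {"source_commit": "1120422c2822be9e00d8d11cab3fb381d2ce0cce",
     "bunsen_commit": "5d53f76", "year_month": "2020-12",
     "pass_count": 8767, "fail_count": 1381, "arch": "x86_64", "osver": "rhel-6"},
]
order = ["1120422c2822be9e00d8d11cab3fb381d2ce0cce",
         "341bf33f14062269c52bcebaa309518d9972ca00"]
print("\n".join(group_by_commit(runs, order)))
```

Driving the output from the source repository's history, rather than from the order in which runs were stored, is what lets runs that tested the same commit appear together even when they arrived at different times.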

Given the Git commit ID for a test run, the show_logs script displays the contents of the test result log files for that test run. For example, the following show_logs invocation examines the test results for the latest SystemTap commit on Fedora 32. Within the Bunsen repository, these test results have a Git commit ID of 771bf86:

    $ ./bunsen.py +show_logs testrun=771bf86 "key=systemtap.log*" | less
    ...
    Running /opt/stap-checkout/testsuite/systemtap.maps/map_hash.exp ...
    executing: stap /opt/stap-checkout/testsuite/systemtap.maps/map_hash_II.stp -DMAPHASHBIAS=-9999
    FAIL: systemtap.maps/map_hash_II.stp -DMAPHASHBIAS=-9999
    line 1: expected "array[2048]=0x1"
    Got "ERROR: Couldn't write to output 0 for cpu 1, exiting.: Bad file descriptor"
    executing: stap /opt/stap-checkout/testsuite/systemtap.maps/map_hash_II.stp -DMAPHASHBIAS=-9999 --runtime=dyninst
    FAIL: systemtap.maps/map_hash_II.stp -DMAPHASHBIAS=-9999 --runtime=dyninst
    line 1: expected "array[2048]=0x1"
    Got "array[2048]=1"
        "array[2047]=1"
    ...

In addition to viewing test results directly, we typically want to compare them against test results from a known baseline version of the project. For the SystemTap project, a suitable baseline version would be a recent SystemTap release. To find the baseline, the list_commits script can again be used to list test results for SystemTap commits around the time of release 4.4:

    $ pushd /opt/stap-checkout
    $ git log --oneline
    ...
    931e0870a releng: update-po
    1f608d213 PR26665: relayfs-on-procfs megapatch, rhel6 tweaks
    988f439af (tag: release-4.4) pre-release: version timestamping, NEWS tweaks
    f3cc67f98 pre-release: regenerate example index
    1a4c75501 pre-release: update-docs
    ...
    $ popd
    $ ./bunsen.py +list_commits source_repo=/notnfs/staplogs/upstream-systemtap sort=recent | less
    ...
    commit_id: 931e0870ae203ddc80d04c5c1425a6e3def49c38
    summary: releng: update-po
     * 2020-11 6a3e8b1... pass_count=9391 fail_count=760 arch=x86_64 osver=rhel-6
     * 2020-11 349cdc9... pass_count=9096 fail_count=800 arch=i686 osver=rhel-6
     * 2020-11 136ebbb... pass_count=8385 fail_count=818 arch=x86_64 osver=ubuntu-18-04
     * 2020-11 f1f93dc... pass_count=9764 fail_count=1226 arch=x86_64 osver=rhel-8
     * 2020-11 2a70a1d... pass_count=10094 fail_count=1510 arch=x86_64 osver=fedora-33
     * 2020-11 45aabfd... pass_count=11817 fail_count=1357 arch=x86_64 osver=rhel-7
     * 2020-11 cfeee53... pass_count=9958 fail_count=1785 arch=i686 osver=fedora-30
     * 2020-11 838831d... pass_count=11085 fail_count=2569 arch=x86_64 osver=fedora-31
     * 2020-11 e07dd16... pass_count=10894 fail_count=1734 arch=x86_64 osver=fedora-32
    commit_id: 988f439af39a359b4387963ca4633649866d8275
    summary: pre-release: version timestamping, NEWS tweaks
     * 2020-11 2f928a5... pass_count=9877 fail_count=1270 arch=aarch64 osver=rhel-8
     * 2020-11 815dfc0... pass_count=11831 fail_count=1345 arch=x86_64 osver=rhel-7
     * 2020-11 f2ca771... pass_count=11086 fail_count=2562 arch=x86_64 osver=fedora-31
     * 2020-11 5103bb5... pass_count=8400 fail_count=804 arch=x86_64 osver=ubuntu-18-04
     * 2020-11 a5745c3... pass_count=9717 fail_count=1216 arch=x86_64 osver=rhel-8
     * 2020-11 64de18e... pass_count=10094 fail_count=1464 arch=x86_64 osver=fedora-33
     * 2020-11 b23e63d... pass_count=10895 fail_count=1733 arch=x86_64 osver=fedora-32
    commit_id: 1a4c75501e873d1281d6c6c0fcf66c0f2fc1104e
    summary: pre-release: update-docs
     * 2020-11 78a1959... pass_count=8383 fail_count=819 arch=x86_64 osver=ubuntu-18-04
     * 2020-11 7fd7908... pass_count=9753 fail_count=1214 arch=x86_64 osver=rhel-8
     * 2020-11 935b961... pass_count=10092 fail_count=1509 arch=x86_64 osver=fedora-33
    ...

The diff_runs script compares test results from two different test runs. For example, the following diff_runs invocation compares the recent test results on Fedora 32 x86_64 with test results on the same system around the time of release 4.4:

    $ ./bunsen.py +diff_runs baseline=b23e63d latest=771bf86 | less
    ...
    * PASS=>FAIL systemtap.maps/map_hash.exp FAIL: systemtap.maps/map_hash_stat_II.stp
    * PASS=>FAIL systemtap.maps/map_hash.exp FAIL: systemtap.maps/map_hash_stat_SI.stp
    * PASS=>FAIL systemtap.maps/map_hash.exp FAIL: systemtap.maps/map_hash_stat_SSI.stp -DMAPHASHBIAS=2
    * PASS=>FAIL systemtap.maps/map_wrap.exp FAIL: systemtap.maps/map_wrap2.stp
    ...
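At its core, diff_runs compares two mappings from test cases to outcomes. The sketch below assumes a hypothetical representation in which each run is a dictionary keyed by (exp_name, subtest) pairs. The MISSING placeholder for subtests that appear in only one run is also an assumption, and the sample data is made up.

```python
def diff_runs(baseline, latest):
    """Compare two runs given as {(exp_name, subtest): outcome} dicts.

    Returns one line per subtest whose outcome differs, in sorted order; a
    subtest present in only one run is reported with the outcome MISSING.
    """
    changes = []
    for key in sorted(baseline.keys() | latest.keys()):
        before = baseline.get(key, "MISSING")
        after = latest.get(key, "MISSING")
        if before != after:
            exp_name, subtest = key
            changes.append(f"* {before}=>{after} {exp_name} {subtest}")
    return changes


baseline = {("example.exp", "subtest A"): "PASS",
            ("example.exp", "subtest B"): "PASS"}
latest = {("example.exp", "subtest A"): "FAIL",
          ("example.exp", "subtest B"): "PASS",
          ("new.exp", "subtest C"): "PASS"}
for line in diff_runs(baseline, latest):
    print(line)
# * PASS=>FAIL example.exp subtest A
# * MISSING=>PASS new.exp subtest C
```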

Finally, the diff_commits script compares all test results corresponding to two different revisions. Instead of presenting a separate comparison for each pair of test runs, diff_commits attempts to select pairs of matching system configurations to compare.

The output of diff_commits lists each changed test case exactly once, grouping test cases according to the set of comparisons in which they changed.

For example, the following diff_commits invocation compares all test runs for the recent SystemTap commit 1120422 with all of the test runs for the release 4.4 commit 988f439:

    $ ./bunsen.py +diff_commits source_repo=/notnfs/staplogs/upstream-systemtap baseline=988f439 latest=1120422 | less
    ...
    Regressions by version
    Found 9 regressions for:
    (arch=x86_64 osver=rhel-7) -> (arch=x86_64 osver=rhel-6)
    (arch=x86_64 osver=rhel-7) -> (arch=i686 osver=rhel-6)
    (arch=x86_64 osver=rhel-8) -> (arch=x86_64 osver=rhel-8)
    (arch=x86_64 osver=rhel-7) -> (arch=x86_64 osver=rhel-7)
    (arch=x86_64 osver=fedora-31) -> (arch=x86_64 osver=fedora-31)
    (arch=x86_64 osver=rhel-7) -> (arch=i686 osver=fedora-30)
    (arch=x86_64 osver=fedora-32) -> (arch=x86_64 osver=fedora-32)
    (arch=x86_64 osver=rhel-7) -> (arch=x86_64 osver=fedora-34)
    * PASS=>ERROR systemtap.apps/busybox.exp ERROR: tcl error sourcing /notnfs/smakarov/stap-checkout/testsuite/systemtap.apps/busybox.exp.
    * PASS=>FAIL systemtap.onthefly/kprobes_onthefly.exp FAIL: kprobes_onthefly - otf_start_disabled_iter_4 (stap)
    * PASS=>KFAIL systemtap.onthefly/tracepoint_onthefly.exp KFAIL: tracepoint_onthefly - otf_timer_10ms (stap) (PRMS: 17256)
    * KFAIL=>PASS systemtap.onthefly/tracepoint_onthefly.exp KFAIL: tracepoint_onthefly - otf_timer_10ms (invalid output) (PRMS: 17256)
    * PASS=>FAIL systemtap.printf/end1b.exp FAIL: systemtap.printf/end1b
    * PASS=>FAIL systemtap.printf/mixed_outb.exp FAIL: systemtap.printf/mixed_outb
    * PASS=>FAIL systemtap.printf/out1b.exp FAIL: systemtap.printf/out1b
    * PASS=>FAIL systemtap.printf/out2b.exp FAIL: systemtap.printf/out2b
    * PASS=>FAIL systemtap.printf/out3b.exp FAIL: systemtap.printf/out3b
    Found 3 regressions for:
    (arch=x86_64 osver=rhel-7) -> (arch=x86_64 osver=rhel-6)
    (arch=x86_64 osver=fedora-31) -> (arch=x86_64 osver=fedora-31)
    * FAIL=>PASS systemtap.apps/java.exp FAIL: multiparams (0)
    * FAIL=>PASS systemtap.apps/java.exp FAIL: multiparams 3.0 (0)
    * PASS=>FAIL systemtap.base/ret-uprobe-var.exp FAIL: ret-uprobe-var: TEST 1: @var in return probes should not be stale (4.1+) (kernel): stderr: string should be "", but got "ERROR: Couldn't write to output 0 for cpu 2, exiting.: Bad file descriptor
    ...
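The grouping idea behind diff_commits can be illustrated with a short sketch. It compares each latest run against a chosen baseline configuration and records the set of comparisons in which each change occurs. It then buckets changes by that set, so every change is printed once. The pairing rule in pick_baseline (exact match first, then the same architecture, then any baseline) is an assumption made for illustration. The article does not describe how Bunsen actually pairs configurations.

```python
from collections import defaultdict


def pick_baseline(config, baseline_configs):
    """Hypothetical pairing rule for (arch, osver) tuples: prefer an exact
    match, then any baseline with the same arch, then the first baseline.
    Assumes baseline_configs is non-empty."""
    if config in baseline_configs:
        return config
    same_arch = [b for b in baseline_configs if b[0] == config[0]]
    return (same_arch or baseline_configs)[0]


def diff_commits(baseline_runs, latest_runs):
    """baseline_runs, latest_runs: {(arch, osver): {test: outcome}}.

    Returns {frozenset of (baseline_config, latest_config) pairs: set of
    changes}, so each change appears exactly once, under the comparisons
    in which it occurred.
    """
    baseline_configs = sorted(baseline_runs)
    seen_in = defaultdict(set)  # change -> comparisons that showed it
    for config, latest in latest_runs.items():
        base_config = pick_baseline(config, baseline_configs)
        base = baseline_runs[base_config]
        for test in base.keys() | latest.keys():
            before = base.get(test, "MISSING")
            after = latest.get(test, "MISSING")
            if before != after:
                seen_in[f"{before}=>{after} {test}"].add((base_config, config))
    groups = defaultdict(set)
    for change, comparisons in seen_in.items():
        groups[frozenset(comparisons)].add(change)
    return dict(groups)


# Made-up data for illustration.
baseline = {("x86_64", "rhel-7"): {"t1": "PASS", "t2": "PASS"},
            ("x86_64", "fedora-32"): {"t1": "PASS", "t2": "PASS"}}
latest = {("x86_64", "fedora-32"): {"t1": "FAIL", "t2": "PASS"},
          ("x86_64", "rhel-6"): {"t1": "FAIL", "t2": "FAIL"}}
for comparisons, changes in diff_commits(baseline, latest).items():
    print(f"Found {len(changes)} changes for:")
    for base_cfg, cfg in sorted(comparisons):
        print(f"  {base_cfg} -> {cfg}")
    for change in sorted(changes):
        print(f"  * {change}")
```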

Because the test results are stored in compressed form in a Git repository, there is a noticeable delay between invoking an analysis script and receiving its results. An invocation of +list_runs, +list_commits, or +diff_runs takes on the order of 1 second to complete. The +diff_commits script must extract and compare multiple sets of test results, so an invocation takes on the order of 10 seconds.

Step 6: Analyzing the test results

When we look at a recent set of test results, it is not always clear which test failures are new and which test failures occurred repeatedly over the history of the project. If a test failure has occurred repeatedly across many sets of test results, it is helpful to know when the failure first appeared during the testing history of the project.

As an initial solution to filter out repeated test failures, I developed an analysis script named find_regressions. This script traverses the Git history of the project in chronological order and checks the test results for each revision to identify new changes in test results.

If a change already occurred at least once within a configurable number of previous commits (for example, within the last 10 prior commits), it is considered to be an already occurring change. Otherwise, it is considered to be a newly occurring change.

This analysis filters out nondeterministic test cases whose results frequently change back and forth between "pass" and "fail" outcomes. The final output of find_regressions lists the newly occurring changes associated with each commit, along with the number of times each change recurred after subsequent commits.
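The following is a minimal sketch of the windowed filter described above. It assumes that each commit's test result changes have already been computed as strings similar to those printed by diff_runs. The function name and data layout are hypothetical. Only the window rule and the recurrence count come from the description of find_regressions.

```python
from collections import Counter


def find_new_changes(history, window=10):
    """Report newly occurring test result changes per commit.

    history: list of (commit, set of change strings) in chronological order.
    A change is "newly occurring" at a commit unless the same change already
    appeared within the previous `window` commits. For each new change, the
    result also counts how many later commits showed that change again.
    """
    new = {}
    for i, (commit, changes) in enumerate(history):
        recent = set()
        for _, earlier in history[max(0, i - window):i]:
            recent |= earlier
        fresh = changes - recent
        if fresh:
            later = Counter(c for _, later_changes in history[i + 1:]
                            for c in later_changes if c in fresh)
            new[commit] = {c: later[c] for c in sorted(fresh)}
    return new


# Made-up history: flaky.exp flips back and forth, real.exp regresses once.
history = [
    ("c1", {"PASS=>FAIL flaky.exp"}),
    ("c2", {"FAIL=>PASS flaky.exp"}),
    ("c3", {"PASS=>FAIL flaky.exp", "PASS=>FAIL real.exp"}),
    ("c4", {"PASS=>FAIL real.exp"}),
]
for commit, found in find_new_changes(history).items():
    print(commit, found)
```

With the default window, the second PASS=>FAIL flip of flaky.exp at c3 is suppressed because the same change already occurred at c1. Only the real.exp change is reported as new at c3.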

For example, this HTML file produced by an invocation of find_regressions summarizes newly occurring regressions in the bpf.exp test case for the 25 most recent revisions in the SystemTap project.

Of course, an ideal goal for test-result analysis would be to precisely identify the test result changes caused by each commit or by each change in the test environment. Because of the nature of the SystemTap test suite, precisely identifying a cause for each change in the test results is a difficult problem. Filtering out repeated failures can be thought of as a first approximation towards solving this problem.

Step 7: Reporting the analysis in a readable format

The examples in the previous sections demonstrated how a Bunsen test results repository can be queried by logging into the test result server and invoking analysis scripts from the command line. However, this is not the most convenient way to examine test results. It would be preferable to access the test results remotely through a web interface.

The analysis scripts included with Bunsen have an option to generate their output in HTML format. In addition, the Bunsen toolkit includes a CGI script, bunsen-cgi.py, which accepts requests to run Bunsen analysis scripts and returns an HTML version of the analysis results.

For example, this HTML file was produced by an invocation of the list_commits script and summarizes the test results for the 25 most recent revisions in the SystemTap project.

When developing the HTML option for Bunsen analysis output, I opted to keep the format simple for two reasons. First, I wanted to avoid the development and maintenance overheads associated with a more complex interactive web console such as Grafana. Second, I wanted the HTML files produced by Bunsen to be self-contained and viewable without having to make additional requests to the test results server, so that analysis output could be saved to disk, shared over email, and attached to bug reports.
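Making the HTML self-contained mostly comes down to inlining everything a page needs. The following sketch uses a hypothetical helper that is not taken from bunsen-cgi.py. It renders a table with inline CSS and no scripts or external links, and it escapes every value with html.escape so that test output containing < or & displays correctly.

```python
import html


def _esc(value):
    """Escape any value for safe inclusion in HTML text."""
    return html.escape(str(value))


def render_table_page(title, header, rows):
    """Return a standalone HTML page: inline CSS, no scripts, no external URLs."""
    parts = [
        "<!DOCTYPE html>",
        '<html><head><meta charset="utf-8">',
        f"<title>{_esc(title)}</title>",
        "<style>table { border-collapse: collapse; }"
        " td, th { padding: 0 0.6em; font-family: monospace; }</style>",
        "</head><body>",
        f"<h1>{_esc(title)}</h1>",
        "<table>",
        "<tr>" + "".join(f"<th>{_esc(h)}</th>" for h in header) + "</tr>",
    ]
    for row in rows:
        parts.append("<tr>" + "".join(f"<td>{_esc(v)}</td>" for v in row) + "</tr>")
    parts.append("</table></body></html>")
    return "\n".join(parts)


page = render_table_page("Test runs for 1120422",
                         ["testrun", "pass_count", "fail_count", "osver"],
                         [["771bf86", 9976, 2617, "fedora-32"]])
print(page)
```

The resulting string can be saved to a file and opened offline. A CGI wrapper would write a Content-Type: text/html header and a blank line to standard output before printing it.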

Conclusion to Part 2

By carefully analyzing the requirements for testing the SystemTap project, I was able to eliminate a large portion of the manual effort required to produce and store test results. Along the way, I had to develop solutions for a number of difficulties.

First, I needed to test SystemTap across a large variety of Linux environments. To solve this problem, I used simple shell scripting and the libvirt framework’s extensive automation support to automate steps that previously had to be done manually to set up a SystemTap test machine.

Second, because of the large size of SystemTap’s test result log files, I needed a storage format with a significant compression factor. To solve this problem, I designed the Bunsen toolkit to take advantage of Git’s de-duplicated storage format. This format achieves a significant degree of compression at the cost of noticeable latency when querying the collected test results. In the future, latency could be reduced by adding a caching layer between the compressed Git repository and the analysis scripts that access it.
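One possible shape for such a caching layer is sketched below. It assumes that some function can read a file from a given Bunsen commit, for example by running git show COMMIT:PATH. A full Git commit ID always refers to the same immutable content, so results can be cached by commit ID without any invalidation logic. The names make_cached_loader and read_blob_from_git are hypothetical.

```python
import functools
import json
import subprocess


def make_cached_loader(read_blob, maxsize=256):
    """Wrap read_blob(commit_id, path) -> bytes with an in-memory LRU cache.

    Keys should be full commit IDs: an abbreviated ID could later become
    ambiguous, while a full ID always names the same immutable snapshot.
    """
    @functools.lru_cache(maxsize=maxsize)
    def load_json(commit_id, path):
        # Only the first request for a given (commit_id, path) reaches the
        # reader; later requests return the same parsed object, so callers
        # should treat the result as read-only.
        return json.loads(read_blob(commit_id, path))
    return load_json


def read_blob_from_git(commit_id, path, repo="."):
    """Example backend: `git show COMMIT:PATH` prints a file at that commit."""
    return subprocess.run(["git", "-C", repo, "show", f"{commit_id}:{path}"],
                          check=True, capture_output=True).stdout


load_testrun = make_cached_loader(read_blob_from_git)
```

An in-process cache like this only helps within one long-running process, such as a persistent web backend. Separate command-line invocations would need an on-disk cache keyed the same way.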

Third, because of the large volume of test results and the large number of nondeterministic test cases, even scrutinizing the collected test results for new failures is a time-consuming manual task. Rather than rework the entire SystemTap test suite to eliminate nondeterministic and environment-dependent test cases, I decided to develop a solution based on improved analysis of test results. In the future, I hope to improve my analysis scripts and find more effective ways to filter out and report new changes in a project’s test results.

The difficult problem of test result analysis is not unique to the SystemTap project. Many other open source programs have test suites that are extremely large and include test cases with unpredictable behavior. Reworking the test suites to remove these test cases would tie up a large amount of scarce developer time. Therefore, it would be worthwhile to develop tools to make it easier to analyze collected test results across a variety of projects. The test result storage and analysis functionality in the Bunsen toolkit is a first step in that direction.

Last updated: February 12, 2024