These days, microservices-based architectures are being implemented almost everywhere. A single business function might rely on several microservices, which generate lots of network traffic in the form of messages being passed around. If we can pass messages more efficiently by making each message smaller, we can use the same infrastructure to handle higher loads.

Protobuf (short for "protocol buffers") provides language- and platform-neutral mechanisms for serializing structured data for use in communications protocols, data storage, and more. gRPC is a modern, open source remote procedure call (RPC) framework that can run anywhere. Together, and unlike JSON, they provide a compact binary message format and first-class support for complex data structures, among other benefits.

Microservices environments require lots of communication between services, and for this to happen, services need to agree on a few things. They need to agree on an API for exchanging data, for example, POST (or PUT) to send messages and GET to receive them. They also need to agree on the format of the data (JSON). Clients calling the service need to write lots of boilerplate code to make the remote calls (frameworks!). Protobuf and gRPC provide a way to define the schema of the message (JSON cannot) and to generate the skeleton code for consuming a gRPC service (no frameworks required).

While JSON is a human-readable format that supports nested data structures, it has a few drawbacks: it has no schema, objects can get quite large, and it has no syntax for comments.

This article shows how gRPC and Protobuf can provide a solution to many of these limitations.

So what are gRPC and Protobuf?

gRPC is a modern, open source remote procedure call (RPC) framework that can run anywhere. It enables client and server applications to communicate transparently and makes it easier to build connected systems. gRPC is incubating in CNCF.

As a fun project, try building a streaming server with JSON over HTTP; then you will know what I am talking about. With gRPC, streaming is built in. See the gRPC documentation for more gRPC concepts. Somehow, gRPC reminds me of CORBA.

Protobuf is a data serialization tool. It lets you define fully typed schemas for messages and include documentation, as comments, in the message definition itself.

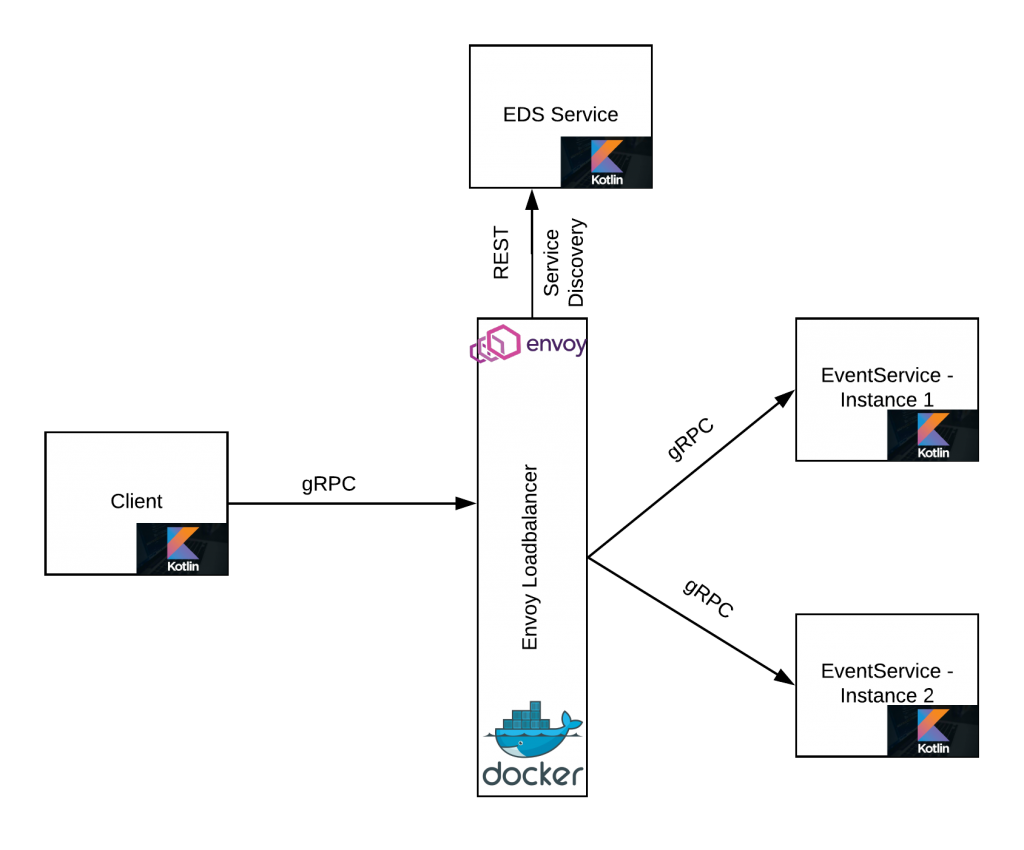

gRPC uses HTTP/2 with a persistent connection and multiplexing for better performance compared to services based on REST over HTTP/1.1. The persistent connection, however, creates a problem with layer 4 (L4) proxies: they balance load per connection, so every call multiplexed onto one long-lived connection lands on the same back end. We need a proxy that supports load balancing at layer 7 (L7). Envoy can proxy gRPC calls and load balance them across the back-end servers. Envoy also supports service discovery through an external endpoint discovery service (EDS), and I will show how to use that feature of Envoy, too.
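To see why a persistent connection defeats an L4 proxy, here is a small simulation in plain Kotlin. It is only a sketch: the back-end names are hypothetical, and the two functions model the balancing decision (once per connection versus once per request), not a real proxy.

```kotlin
// Hypothetical back-end addresses, for illustration only.
val backends = listOf("server-1:50051", "server-2:50052")

// L4 model: the proxy picks a back end once per TCP connection,
// so every request on that connection goes to the same server.
fun layer4(connections: Int, requestsPerConnection: Int): Map<String, Int> {
    val counts = backends.associateWith { 0 }.toMutableMap()
    for (c in 0 until connections) {
        val pinned = backends[c % backends.size]
        counts[pinned] = counts.getValue(pinned) + requestsPerConnection
    }
    return counts
}

// L7 model: the proxy picks a back end per request (per HTTP/2 stream),
// here in round-robin order.
fun layer7(connections: Int, requestsPerConnection: Int): Map<String, Int> {
    val counts = backends.associateWith { 0 }.toMutableMap()
    var next = 0
    repeat(connections * requestsPerConnection) {
        val target = backends[next++ % backends.size]
        counts[target] = counts.getValue(target) + 1
    }
    return counts
}

fun main() {
    // One gRPC client, one persistent connection, 20 calls.
    println("L4: ${layer4(1, 20)}")
    println("L7: ${layer7(1, 20)}")
}
```

With a single client connection, the L4 model sends all 20 calls to the first back end, while the L7 model splits them evenly between the two.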

What we will build

In this article, I am building a Kotlin-based gRPC service. I will load balance between multiple instances of my service using the Envoy proxy. I have also configured a simple REST service that provides the service discovery for the Envoy proxy. The basic architecture is as follows.

Set up the components

First, we need to define a Protobuf message that will serve as the contract between the client and the server (refer to event.proto for the complete file):

syntax = "proto3";

import "google/protobuf/empty.proto";

package event;

option java_package = "com.proto.event";
option java_multiple_files = true;

message Event {
  int32 event_id = 1;
  string event_name = 2;
  repeated string event_hosts = 3;
}

enum EVENT_TYPE {
  UNDECLARED = 0;
  BIRTHDAY = 1;
  MARRIAGE = 2;
}

message CreateEventResponse {
  string success = 1;
}

message AllEventsResponse {
  Event event = 1;
}

service EventsService {
  rpc CreateEvent(Event) returns (CreateEventResponse) {};
  rpc AllEvents(google.protobuf.Empty) returns (stream AllEventsResponse) {};
}

This message will then be used by the Gradle gRPC plugin to generate stubs. The client and server code will use these stubs. You can run Gradle's generateProto task to generate the stubs.
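To make the message-size argument concrete, the sketch below hand-encodes an Event in the Protobuf wire format using only the Kotlin standard library, and compares it with an equivalent JSON string. This is not how you would use Protobuf in practice (the generated stubs do the encoding for you); the helper names writeVarint, writeString, and encodeEvent are my own, and the sample values are made up.

```kotlin
import java.io.ByteArrayOutputStream

// Varint encoding: 7 bits of payload per byte, high bit set on every byte except the last.
fun writeVarint(out: ByteArrayOutputStream, value: Long) {
    var v = value
    while (true) {
        val low = (v and 0x7FL).toInt()
        v = v ushr 7
        if (v == 0L) {
            out.write(low)
            return
        }
        out.write(low or 0x80)
    }
}

// Length-delimited field (wire type 2): tag, byte length, then the UTF-8 bytes.
fun writeString(out: ByteArrayOutputStream, fieldNumber: Int, s: String) {
    val bytes = s.toByteArray(Charsets.UTF_8)
    writeVarint(out, ((fieldNumber shl 3) or 2).toLong())
    writeVarint(out, bytes.size.toLong())
    out.write(bytes, 0, bytes.size)
}

// Encodes the three fields of the Event message; proto3 omits default values.
fun encodeEvent(id: Int, name: String, hosts: List<String>): ByteArray {
    val out = ByteArrayOutputStream()
    if (id != 0) {
        writeVarint(out, ((1 shl 3) or 0).toLong()) // field 1, wire type 0 (varint)
        writeVarint(out, id.toLong())
    }
    if (name.isNotEmpty()) writeString(out, 2, name)
    hosts.forEach { writeString(out, 3, it) }
    return out.toByteArray()
}

fun main() {
    val binary = encodeEvent(7, "Event 7", listOf("alice"))
    val json = """{"event_id":7,"event_name":"Event 7","event_hosts":["alice"]}"""
    println("protobuf: ${binary.size} bytes, JSON: ${json.toByteArray().size} bytes")
}
```

For this sample event, the binary encoding takes 18 bytes against 61 bytes for the JSON text, mostly because field names are replaced by one-byte tags.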

Now it is time to write the server:

val eventServer = ServerBuilder.forPort(50051)
    .addService(EventsServiceImpl()) // refer to the server implementation
    .build()

eventServer.start()
println("Event Server is Running now!")

Runtime.getRuntime().addShutdownHook(Thread {
    eventServer.shutdown()
})

eventServer.awaitTermination()

Once the boilerplate code of the server is complete, we write the server's business logic, which prints a hardcoded message and returns a fixed response.

override fun createEvent(request: Event?, responseObserver: StreamObserver<CreateEventResponse>?) {
    println("Event Created ")
    responseObserver?.onNext(CreateEventResponse.newBuilder().setSuccess("true").build())
    responseObserver?.onCompleted()
}
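The contract also declares a server-streaming AllEvents call, which follows the same observer pattern: call onNext once per message, then onCompleted. The sketch below shows that shape in plain Kotlin so that it runs without the generated stubs. Observer is a stand-in I wrote to mirror the three methods of gRPC's StreamObserver, and EventRecord, InMemoryEvents, and CollectingObserver are hypothetical names; a real implementation would override allEvents in the service class and wrap each event in an AllEventsResponse.

```kotlin
// Stand-in with the same three methods as io.grpc.stub.StreamObserver,
// so this sketch compiles without gRPC on the classpath.
interface Observer<T> {
    fun onNext(value: T)
    fun onError(t: Throwable)
    fun onCompleted()
}

// Simplified stand-in for the generated Event message.
data class EventRecord(val eventId: Int, val eventName: String)

// Hypothetical service backed by an in-memory list.
class InMemoryEvents(private val stored: List<EventRecord>) {
    // Server streaming: emit one message per stored event, then signal completion.
    fun allEvents(responseObserver: Observer<EventRecord>) {
        try {
            stored.forEach { responseObserver.onNext(it) }
            responseObserver.onCompleted()
        } catch (e: Exception) {
            responseObserver.onError(e)
        }
    }
}

// Client-side stand-in that records what the stream delivered.
class CollectingObserver<T> : Observer<T> {
    val received = mutableListOf<T>()
    var completed = false
    var error: Throwable? = null
    override fun onNext(value: T) { received += value }
    override fun onError(t: Throwable) { error = t }
    override fun onCompleted() { completed = true }
}

fun main() {
    val observer = CollectingObserver<EventRecord>()
    val service = InMemoryEvents(listOf(EventRecord(1, "Event 1"), EventRecord(2, "Event 2")))
    service.allEvents(observer)
    println("received=${observer.received.size}, completed=${observer.completed}")
}
```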

Next, let's write a client to consume our events service:

fun main(args: Array<String>) {
    val eventsChannel = ManagedChannelBuilder.forAddress("10.0.0.112", 8080)
        .usePlaintext()
        .build()
    val eventServiceStub = EventsServiceGrpc.newBlockingStub(eventsChannel)
    for (i in 1..20) {
        eventServiceStub.createEvent(Event.newBuilder().setEventId(i).setEventName("Event $i").build())
    }
    eventsChannel.shutdown()
}

I copied the server code into another file and changed the port number to mimic multiple instances of our events service.

Envoy proxy configuration has three parts. All these settings are in envoy.yaml. Make sure you change the IP address of the EDS service according to your settings. Update the IP address of the service in the EDSServer.kt file.

Define a front-end service. This service will receive requests from the clients.

listeners:
  - name: envoy_listener
    address:
      socket_address: { address: 0.0.0.0, port_value: 8080 }
    filter_chains:
      - filters:
          - name: envoy.http_connection_manager
            config:
              stat_prefix: ingress_http
              codec_type: AUTO
              route_config:
                name: local_route
                virtual_hosts:
                  - name: local_service
                    domains: ["*"]
                    routes:
                      - match: { prefix: "/" }
                        route: { cluster: grpc_service }
              http_filters:
                - name: envoy.router

Define a back-end service (the name is grpc_service in the envoy.yaml file). The front-end service will load balance the calls to this set of servers. Note that this doesn't know about the location of the actual back-end service. The location of the back-end service (aka the service discovery) is provided via an EDS service. See the paragraph after the following code, which discusses defining the EDS endpoint.

- name: grpc_service
  connect_timeout: 5s
  lb_policy: ROUND_ROBIN
  http2_protocol_options: {}
  type: EDS
  eds_cluster_config:
    eds_config:
      api_config_source:
        api_type: REST
        cluster_names: [eds_cluster]
        refresh_delay: 5s

Optionally, define an EDS endpoint. (You can provide a fixed list of servers, too.) This is another service that will provide the list of back-end endpoints. This way, Envoy can dynamically adjust to the available servers. I have written this EDS service as a simple class.

- name: eds_cluster
  connect_timeout: 5s
  type: STATIC
  hosts: [{ socket_address: { address: 10.0.0.112, port_value: 7070 }}]
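To give an idea of what the EDS service has to return, here is a sketch that builds a discovery response body in plain Kotlin. It rests on several assumptions: that Envoy is using the v2 REST xDS API (which the configuration style above suggests), where it POSTs to /v2/discovery:endpoints and expects a ClusterLoadAssignment resource per cluster; the Endpoint class and edsResponse function are my own names; and the back-end ports are made up. Refer to EDSServer.kt for the actual implementation.

```kotlin
// Hypothetical holder for one back-end address.
data class Endpoint(val address: String, val port: Int)

// Builds the JSON body of a discovery response for one cluster.
// Assumes the v2 type URL; other Envoy API versions use a different one.
fun edsResponse(clusterName: String, endpoints: List<Endpoint>): String {
    val lbEndpoints = endpoints.joinToString(",") { e ->
        """{"endpoint":{"address":{"socket_address":{"address":"${e.address}","port_value":${e.port}}}}}"""
    }
    return """{"version_info":"v1","resources":[{"@type":"type.googleapis.com/envoy.api.v2.ClusterLoadAssignment","cluster_name":"$clusterName","endpoints":[{"lb_endpoints":[$lbEndpoints]}]}]}"""
}

fun main() {
    // Two instances of the events service; the ports here are assumptions.
    val body = edsResponse(
        "grpc_service",
        listOf(Endpoint("10.0.0.112", 50051), Endpoint("10.0.0.112", 50052))
    )
    println(body)
}
```

Because Envoy polls this endpoint every refresh_delay, changing the list the service returns is enough to add or remove back ends without restarting the proxy.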

Execution

Copy the project locally:

git clone https://github.com/masoodfaisal/grpc-example.git

Build the project using Gradle:

cd grpc-example
./gradlew generateProto
./gradlew build

Bring up the EDS server to provide the service discovery for the Envoy proxy:

cd grpc-example
./gradlew -PmainClass=com.faisal.eds.EDSServerKt execute

Initialize multiple instances of the service:

cd grpc-example
./gradlew -PmainClass=com.faisal.grpc.server.EventServerKt execute
./gradlew -PmainClass=com.faisal.grpc.server.EventServer2Kt execute

Run the Envoy proxy:

cd envoy-docker
docker build -t envoy:grpclb .
docker run -p 9090:9090 -p 8080:8080 envoy:grpclb

My client makes a call in a loop, which showcases that the load is distributed in a round-robin fashion.

./gradlew -PmainClass=com.faisal.grpc.client.EventClientKt execute

Conclusion

gRPC provides better performance, less boilerplate code to manage, and a strongly typed schema for your microservices. Other features of gRPC that are useful in the microservices world are retries, timeouts, and error handling. A particularly great article on gRPC is available on the CNCF website.

May your next service be in gRPC.

Last updated: June 6, 2023