Large language models can generate impressive language, but they still struggle to operate effectively within enterprise systems. To make these models useful, you need more than good prompts. You need a reliable way for models to find the right context, call the right tools, follow enterprise policies, and leave an auditable record of their actions.

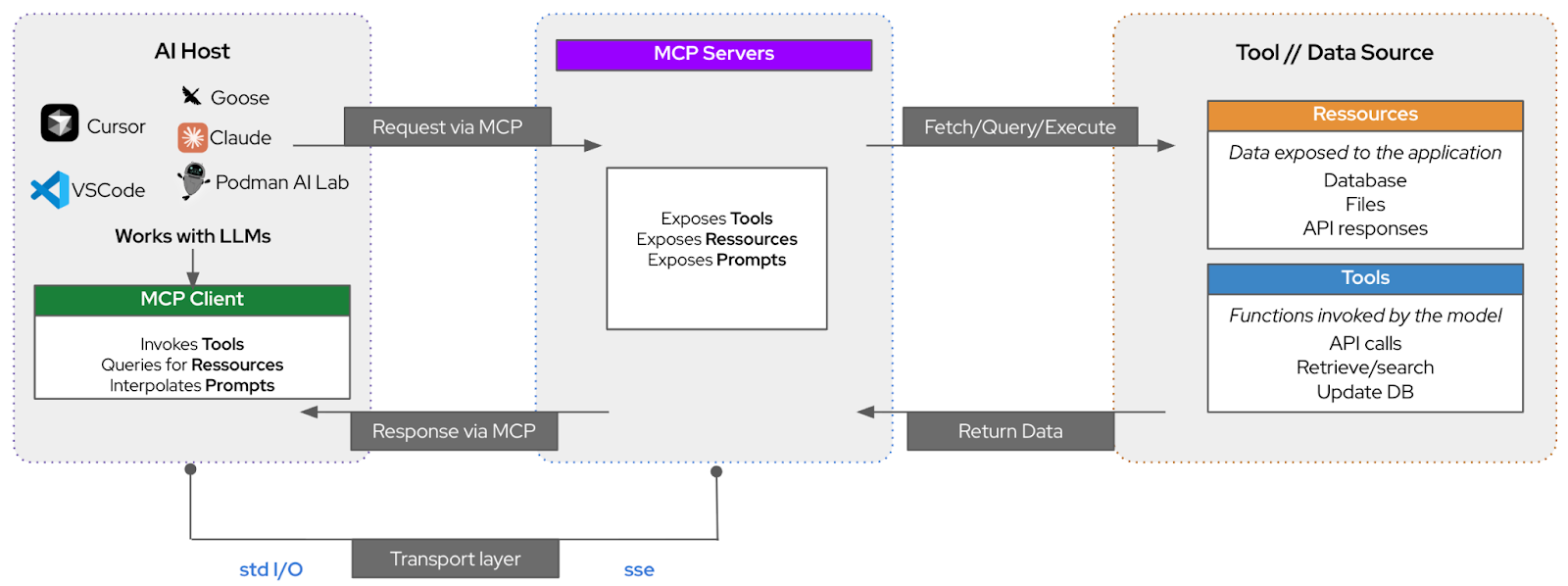

The Model Context Protocol (MCP) offers that foundation. MCP standardizes how models discover, select, and call tools (Figure 1). This helps developers move from simple chatbots to reliable applications that take action, without reinventing every integration from scratch.

The evolution of interacting with LLMs

Early LLM applications were simple. You asked a question, and the model responded based on the data it was trained on (Figure 2). If you typed "What is Red Hat?", the model generated an answer from patterns it learned during training, largely from public internet data. These responses reflected the model provider's training set, not your organization's unique knowledge.

That limitation quickly became obvious. Enterprise data, from design documents and Jira tickets to meeting transcripts and product wikis, lived outside the model's reach. Without that context, responses were generic and often incomplete. Developers began exploring ways to bring private knowledge into the model's reasoning process and context window (the amount of information it can "see" at once).

Chatting with your documents: RAG as a first step

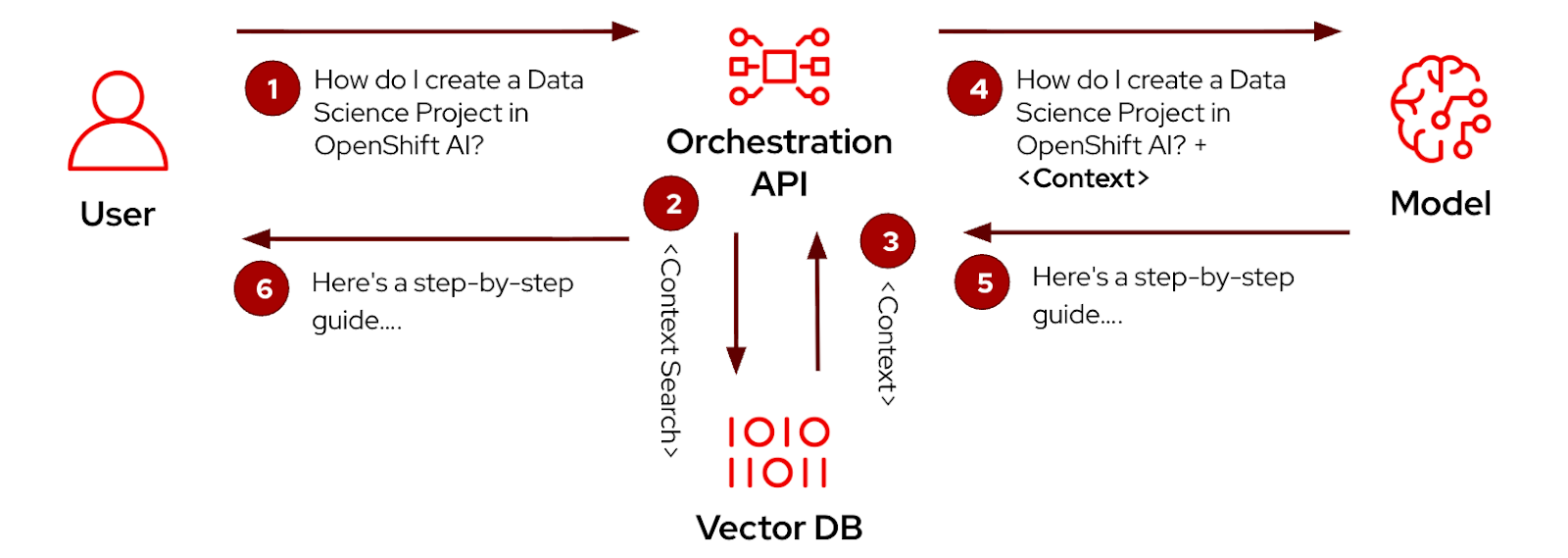

The first major breakthrough was retrieval-augmented generation (RAG). RAG pairs a model with a vector or graph database so that the application can run a semantic search and retrieve information relevant to a query.

Imagine asking, "How do I create a data science project in Red Hat OpenShift AI?" A RAG pipeline searches internal documentation, retrieves the top results, and passes them into the model's context window. The model then generates a precise, grounded answer.
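The retrieve-then-generate flow can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pipeline: the documents are hypothetical, and a bag-of-words vector with cosine similarity stands in for a real embedding model and vector database. `embed`, `retrieve`, and `build_prompt` are illustrative names.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents; a real pipeline would store embeddings in a vector database.
DOCS = [
    "To create a data science project in OpenShift AI, open the dashboard and select Data Science Projects.",
    "Workbenches provide Jupyter notebook environments inside a data science project.",
    "The cafeteria menu changes every Monday.",
]

def embed(text: str) -> Counter:
    """Toy embedding: a bag of lowercase words (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, passages: list[str]) -> str:
    """Place the retrieved passages in the context window ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

query = "How do I create a data science project in Red Hat OpenShift AI?"
top = retrieve(query, DOCS, k=2)
print(build_prompt(query, top))
```

The prompt that reaches the model contains only the two most relevant passages, which is how RAG grounds the answer without retraining the model.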

Figure 3 shows this pipeline in action. By supplementing what the model already knows with external knowledge, RAG makes answers much more accurate and up-to-date. Still, a basic RAG pipeline retrieves on every request, even for simple questions that need no additional context. As models gained reasoning ability, it became clear that they should decide when to fetch data and even when to act.

Beyond RAG: The rise of agentic AI

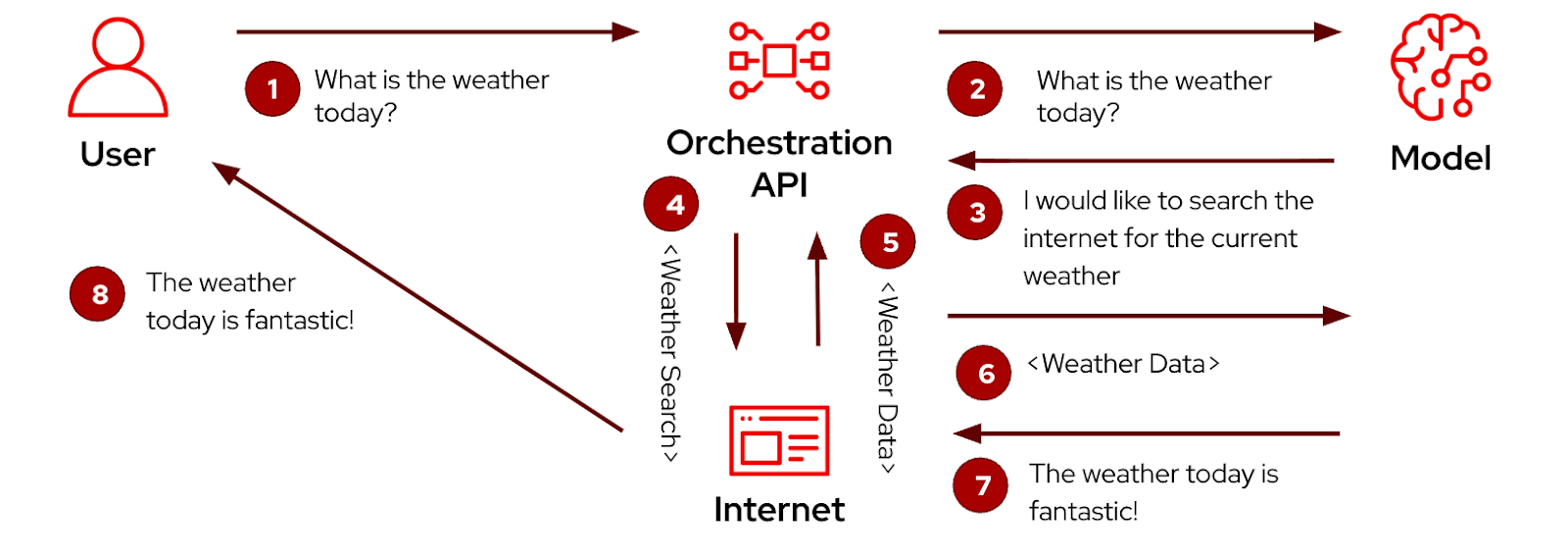

This shift gave rise to tool calling, where an LLM does not just read information but performs actions through APIs. Instead of just searching a document store, a model can now access external services to retrieve or modify data. For example, when a user asks "What is the weather today?", the model can call the AccuWeather API to fetch the current forecast, interpret the response, and return a clear summary. In the same way, it might open a pull request in GitHub or check the health of a Kubernetes cluster and post a summary of any issues to Slack.

Figure 4 illustrates this evolution. It shows how the model identifies available tools, selects the right one for the task, and performs the action. The LLM becomes context-aware, understanding both the question and the systems it can interact with. This marks the transition from simple text generation to agentic AI, systems that can reason, plan, and act on behalf of the user.
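The select-and-execute loop described above might look like the following minimal sketch. The model is replaced by a hypothetical `fake_model` function that returns a structured tool call, and `get_weather` and `check_cluster_health` return canned data rather than calling AccuWeather or Kubernetes. Real tool-calling APIs differ by model provider; this only shows the shape of the loop.

```python
import json

# Hypothetical tools returning canned data; real ones would call AccuWeather or a cluster API.
def get_weather(city: str) -> dict:
    return {"city": city, "forecast": "sunny", "high_c": 24}

def check_cluster_health(cluster: str) -> dict:
    return {"cluster": cluster, "unhealthy_pods": 0}

TOOLS: dict[str, dict] = {
    "get_weather": {"fn": get_weather, "description": "Current forecast for a city"},
    "check_cluster_health": {"fn": check_cluster_health, "description": "Pod health for a cluster"},
}

def fake_model(question: str, tool_descriptions: dict[str, str]) -> dict:
    """Stand-in for an LLM: a real model would read tool_descriptions and pick a tool itself."""
    if "weather" in question.lower():
        return {"tool": "get_weather", "arguments": {"city": "Raleigh"}}
    return {"tool": None, "answer": "I can answer that without a tool."}

def run_turn(question: str) -> str:
    descriptions = {name: spec["description"] for name, spec in TOOLS.items()}
    decision = fake_model(question, descriptions)
    if decision["tool"] is None:
        return decision["answer"]
    # Execute the selected tool with the model-supplied arguments.
    result = TOOLS[decision["tool"]]["fn"](**decision["arguments"])
    # In a real loop, this JSON goes back to the model, which writes the final summary.
    return f"Tool {decision['tool']} returned {json.dumps(result)}"

print(run_turn("What is the weather today?"))
```

Every piece here, from the tool registry to the argument format, is custom to this one application, which is exactly the kind of per-integration code that varies from team to team.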

However, this new flexibility introduced new challenges. Every organization implemented tool calling differently, writing custom code for each integration. This resulted in duplication, fragmentation, and a lack of shared standards. MCP addresses these issues by defining one consistent way for models to communicate with tools.

Introducing the Model Context Protocol (MCP)

MCP defines a clear way for models to call external tools. Instead of hard-coding logic for every service, you register an MCP server that exposes an interface the model can understand.

A client, typically embedded in an application that uses an LLM, sends a standardized request. The server executes the action against a system like GitHub, Slack, or Kubernetes. (Public MCP servers already exist for these systems.) The MCP layer coordinates communication between them. See Figure 5.
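Under the hood, MCP messages are JSON-RPC 2.0 requests, and the specification defines `tools/list` for discovery and `tools/call` for invocation. The sketch below handles just those two methods in-process so the request and response shapes are visible. It assumes a hypothetical `get_namespace_status` tool and skips the initialization handshake, the transports (stdio or HTTP), and the input validation that a real server, typically built with an official MCP SDK, would provide.

```python
import json

# Hypothetical tool exposed by this server, described with a JSON Schema for its input.
TOOLS = [
    {
        "name": "get_namespace_status",
        "description": "Report the status of a Kubernetes namespace",
        "inputSchema": {
            "type": "object",
            "properties": {"namespace": {"type": "string"}},
            "required": ["namespace"],
        },
    }
]

def call_tool(name: str, arguments: dict) -> dict:
    # Canned response; a real server would query the cluster API here.
    text = f"Namespace {arguments['namespace']} is Active"
    return {"content": [{"type": "text", "text": text}], "isError": False}

def error(req_id, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}})

def handle(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request to the matching MCP method."""
    req = json.loads(raw)
    if req["method"] == "tools/list":
        result = {"tools": TOOLS}
    elif req["method"] == "tools/call":
        params = req["params"]
        if params["name"] not in {t["name"] for t in TOOLS}:
            return error(req["id"], -32602, f"Unknown tool: {params['name']}")
        result = call_tool(params["name"], params.get("arguments", {}))
    else:
        return error(req["id"], -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})

# Client side: discover the available tools, then call one by name.
listing = json.loads(handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
tool_name = listing["result"]["tools"][0]["name"]
reply = json.loads(handle(json.dumps({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": tool_name, "arguments": {"namespace": "demo"}},
})))
print(reply["result"]["content"][0]["text"])
```

Because the server advertises an `inputSchema`, the client (and the model behind it) can construct valid arguments without custom integration code for each service.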

Think of MCP as a common language for model-to-tool interaction, much like TCP standardized network communication. Anthropic introduced the protocol in late 2024, and the ecosystem has expanded rapidly since then. There are now tens of thousands of community-built MCP servers that connect to data storage systems, communication platforms, productivity apps, and infrastructure tools. Clients such as Claude Desktop, ChatGPT, and many open source applications can register these servers and call them directly.

Making MCP enterprise-ready

The promise of MCP is clear, but for large companies to use it, they need more than just compatibility. They need trust, governance, and control.

Enterprises need to verify that the right people and models can call the right tools with the right permissions. They must track which MCP servers are running, what versions they use, and what actions they perform. They need automated scanning, signing, and certification to confirm that each server is secure and compliant. They must also be able to observe and audit tool calls in real time.
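Permission checks and audit trails can be pictured as one gate in front of every tool call. The minimal sketch below assumes a hypothetical role-to-tool `POLICY` map and a hypothetical `Gatekeeper` class; on a real platform these decisions would come from RBAC and OAuth rather than a hard-coded dictionary. It records allowed and denied calls alike, since both matter for an audit.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-tool policy; a real platform would derive this from RBAC and OAuth scopes.
POLICY = {
    "sre": {"get_namespace_status", "restart_deployment"},
    "analyst": {"get_namespace_status"},
}

@dataclass
class AuditEntry:
    user: str
    tool: str
    allowed: bool
    timestamp: str

@dataclass
class Gatekeeper:
    audit_log: list[AuditEntry] = field(default_factory=list)

    def authorize(self, user: str, roles: list[str], tool: str) -> bool:
        """Allow the call if any of the caller's roles grants the tool."""
        allowed = any(tool in POLICY.get(role, set()) for role in roles)
        # Record every decision, allowed or denied, for later audit.
        self.audit_log.append(
            AuditEntry(user, tool, allowed, datetime.now(timezone.utc).isoformat())
        )
        return allowed

gate = Gatekeeper()
print(gate.authorize("alice", ["analyst"], "restart_deployment"))  # False
print(gate.authorize("bob", ["sre"], "restart_deployment"))        # True
print(len(gate.audit_log))                                          # 2
```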

These needs shape Red Hat's approach to MCP within Red Hat OpenShift AI. Red Hat is extending the platform to include built-in identity and access management through role-based access control (RBAC) and OAuth, lifecycle and metadata management for every MCP server, and enhanced observability as a core capability. With these controls in place, MCP becomes more useful, safer, and ready for production environments.

MCP on Red Hat AI

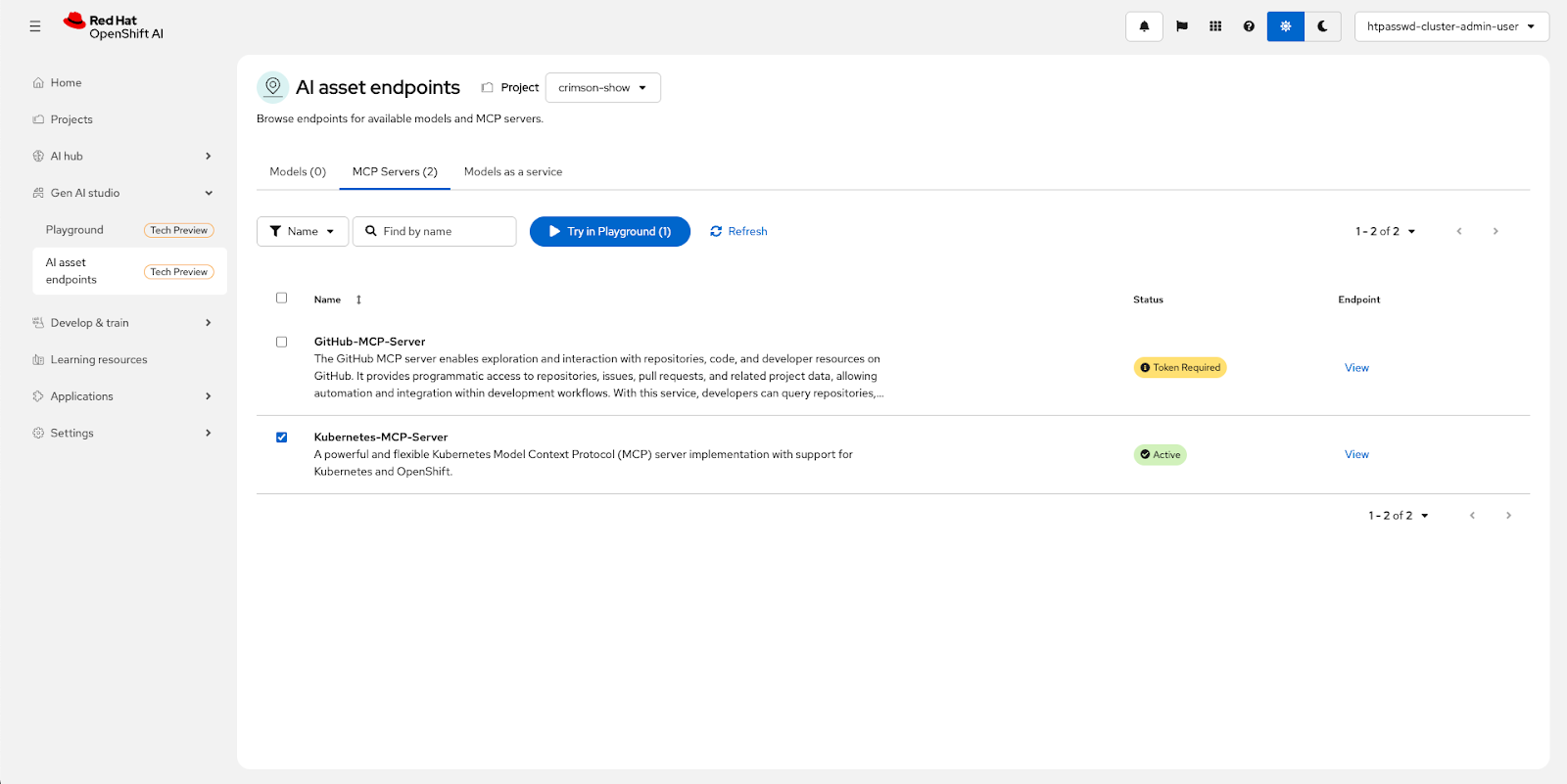

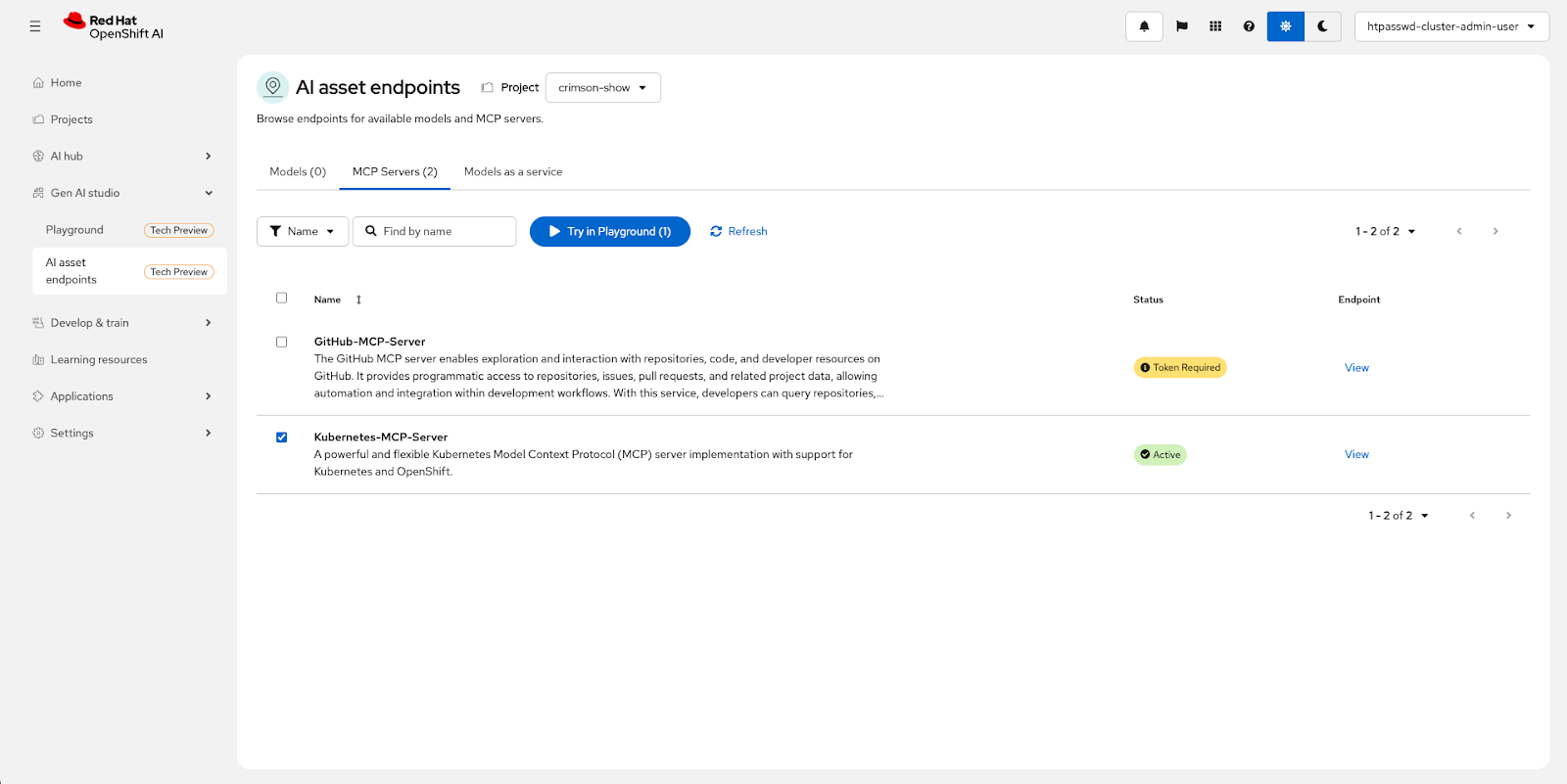

OpenShift AI 3 adds MCP support directly to the platform, giving AI engineers a fast way to connect models to tools. A guided creation flow walks users through spinning up an MCP server, containerizing it, and deploying it safely.

Once a server is deployed, teams can experiment with it safely in the Playground (Figure 6). They can run the server alongside a model, observe its behavior, and refine it before moving to production. Approved MCP servers then appear in the AI Assets listing, a centralized catalog where engineers can discover, reuse, and extend trusted integrations.

Because this capability integrates with Llama Stack, models can invoke MCP tools easily during inference. The result is an environment designed for instant experimentation today and certified, governed usage tomorrow.

Red Hat's end-to-end MCP lifecycle

Red Hat's vision for MCP in OpenShift AI extends beyond experimentation. It includes a complete lifecycle for developing, certifying, and running MCP servers.

Everything begins with the registry, a secure staging area where new servers are scanned, quarantined if needed, and enriched with metadata. After they are validated, servers move into the catalog, a curated set of certified integrations available to all AI engineers within the organization.
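The registry-to-catalog flow resembles a small state machine. In this sketch, the `Stage` values, the `ServerEntry` fields, the image names, and the single scan rule (flagging container images that are not pinned by digest) are all hypothetical stand-ins for real vulnerability scanning and certification checks.

```python
from dataclasses import dataclass, field
from enum import Enum

class Stage(Enum):
    REGISTRY = "registry"
    QUARANTINED = "quarantined"
    CATALOG = "catalog"

@dataclass
class ServerEntry:
    name: str
    version: str
    image: str
    stage: Stage = Stage.REGISTRY
    metadata: dict = field(default_factory=dict)

def scan(entry: ServerEntry) -> list[str]:
    """Hypothetical scanner: flag images that are not pinned by digest."""
    findings = []
    if "@sha256:" not in entry.image:
        findings.append("image not pinned by digest")
    return findings

def promote(entry: ServerEntry) -> ServerEntry:
    """Scan a registry entry, attach findings as metadata, then quarantine or catalog it."""
    findings = scan(entry)
    entry.metadata["scan_findings"] = findings
    entry.stage = Stage.QUARANTINED if findings else Stage.CATALOG
    return entry

ok = promote(ServerEntry("slack-mcp", "1.2.0", "quay.io/example/slack-mcp@sha256:abc123"))
bad = promote(ServerEntry("jira-mcp", "0.3.1", "quay.io/example/jira-mcp:latest"))
print(ok.stage, bad.stage)
```

Keeping the scan results in `metadata` means the catalog can later display why a server was accepted or held back.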

Lifecycle management ensures that each server is tracked and versioned. Updates are scanned and signed automatically, while deprecated versions are retired safely. At runtime, the MCP gateway ties it all together. It enforces policy and RBAC rules, applies rate limits and logging, and provides consistent observability for every request.
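A gateway that applies rate limits and logging can be sketched as a wrapper around the backend server. This example assumes a per-user token bucket, one common rate-limiting technique, and a hypothetical `Gateway` class; it is not the implementation of the OpenShift AI gateway. An injectable clock keeps the usage example deterministic.

```python
import logging
import time
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("mcp-gateway")

class TokenBucket:
    """Allow `rate` requests per second, with bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float]):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

class Gateway:
    """Hypothetical gateway: per-user rate limiting plus request logging in front of one backend."""

    def __init__(self, backend: Callable[[str, dict], dict], rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self.backend = backend
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.buckets: dict[str, TokenBucket] = {}

    def call(self, user: str, tool: str, args: dict) -> dict:
        if user not in self.buckets:
            self.buckets[user] = TokenBucket(self.rate, self.capacity, self.clock)
        if not self.buckets[user].allow():
            log.warning("rate limited user=%s tool=%s", user, tool)
            return {"content": [{"type": "text", "text": "rate limit exceeded"}], "isError": True}
        log.info("forwarding user=%s tool=%s", user, tool)
        return self.backend(tool, args)

# Usage with a fake clock so the example is deterministic.
now = [0.0]

def backend(tool: str, args: dict) -> dict:
    return {"content": [{"type": "text", "text": f"{tool} ok"}], "isError": False}

gw = Gateway(backend, rate=1.0, capacity=2, clock=lambda: now[0])
print([gw.call("alice", "get_namespace_status", {})["isError"] for _ in range(3)])
```

With a capacity of 2, the third call at the same instant is rejected; one second later the bucket has refilled one token and the next call goes through.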

Together, these components make MCP predictable and governable at scale.

A quick demo of the Model Context Protocol

To see MCP in action, check out the following video demonstration. Through a chat interface (Goose in this example), the model connects to both OpenShift and Slack MCP servers. It retrieves system information, detects anomalies, and posts updates to Slack.

The road ahead for MCP in OpenShift AI

In the near term, OpenShift AI 3.0 and future versions will expand MCP support. MCP servers will appear in the AI Assets catalog and Playground to simplify experimentation (Figure 7). Red Hat will also onboard partner and ISV MCP servers for early access while previewing the full MCP registry, catalog, and gateway stack. Each release will add richer metadata for certification status, security scans, and maturity levels.

Beyond that, Red Hat is building a longer-term vision for MCP-as-a-Service (MCPaaS), a managed layer for hosting, observing, and auditing MCP servers centrally. The AI Hub will unify models, agents, guardrails, and MCP servers as composable AI assets. The OpenShift DevHub plug-in will show developers which servers they can use directly within their workspace, and MCP for AI Coders will provide access in Red Hat OpenShift Dev Spaces or local environments.

Red Hat product teams are also developing their own MCP servers for OpenShift, Red Hat Ansible Automation Platform, Red Hat Enterprise Linux, and Red Hat Lightspeed, enabling new combinations of infrastructure automation and intelligent behavior. As these servers mature, developers will be able to orchestrate complex workflows such as a model coordinating updates across clusters or triggering an Ansible playbook entirely through MCP.
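A multi-step workflow over MCP reduces to a sequence of tool calls in which one step's output feeds the next. In the sketch below, `list_clusters` and `run_playbook` are hypothetical stubs standing in for OpenShift and Ansible Automation Platform servers, and the plan is hard-coded; in practice the model would choose the steps from the tools the servers advertise.

```python
from typing import Callable

# Hypothetical MCP tool stubs returning canned results.
def list_clusters(args: dict) -> dict:
    return {"isError": False, "clusters": ["east", "west"]}

def run_playbook(args: dict) -> dict:
    return {"isError": False, "status": f"playbook {args['playbook']} ran on {args['cluster']}"}

TOOLS: dict[str, Callable[[dict], dict]] = {
    "list_clusters": list_clusters,
    "run_playbook": run_playbook,
}

def orchestrate(playbook: str) -> list[str]:
    """Fan one playbook out to every cluster, stopping at the first failed tool call."""
    clusters = TOOLS["list_clusters"]({})["clusters"]
    statuses = []
    for cluster in clusters:
        result = TOOLS["run_playbook"]({"playbook": playbook, "cluster": cluster})
        if result["isError"]:
            break
        statuses.append(result["status"])
    return statuses

print(orchestrate("patch-nodes.yml"))
```

Stopping at the first failure is one conservative choice for infrastructure changes; a real workflow might instead roll back or ask a human to confirm before continuing.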

Building the future of agentic AI

As this brief history of LLM application design shows, MCP marks a major shift in how we connect AI models to existing systems. It lets developers build AI applications that act with context, enforce policy, and integrate safely into enterprise environments.

By integrating the Model Context Protocol into OpenShift AI, Red Hat is giving teams a complete foundation for agentic AI, one where experimentation, governance, and scalability coexist. Developers can test an MCP server in the Playground, publish it to the catalog, and deploy it with confidence.

As the ecosystem grows, expect MCP to become as fundamental to AI development as containers are to cloud infrastructure, a standard layer that makes intelligent automation predictable, secure, and reusable.

Last updated: January 13, 2026