Tiered storage was marked as production-ready with the Apache Kafka 3.9.0 release. This feature is a game changer for long-term data retention and cost efficiency. It allows you to scale compute and storage resources independently, provides better client isolation, and enables faster maintenance of your Kafka clusters. We covered the full implementation details in the article Kafka tiered storage deep dive.

On the client side, nothing changes when consuming messages from remote segments, but there are still challenges when reading remote data. This article describes 2 related problems, their solutions, and some tuning advice.

Key configurations

There are 4 important configurations to understand before diving into the details, shown in Table 1.

| Configuration | Type | Component | Default |
|---|---|---|---|
| message.max.bytes | Hard | Broker | 1048588 (~1 MiB) |
| max.message.bytes | Hard | Topic | 1048588 (~1 MiB) |
| fetch.max.bytes | Soft | Consumer | 52428800 (50 MiB) |
| max.partition.fetch.bytes | Soft | Consumer | 1048576 (1 MiB) |

The broker configuration message.max.bytes can be overridden by the topic configuration max.message.bytes. These settings establish a hard limit on the size of a record batch that a Kafka broker accepts from producers, measured after compression when compression is enabled. If the limit is exceeded, the broker rejects the batch and the producer receives a RecordTooLargeException.

The consumer configuration fetch.max.bytes sets a soft limit on the number of bytes that a Kafka broker should return for a single fetch request. This caps the memory and network bandwidth that any one fetch response can consume.

The consumer configuration max.partition.fetch.bytes sets a soft limit on the number of bytes that a Kafka broker should return for each partition in a single fetch request. This configuration, along with partition shuffling, prevents partition starvation.

If the consumer issues N parallel fetch requests, the memory consumption should not exceed min(N * fetch.max.bytes, max.partition.fetch.bytes * num_partitions).
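As a quick sanity check, the bound above can be written as a small helper. This is a minimal sketch: the function name and the workload numbers (3 parallel requests, 100 assigned partitions) are hypothetical, and it does not account for the oversized first batch exception described next.

```python
def max_fetch_memory(parallel_requests: int,
                     fetch_max_bytes: int,
                     max_partition_fetch_bytes: int,
                     num_partitions: int) -> int:
    """Upper bound on fetched bytes held by the consumer, per the formula above."""
    return min(parallel_requests * fetch_max_bytes,
               max_partition_fetch_bytes * num_partitions)


# Default limits from Table 1, applied to a hypothetical workload.
bound = max_fetch_memory(parallel_requests=3,
                         fetch_max_bytes=52_428_800,
                         max_partition_fetch_bytes=1_048_576,
                         num_partitions=100)
print(bound)  # 104857600 bytes (100 MiB): the per-partition term is the smaller one
```

With these numbers the per-partition term wins. With only 10 assigned partitions, the bound would drop to 10 MiB.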

Both max.partition.fetch.bytes and fetch.max.bytes limits can be exceeded when the first batch in the first non-empty partition is larger than the configured value. In this case, the batch is returned to ensure that the consumer can make progress.

Configuring max.message.bytes <= fetch.max.bytes prevents oversized fetch responses.

Fetch requests

Let's see how these configurations are used in a few fetch request examples.

Respecting the limit

This example demonstrates how a Kafka consumer retrieves data from multiple partitions while respecting the configured byte limits. See Figure 1.

Configuration:

fetch.max.bytes=1000
max.partition.fetch.bytes=800
max.message.bytes=1000

The consumer sends a fetch request targeting 2 partitions. The broker responds with a total of 1,000 bytes distributed as follows:

- partition A: 800 bytes (hitting the per-partition limit)

- partition B: 200 bytes (limited by remaining fetch budget)

Even though partition B could return up to 800 bytes (its per-partition limit), it is restricted to 200 bytes because that is all that remains of the total fetch budget (1,000 bytes) after partition A's 800-byte contribution.

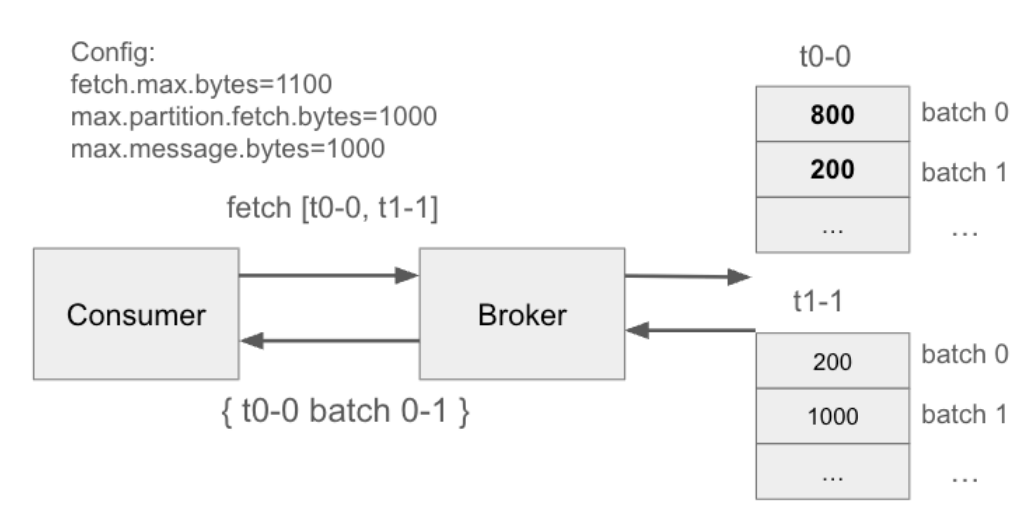

Skipping partitions

This example demonstrates how a Kafka consumer might skip entire partitions when individual message batches are too large to fit within the remaining fetch budget. See Figure 2.

Configuration:

fetch.max.bytes=1100
max.partition.fetch.bytes=1000
max.message.bytes=1000

The consumer sends a fetch request targeting 2 partitions. The broker processes them in this order:

- Partition A: returns 1,000 bytes of data

- Partition B: gets skipped entirely

After partition A contributes 1,000 bytes, only 100 bytes remain within the total fetch budget (1,100 - 1,000 = 100). However, the next available message batch in partition B is 200 bytes in size. Because this batch doesn't fit within the remaining 100-byte budget, the entire partition is skipped.

Kafka fetches data in complete message batches, not individual bytes. If a batch is too large for the remaining fetch budget, the entire partition is skipped for that fetch request, even if smaller individual messages within the batch could theoretically fit.

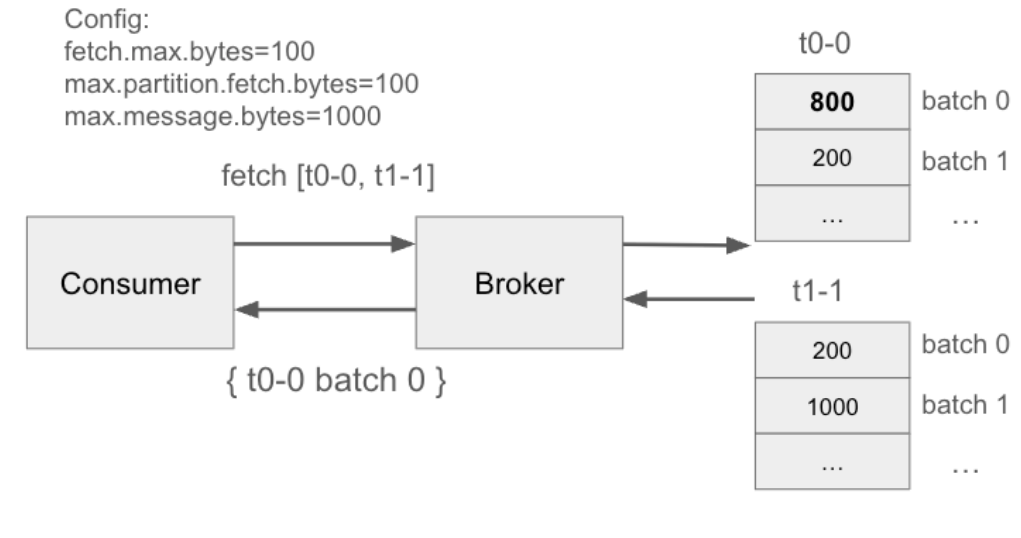

Exceeding the limit

This example demonstrates how a Kafka consumer can exceed its configured fetch limit when a single message batch is larger than the total fetch budget. See Figure 3.

Configuration:

fetch.max.bytes=100
max.partition.fetch.bytes=100
max.message.bytes=1000

The consumer sends a fetch request targeting 2 partitions. The broker processes them as follows:

- partition A: returns 800 bytes of data (exceeding both limits)

- partition B: gets skipped entirely

When the broker encounters a message batch in partition A that is 800 bytes, it cannot split or truncate the batch to fit within the 100-byte limits. Instead, it returns the complete batch to ensure that the consumer can make progress, even though this violates both the per-partition limit (100 bytes) and the total fetch limit (100 bytes).

Because the fetch response has already exceeded the total fetch.max.bytes limit due to partition A's large batch, partition B is skipped entirely to prevent further limit violations.

Kafka prioritizes consumer progress over strict byte limits. When a single batch exceeds the configured limits, the broker returns the complete batch, but skips subsequent partitions to minimize the extent of the limit violation.

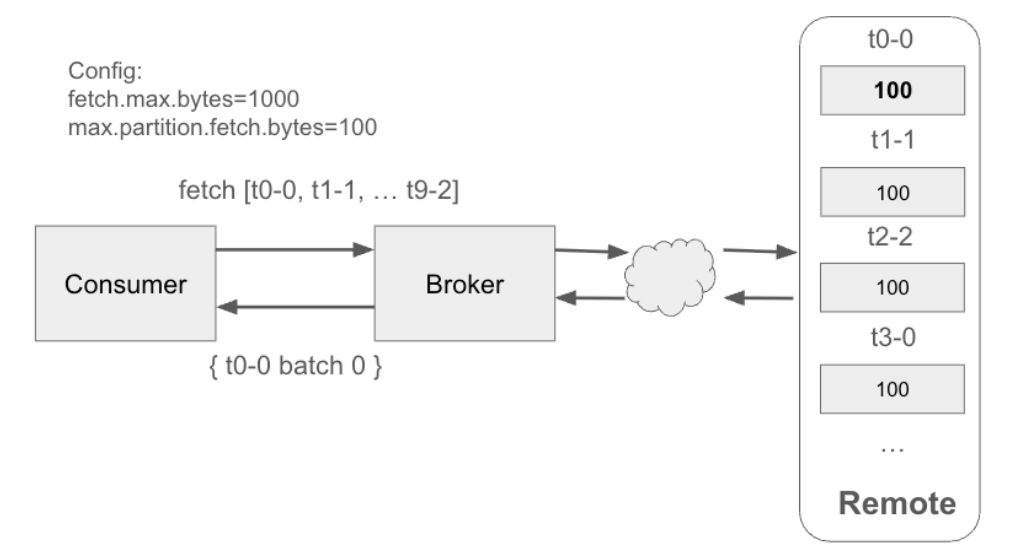
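The three examples above can be reproduced with a small model of the budget logic. This is a simplified sketch of the behavior described in this article, not the broker's actual code: the function name is hypothetical, and the individual batch sizes are made up, except for the 200-byte and 800-byte batches taken from the examples.

```python
def simulate_fetch(partitions, fetch_max_bytes, max_partition_fetch_bytes):
    """Return the bytes served per partition for one fetch request.

    `partitions` maps a partition name to the sizes of its pending batches,
    listed in the order the broker processes them.
    """
    response = {}
    total = 0
    for name, batches in partitions.items():
        # A partition can use its own limit or what is left of the total budget.
        budget = min(max_partition_fetch_bytes, fetch_max_bytes - total)
        served = 0
        for size in batches:
            if served + size <= budget:
                served += size  # whole batch fits
            elif total == 0 and served == 0:
                # Oversized first batch of the first non-empty partition:
                # returned whole so that the consumer can make progress.
                served = size
                break
            else:
                break  # batches are never split
        response[name] = served
        total += served
    return response


# Respecting the limit
print(simulate_fetch({"A": [400, 400, 400], "B": [100, 100, 100]}, 1000, 800))
# {'A': 800, 'B': 200}

# Skipping partitions
print(simulate_fetch({"A": [500, 500, 500], "B": [200, 200]}, 1100, 1000))
# {'A': 1000, 'B': 0}

# Exceeding the limit
print(simulate_fetch({"A": [800], "B": [50]}, 100, 100))
# {'A': 800, 'B': 0}
```

The model treats a batch as indivisible, which is why partition B gets nothing in the second example even though 100 bytes of budget remain.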

Sequential remote fetches problem

When tiered storage is enabled, each fetch request can only handle a single remote storage partition (KAFKA-14915).

When consuming from multiple partitions with tiered storage enabled, the consumer must issue separate fetch requests for each remote partition. In contrast, data on a local disk can be retrieved by combining multiple partitions into a single fetch request. See Figure 4.

Consider a scenario where each target partition holds max.partition.fetch.bytes (100 bytes) of data and a consumer is processing data from 10 different partitions. If the data is in local storage, the consumer can get messages from all partitions in each fetch request. If that same data is in remote storage, the consumer needs to make 10 fetch requests, one for each partition.

In this case, the end-to-end latency is the sum of all individual fetch latencies. This creates a significant bottleneck for consumers processing topics with many partitions where the majority of data has been tiered to remote storage.

The following logs show 2 fetch requests handling 2 remote partitions sequentially:

[2025-07-07 18:22:14,189] DEBUG Reading records from remote storage for topic partition t0-0 (org.apache.kafka.server.log.remote.storage.RemoteLogReader)

[2025-07-07 18:22:14,190] DEBUG Finished reading records from remote storage for topic partition t0-0 (org.apache.kafka.server.log.remote.storage.RemoteLogReader)

...

[2025-07-07 18:22:14,195] DEBUG Reading records from remote storage for topic partition t1-1 (org.apache.kafka.server.log.remote.storage.RemoteLogReader)

[2025-07-07 18:22:14,196] DEBUG Finished reading records from remote storage for topic partition t1-1 (org.apache.kafka.server.log.remote.storage.RemoteLogReader)

This problem can lead to:

- Increased network latency: More round trips between the consumer and the broker.

- Reduced throughput: The consumer spends more time waiting for multiple individual responses rather than processing data efficiently.

- Higher broker load: The broker has to handle a larger number of smaller fetch requests.

Applied solution

In Kafka 4.2.0, the broker will be able to run multiple RemoteLogReader tasks for each fetch request. The following logs show a single fetch request handling 4 remote partitions in parallel:

[2025-07-07 18:14:29,630] DEBUG Reading records from remote storage for topic partition dUxC379pR7Ge9sxMOyd_nw:t0-1 (org.apache.kafka.server.log.remote.storage.RemoteLogReader)

[2025-07-07 18:14:29,630] DEBUG Reading records from remote storage for topic partition gj-shIPmQfagA88kQoZulg:t1-0 (org.apache.kafka.server.log.remote.storage.RemoteLogReader)

[2025-07-07 18:14:29,630] DEBUG Reading records from remote storage for topic partition gj-shIPmQfagA88kQoZulg:t1-1 (org.apache.kafka.server.log.remote.storage.RemoteLogReader)

[2025-07-07 18:14:29,630] DEBUG Reading records from remote storage for topic partition dUxC379pR7Ge9sxMOyd_nw:t0-0 (org.apache.kafka.server.log.remote.storage.RemoteLogReader)

...

[2025-07-07 18:14:29,631] DEBUG Finished reading records from remote storage for topic partition dUxC379pR7Ge9sxMOyd_nw:t0-1 (org.apache.kafka.server.log.remote.storage.RemoteLogReader)

[2025-07-07 18:14:29,631] DEBUG Finished reading records from remote storage for topic partition gj-shIPmQfagA88kQoZulg:t1-1 (org.apache.kafka.server.log.remote.storage.RemoteLogReader)

[2025-07-07 18:14:29,631] DEBUG Finished reading records from remote storage for topic partition dUxC379pR7Ge9sxMOyd_nw:t0-0 (org.apache.kafka.server.log.remote.storage.RemoteLogReader)

[2025-07-07 18:14:29,631] DEBUG Finished reading records from remote storage for topic partition gj-shIPmQfagA88kQoZulg:t1-0 (org.apache.kafka.server.log.remote.storage.RemoteLogReader)

Each remote fetch creates an asynchronous reader thread from a pool of size remote.log.reader.threads. When this pool is exhausted, additional reads return empty data.

It is worth mentioning that the consumer will only get a reply after all remote read tasks have been completed, whether they succeed, encounter errors, or time out when remote.fetch.max.wait.ms is exceeded.
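To get a feel for the difference, the two behaviors can be compared with a back-of-the-envelope latency model. This is a rough sketch under stated assumptions: the function names, the per-partition read times, and the 500 ms timeout value are all hypothetical, the reader pool is assumed to have a free thread for every read, and retries after a timeout are not modeled.

```python
def sequential_fetch_latency_ms(read_latencies_ms, remote_fetch_max_wait_ms):
    """One remote partition per fetch request: the individual waits add up."""
    return sum(min(latency, remote_fetch_max_wait_ms)
               for latency in read_latencies_ms)


def parallel_fetch_latency_ms(read_latencies_ms, remote_fetch_max_wait_ms):
    """All remote reads in one fetch request: the reply waits for the
    slowest read, or for the timeout, whichever comes first."""
    if not read_latencies_ms:
        return 0
    return min(max(read_latencies_ms), remote_fetch_max_wait_ms)


# Hypothetical remote read times for 10 partitions, in milliseconds.
latencies = [40, 55, 35, 60, 45, 50, 30, 65, 50, 70]

print(sequential_fetch_latency_ms(latencies, 500))  # 500
print(parallel_fetch_latency_ms(latencies, 500))    # 70
```

In this model the parallel case is bounded by the slowest remote read rather than by the number of partitions, which is where the improvement comes from.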

Until this fix is released, we recommend the following workaround:

- Increase max.partition.fetch.bytes to allow remote partitions to return larger data payloads per fetch request.

- Adjust fetch.max.bytes to accommodate the increased per-partition limits.

- Increase remote.fetch.max.wait.ms to reduce the number of remote fetches that time out. When a remote fetch times out, the consumer must retry the entire request. This is particularly important when the RemoteStorageManager lacks caching or experiences cache evictions, because in these scenarios the remote data retrieval has to restart from scratch.

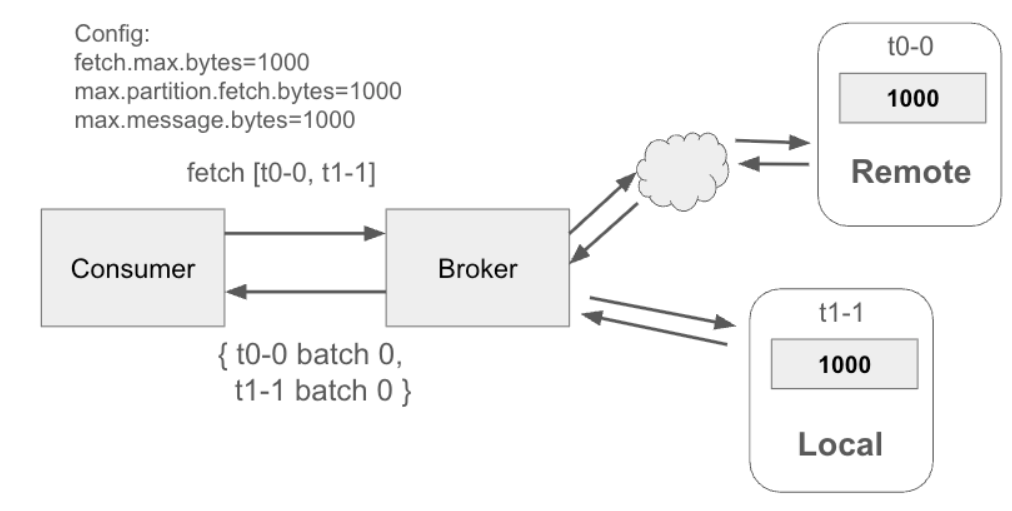

Broken fetch limit problem

When tiered storage is enabled, remote fetches can significantly exceed the fetch.max.bytes limit (KAFKA-19462).

Consider consuming from 2 partitions, where the data to fetch is on local disk for one partition and in remote storage for the other. Even with fetch.max.bytes=1000 and max.message.bytes=1000, the returned data size is 2,000 bytes, which is double the configured limit of 1,000 bytes. See Figure 5.

The core of the problem lies in how the remote fetch interacts with the Kafka broker's original fetch logic. When fetching from local disks, the broker has precise control over the amount of data it sends back based on fetch.max.bytes and max.partition.fetch.bytes configurations.

In contrast, when fetching from remote storage, the broker doesn't know in advance how much data is available. Querying the remote metadata log would introduce too much latency, so the broker simply does not count remote bytes against the fetch budget, which causes this problem.

This problem can lead to:

- Out-of-Memory (OOM) errors: If a consumer is configured with a relatively small fetch.max.bytes value, but a remote fetch returns a much larger batch of records, the consumer might attempt to allocate more memory than it has available, which can lead to crashes.

- Unpredictable resource consumption: It becomes difficult to reason about and provision resources for consumers if their memory footprint can unexpectedly spike.

- Network congestion: Large, unexpected fetches can overwhelm network links, impacting other services.

However, the memory consumption remains bounded. Because of the sequential remote fetches problem described above, each fetch request includes at most one remote partition, so a response carries at most fetch.max.bytes + max.partition.fetch.bytes of data.

Applied solution

To avoid exceeding the fetch size limit, the broker now assumes that each remote storage read task returns max.partition.fetch.bytes of data. This assumption is reasonable because remote storage holds older data, so in most cases there is enough fetchable data to fill the per-partition limit.
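The difference between the two accounting strategies can be illustrated with a simplified model. This is a sketch of the idea only, not the broker's implementation: the function name and partition names are hypothetical, max.partition.fetch.bytes=1000 is an assumed value, and the model works at byte granularity instead of whole batches.

```python
def simulate_mixed_fetch(partitions, fetch_max_bytes, max_partition_fetch_bytes,
                         account_for_remote):
    """Return the bytes served per partition for one fetch request.

    `partitions` is a list of (name, location, available_bytes) tuples,
    where location is "local" or "remote".
    """
    remaining = fetch_max_bytes
    response = {}
    for name, location, available in partitions:
        if location == "remote" and not account_for_remote:
            # Old behavior: the remote read is sized by the per-partition
            # limit only, and its bytes are never deducted from the budget.
            response[name] = min(available, max_partition_fetch_bytes)
            continue
        limit = min(max_partition_fetch_bytes, max(remaining, 0))
        response[name] = min(available, limit)
        if location == "remote":
            # New behavior: reserve the full per-partition limit up front,
            # because the real size is unknown until the read completes.
            remaining -= max_partition_fetch_bytes
        else:
            remaining -= response[name]
    return response


partitions = [("t0-0", "local", 1000), ("t1-0", "remote", 1000)]

before = simulate_mixed_fetch(partitions, 1000, 1000, account_for_remote=False)
after = simulate_mixed_fetch(partitions, 1000, 1000, account_for_remote=True)

print(sum(before.values()))  # 2000, double the fetch.max.bytes limit
print(sum(after.values()))   # 1000, within the limit
```

Reserving the full per-partition limit is conservative: if a remote read returns less than max.partition.fetch.bytes, part of the budget goes unused for that request.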

This fix will be released in Kafka 4.2.0 along with the fix for the sequential remote fetches problem to avoid unpredictable memory usage when multiple read tasks run in parallel. Until this fix is released, we recommend configuring fetch.max.bytes and max.partition.fetch.bytes appropriately based on your available system resources.

Summary

Kafka's tiered storage feature delivers compelling benefits for long-term data retention and cost optimization by seamlessly moving older data to cheaper remote storage. But this powerful capability comes with tradeoffs. Consumers accessing remote data can encounter performance bottlenecks that don't exist with local storage.

This article explored 2 critical problems that impact remote data consumption and showed how the improvements coming in Kafka 4.2.0 address them. For teams running earlier versions, we provided practical workarounds to minimize the impact.

Tiered storage represents a major evolution in Kafka's architecture, and the community continues refining this feature with each release. As adoption grows, real-world feedback becomes essential for identifying edge cases and optimization opportunities. Whether you're evaluating tiered storage or already running it in production, sharing your experiences helps drive improvements that benefit the entire Kafka ecosystem.