Red Hat Quay.io was the first private container registry on the internet and began serving images back in 2013. Today, it hosts millions of images for hundreds of thousands of critical customer workloads, including Red Hat’s own product catalog.

One of the marquee features of Quay.io is free security scanning for any image that we host. This happens automatically as soon as you push an image to Quay.io. Not only does it scan your image at upload time, it also provides an up-to-date view of your vulnerabilities, no matter how long ago you pushed it. There is no need to ask Quay.io to rescan your image—that information is available whenever you request it.

This is critical, as container images typically only gather more and more vulnerabilities as they age. An old container image isn’t always a bad container image (especially if it still powers your application), but more than likely, plenty of vulnerabilities have been discovered in its libraries and base OS since you first built it. Figure 1 shows a sample security report with hundreds of vulnerabilities detected.

The Security Report on Quay.io provides this up-to-date view of the vulnerabilities we’ve discovered in your image. It also reports on recent vulnerabilities; this is important, as hundreds of new vulnerabilities are documented every day.

But how does all that work? And how can we provide this information so quickly for literally any image out of millions?

Introducing Clair

Clair is the open source project that powers the vulnerability detection behind Quay.io. Clair was designed to inspect container image contents and then rapidly match those contents against known vulnerability information. "Scanning" all of Quay.io in real time would be impractical due to the sheer number of images and vulnerabilities, but Clair can give real-time vulnerability results in an efficient manner by taking advantage of a few guarantees container images give us.

Container images are immutable. This means that once Clair has inspected an image’s contents, it is guaranteed that those contents will never change. Of course, a user might push new versions of an image, but from Clair’s perspective each of those images is distinct (identified by a manifest digest). So once Clair has gone through an image’s contents, it never needs to go through them again.
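To make the idea of a manifest digest concrete, here is a minimal sketch of content addressing. The manifest contents and the `manifest_digest` helper are made up for illustration; the only assumption carried over from real registries is the `sha256:<hex>` form of a digest.

```python
import hashlib
import json


def manifest_digest(manifest_bytes: bytes) -> str:
    """Return a content-addressed identifier in the 'sha256:<hex>' form."""
    return "sha256:" + hashlib.sha256(manifest_bytes).hexdigest()


# Two hypothetical manifests pushed under the same image name.
# They differ only in their last layer.
manifest_v1 = json.dumps({"layers": ["sha256:aaa", "sha256:bbb"]}, sort_keys=True).encode()
manifest_v2 = json.dumps({"layers": ["sha256:aaa", "sha256:ccc"]}, sort_keys=True).encode()

digest_v1 = manifest_digest(manifest_v1)
digest_v2 = manifest_digest(manifest_v2)

# Different content always yields a different identifier, and the same
# content always yields the same one, so a digest can safely be used as
# a permanent key for whatever was learned about that content.
print(digest_v1 != digest_v2)
print(manifest_digest(manifest_v1) == digest_v1)
```

Because the identifier is derived from the bytes themselves, "the image changed" and "the digest changed" are the same statement, which is what lets a scanner treat previously inspected content as settled.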

Container images are made of individual layers of content. Each layer is a single tarball that can be uniquely identified by its SHA digest. This makes the inspection process simpler because we can now index each layer independently. This greatly reduces the problem space, as we can build a data structure called an IndexReport, which contains all of the package and library data found in all the layers an image contains, as shown in Figure 2.

Lastly, image layers are usually shared across images. This further reduces our problem space when inspecting an image: chances are we’ve already seen some (or most) of its layers (Figure 3). Since we know they are immutable and uniquely identifiable, we can avoid re-indexing them. This helps greatly when a development team’s CI pipelines are producing repeated copies of an application and only the topmost layers are changing. Clair can devote time to indexing only the layers it hasn’t yet seen.
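The effect of layer sharing can be sketched with a small cache keyed by layer digest. This is an illustration only, not Clair’s implementation: `LayerIndexer`, `scan_layer`, and the layer names below are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class LayerIndexer:
    """Indexes each layer at most once and remembers the result by digest."""

    scan_layer: Callable[[str], List[str]]  # hypothetical: digest -> package names
    cache: Dict[str, List[str]] = field(default_factory=dict)
    scans_performed: int = 0

    def index_layer(self, digest: str) -> List[str]:
        # Layers are immutable, so a cached result can never go stale.
        if digest not in self.cache:
            self.cache[digest] = self.scan_layer(digest)
            self.scans_performed += 1
        return self.cache[digest]

    def index_image(self, layer_digests: List[str]) -> List[str]:
        packages: List[str] = []
        for digest in layer_digests:
            packages.extend(self.index_layer(digest))
        return packages


# Made-up layer contents standing in for real tarballs.
FAKE_LAYERS = {
    "sha256:base": ["glibc", "openssl"],
    "sha256:runtime": ["python3"],
    "sha256:app-v1": ["myapp-1.0"],
    "sha256:app-v2": ["myapp-1.1"],
}

indexer = LayerIndexer(scan_layer=lambda digest: FAKE_LAYERS[digest])

# Two CI builds that share the base and runtime layers.
build_1 = indexer.index_image(["sha256:base", "sha256:runtime", "sha256:app-v1"])
build_2 = indexer.index_image(["sha256:base", "sha256:runtime", "sha256:app-v2"])

print(indexer.scans_performed)  # 4 layer scans for 6 layer references
```

In this toy run the second build costs a single layer scan, because only its topmost layer is new.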

Knowing these facts about container images, Clair breaks vulnerability reporting into three parts.

Indexing

When Clair is presented with an image to "scan," it first indexes the image contents. Indexing is the act of looking through all of the image layers in well-known areas for OS packages, languages, libraries, and such. Once an image has been successfully indexed, Clair stores an IndexReport for that manifest ID. The IndexReport is itself like a little database that can explain what’s inside the image. Requesting an IndexReport for an image is idempotent. If Clair is presented with a manifest that it has already indexed, it will simply return the existing IndexReport.
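As a rough illustration of what "looking in well-known areas" can mean, the sketch below reads the dpkg status file (`var/lib/dpkg/status` on Debian-style systems) out of an in-memory layer tarball. The helper names, the package entries, and the simplified parsing are assumptions made for this example; a real indexer covers many more package formats and edge cases.

```python
import io
import tarfile

DPKG_STATUS_PATH = "var/lib/dpkg/status"


def make_layer(files: dict) -> bytes:
    """Build an in-memory tar layer from {path: text}. Demo helper only."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for path, text in files.items():
            data = text.encode()
            info = tarfile.TarInfo(name=path)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def find_dpkg_packages(layer_bytes: bytes) -> list:
    """Return (name, version) pairs from the dpkg status file, if present."""
    with tarfile.open(fileobj=io.BytesIO(layer_bytes), mode="r") as tar:
        try:
            member = tar.extractfile(DPKG_STATUS_PATH)
        except KeyError:
            return []  # this layer does not touch the dpkg database
        if member is None:
            return []
        text = member.read().decode()

    packages = []
    # Stanzas are separated by blank lines; each holds "Field: value" lines.
    for stanza in text.strip().split("\n\n"):
        fields = dict(
            line.split(": ", 1)
            for line in stanza.splitlines()
            if ": " in line and not line.startswith(" ")
        )
        if "Package" in fields and "Version" in fields:
            packages.append((fields["Package"], fields["Version"]))
    return packages


# Made-up package entries for the demo.
status_text = (
    "Package: redis-server\nVersion: 5:5.0.3-4\nStatus: install ok installed\n"
    "\n"
    "Package: libc6\nVersion: 2.28-10\nStatus: install ok installed\n"
)
layer = make_layer({DPKG_STATUS_PATH: status_text})
packages = find_dpkg_packages(layer)
print(packages)
```

The output of a step like this, collected across all layers, is the kind of data an IndexReport holds.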

Matching

When Clair is asked to produce a list of vulnerabilities for an image (via its manifest digest), it performs a matching operation. Clair is configured with several matchers that each know how to compare entries in an IndexReport with known vulnerability sources. The end result of all matchers is a VulnReport structure that contains the vulnerability data that Quay.io shows in its UI. Requesting a VulnReport is not idempotent, as the set of known vulnerabilities may have changed since the last time a VulnReport was requested for that manifest ID.
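The following sketch shows why matching is not idempotent even though indexing is. It is a deliberate simplification: versions are plain integer tuples (real distributions each have their own version-comparison rules), and the `Package`, `Vulnerability`, and `match` names and the `EXAMPLE-` identifiers are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Package:
    name: str
    version: tuple  # simplified: integer tuple such as (5, 0, 3)


@dataclass(frozen=True)
class Vulnerability:
    id: str
    package: str
    fixed_in: tuple  # first version that is no longer affected


def match(index_report, vuln_db):
    """Return vulnerabilities whose package is installed below the fixed version."""
    by_name = {}
    for vuln in vuln_db:
        by_name.setdefault(vuln.package, []).append(vuln)
    return [
        vuln
        for pkg in index_report
        for vuln in by_name.get(pkg.name, [])
        if pkg.version < vuln.fixed_in
    ]


# The indexed contents of an image never change...
index_report = [Package("redis", (5, 0, 3)), Package("openssl", (1, 1, 1))]

# ...but the vulnerability database does.
vuln_db = [
    Vulnerability("EXAMPLE-0001", "redis", (5, 0, 8)),
    Vulnerability("EXAMPLE-0003", "redis", (5, 0, 1)),  # already fixed in this image
]
first = match(index_report, vuln_db)

vuln_db.append(Vulnerability("EXAMPLE-0002", "openssl", (1, 1, 2)))
second = match(index_report, vuln_db)

print([v.id for v in first])
print([v.id for v in second])
```

The same index data produces a longer result the second time, purely because the database grew in between.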

Updating

Because the universe of known vulnerabilities continues to grow, Clair must continuously check to keep its databases up to date. The updaters work asynchronously every few hours to see what new vulnerabilities have been published and add them to Clair’s database. This ensures Clair can give up-to-date answers at all times. Clair behind Quay.io is configured with the following vulnerability data sources:

- Alpine Linux

- Amazon Linux

- Debian Linux

- Oracle Linux

- VMware Photon Linux

- Red Hat Enterprise Linux

- Red Hat Ecosystem Catalog

- SUSE Linux

- Ubuntu Linux

- Open Source Vulnerabilities (OSV)

- NVD CVSS (this is primarily used to get severity ratings when a CVE does not have one already)
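A minimal sketch of what an update cycle might look like, including the severity fallback described for the NVD data. The record layout, the function names, and the `EXAMPLE-` identifiers are assumptions for illustration and do not reflect Clair’s actual schema.

```python
def apply_update(db: dict, fetched: list) -> int:
    """Upsert fetched records keyed by ID and return how many were new."""
    new = 0
    for record in fetched:
        if record["id"] not in db:
            new += 1
        db[record["id"]] = record
    return new


def severity_for(record: dict, nvd_severity: dict) -> str:
    """Prefer the source's own severity and fall back to an NVD-style lookup."""
    return record.get("severity") or nvd_severity.get(record["id"], "Unknown")


db = {}

# First run: one advisory published by a (made-up) distribution feed.
added_first = apply_update(db, [
    {"id": "EXAMPLE-0001", "package": "redis", "severity": "High"},
])

# A later run sees the old advisory again plus one new advisory without a rating.
added_second = apply_update(db, [
    {"id": "EXAMPLE-0001", "package": "redis", "severity": "High"},
    {"id": "EXAMPLE-0002", "package": "openssl", "severity": None},
])

nvd_severity = {"EXAMPLE-0002": "Medium"}  # hypothetical enrichment data

print(added_first, added_second, len(db))
print(severity_for(db["EXAMPLE-0002"], nvd_severity))
```

Re-running an update with already known records is harmless, which is what makes a periodic background job a comfortable fit for this task.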

Putting it all together

Clair operates as a set of indexing and matching services behind Quay.io. Let’s walk through what happens behind the scenes when a user pushes an image to Quay.io, as shown in Figure 4.

- A user pushes a very old Redis image to Quay.io. The Quay.io API receives the request and takes the uploaded layers and manifest.

- Once the push is successfully completed, the manifest becomes available to the security worker that runs periodically to find newly pushed images.

- The security worker passes the manifest to Clair’s indexer service and requests that an IndexReport be created. If Clair has never seen this manifest, then the image layers are pulled from Quay.io and indexed, creating an IndexReport. If Clair has already seen this image (e.g., the same manifest was submitted by two different security worker instances), then Clair does nothing.

Now let’s see what happens when a user goes to the Security Report page on Quay.io for an image (Figure 5).

- When the user opens the Security Report page, Quay.io sends the manifest digest for that image to Clair’s matcher service.

- The matcher contacts the indexer service to see if an IndexReport is available. If an IndexReport is not available (for example, the image was pushed just seconds earlier and the indexer is still working), then the user is told that the scan results are not yet available. Otherwise, the IndexReport is provided back to the matcher and its contents are compared against the lists of known vulnerabilities for each artifact type.

- Any identified vulnerabilities are recorded in a VulnReport structure, which is then handed back to Quay.io. This forms the basis of the Security Report page.

- While all of this is happening, the updaters are working asynchronously in the background to periodically pull vulnerability data into the matcher database.
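The push and view flows above can be condensed into a toy, in-memory model. The `Indexer` and `Matcher` classes, the status strings, and the sample data are hypothetical stand-ins for the real services, intended only to show the control flow, including the "not yet available" branch.

```python
class Indexer:
    """Stores one index report per manifest digest."""

    def __init__(self):
        self.reports = {}

    def index(self, manifest_digest, packages):
        # Idempotent: a manifest that was already indexed keeps its report.
        return self.reports.setdefault(manifest_digest, list(packages))

    def get(self, manifest_digest):
        return self.reports.get(manifest_digest)


class Matcher:
    """Answers report requests by combining index data with known vulnerabilities."""

    def __init__(self, indexer, vulns_by_package):
        self.indexer = indexer
        self.vulns_by_package = vulns_by_package

    def vulnerability_report(self, manifest_digest):
        report = self.indexer.get(manifest_digest)
        if report is None:
            # The image was pushed but has not been indexed yet.
            return {"status": "queued", "vulnerabilities": []}
        found = sorted(
            vuln_id
            for pkg in report
            for vuln_id in self.vulns_by_package.get(pkg, [])
        )
        return {"status": "scanned", "vulnerabilities": found}


indexer = Indexer()
matcher = Matcher(indexer, {"redis": ["EXAMPLE-0001"], "openssl": ["EXAMPLE-0002"]})

digest = "sha256:old-redis"  # made-up manifest digest

# The user opens the report page before the security worker has run.
before = matcher.vulnerability_report(digest)

# The security worker submits the manifest, possibly more than once.
indexer.index(digest, ["redis", "openssl", "glibc"])
indexer.index(digest, ["something-else"])  # ignored: already indexed

after = matcher.vulnerability_report(digest)
print(before["status"], after["status"], after["vulnerabilities"])
```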

Making it scale

Clair was designed so that it can be deployed in numerous configurations. This is because the performance profiles of indexing and matching are quite different. Indexing tends to be very I/O intensive, as it not only scans the full image contents but also uses considerable amounts of temporary disk space while working. Matching tends to be very memory intensive, as it needs to hold IndexReports and vulnerability data from the database for many simultaneous requests. To make sure Clair can scale to meet as many different scenarios as possible, deployments can leverage three different patterns. See Figure 6.

For the simplest deployment, indexing, matching, and updating can all be deployed as a single process with a single database instance. If one side of the database load is starting to affect overall performance, the indexer and matcher databases can be split out into separate instances. If there are CPU or memory limitations, it is possible to split the indexer away from the matcher and updater processes as well.

On Quay.io we are running the indexer as a 40-pod StatefulSet and the matcher as a 10-pod Deployment, each with their own dedicated Postgres instances. This gives us good performance with the ability to increase capacity either at the pod or database level as push traffic on Quay.io increases.

Measuring performance

For Quay.io customers, the two most important metrics regarding security are how fast initial results are available after a push and how fast current results appear when the Security Report page is viewed. These equate directly to the time Clair takes to produce an IndexReport and the time to produce a VulnReport.

Roughly speaking, the Clair behind Quay.io can produce an IndexReport in 3 to 4 seconds. It is impossible to give a precise value, as the time needed to generate an IndexReport depends directly on the size of the image pushed. For tiny (sub-GiB) images, Clair can produce the IndexReport in milliseconds; larger images can take substantially longer to scan. Measured in "real time," this means the "queued" indicator on a freshly pushed image typically clears in about a minute or so (based on other factors within Quay.io). See Figure 7.

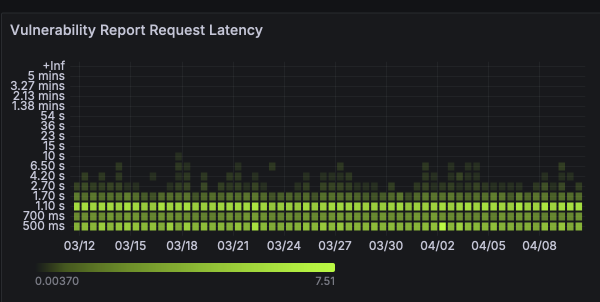

The time needed to compute a VulnReport is more consistent than the time needed for IndexReport generation. Generally speaking, we hand these back to Quay.io within a second or less. Interestingly, we observe that most VulnReports are produced either within 500 milliseconds or in around 1 second. The exact reason for this is something that we’re continuing to investigate. These latencies allow Quay.io to populate its Security tab within a reasonable response time for someone sitting behind a web browser. See Figure 8.

Conclusion

Tracking and reporting on vulnerabilities across a large container registry like Quay.io requires careful architectural choices. Quay.io provides an experience where all container images appear to be continuously scanned no matter how long ago they were pushed. This simply cannot be done cost-effectively in a naive manner; a more clever solution is required. Clair balances the tasks of understanding (indexing) and reporting (matching) across two services that work together to efficiently produce security information on any image in Quay.io. With a modular architecture, we can scale up (or down) either side of the problem space as traffic fluctuates on Quay.io. This architecture also helps future-proof us as new vulnerability sources and use cases for Clair are found.