AI-powered search, the combination of artificial intelligence technologies and search engines, enables semantic and similarity search that goes beyond keyword matching to understand the intent and context of a query.

The integration of machine learning with Elasticsearch revolutionizes search and data analysis. Elasticsearch, known for its real-time search capabilities, can be powered by machine learning algorithms to provide context-sensitive and intelligent results.

In this article, you will discover how this powerful combination gives users the ability to efficiently index large text datasets and perform multilingual semantic-similarity searches in Elasticsearch using a pretrained model.

The article's step-by-step guide assumes you have a Jupyter notebook or Python environment to run the code, as well as an accessible, running instance of Elasticsearch. Any such environment will work, but we recommend using Red Hat OpenShift Container Platform with the Red Hat OpenShift AI and Elasticsearch (ECK) operators. We also recommend creating a sandbox environment through Red Hat Developer, where you can get started simply and quickly, at no cost.

All the code can be found in the tarcis-io/ml_elasticsearch repository.

1. Machine learning

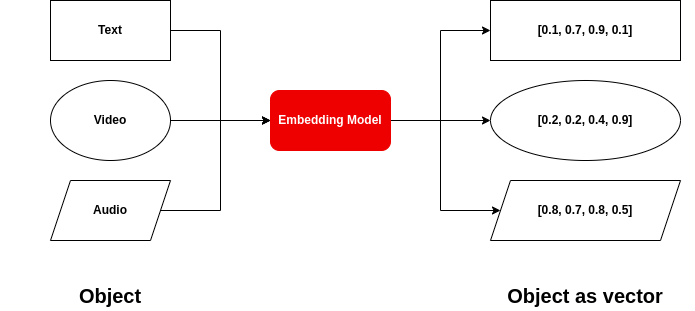

In machine learning and Natural Language Processing (NLP), models take vectors (arrays of numbers) as input. Embedding is a technique used to represent words, phrases, images, audio, or other entities as vectors of real numbers in a high-dimensional space (as illustrated in Figure 1). These embeddings capture semantic relationships and contextual information, allowing algorithms to better understand and process language.
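To make the idea of semantic closeness concrete, here is a minimal sketch in plain Python. The three-dimensional vectors are hand-made, hypothetical values chosen only for illustration (real embeddings are produced by a model and have hundreds of dimensions), and the helper names `cosine_similarity` and `most_similar` are assumptions of this example:

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-made 3-dimensional "embeddings" (hypothetical values, for illustration only).
toy_embeddings = {
    'economy': [0.9, 0.1, 0.0],
    'finance': [0.8, 0.2, 0.1],
    'football': [0.0, 0.2, 0.9],
}

def most_similar(word):
    """Return the other word whose vector is closest to `word` by cosine similarity."""
    others = (w for w in toy_embeddings if w != word)
    return max(
        others,
        key=lambda w: cosine_similarity(toy_embeddings[word], toy_embeddings[w]),
    )

print(most_similar('economy'))  # finance
```

Vectors that point in a similar direction get a cosine similarity close to 1, which is how related meanings end up "near" each other in the embedding space.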

1.1. Universal Sentence Encoder Multilingual

The Universal Sentence Encoder is a model developed by Google that produces fixed-size embeddings for input sentences or short texts. These embeddings are designed to capture semantic information about the meaning of the input text, making them useful for a variety of natural language processing tasks, such as text classification, text clustering and semantic search.

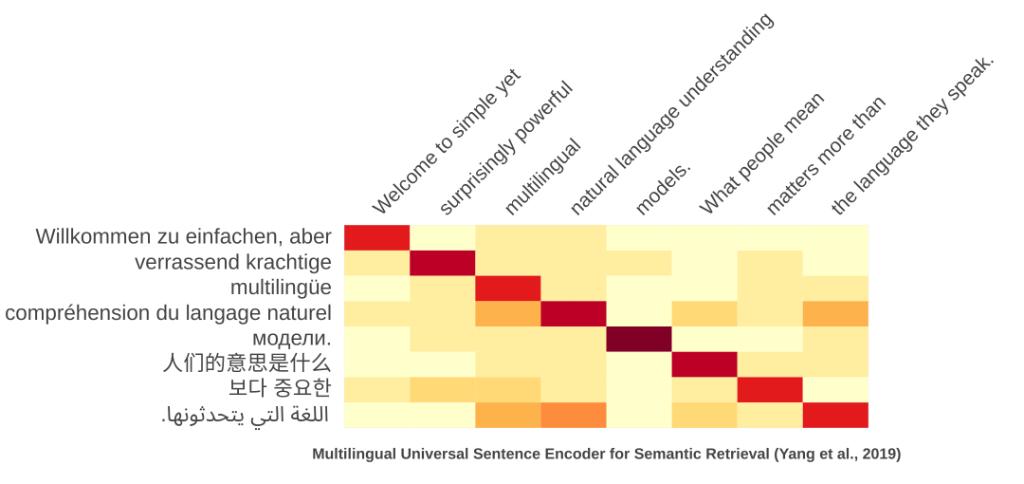

The Multilingual Universal Sentence Encoder module (shown in Figure 2) is an extension of the Universal Sentence Encoder that includes training on multiple tasks across different languages.

2. Elasticsearch

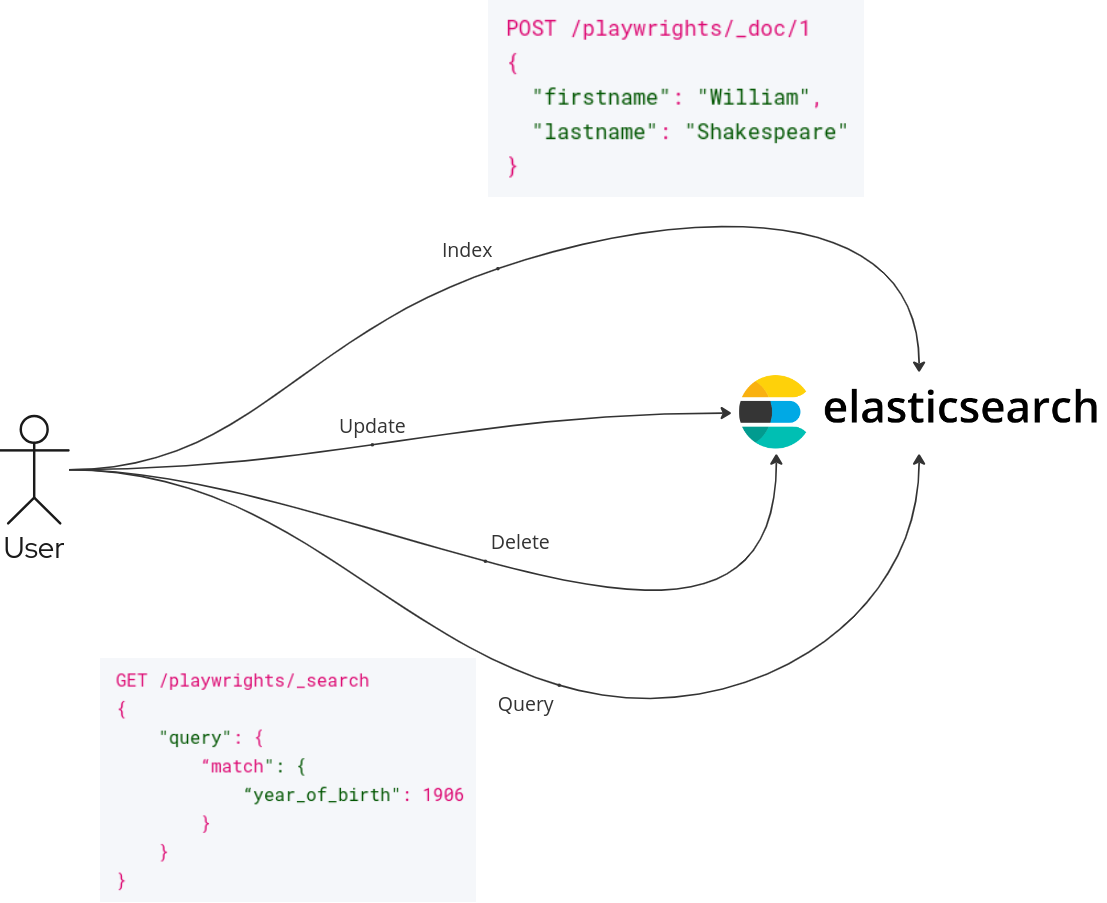

Elasticsearch is an open source distributed search and analytics engine designed for scalability and real-time search. It can handle large volumes of data of different types, including structured, unstructured, and geospatial data. Elasticsearch is commonly used in various applications, including log and event data analysis, monitoring, business intelligence, and search engines. Its versatility and scalability make it a popular choice for organizations dealing with large and complex datasets.

2.1. Index

In Elasticsearch, an index is a collection of documents that share a similar structure and are stored together for efficient searching and retrieval. It serves as the primary unit for organizing and managing data (Figure 3).
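As a rough mental model only, the sketch below builds a toy in-memory index in plain Python: a few documents with the same fields, plus an inverted index that maps each term to the documents containing it. Elasticsearch, which is built on Apache Lucene, adds analyzers, relevance scoring, and distribution across shards on top of this basic idea; the sample documents and the function names `build_inverted_index` and `lookup` are hypothetical.

```python
from collections import defaultdict

# A toy "index": documents that share the same fields (hypothetical sample data).
documents = {
    1: {'text': 'european economic growth slows', 'label_text': 'business'},
    2: {'text': 'striker scores twice in cup final', 'label_text': 'sport'},
    3: {'text': 'economic report lifts markets', 'label_text': 'business'},
}

def build_inverted_index(docs):
    """Map each lowercase token to the set of document ids that contain it."""
    inverted = defaultdict(set)
    for doc_id, doc in docs.items():
        for token in doc['text'].lower().split():
            inverted[token].add(doc_id)
    return inverted

def lookup(inverted, term):
    """Return the sorted ids of the documents that contain `term`."""
    return sorted(inverted.get(term.lower(), set()))

inverted_index = build_inverted_index(documents)
print(lookup(inverted_index, 'economic'))   # [1, 3]
print(lookup(inverted_index, 'inflation'))  # []
```

Looking up a term is a single dictionary access instead of a scan over every document, which is the reason documents are organized into an index in the first place.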

3. Show me the code!

3.1. Index the dataset

The first step is to download the BBC News dataset and index it in Elasticsearch. The notebook that implements these tasks is 01_index_dataset.ipynb.

3.1.1. Install and import the required packages

To perform indexing tasks, install and import the following packages:

# Imported for its side effect: it registers the TensorFlow ops the multilingual model needs
import tensorflow_text

from datasets import load_dataset
from elasticsearch import Elasticsearch
from IPython import display
from tensorflow_hub import load

3.1.2. Create the Elasticsearch client

Create the client that will be responsible for executing actions in Elasticsearch. At a minimum, you must specify the host and some form of authentication, such as a username and password:

es_host = '<elasticsearch_host>'
es_username = '<elasticsearch_username>'
es_password = '<elasticsearch_password>'

es = Elasticsearch(
    hosts = es_host,
    basic_auth = (es_username, es_password),
    # Skips TLS certificate verification; acceptable for a sandbox, not for production
    verify_certs = False
)

es.info()

If the connection has been established, the es.info() method should return something similar to this:

ObjectApiResponse({ "tagline":"You Know, for Search" })

3.1.3. Download the BBC News dataset

In this article, we will use the BBC News dataset, which contains over 2,000 categorized news articles in text format. We can download it by calling the load_dataset method from the datasets package:

bbc_news_dataset = load_dataset('SetFit/bbc-news')

The output should be an object of type DatasetDict:

DatasetDict({
    train: Dataset({
        features: ['text', 'label', 'label_text'],
        num_rows: 1225
    })
    test: Dataset({
        features: ['text', 'label', 'label_text'],
        num_rows: 1000
    })
})

3.1.4. Download the Multilingual Universal Sentence Encoder model

In machine learning, a model is a mathematical representation that learns patterns from data to make predictions or decisions without being explicitly programmed.

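The Multilingual Universal Sentence Encoder is trained on multiple tasks and multiple languages, which lets it create a single embedding space common to all 16 languages it has been trained on, and it shows strong performance in multilingual retrieval. It is published in two variations: one with a Convolutional Neural Network (CNN) architecture and a larger one with a Transformer architecture. The multilingual-large variation used in this article is the Transformer one.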

Using the load function from the tensorflow-hub package, we can load this model with the following expression:

model = load('https://www.kaggle.com/models/google/universal-sentence-encoder/frameworks/TensorFlow2/variations/multilingual-large/versions/2')

The model's input is text, and its output is an array of 512 numbers: the embedding of the input text. For example, for the input:

model('Hello World, ML Elasticsearch!')[0].numpy()

The output should be:

array([ 6.04993142e-02, 2.46977881e-02, 3.43629159e-02, -3.45645919e-02,

-2.19440423e-02, 1.74631681e-02, 4.07335758e-02, -7.56947622e-02,

-4.24392615e-03, -9.12883040e-03, -7.34170526e-02, -8.02445412e-02,

7.20020905e-02, 3.64579372e-02, -4.03201990e-02, 3.09278686e-02,

-4.60638112e-04, 2.28298176e-03, 8.05738419e-02, 1.25325136e-02,

8.55052546e-02, -4.09091152e-02, 7.49715557e-03, 1.39080118e-02,

4.30730805e-02, -3.43654901e-02, 5.11647621e-03, 2.94517819e-02,

1.54668670e-02, 6.75039068e-02, 1.02604687e-01, -3.43576036e-02,

1.10444212e-02, -5.98613322e-02, -2.86747441e-02, 3.58597264e-02,

1.37920845e-02, -1.31028690e-04, -5.92646468e-03, -7.39952251e-02,

3.12727243e-02, -5.23758633e-03, -4.90117408e-02, -2.00900845e-02,

7.94764757e-02, 1.69147346e-02, -7.27028772e-03, 4.55966964e-02,

2.82147657e-02, -1.79359596e-02, 3.01514324e-02, -4.47459966e-02,

-6.71745390e-02, 4.77596521e-02, 7.86093343e-03, -1.41343456e-02,

-3.58230583e-02, -1.85324792e-02, 4.14996557e-02, -2.08834168e-02,

8.51072446e-02, 4.16630059e-02, -5.32974862e-02, -4.40437198e-02,

-4.28032987e-02, -8.95944908e-02, 3.19887549e-02, 6.05730340e-02,

-3.23659391e-03, 7.54942596e-02, -5.46579855e-03, 3.77340917e-03,

5.46114445e-02, -4.40792646e-03, -3.59019917e-03, 6.38055429e-02,

1.04503930e-02, 5.62766846e-03, -3.87495980e-02, 4.47553173e-02, ... ])

3.1.5. Create the index for the dataset

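We can then create the index that will store the records from the BBC News dataset using the Elasticsearch client. You can, and probably should, adjust parameters such as the number of shards and replicas to suit your needs. Note that the dims value of the vector field (512) must match the size of the model's output: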

bbc_news_index = 'bbc_news'

es.indices.create(
    index = bbc_news_index,
    settings = {
        'number_of_shards' : 2,
        'number_of_replicas' : 1
    },
    mappings = {
        'properties' : {
            'text' : { 'type' : 'text' },
            'vector' : { 'type' : 'dense_vector', 'dims' : 512, 'index' : True },
            'metadata' : {
                'properties' : {
                    'label' : { 'type' : 'integer' },
                    'label_text' : { 'type' : 'text' },
                    'dataset_type' : { 'type' : 'text' }
                }
            }
        }
    }
)

The result should be a positive acknowledgment, for example:

ObjectApiResponse({'acknowledged': True, 'shards_acknowledged': True, 'index': 'bbc_news'})

3.1.6. Index the BBC News dataset in Elasticsearch

Now, index the BBC News dataset in Elasticsearch using the client created previously. The dataset is divided into train and test splits, and we will record the split in the metadata.dataset_type field. We will also use the vector field to store the embedding of each document's text field. This field will be used to perform the multilingual semantic-similarity search:

for dataset_type in bbc_news_dataset:
    dataset = bbc_news_dataset[dataset_type]
    size = len(dataset)

    for index, item in enumerate(dataset, start = 1):
        display.clear_output(wait = True)
        print(f'Indexing BBC News { dataset_type } dataset : { index } / { size }')

        document = {
            'text' : item['text'],
            'vector' : model(item['text'])[0].numpy(),
            'metadata' : {
                'label' : item['label'],
                'label_text' : item['label_text'],
                'dataset_type' : dataset_type
            }
        }

        es.index(index = bbc_news_index, document = document)

A counter will appear that displays the indexing progress. When finished, you can check the total number of records in the index by calling the es.count() method:

es.count(index = bbc_news_index)

ObjectApiResponse({'count': 2225, '_shards': {'total': 2, 'successful': 2, 'skipped': 0, 'failed': 0}})

3.2. Search

With the data from the BBC News dataset indexed in our running instance of Elasticsearch, we can perform searches using the text field for similarity search and the vector field for multilingual semantic-similarity search.

The code to implement this section can be found in notebook 02_search.ipynb. Let's skip the part about importing packages, creating the Elasticsearch client and downloading the Multilingual Universal Sentence Encoder model, shown in the previous steps.
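Before running the real queries, the following toy sketch (no Elasticsearch and no model involved) may help show how the two search modes differ. Everything in it is an assumption made for illustration: the two-dimensional vectors are hand-made stand-ins for 512-dimensional embeddings, `lexical_match` is a crude simplification of a full-text match, and `shifted_cosine` mimics the cosine similarity plus 1.0 expression used for scoring later in this section.

```python
import math

# Hypothetical toy document: English text with a hand-made 2-d "embedding".
doc = {'text': 'european economic growth', 'vector': [0.9, 0.1]}

# Hand-made query vectors. A real multilingual model is expected to place
# translations of the same sentence close together; here that is simply assumed.
queries = {
    'european economic growth': [0.9, 0.1],
    'crecimiento económico europeo': [0.88, 0.15],
}

def lexical_match(query_text, document):
    """True if the query shares at least one token with the document text."""
    query_tokens = set(query_text.lower().split())
    doc_tokens = set(document['text'].lower().split())
    return bool(query_tokens & doc_tokens)

def shifted_cosine(query_vector, document):
    """Cosine similarity between query and document vectors, shifted by 1.0."""
    dot = sum(q * d for q, d in zip(query_vector, document['vector']))
    norm_q = math.sqrt(sum(q * q for q in query_vector))
    norm_d = math.sqrt(sum(d * d for d in document['vector']))
    return dot / (norm_q * norm_d) + 1.0

for text, vector in queries.items():
    print(text, lexical_match(text, doc), round(shifted_cosine(vector, doc), 3))
```

In this sketch the Spanish query shares no tokens with the English document, so the lexical check fails, while its vector still scores close to the maximum of 2.0. The shift by 1.0 moves the cosine range from [-1, 1] to [0, 2], because Elasticsearch does not accept negative scores.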

3.2.1. Create the base search function

The search() function will be the basis used for searching and presenting the result:

def search(query):
    result = es.search(index = bbc_news_index, query = query, size = 1)
    result = result['hits']['hits']

    if len(result) == 0:
        print('no results found...')
        return

    result = result[0]

    print(f"score : { result['_score'] }")
    print(f"label : { result['_source']['metadata']['label_text'] }")
    print(f"text : { result['_source']['text'] }")

3.2.2. Create the similarity_search function

Let's define the function that will perform the similarity search. It uses a match query, the standard Elasticsearch full-text query, against the text field:

def similarity_search(text):
    query = {
        'match' : {
            'text' : text
        }
    }

    search(query)

We can test the function with the same text in different languages:

text_english = 'european economic growth'
text_spanish = 'crecimiento económico europeo'
text_portuguese = 'crescimento econômico europeu'

similarity_search(text_english)
similarity_search(text_spanish)
similarity_search(text_portuguese)

When searching for the text in English, we get the following result:

score : 12.638578

label : business

text : newest eu members underpin growth the european union s newest members will bolster europe s economic growth in 2005 according to a new report. the eight central european states which joined the eu last year will see 4.6% growth the united nations economic commission for europe (unece) said. in contrast the 12 euro zone countries will put in a lacklustre performance generating growth of only 1.8%. the global economy will slow in 2005 the unece forecasts due to widespread weakness in consumer demand. it warned that growth could also be threatened by attempts to reduce the united states huge current account deficit which in turn might lead to significant volatility in exchange rates. unece is forecasting average economic growth of 2.2% across the european union in 2005. however total output across the euro zone is forecast to fall in 2004 from 1.9% to 1.8%. this is due largely to the faltering german economy which shrank 0.2% in the last quarter of 2004. on monday germany s bdb private banks association said the german economy would struggle to meet its 1.4% growth target in 2005. separately the bundesbank warned that germany s efforts to reduce its budget deficit below 3% of gdp presented huge risks given that headline economic growth was set to fall below 1% this year. publishing its 2005 economic survey the unece said central european countries such as the czech republic and slovenia would provide the backbone of the continent s growth. smaller nations such as cyprus ireland and malta would also be among the continent s best performing economies this year it said. the uk economy on the other hand is expected to slow in 2005 with growth falling from 3.2% last year to 2.5%. consumer demand will remain fragile in many of europe s largest countries and economies will be mostly driven by growth in exports. 
in view of the fragility of factors of domestic growth and the dampening effects of the stronger euro on domestic economic activity and inflation monetary policy in the euro area is likely to continue to wait and see the organisation said in its report. global economic growth is expected to fall from 5% in 2004 to 4.25% despite the continued strength of the chinese and us economies. the unece warned that attempts to bring about a controlled reduction in the us current account deficit could cause difficulties. the orderly reversal of the deficit is a major challenge for policy makers in both the united states and other economies it noted.

However, for the same text in Portuguese and Spanish, no results are found, because the match query only matches terms that actually occur in the indexed English text:

no results found...

3.2.3. Create the multilingual_semantic_similarity_search function

Finally, let's define the function that will perform the multilingual semantic-similarity search.

In this function, we will use the client to perform the search and use cosine similarity between the input embeddings (using, again, the pretrained Multilingual Universal Sentence Encoder model) and those indexed in Elasticsearch:

def multilingual_semantic_similarity_search(text):
    query = {
        'script_score' : {
            'query' : { 'match_all' : {} },
            'script' : {
                # Adding 1.0 keeps the score non-negative, as Elasticsearch requires
                'source' : "cosineSimilarity(params.vector, 'vector') + 1.0",
                'params' : { 'vector' : model(text)[0].numpy() }
            }
        }
    }

    search(query)

Lastly, we can use this function for the same examples defined previously:

multilingual_semantic_similarity_search(text_english)
multilingual_semantic_similarity_search(text_spanish)
multilingual_semantic_similarity_search(text_portuguese)

For all three examples, the same text in different languages, we should get the same document, with only a small difference in the score computed from cosine similarity:

score : 1.4061848

label : business

text : newest eu members underpin growth the european union s newest members will bolster europe s economic growth in 2005 according to a new report. the eight central european states which joined the eu last year will see 4.6% growth the united nations economic commission for europe (unece) said. in contrast the 12 euro zone countries will put in a lacklustre performance generating growth of only 1.8%. the global economy will slow in 2005 the unece forecasts due to widespread weakness in consumer demand. it warned that growth could also be threatened by attempts to reduce the united states huge current account deficit which in turn might lead to significant volatility in exchange rates. unece is forecasting average economic growth of 2.2% across the european union in 2005. however total output across the euro zone is forecast to fall in 2004 from 1.9% to 1.8%. this is due largely to the faltering german economy which shrank 0.2% in the last quarter of 2004. on monday germany s bdb private banks association said the german economy would struggle to meet its 1.4% growth target in 2005. separately the bundesbank warned that germany s efforts to reduce its budget deficit below 3% of gdp presented huge risks given that headline economic growth was set to fall below 1% this year. publishing its 2005 economic survey the unece said central european countries such as the czech republic and slovenia would provide the backbone of the continent s growth. smaller nations such as cyprus ireland and malta would also be among the continent s best performing economies this year it said. the uk economy on the other hand is expected to slow in 2005 with growth falling from 3.2% last year to 2.5%. consumer demand will remain fragile in many of europe s largest countries and economies will be mostly driven by growth in exports. 
in view of the fragility of factors of domestic growth and the dampening effects of the stronger euro on domestic economic activity and inflation monetary policy in the euro area is likely to continue to wait and see the organisation said in its report. global economic growth is expected to fall from 5% in 2004 to 4.25% despite the continued strength of the chinese and us economies. the unece warned that attempts to bring about a controlled reduction in the us current account deficit could cause difficulties. the orderly reversal of the deficit is a major challenge for policy makers in both the united states and other economies it noted.

4. Conclusion

In conclusion, integrating machine learning with Elasticsearch enhances data retrieval, analysis, and decision-making.

This article presented a way to apply this combination to multilingual semantic-similarity search and compared it with traditional search. The same approach can serve as inspiration for data in other formats, such as videos and texts, and can be adapted to your business needs while maintaining a high level of performance.

Last updated: August 27, 2024