Intel has been partnering with Red Hat to offer a solution for Red Hat OpenShift Data Science that includes Intel AI tools. With the help of these tools, OpenShift Data Science can perform faster and more efficiently.

The partnership between Intel and Red Hat responds to customer demand for an enterprise-grade data science solution. The need for a faster, more efficient solution stems from the rapidly growing demand for data scientists.

The new tools include support for popular frameworks such as TensorFlow, PyTorch, and MXNet, as well as optimized workflows for deploying AI models on Kubernetes clusters. OpenShift Data Science also integrates tightly with key features of the Intel Xeon Scalable processor family, including Intel Deep Learning Boost (Intel DL Boost), which delivers up to three times the deep learning performance of previous generations.

The need and solution

Have you ever tried to improve the performance of an AI or data science application without access to a GPU? If so, consider one of the managed software offerings available in OpenShift Data Science: the Intel AI Analytics Toolkit.

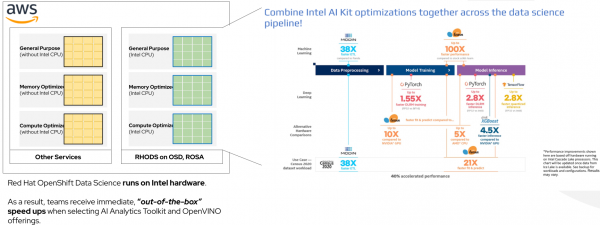

OpenShift Data Science runs on Intel hardware in AWS. As a result, you get "out of the box" acceleration during two critical stages of machine learning: model development and training, and deployment of a model to production.

The Intel AI Analytics Toolkit (or AI Kit) offers easy-to-use acceleration without major code changes. To take advantage of its optimizations, swap an existing package for the Intel-supplied optimized version, or add the Intel package alongside the one you already use.
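As a rough illustration of this "swap out or add" pattern, the sketch below uses Intel Extension for Scikit-learn, one of the AI Kit components mentioned in this article. It assumes the `sklearnex` package is installed in your notebook image and falls back to stock scikit-learn when it is not. The synthetic data and the choice of `KMeans` are placeholders for illustration, not part of the original article.

```python
import importlib.util


def enable_intel_sklearn() -> bool:
    """Patch scikit-learn with Intel-optimized estimators when sklearnex is installed."""
    if importlib.util.find_spec("sklearnex") is None:
        return False  # package missing: stock scikit-learn stays in use
    from sklearnex import patch_sklearn

    patch_sklearn()  # call this before importing the scikit-learn estimators
    return True


accelerated = enable_intel_sklearn()
print("Intel-accelerated scikit-learn:", accelerated)

# Existing scikit-learn code stays unchanged from here on.
if importlib.util.find_spec("sklearn") is not None:
    import numpy as np
    from sklearn.cluster import KMeans

    rng = np.random.default_rng(0)
    points = rng.normal(size=(1_000, 4))  # synthetic data, for illustration only
    model = KMeans(n_clusters=3, n_init=10, random_state=0).fit(points)
    print("Cluster centers shape:", model.cluster_centers_.shape)
```

The only change to an existing script is the patch call near the top; the estimator imports and training code stay as they were.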

Take a look at how the AI Kit runs on OpenShift Data Science (Figure 1).

You can see all benchmarks and configurations here.

The left side of Figure 1 shows that OpenShift Data Science is always deployed on Intel hardware, regardless of the node type. Other AWS services, Red Hat or otherwise, might not use Intel exclusively. As a result, developers on OpenShift Data Science can take full advantage of Intel's optimizations either through AI Kit or OpenVINO.

The right side of Figure 1 shows the performance improvements that users can see by applying AI Kit optimizations to their workloads. AI Kit benefits the entire data science pipeline: developers can see performance gains of 100x or more with Intel Extension for Scikit-learn, or 2.8x faster quantized inference using the Intel Optimizations for TensorFlow and the Intel Neural Compressor. These gains are available on Intel architecture with, at most, a few lines of code changed.
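If you want to check what gains apply to your own workload, a small timing harness is usually enough. The following is a minimal sketch using only the Python standard library; `stock_workload` and `optimized_workload` are hypothetical stand-ins that you would replace with your unmodified and AI Kit-enabled pipelines.

```python
import statistics
import time
from typing import Callable


def median_runtime(fn: Callable[[], object], repeats: int = 5) -> float:
    """Median wall-clock seconds over `repeats` calls to fn."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def speedup(baseline_seconds: float, optimized_seconds: float) -> float:
    """How many times faster the optimized run is than the baseline."""
    return baseline_seconds / optimized_seconds


# Hypothetical stand-ins: both compute the same sum of squares in different ways.
def stock_workload(n: int = 200_000) -> int:
    total = 0
    for i in range(n):
        total += i * i
    return total


def optimized_workload(n: int = 200_000) -> int:
    return sum(i * i for i in range(n))


base = median_runtime(stock_workload)
opt = median_runtime(optimized_workload)
print(f"Measured speedup: {speedup(base, opt):.2f}x")
```

Using the median of several runs reduces the effect of one-off noise such as cache warm-up. Confirm that both versions produce the same result before comparing their timings.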

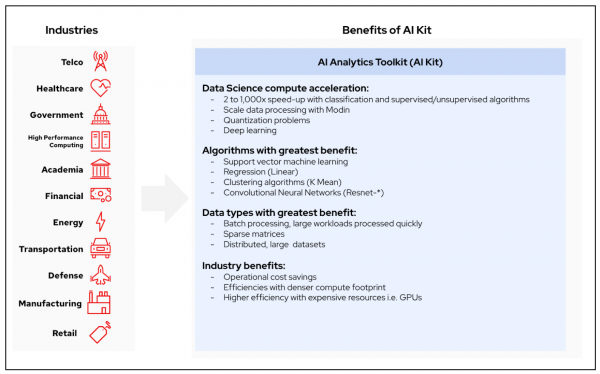

Common AI/ML use cases and algorithms apply across industries and benefit directly from the computational speedups in AI Kit. Figure 2 outlines these benefits.

Faster and cheaper

Increasing computational efficiency on deployed models means higher throughput (or more predictions in the same amount of time) and faster turnaround times. These new additions to the OpenShift portfolio make it easier for data scientists and analytics professionals to develop and deploy AI.

By increasing computational performance, you can reduce operational expenditures by:

- Increasing the density of your compute footprint.

- Iterating on your data science process more quickly because computations finish sooner.

- Solving more problems with efficient computations.

- Increasing the efficient use of expensive resources.
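The link between speedup and compute footprint can be made concrete with some quick arithmetic. The sketch below uses hypothetical workload numbers (the baseline throughput and target load are invented for illustration) together with the 2.8x quantized-inference figure quoted earlier in this article.

```python
import math


def nodes_needed(target_rps: float, per_node_rps: float) -> int:
    """Smallest number of nodes that can serve target_rps."""
    return math.ceil(target_rps / per_node_rps)


# Hypothetical workload numbers, chosen only to illustrate the arithmetic.
baseline_rps = 100.0  # predictions per second per node before optimization
target_rps = 1_000.0  # total predictions per second the service must handle
speedup = 2.8         # quantized-inference figure quoted in this article

optimized_rps = baseline_rps * speedup
before = nodes_needed(target_rps, baseline_rps)
after = nodes_needed(target_rps, optimized_rps)

print(f"Per-node throughput: {baseline_rps:.0f} -> {optimized_rps:.0f} predictions/s")
print(f"Nodes for {target_rps:.0f} predictions/s: {before} -> {after}")
```

Under these assumed numbers, the same load fits on fewer nodes, which is what "increasing the density of your compute footprint" means in practice. Actual savings depend on your workload and on how closely it matches the published benchmarks.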

Intel's AI tools improve OpenShift Data Science performance in a number of ways:

- Helping to optimize machine learning workloads on Intel Xeon processors.

- Enabling data scientists to create and deploy powerful analytics models at scale.

- Accelerating data science workflows by up to 10x.

- Generating insights from raw data.

Resources

Interested in the Intel AI Analytics Toolkit? You can try it for free in the OpenShift Data Science sandbox.

For more information on the AI Kit, see:

For more information on the benefits of this Red Hat/Intel partnership, see the joint whitepaper on the subject, or the announcement on the Red Hat blog.

Last updated: September 9, 2025