In this article, you'll learn how to authorize LDAP users and groups to access an Apache Kafka cluster. On a Kafka broker, user- and group-based authorization is handled by Access Control Lists (ACLs). More fine-grained approaches to user and group authorization on Kafka cluster resources can also use single sign-on (SSO). This article combines LDAP with Red Hat's single sign-on technology, which can be used to manage Kafka ACL rules.

This use case applies to teams that currently use common identity management services (IdMs) such as Active Directory (AD) or LDAP. We demonstrate how to synchronize users and groups from an IdM into Red Hat's SSO. We then show how to create ACL rules in Red Hat's SSO that apply authorization rules and policies to Kafka. With this procedure, our Kafka cluster uses the policies we define in Red Hat's SSO to grant access to our users and groups. The problem statement for this article is:

A Kafka administrator wants to manage user and group permissions to publish and consume messages from specific topics by adding them to an AD/LDAP group.

We use Ansible to automate the deployment of OpenLDAP, Red Hat's SSO, and Red Hat AMQ Streams on Red Hat OpenShift.

This article covers the following topics:

- TLS OAuth 2.0 authentication with AMQ Streams

- TLS OAuth 2.0 authorization with AMQ Streams

- Integrating Red Hat's SSO and LDAP users and groups

- Kafka broker authorization using Red Hat's SSO

Architecture

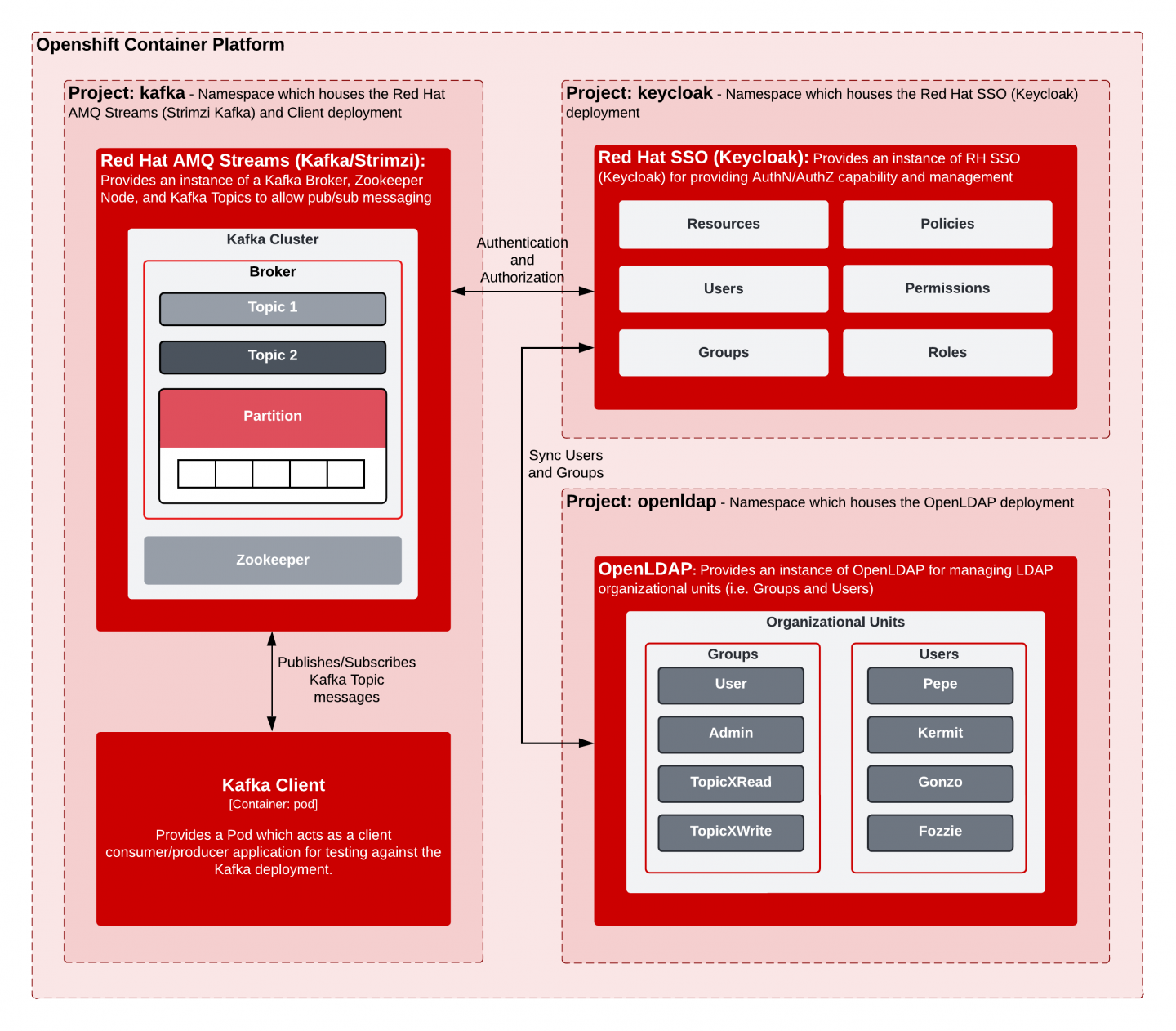

Figure 1 diagrams the systems involved in this logical architecture, indicating how they relate to and interact with each other. All application components are deployed and run in the Red Hat OpenShift Container Platform. The application components are:

- OpenLDAP: An open source LDAP server for managing LDAP users and groups. Organizational units (OUs) for users and groups are defined here and then synchronized into Red Hat's SSO.

- Red Hat's SSO: A single sign-on service based on the open source Keycloak project. Red Hat's SSO is an open source identity and access management solution that adds authentication and authorization to applications and secures services. This component is used primarily to manage user and group policies, rules, and access to resources. In this article, users and groups in Red Hat's SSO are synchronized from the LDAP OUs, users, and groups defined in OpenLDAP.

- Red Hat AMQ Streams: A distributed event store and stream processing platform based on Kafka.

- Kafka client: Used in this article to test the authorization and authentication rules and policies defined by Red Hat's SSO. The client publishes and subscribes to Kafka topics upon successful authentication and authorization.

Video demonstration

You can watch me step through the procedure in this article in the following video.

Prerequisites

The following resources are necessary to run this demonstration:

- An accessible OpenShift cluster with sufficient privileges.
  - This setup was tested on OpenShift 4.9.x-4.10.x.
  - Earlier 4.x versions might also work, but they have not been exhaustively tested.

- The following open source tools, installed on your local system and added to your PATH:
  - kubectx/kubens
  - OpenShift oc client tool, version 4.6 or higher
  - Ansible
  - Python client for OpenShift
  - jq
  - OpenSSL
  - keytool

Installation

Start by cloning the example for this article, Kafka + Keycloak + LDAP: Broker Authorization Sample, from our GitHub repository:

$ git clone https://github.com/keunlee/amq-streams-broker-authorization-sample.git

$ cd amq-streams-broker-authorization-sample

The next part of the installation seems super simple, but behind the scenes, a bunch of installation and configuration tasks are going on. Ansible automation makes the process look easy.

To inspect the automation steps for individual products, look in each directory under /ocp/bootstrap/roles. The tasks directory for each role contains the necessary automation steps to install OpenLDAP, Red Hat's SSO, and AMQ Streams as well as the configurations needed for integration and demonstration.

Before installation begins, make sure that you are logged in to OpenShift with sufficient privileges (for example, as kubeadmin or as a user bound to the cluster-admin cluster role).

Before installation, also make sure that the following namespaces are available for this demonstration (that is, not already in use), because the installation attempts to provision them:

- openldap

- kafka

- keycloak
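If you want to confirm beforehand that none of these namespaces already exists, a small preflight check like the following can help. This is a sketch and is not part of the sample repository. The function name `check_namespaces_free` is hypothetical. It assumes its input is the `namespace/<name>` lines that `oc get namespaces -o name` prints.

```shell
#!/bin/sh
# Hypothetical preflight helper (not part of the sample repository).
# Reads existing namespaces from stdin, one per line in the
# "namespace/<name>" form printed by `oc get namespaces -o name`,
# and reports which namespaces required by the demo are already taken.
# Exit status: 0 if all are free, 1 if any is already in use.
check_namespaces_free() {
  # Strip the "namespace/" prefix from every input line.
  existing=$(sed 's|^namespace/||')
  status=0
  for ns in openldap kafka keycloak; do
    # -x: whole-line match, so "openshift-kafka" does not count as "kafka".
    if printf '%s\n' "$existing" | grep -qx "$ns"; then
      echo "namespace already in use: $ns"
      status=1
    fi
  done
  return "$status"
}
```

Run it as `oc get namespaces -o name | check_namespaces_free` while logged in to the cluster. It prints nothing and returns 0 when all three names are free.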

Now kick off installation:

$ cd ocp

$ ./install.sh

Users, groups, and resource policies

At this point, the demo installation should be complete. You should be able to log in to Red Hat's SSO's administrative console. To get the route to this console, enter:

$ KEYCLOAK_ADMIN_CONSOLE=$(oc -n keycloak get routes keycloak -o jsonpath='{.spec.host}')

# username/password: admin/admin

echo https://$KEYCLOAK_ADMIN_CONSOLE

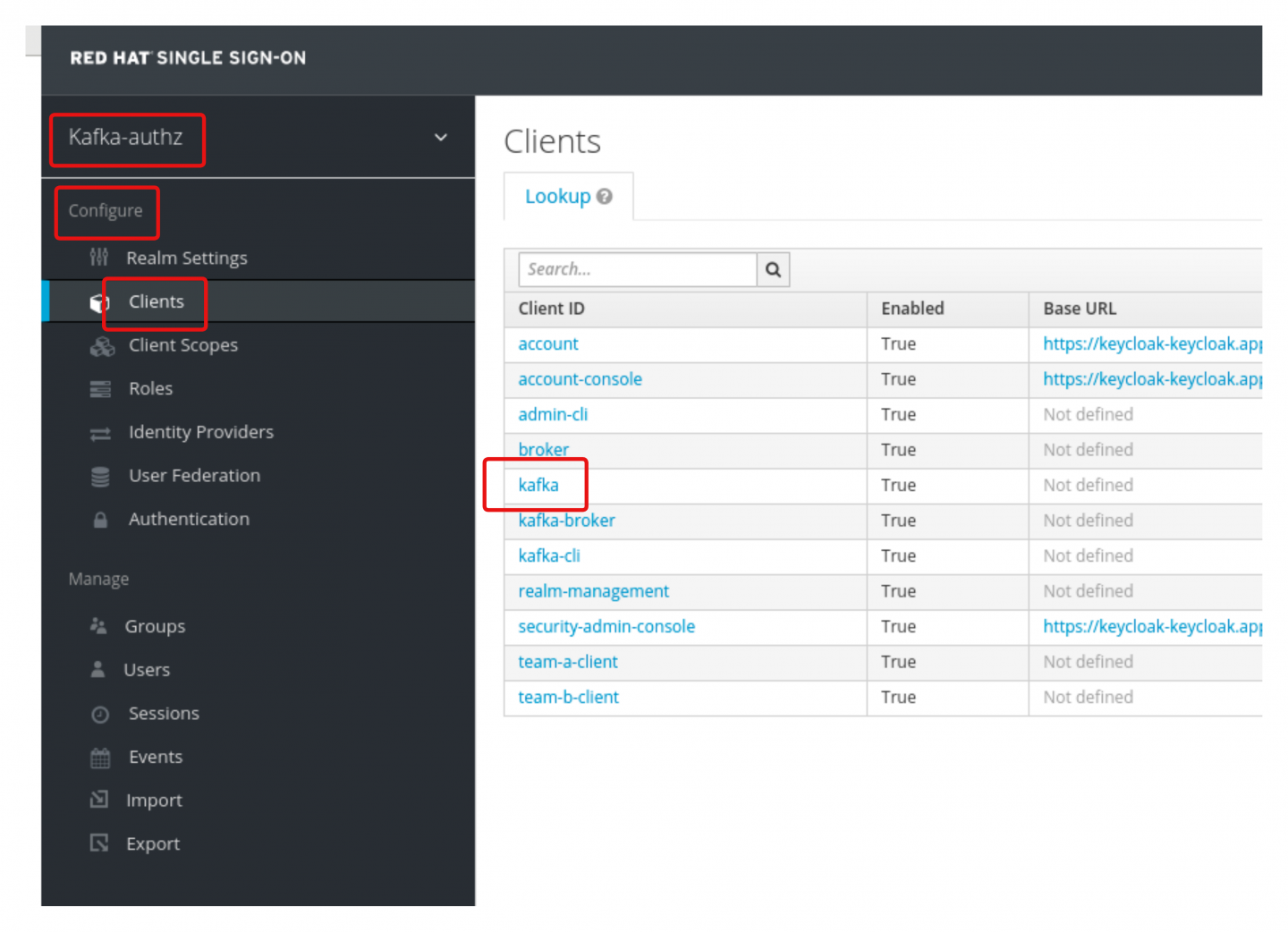

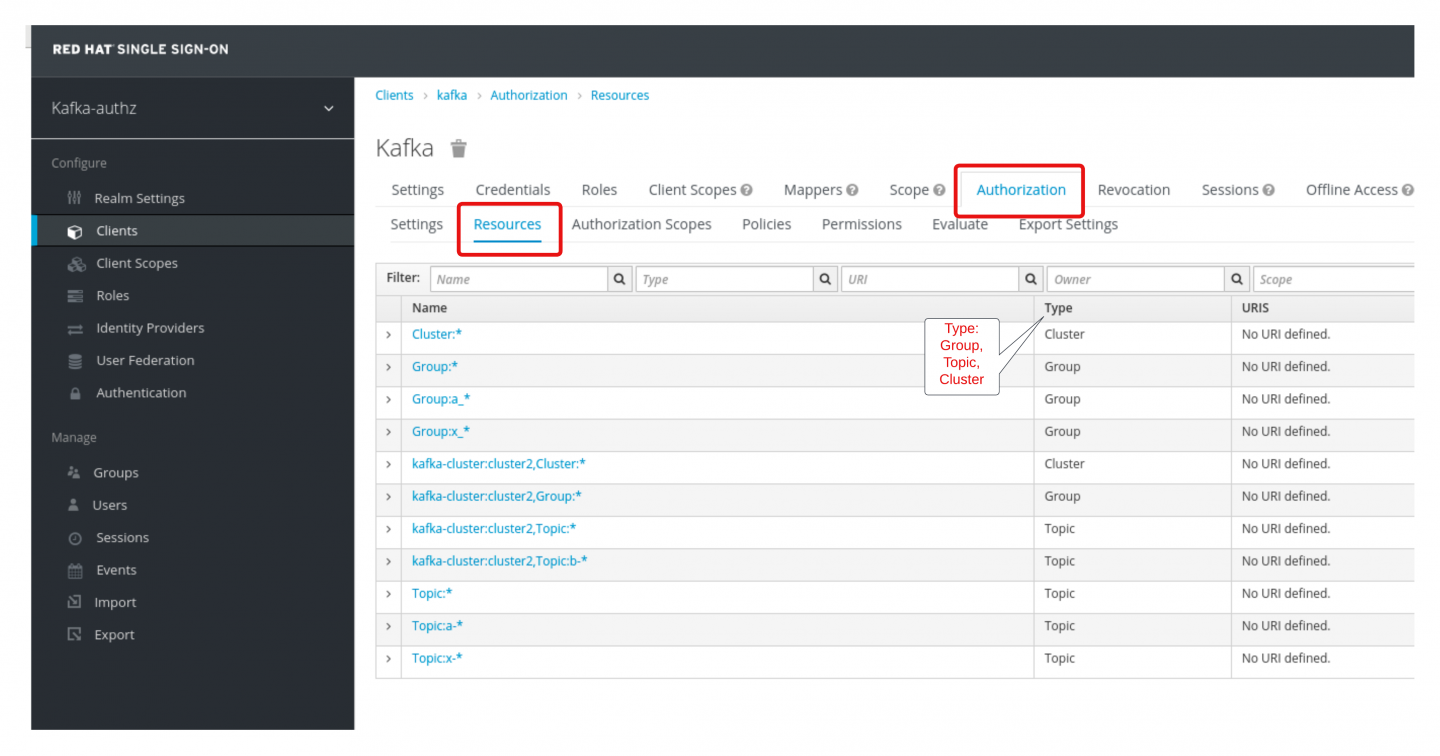

At the console, pick the kafka-authz realm. To consume or publish to a Kafka topic, you must represent the topic as a resource in Red Hat's SSO. Resources are created under Configure > Clients > kafka > Authorization > Resources (see Figures 2 and 3).

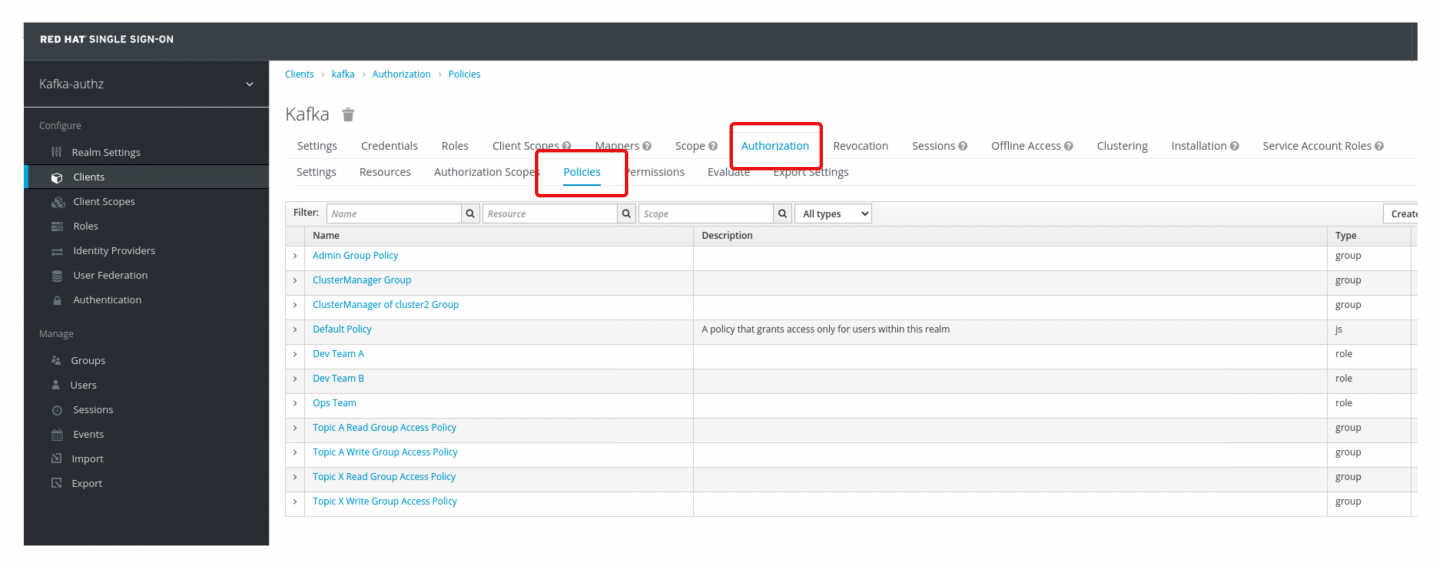

Under Configure > Clients > kafka > Authorization > Policies, you can find the policies that the permissions depend on. These policies are based on LDAP groups such as TopicAWrite, TopicARead, TopicXWrite, and TopicXRead (shown in Figure 4).

Red Hat's SSO provides the means to create policies and permissions around resources. Examples of these resources include Kafka topics, consumer groups, and Kafka clusters.

In our demonstration, topic resource names act as name filters. For example, a topic resource named Topic:a-* matches Kafka topics whose names start with the character pattern a- (including the hyphen).

The same naming-filter convention applies to consumer group resources. For instance, a group resource named Group:a_* matches Kafka consumer groups whose names start with the character pattern a_ (this time with an underscore).
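To make the naming convention concrete, the following shell sketch checks whether a Kafka topic or consumer group name falls under a resource filter such as Topic:a-* or Group:a_*. It only illustrates the prefix-matching idea described above. It is not how the authorizer used by AMQ Streams is implemented, and the function name `resource_matches` is hypothetical.

```shell
# Hypothetical helper illustrating the resource naming convention.
# resource_matches RESOURCE KIND NAME
#   RESOURCE  a resource name such as "Topic:a-*" or "Group:a_*"
#   KIND      "Topic" or "Group"
#   NAME      the Kafka topic or consumer group name to test
# Returns 0 if NAME falls under RESOURCE, 1 otherwise.
resource_matches() {
  resource=$1 kind=$2 name=$3
  # The resource must be for the same kind of Kafka object.
  case "$resource" in
    "$kind":*) ;;
    *) return 1 ;;
  esac
  # Strip the "Kind:" prefix, leaving the name filter (e.g. "a-*").
  filter=${resource#"$kind":}
  # Unquoted $filter is used as a glob pattern: a trailing "*" acts as a
  # prefix match, and a filter without "*" acts as an exact match.
  case "$name" in
    $filter) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `resource_matches 'Topic:a-*' Topic a-topic` succeeds. `resource_matches 'Topic:a-*' Topic a_topic` fails, which shows why the hyphen and the underscore matter.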

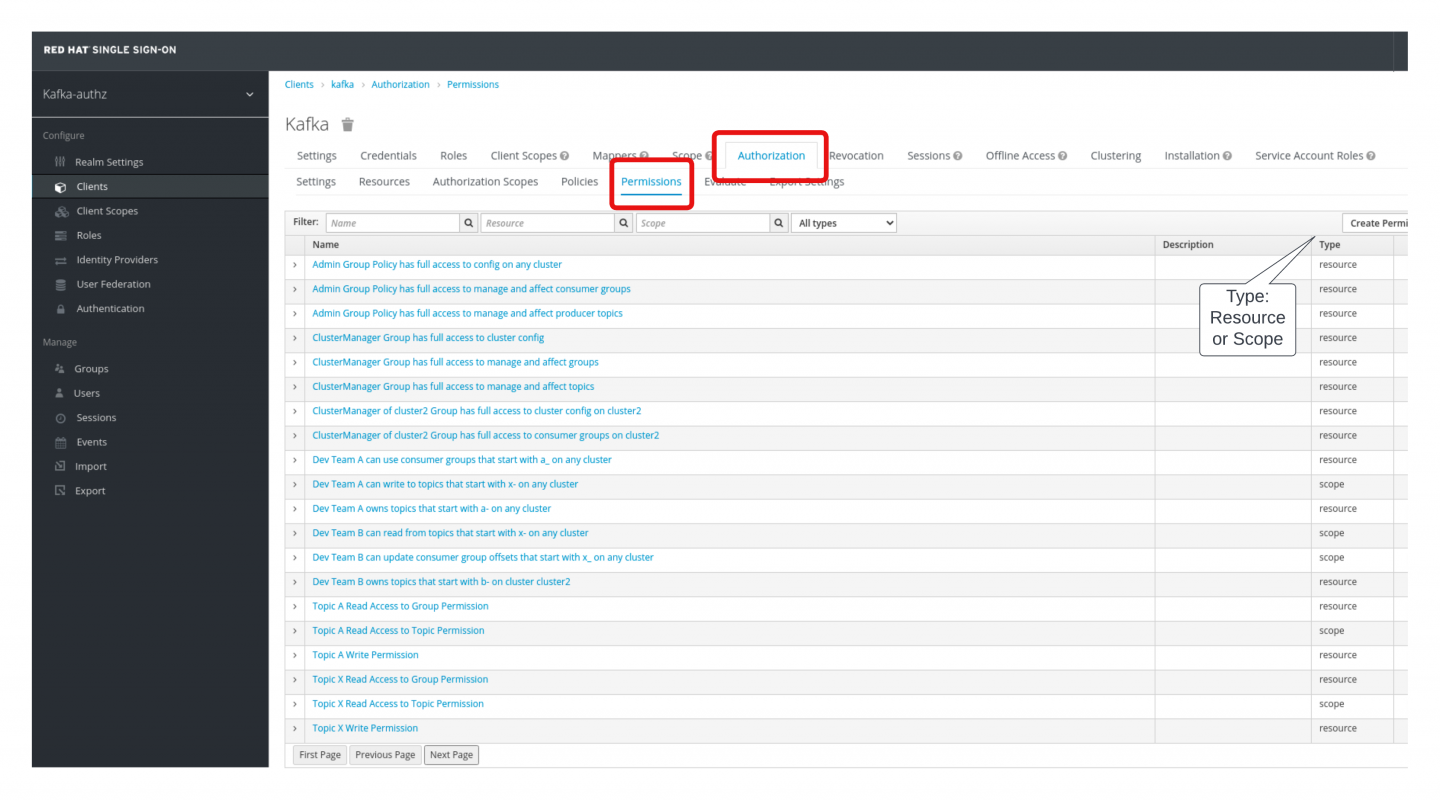

To manage user and group permissions, go to Configure > Clients > kafka > Authorization > Permissions (see Figure 5).

In this view, you can visually define rules and permissions for users and groups. Permissions can be managed either at the scope level (for example, read, write, or describe) or at the resource level (for example, a Kafka topic or consumer group). This distinction matters because it lets Red Hat's SSO grant permissions on resources at a fine granularity.
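The difference between the two kinds of permission can be sketched as follows. The model is a simplification written for illustration. It assumes that a resource-based permission covers every scope of its resource, while a scope-based permission covers only the scopes it lists. The function `permission_grants` and its argument format are hypothetical.

```shell
# Hypothetical model of the two permission types:
# permission_grants PTYPE SCOPES REQUESTED
#   PTYPE      "resource" (resource-based) or "scope" (scope-based)
#   SCOPES     comma-separated scopes listed on a scope-based permission,
#              e.g. "Read,Describe" (ignored for resource-based permissions)
#   REQUESTED  the scope an operation needs, e.g. "Write"
# Returns 0 if the permission covers the requested scope, 1 if not,
# and 2 for an unknown permission type.
permission_grants() {
  ptype=$1 scopes=$2 requested=$3
  case "$ptype" in
    resource)
      # A resource-based permission applies to all scopes of the resource.
      return 0 ;;
    scope)
      # A scope-based permission applies only to the scopes it names.
      # Surrounding commas avoid partial matches such as "Rea" vs "Read".
      case ",$scopes," in
        *",$requested,"*) return 0 ;;
        *) return 1 ;;
      esac ;;
    *)
      echo "unknown permission type: $ptype" >&2
      return 2 ;;
  esac
}
```

With this model, a scope-based permission listing `Read,Describe` lets a consumer read but not write, while a resource-based permission on the same topic would allow both.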

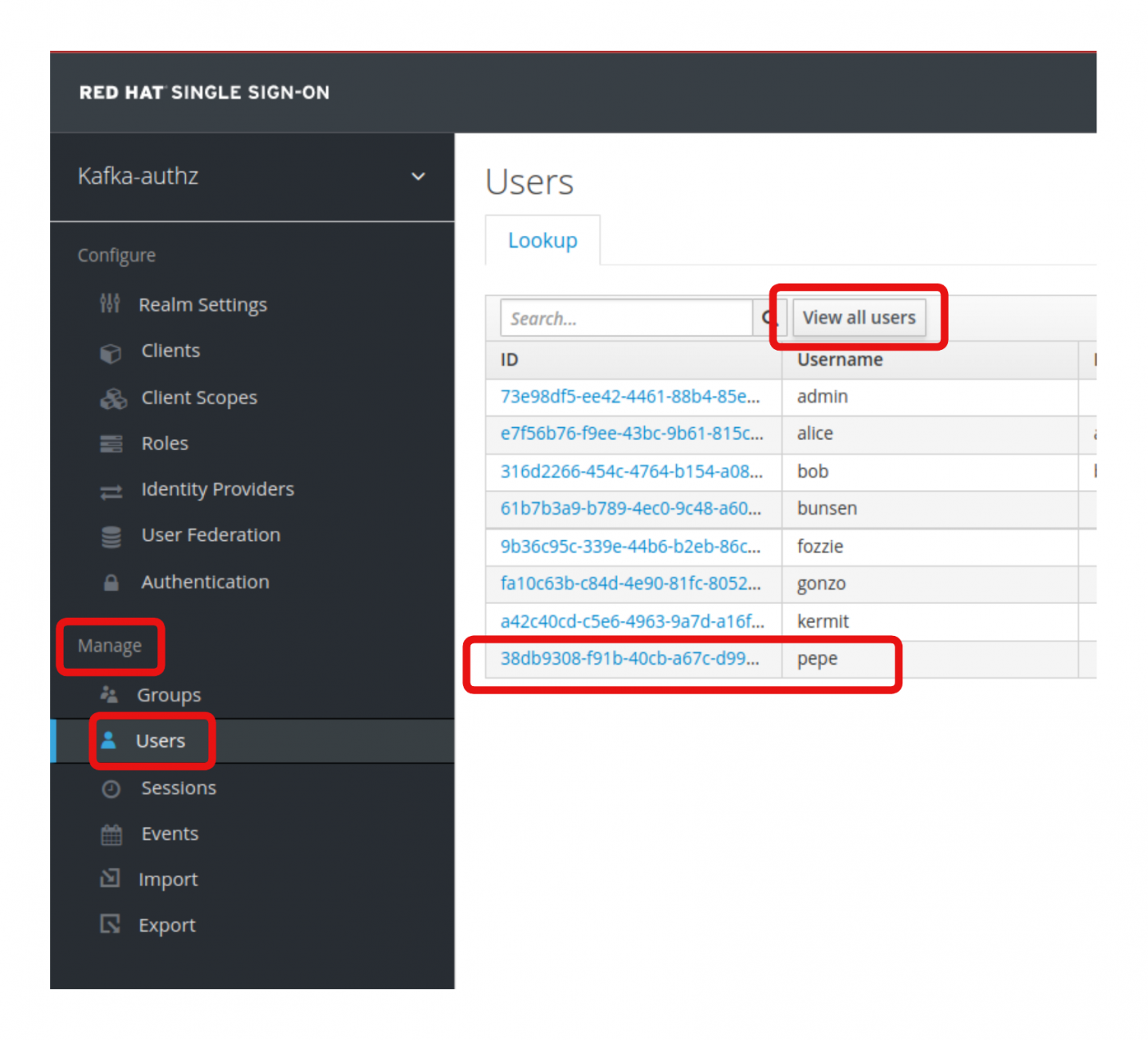

Under Manage > Users, click View all users (Figure 6).

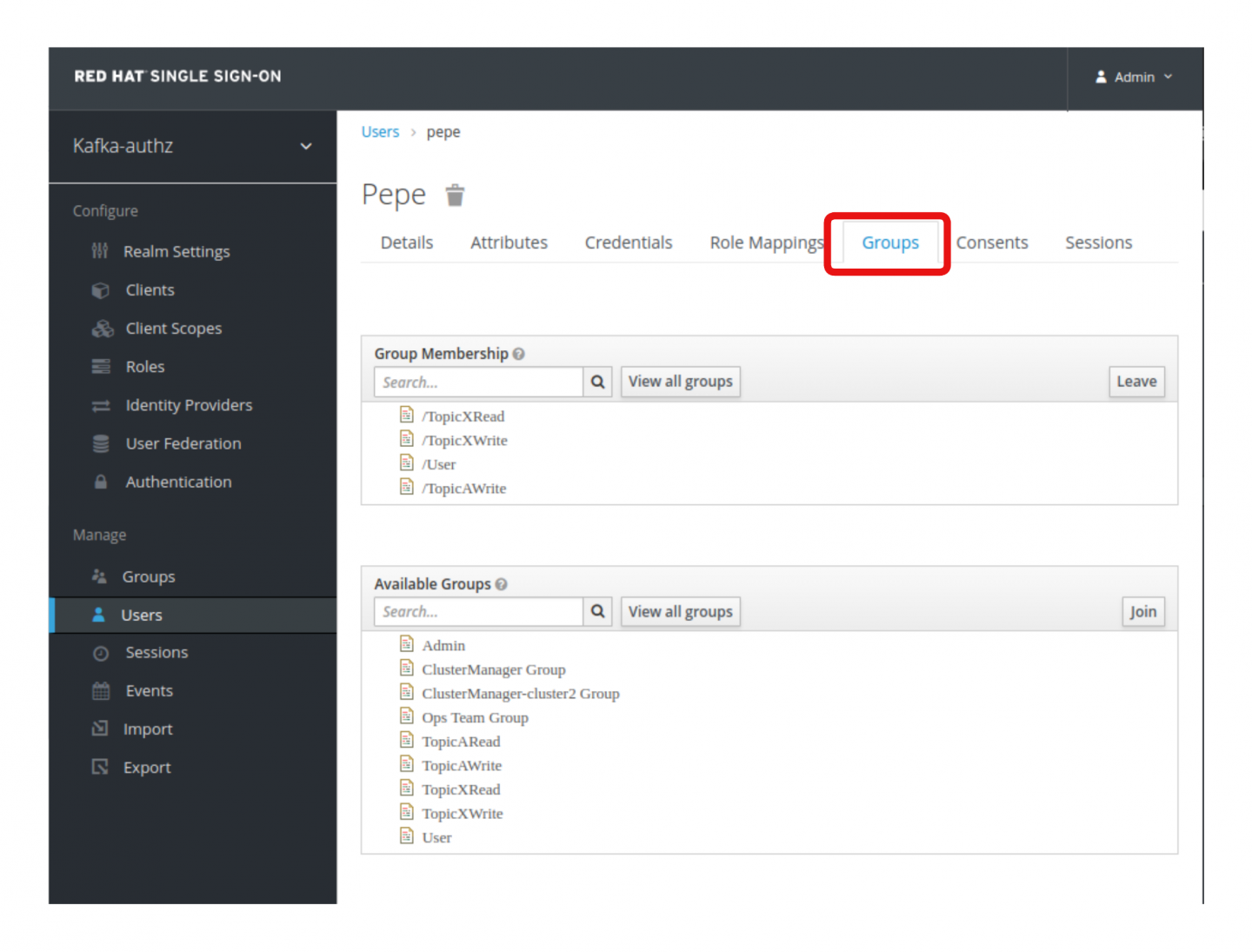

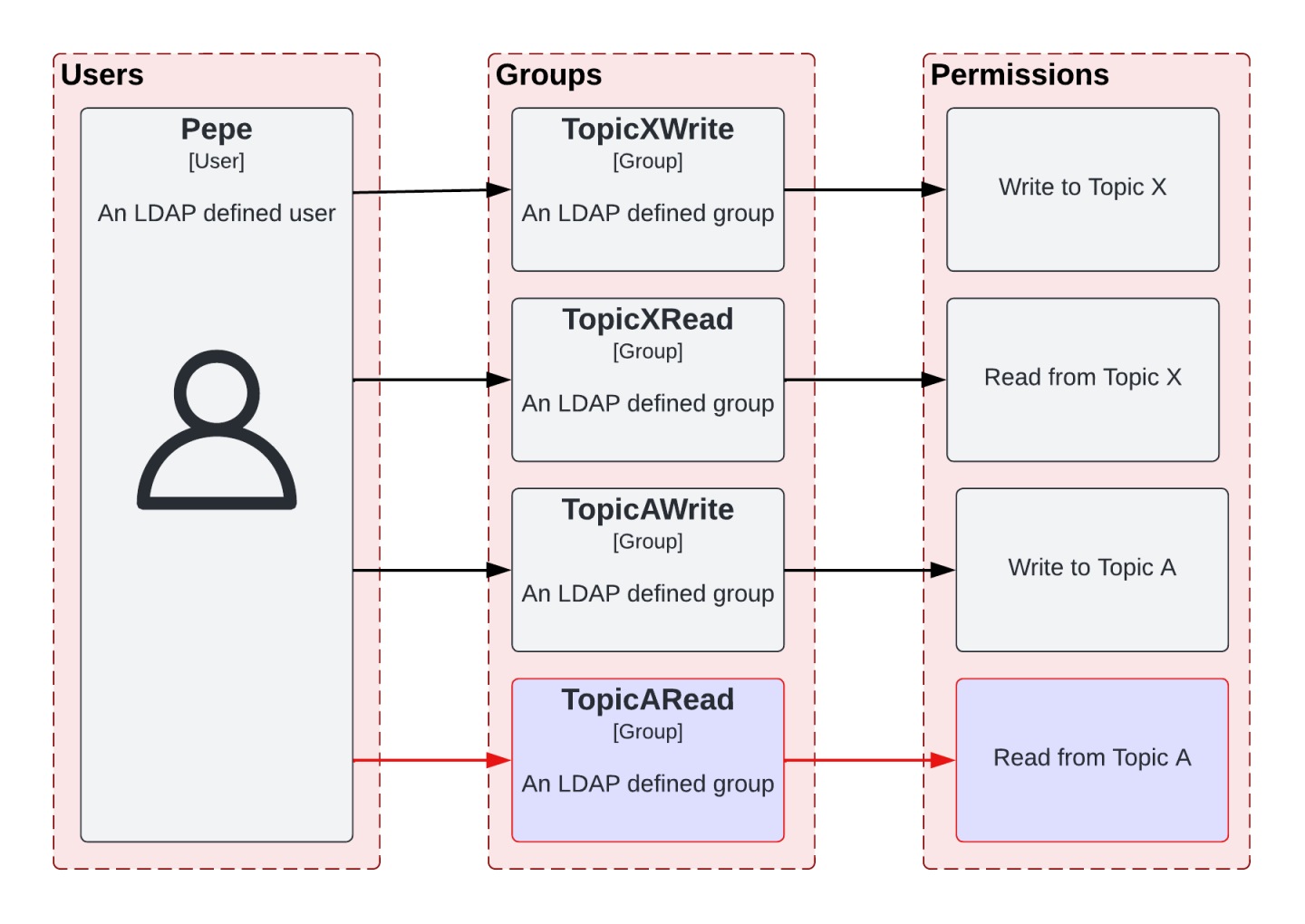

Click on the user pepe and go to the user's Groups tab. Note that this user is a member of the groups TopicAWrite, TopicXRead, and TopicXWrite, as shown in Figure 7.

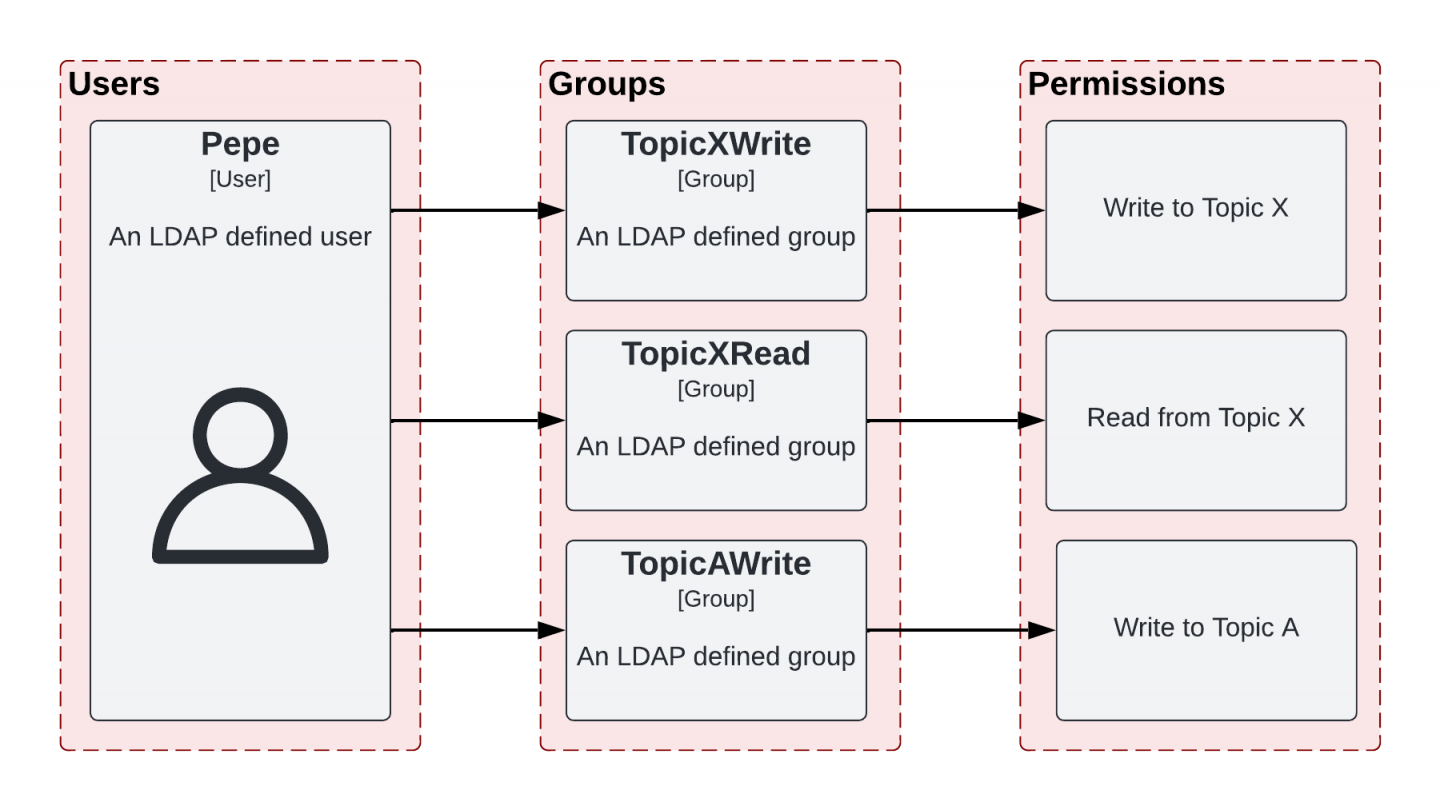

These groups are attached to various policies that contain permissions on resources (such as consumer groups, Kafka topics, and Kafka clusters). Based on these group assignments and the policies and permissions attached to them, the user pepe can perform the following actions (see Figure 8):

- Group TopicAWrite allows its users to push messages to Kafka topics that start with a-.

- Group TopicXRead allows its users to consume messages from Kafka topics that start with x-.

- Group TopicXWrite allows its users to push messages to Kafka topics that start with x-.
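A user's effective rights are the union of what each of their groups allows. The sketch below encodes the group descriptions above as a small lookup table and prints what a given set of groups can do. It also includes TopicARead, which the article uses later to grant read access on a- topics. The table format and the function names `group_grants` and `user_grants` are hypothetical. In the demo, these rules live in Red Hat's SSO policies and permissions, not in a script.

```shell
# Hypothetical lookup table mirroring the group descriptions above:
# prints "<operation> <topic-prefix>" for each right a group grants.
group_grants() {
  case "$1" in
    TopicAWrite) echo "produce a-" ;;
    TopicARead)  echo "consume a-" ;;
    TopicXWrite) echo "produce x-" ;;
    TopicXRead)  echo "consume x-" ;;
  esac
}

# user_grants GROUP...: union of the rights of all given groups,
# de-duplicated and sorted (byte order) for stable output.
user_grants() {
  for g in "$@"; do
    group_grants "$g"
  done | LC_ALL=C sort -u
}
```

For pepe's current groups, `user_grants TopicAWrite TopicXRead TopicXWrite` prints `consume x-`, `produce a-`, and `produce x-`. The entry `consume a-` appears only once TopicARead is added.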

We would like pepe to also consume messages from the Kafka topic a-topic. However, pepe currently can't do this, because the user lacks permission to read from the topic. To let the user read from the topic, you can add the user to another LDAP group. As defined in Red Hat's SSO, this group has the policy and rules that allow reading from the Kafka a-topic topic (Figure 9). We'll give pepe this authorization later in this article.

Update user and group assignments and validate policies

AMQ Streams' default cluster configuration does not come with authorization preconfigured. In our instance of AMQ Streams, authorization is configured and enabled, and it uses a token endpoint that Red Hat's SSO makes available. The explicit authorization configuration is in the /ocp/bootstrap/roles/ocp4-install-rh-amqstreams/templates/kafka.yaml.j2 template file under spec:kafka:authorization.
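To inspect that configuration on the running cluster instead of in the template, you can read the Kafka custom resource and extract spec.kafka.authorization. The sketch below makes several assumptions:

- The Kafka resource is named my-cluster, inferred from the my-cluster-kafka-bootstrap service used later in this article.
- It lives in the kafka namespace.
- Its authorization type is Strimzi's keycloak type, which has a tokenEndpointUri field.

The function name `kafka_authz_summary` is hypothetical.

```shell
# Hypothetical helper: reads a Kafka custom resource as JSON on stdin and
# prints the authorization type and the token endpoint it points at.
# Requires jq (listed in the prerequisites).
kafka_authz_summary() {
  # "//" supplies a default when a field is missing or null.
  jq -r '.spec.kafka.authorization
         | "type: \(.type // "none")",
           "tokenEndpointUri: \(.tokenEndpointUri // "n/a")"'
}
```

A possible invocation is `oc -n kafka get kafka my-cluster -o json | kafka_authz_summary`. If authorization were not configured, the first line would read `type: none`.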

For demonstration purposes, the following subsections use a producer/consumer client application named kafka-client that communicates with the Kafka cluster. We use this client application to demonstrate Kafka message production and consumption, and to show how to set the authorizations needed for communication.

Step 1: Connect to the application from terminals

We interact with our example application through two terminals:

- An OpenShift terminal for working with the OpenShift cluster at the command line

- A Kafka client terminal for working in the Kafka client application

Leave both terminals open during the whole example.

To create the OpenShift terminal, open a new terminal and log in to OpenShift from that terminal using the oc login command.

To create the Kafka client terminal, open a separate terminal and start a Bash remote shell in the pod that runs the Kafka client application, as follows. If successful, you are presented with a Bash prompt:

# IMPORTANT!! Get RH SSO/Keycloak OpenShift Route -- Make a note of this

# e.g. keycloak-keycloak.apps.openshift-domain.com

# you will need this value a few steps later

oc -n keycloak get routes keycloak -o jsonpath='{ .spec.host }'

# switch to the `kafka` namespace

oc project kafka

# check the running pods

# you should see the following running pod: kafka-client-shell

oc -n kafka get po

# terminal into the pod

oc rsh -n kafka kafka-client-shell /bin/bash

Step 2: Produce messages on a Kafka topic within the client application

To access Kafka as a consumer or producer, you must first generate a token from Red Hat's SSO. Run the following from the Kafka client terminal:

# set up your TLS environment

export PASSWORD=truststorepassword

export KAFKA_OPTS=" \

-Djavax.net.ssl.trustStore=/opt/kafka/certificates/kafka-client-truststore.p12 \

-Djavax.net.ssl.trustStorePassword=$PASSWORD \

-Djavax.net.ssl.trustStoreType=PKCS12"

# IMPORTANT!! get value of RH SSO Route from previous steps and replace here:

export RH_SSO_OCP_ROUTE=<keycloak-keycloak.apps.openshift-domain.com>

# add TOKEN ENDPOINT to env

export TOKEN_ENDPOINT=https://$RH_SSO_OCP_ROUTE/auth/realms/kafka-authz/protocol/openid-connect/token
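The token endpoint is Keycloak's standard OpenID Connect token URL for the kafka-authz realm. The following sketch shows roughly how you could request a refresh token from it with curl and jq, using the OAuth 2.0 password grant. The sample repository does not confirm any of the following, so treat them as assumptions:

- ~/bin/oauth.sh does something equivalent.
- The kafka-cli client is a public client with direct access grants enabled.
- curl can verify the route's TLS certificate. Otherwise, add `--cacert` with the appropriate CA file.

The function names are hypothetical.

```shell
# Hypothetical helpers (not part of the sample repository).

# token_endpoint HOST REALM: builds the OpenID Connect token URL for
# Red Hat's SSO versions that serve Keycloak under the /auth context path.
token_endpoint() {
  printf 'https://%s/auth/realms/%s/protocol/openid-connect/token\n' "$1" "$2"
}

# fetch_refresh_token ENDPOINT USERNAME PASSWORD: performs an OAuth 2.0
# password grant for the client "kafka-cli" (assumed public) and prints
# the refresh token from the JSON response. Requires curl and jq.
fetch_refresh_token() {
  curl -sS --fail "$1" \
    --data-urlencode 'grant_type=password' \
    --data-urlencode 'client_id=kafka-cli' \
    --data-urlencode "username=$2" \
    --data-urlencode "password=$3" |
    jq -r '.refresh_token'
}
```

Under those assumptions, the manual equivalent of the next step would be `REFRESH_TOKEN=$(fetch_refresh_token "$(token_endpoint "$RH_SSO_OCP_ROUTE" kafka-authz)" pepe pass)`.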

# generate an oauth2 jwt

REFRESH_TOKEN=$(~/bin/oauth.sh -q pepe) # password: pass
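Before using the token, you might want to confirm that it was issued by the expected realm. Keycloak-based servers normally issue refresh tokens as signed JWTs. Assuming that holds for your Red Hat's SSO version, decoding the middle (payload) segment shows claims such as the issuer and expiry. The helper `jwt_payload` below is a hypothetical sketch. It only decodes the payload and does not verify the signature.

```shell
# Hypothetical helper: prints the decoded payload (claims) of a JWT.
# It does NOT verify the signature; use it only for inspection.
jwt_payload() {
  # Take the second dot-separated segment (the payload).
  payload=$(printf '%s' "$1" | cut -d '.' -f 2)
  # JWTs use unpadded base64url: map "_" and "-" to the standard "/" and "+".
  payload=$(printf '%s' "$payload" | tr '_-' '/+')
  # Restore the "=" padding that base64 -d expects.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="${payload}="
  done
  printf '%s' "$payload" | base64 -d
}
```

For example, `jwt_payload "$REFRESH_TOKEN" | jq .` pretty-prints the claims, where you can check fields such as `iss` and `exp`.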

Before producing messages on the Kafka topic, you need to create a producer/consumer configuration in the form of a properties file:

# generate oauth user properties - pepe

cat > ~/pepe.properties << EOF

security.protocol=SASL_SSL

sasl.mechanism=OAUTHBEARER

sasl.jaas.config=org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required \

oauth.refresh.token="$REFRESH_TOKEN" \

oauth.client.id="kafka-cli" \

oauth.token.endpoint.uri="$TOKEN_ENDPOINT" ;

sasl.login.callback.handler.class=io.strimzi.kafka.oauth.client.JaasClientOauthLoginCallbackHandler

EOF

# pepe produces messages on "a-topic". Add few messages. Ctrl-C to quit

bin/kafka-console-producer.sh --broker-list my-cluster-kafka-bootstrap.kafka:9093 --topic a-topic --producer.config ~/pepe.properties

This producer/consumer configuration contains all the necessary authorization-related settings, along with the token you generated for pepe. You can now produce messages on the Kafka a-topic topic as an authorized user. If the a-topic topic doesn't exist, Kafka can create it automatically on the first write, provided that automatic topic creation is enabled on the brokers and pepe is authorized to create the topic.

Add a few messages to the topic and then press Ctrl+C to exit.

Step 3: Consume messages on a Kafka topic within an authorized client application

To see what still needs to be done, try to consume messages, as the user pepe, from the a-topic topic created in the previous step:

$ bin/kafka-console-consumer.sh --bootstrap-server my-cluster-kafka-bootstrap.kafka:9093 --topic a-topic --group a_consumer_group_001 --from-beginning --consumer.config ~/pepe.properties

You will get an authorization error as a result:

org.apache.kafka.common.errors.GroupAuthorizationException: Not authorized to access group: a_consumer_group_001

This is expected. The error appears because the user pepe isn't yet a member of the TopicARead LDAP group. You need to add pepe to that group in LDAP and resynchronize the user and group assignments in Red Hat's SSO to grant pepe the rights to consume messages from the a-topic topic.
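The error names the consumer group rather than the topic because a consumer that uses --group needs rights on both. In Kafka's authorization model, joining a group and committing offsets require Read on the group, and fetching records requires Read on the topic. The group check typically fails first. The sketch below expresses that two-part check using the demo's naming convention. For illustration, it assumes that TopicARead grants Read on both Topic:a-* and Group:a_*. The later successful consumption with a_consumer_group_001 suggests this. The function names are hypothetical.

```shell
# Hypothetical sketch of the two Read grants a group-based consumer needs.

# grant_covers KIND NAME GRANT: 0 if GRANT (e.g. "Topic:a-*") covers NAME.
grant_covers() {
  case "$3" in
    "$1":*) filter=${3#"$1":} ;;
    *) return 1 ;;
  esac
  # Unquoted $filter is used as a glob, so "a-*" is a prefix match.
  case "$2" in
    $filter) return 0 ;;
    *) return 1 ;;
  esac
}

# can_consume TOPIC GROUP GRANT...: prints "authorized", or which Read
# grant is missing (checking the group first, like the error above).
can_consume() {
  topic=$1 group=$2
  shift 2
  group_ok=1 topic_ok=1
  for grant in "$@"; do
    grant_covers Group "$group" "$grant" && group_ok=0
    grant_covers Topic "$topic" "$grant" && topic_ok=0
  done
  if [ "$group_ok" -ne 0 ]; then
    echo "not authorized: group $group"
    return 1
  fi
  if [ "$topic_ok" -ne 0 ]; then
    echo "not authorized: topic $topic"
    return 1
  fi
  echo "authorized"
}
```

In this model, `can_consume a-topic a_consumer_group_001 'Topic:a-*'` reports the missing group grant. Adding `'Group:a_*'` yields `authorized`.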

From the OpenShift terminal you created in Step 1, enter the following command to add the user pepe to the TopicARead group (see /ocp/bootstrap/roles/ocp4-install-openldap/files/add-pepe-to-read.ldif for reference):

# Get Openldap POD

OPENLDAP_POD=$(oc -n openldap get po -l deploymentconfig=openldap-server -o custom-columns=:metadata.name)

OPENLDAP_POD=`echo $OPENLDAP_POD | xargs`

# Add Pepe to TopicARead Group in OpenLDAP

oc -n openldap exec -it $OPENLDAP_POD -- /bin/bash -c 'ldapmodify -x -H ldap://openldap-server.openldap:389 -D "cn=Manager,dc=example,dc=com" -w admin -f /tmp/add-pepe-to-read.ldif'
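The contents of add-pepe-to-read.ldif are not reproduced in this article. For reference, an LDIF change record that adds a member to a group generally has the shape produced by the sketch below. Several details are assumptions:

- The example DNs, including the ou=Groups and ou=People containers.
- The use of the member attribute, which groupOfNames entries use.

Check the actual file in the repository, because the demo's OpenLDAP schema might use a different attribute, such as uniqueMember or memberUid. The function name `ldif_add_member` is hypothetical.

```shell
# Hypothetical helper: prints an LDIF change record that adds MEMBER_DN to
# the group GROUP_DN via the "member" attribute (groupOfNames schema).
# ldif_add_member GROUP_DN MEMBER_DN
ldif_add_member() {
  printf 'dn: %s\n' "$1"
  printf 'changetype: modify\n'
  printf 'add: member\n'
  printf 'member: %s\n' "$2"
}
```

A file generated this way could be passed to the same `ldapmodify -x -H ... -D ... -w ... -f <file>` command shown above. It would first need to be copied into the OpenLDAP pod, for example with `oc cp`.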

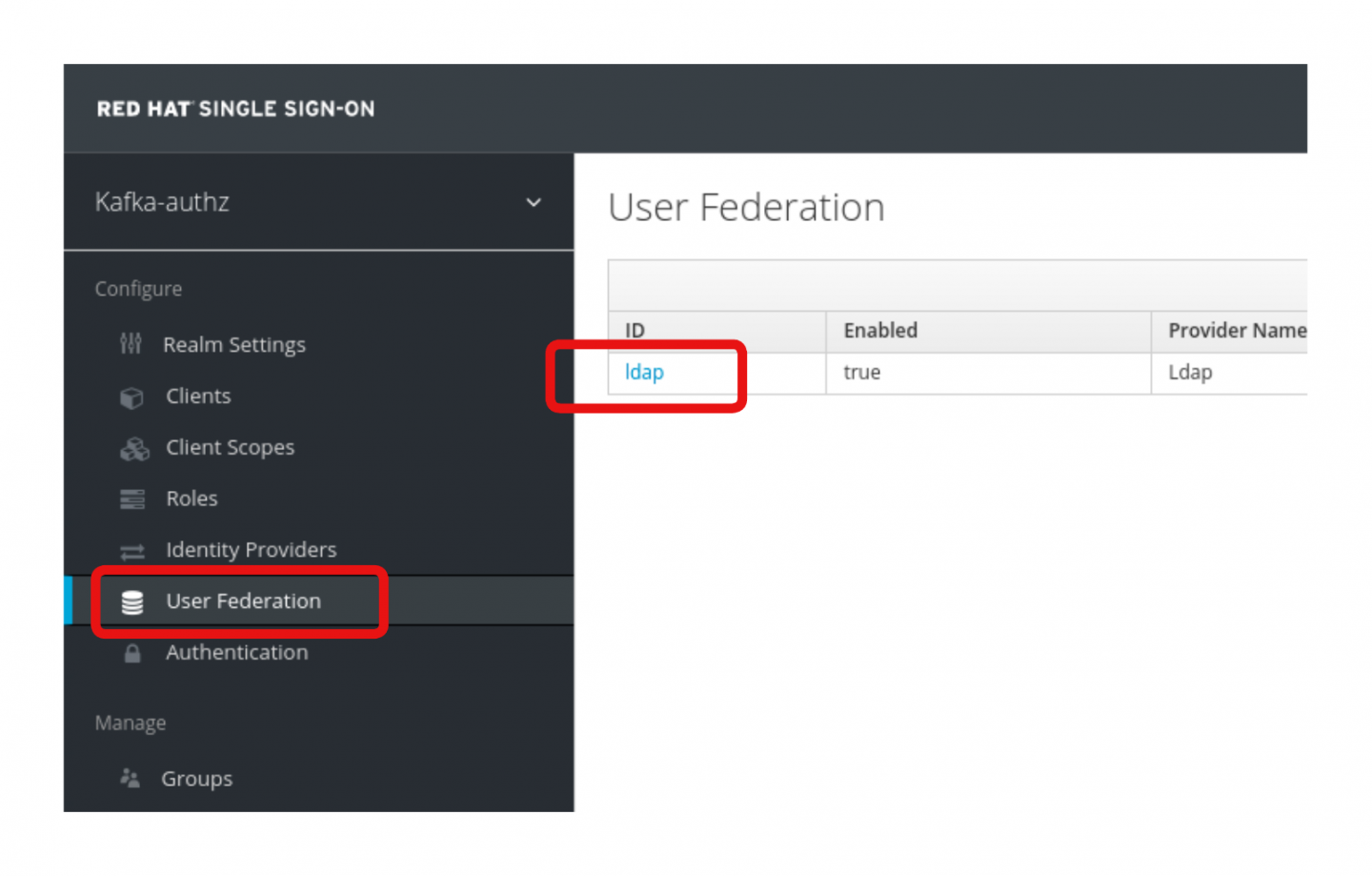

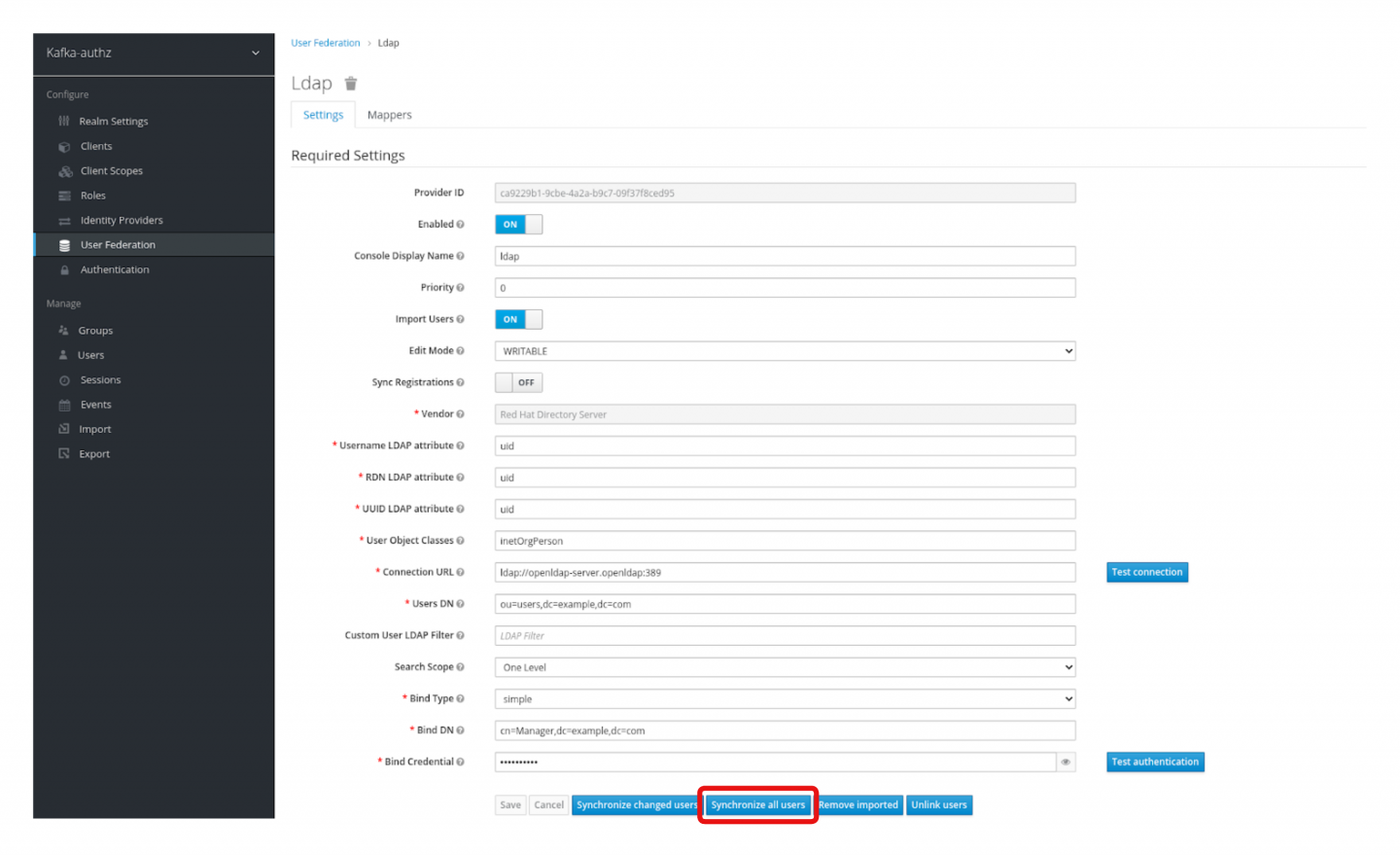

In Red Hat's SSO, under User Federation > Ldap, click Synchronize all users. Red Hat's SSO then picks up all users and their group mappings from OpenLDAP. You can validate pepe's new group assignment in Red Hat's SSO, which should now show pepe as a member of the TopicARead group (see Figures 10 and 11).
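If you prefer to script this step instead of clicking Synchronize all users, Keycloak's Admin REST API exposes a user-storage sync endpoint. The sketch below makes these assumptions:

- The server uses the /auth context path seen in the token endpoint above.
- The admin/admin credentials noted earlier work with the master realm's admin-cli client.
- You look up the LDAP provider's component ID yourself, for example from the URL of the User Federation > Ldap page in the console.

The function names are hypothetical. Check the Admin REST API documentation for your Red Hat's SSO version before relying on them.

```shell
# Hypothetical helpers (assumptions are listed in the text above).

# user_storage_sync_url HOST REALM COMPONENT_ID: Admin REST URL that
# triggers a full synchronization of a user federation provider.
user_storage_sync_url() {
  printf 'https://%s/auth/admin/realms/%s/user-storage/%s/sync?action=triggerFullSync\n' \
    "$1" "$2" "$3"
}

# trigger_ldap_full_sync HOST REALM COMPONENT_ID ADMIN_USER ADMIN_PASSWORD
# Requires curl and jq.
trigger_ldap_full_sync() {
  # Obtain an admin access token from the master realm's admin-cli client.
  admin_token=$(curl -sS --fail \
    --data-urlencode 'grant_type=password' \
    --data-urlencode 'client_id=admin-cli' \
    --data-urlencode "username=$4" \
    --data-urlencode "password=$5" \
    "https://$1/auth/realms/master/protocol/openid-connect/token" |
    jq -r '.access_token')
  if [ -z "$admin_token" ] || [ "$admin_token" = null ]; then
    echo "could not obtain an admin token" >&2
    return 1
  fi
  # Ask Red Hat's SSO to re-import all users from the LDAP provider.
  curl -sS --fail -X POST \
    -H "Authorization: Bearer $admin_token" \
    "$(user_storage_sync_url "$1" "$2" "$3")"
}
```

A call might look like `trigger_ldap_full_sync "$KEYCLOAK_ADMIN_CONSOLE" kafka-authz <component-id> admin admin`. Replace `<component-id>` with the value from your console.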

To validate pepe's membership and permissions, run the following command from the Kafka client terminal:

$ bin/kafka-console-consumer.sh --bootstrap-server my-cluster-kafka-bootstrap.kafka:9093 --topic a-topic --group a_consumer_group_001 --from-beginning --consumer.config ~/pepe.properties

The user pepe should now be authorized to consume all messages produced earlier on the Kafka a-topic topic.

Conclusion

This article demonstrated the deployment and integration of OpenLDAP, Red Hat's SSO, and Red Hat AMQ Streams on the Red Hat OpenShift Container Platform, employing Ansible for automation.

You synchronized LDAP users and groups to Red Hat's SSO, created resources, and then created roles, policies, and rules around those resources. In doing so, you managed Kafka ACLs from Red Hat's SSO.

Finally, the article demonstrated TLS OAuth 2.0 authentication and authorization with AMQ Streams, using Red Hat's SSO for Kafka broker authorization. With this setup, we illustrated authorized producer and consumer operations on Kafka topics for LDAP users, governed by Red Hat's SSO roles and policies.

Last updated: October 25, 2024