AI & Node.js

Create intelligent, efficient, and user-friendly experiences by integrating AI into JavaScript applications.

Why Node.js with AI?

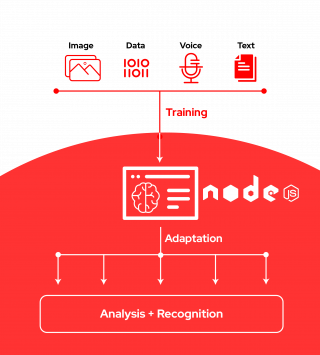

AI and large language models (LLMs) are becoming important tools for web applications. As a JavaScript/Node.js developer, it's important to understand how you fit into this growing space. While Python is often thought of as the language for AI and model development, this does not mean that all application development will shift to Python. Instead, the tools and languages that best fit each part of an application will continue to win out, and those components will be integrated to form the overall solution.

How AI and Node.js help developers

Retrieval Augmented Generation (RAG)

An LLM's knowledge is limited to the data it was trained on. Retrieval Augmented Generation (RAG) makes an LLM aware of context-specific knowledge by retrieving relevant content and supplying it to the model along with the question.

Improving Chatbot result with Retrieval Augmented Generation (RAG) and Node.js
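As a rough illustration of the RAG idea, the sketch below retrieves the most relevant snippets from a small in-memory list and places them in the prompt ahead of the question. Everything in it is an assumption made for illustration: the sample documents, the keyword-overlap scoring, and the helper names `tokenize`, `score`, `retrieve`, and `buildPrompt` are hypothetical. Production setups typically use embeddings and a vector store for retrieval, and the resulting prompt would be sent to whichever model client the application uses.

```javascript
// Minimal RAG sketch: retrieve relevant text, then add it to the prompt.
// All names and documents here are hypothetical, for illustration only.

const documents = [
  { id: 'returns', text: 'Items can be returned within 30 days of delivery.' },
  { id: 'shipping', text: 'Standard shipping takes 5 business days.' },
  { id: 'support', text: 'Support is available by email on weekdays.' },
];

// Split text into lowercase word tokens.
function tokenize(text) {
  return text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
}

// Score a document by how many distinct query words it contains.
// Real systems typically use embeddings and a vector store instead.
function score(queryTokens, doc) {
  const docTokens = new Set(tokenize(doc.text));
  let hits = 0;
  for (const token of new Set(queryTokens)) {
    if (docTokens.has(token)) hits += 1;
  }
  return hits;
}

// Return up to k documents with a non-zero score, best match first.
function retrieve(query, docs, k = 2) {
  const queryTokens = tokenize(query);
  return docs
    .map((doc) => ({ doc, score: score(queryTokens, doc) }))
    .filter((entry) => entry.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((entry) => entry.doc);
}

// Combine the retrieved snippets and the question into a single prompt.
function buildPrompt(question, contextDocs) {
  const context = contextDocs.map((doc) => `- ${doc.text}`).join('\n');
  return `Answer using only the context below.\n\nContext:\n${context}\n\nQuestion: ${question}`;
}

const question = 'How many days does shipping take?';
const prompt = buildPrompt(question, retrieve(question, documents));
console.log(prompt);
```

The retrieval step is deliberately simple so the flow stays visible: swapping `retrieve` for a vector search would leave `buildPrompt` unchanged.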

Tools and Agents

Tools and agents give LLMs additional capabilities, such as calling an external API, and can be combined to build agentic workflows.

Ollama recently announced tool support and like many popular libraries for...

Artificial intelligence (AI) and large language models (LLMs) are becoming...
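To make the tools idea more concrete, here is a minimal sketch of the application side of tool calling: the application registers functions, the model asks for one by name, and the application runs it and hands the result back. The `getWeather` tool, the registry shape, the `dispatchToolCall` helper, and the simulated model reply are all hypothetical; real libraries define their own formats for tool definitions and tool-call messages, so treat this as an outline of the pattern, not any particular API.

```javascript
// Minimal tool-calling sketch. The tool, the registry shape, and the
// simulated model reply are all hypothetical, for illustration only.

const temperatures = new Map([
  ['Boston', 12],
  ['Madrid', 24],
]);

const tools = new Map([
  [
    'getWeather',
    {
      description: 'Return the current temperature for a city.',
      parameters: { city: 'string' },
      // A real tool would call an external API here; this one uses fixed data.
      run: async ({ city }) => {
        if (!temperatures.has(city)) {
          throw new Error(`No data for ${city}`);
        }
        return { city, celsius: temperatures.get(city) };
      },
    },
  ],
]);

// Execute one tool call requested by the model and return a message
// that the application can send back to the model as the tool result.
async function dispatchToolCall(call) {
  const tool = tools.get(call.name);
  if (!tool) {
    return { role: 'tool', name: call.name, content: `Unknown tool: ${call.name}` };
  }
  try {
    const result = await tool.run(call.arguments);
    return { role: 'tool', name: call.name, content: JSON.stringify(result) };
  } catch (err) {
    // Report failures as text so the model can react to them.
    return { role: 'tool', name: call.name, content: `Error: ${err.message}` };
  }
}

// Stand-in for a model reply that asks the application to run a tool.
const simulatedModelReply = { name: 'getWeather', arguments: { city: 'Madrid' } };

dispatchToolCall(simulatedModelReply).then((message) => console.log(message));
```

An agentic workflow repeats this exchange in a loop: the tool result goes back to the model, which either requests another tool or produces a final answer.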

Featured AI and Node.js articles and blogs

Here are some resources to help you get started adding AI to your Node.js applications.

Explore large language models (LLMs) by trying out the Granite model on...

Experimenting with a Large Language Model powered Chatbot with Node.js

Use AI and Node.js to generate a JSON response that contains a summarized email

Developer Learning Exercises

Red Hat products that support AI and Node.js

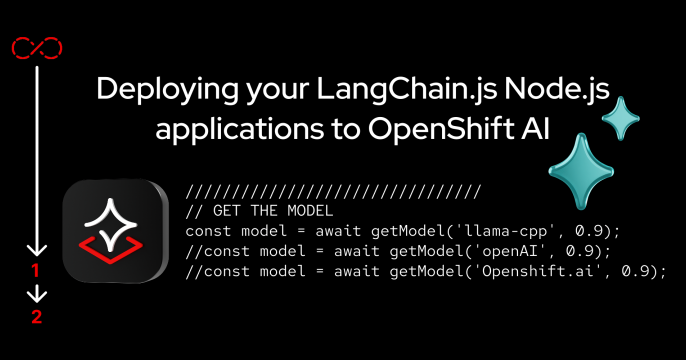

Develop, deploy, and run large language models (LLMs) in individual server...

Build, manage, and deploy containers and Kubernetes locally with desktop...

A cloud service that gives data scientists and developers a powerful AI/ML...

Red Hat build of Node.js makes it possible to run JavaScript outside of a...