Connect everywhere with Serverless Integration

Optimized scaling with a better developer experience... plus, it connects everywhere.

Explore event architecture

You split up your monolithic codebase into smaller artifacts and thought you were done! Now you’ve reached the hardest part: how do you split your data, and how do you keep your system working with it?

If this rings a bell, then you need to watch this DevNation Tech Talk, streamed on January 16, 2020. We’ll explore how an event-driven architecture can help you succeed in this distributed data world.

Learn concepts like CQRS, event sourcing, and how you can use them in a distributed architecture with REST, message brokers, and Apache Kafka.
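
As a rough illustration of the two concepts named above, here is a minimal in-memory sketch. It is not taken from the talk: the `Event`, `EventStore`, `handle_command`, and `project_balances` names are hypothetical, and a plain Python list stands in for a Kafka topic. The command side only appends events (event sourcing), and the query side rebuilds a read model by replaying them (CQRS).

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Event:
    """An immutable fact that something happened (hypothetical shape)."""
    account_id: str
    kind: str      # "deposited" or "withdrawn"
    amount: int


@dataclass
class EventStore:
    """Append-only log; a stand-in for a Kafka topic in this sketch."""
    log: List[Event] = field(default_factory=list)

    def append(self, event: Event) -> None:
        self.log.append(event)


def project_balances(store: EventStore) -> Dict[str, int]:
    """Query side: rebuild a read model by replaying the whole event log."""
    balances: Dict[str, int] = {}
    for event in store.log:
        sign = 1 if event.kind == "deposited" else -1
        balances[event.account_id] = balances.get(event.account_id, 0) + sign * event.amount
    return balances


def handle_command(store: EventStore, account_id: str, kind: str, amount: int) -> None:
    """Command side: validate, then record an event instead of mutating state."""
    if amount <= 0:
        raise ValueError("amount must be positive")
    if kind == "withdrawn" and project_balances(store).get(account_id, 0) < amount:
        raise ValueError("insufficient funds")
    store.append(Event(account_id, kind, amount))


store = EventStore()
handle_command(store, "acct-1", "deposited", 100)
handle_command(store, "acct-1", "withdrawn", 30)
balances = project_balances(store)
print(balances)
```

Because the current state is derived from the log, a new read model can be added later by replaying the same events, which is one reason this pattern is often paired with a durable log such as Kafka.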

Integration resources

Learn how to integrate ArgoCD and Red Hat Developer Hub with OpenShift GitOps.

See how to use Apache Camel to turn LLMs into reliable text-processing...

Use Red Hat Lightspeed to simplify inventory management and convert natural...

Learn how to achieve complete service mesh observability on OpenShift by...

Discover the new Camel dashboard for OpenShift to monitor a fleet of Camel...

Discover the new features of Red Hat build of Apache Camel 4.14, including...

What is serverless connectivity?

Since the surge of Software-as-a-Service (SaaS) offerings and the rise of microservices, developers find themselves doing more connecting and orchestrating work than ever before, yet managing and optimizing the deployment of this integration code takes too much time and effort. Serverless is a cloud computing model that makes the provisioning and scaling of servers transparent to developers. The event-driven, stateless nature of integration applications makes them a natural fit for the serverless model, which lets developers focus on application development with flexible deployment and optimal resource usage.

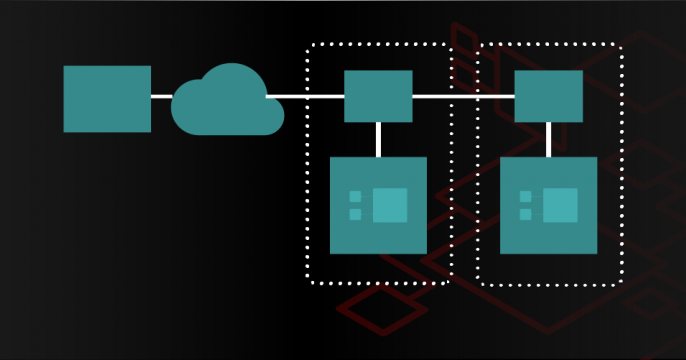

Serverless integration in OpenShift

Camel K provides a best-practice platform and framework for building serverless integrations. With the help of operator patterns and underlying serverless technologies, developers can focus on building their code. Camel K runs on top of Quarkus, a supersonic, lightweight runtime.

Red Hat Serverless with AMQ Streams provides a high-throughput yet reliable foundation for the event mesh. Events trigger the start of serverless applications.
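
To make the idea of events triggering stateless application code more concrete, the following is a small sketch under stated assumptions. It does not use the Camel K, Knative, or AMQ Streams APIs: the `on` decorator, the `dispatch` function, the `order.created` event type, and the payload fields are all hypothetical. Each handler depends only on the event it receives, which is what lets a platform start, scale, and stop instances on demand.

```python
import json
from typing import Callable, Dict, Optional

# Registry mapping an event type to the function that handles it.
HANDLERS: Dict[str, Callable[[dict], dict]] = {}


def on(event_type: str):
    """Register a handler for one event type (hypothetical helper)."""
    def register(fn: Callable[[dict], dict]) -> Callable[[dict], dict]:
        HANDLERS[event_type] = fn
        return fn
    return register


@on("order.created")
def price_order(data: dict) -> dict:
    """Stateless handler: the result depends only on the incoming event data."""
    return {
        "order_id": data["order_id"],
        "total_cents": data["quantity"] * data["unit_price_cents"],
    }


def dispatch(raw_event: str) -> Optional[dict]:
    """Decode a JSON event and invoke the matching handler, if one is registered."""
    event = json.loads(raw_event)
    handler = HANDLERS.get(event["type"])
    if handler is None:
        return None  # no handler registered: the event is ignored
    return handler(event["data"])


result = dispatch(json.dumps({
    "type": "order.created",
    "data": {"order_id": "A1", "quantity": 3, "unit_price_cents": 250},
}))
print(result)
```

In a real deployment the broker and the serverless platform would play the role of `dispatch`, delivering each event to a freshly scaled instance of the handler.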

Built on the OpenShift platform, which simplifies container management and provides the basic infrastructure, OpenShift Serverless provides on-demand scaling as well as a mechanism for hiding lower-level complexity from developers.

Cloud-native development that connects systems

Orchestrate real-time cloud events and enhance developer productivity with automatic detection of dependencies and lifecycles.

Learn serverless integration in your browser

An event streaming platform using Red Hat Streams for Apache Kafka based on...

Extend capabilities with no changes to legacy apps through data integration...

Discover how an API First Approach provides the right framework to build APIs...

An accelerated path to migrating applications from Red Hat Fuse to Red Hat...

Event-driven Sentiment Analysis using Kafka, Knative and AI/ML

Expand your API Management strategy beyond RESTful APIs into event-driven...